From Salk Institute:

“This is a real bombshell in the field of neuroscience,” says Terry Sejnowski, Salk professor and co-senior author of the paper, which was published in eLife. “We discovered the key to unlocking the design principle for how hippocampal neurons function with low energy but high computation power. Our new measurements of the brain’s memory capacity increase conservative estimates by a factor of 10 to at least a petabyte, in the same ballpark as the World Wide Web.”

…

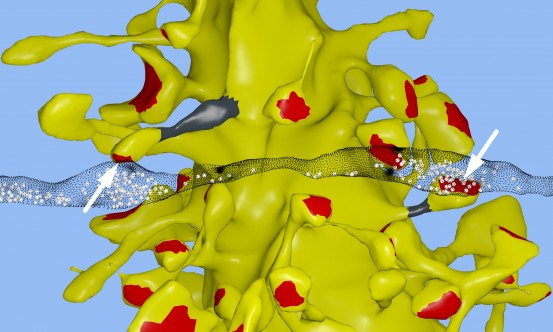

The Salk team, while building a 3D reconstruction of rat hippocampus tissue (the memory center of the brain), noticed something unusual. In some cases, a single axon from one neuron formed two synapses reaching out to a single dendrite of a second neuron, signifying that the first neuron seemed to be sending a duplicate message to the receiving neuron.

At first, the researchers didn’t think much of this duplicity, which occurs about 10 percent of the time in the hippocampus. But Tom Bartol, a Salk staff scientist, had an idea: if they could measure the difference between two very similar synapses such as these, they might glean insight into synaptic sizes, which so far had only been classified in the field as small, medium and large.

…

It was known before that the range in sizes between the smallest and largest synapses was a factor of 60 and that most are small.

But armed with the knowledge that synapses of all sizes could vary in increments as little as eight percent between sizes within a factor of 60, the team determined there could be about 26 categories of sizes of synapses, rather than just a few.

“Our data suggests there are 10 times more discrete sizes of synapses than previously thought,” says Bartol. In computer terms, 26 sizes of synapses correspond to about 4.7 “bits” of information. Previously, it was thought that the brain was capable of just one to two bits for short and long memory storage in the hippocampus.
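The step from 26 sizes to 4.7 bits is just a base-2 logarithm, and it can be checked in a few lines. The sketch below also looks at how 26 relates to the eight percent figure and the factor of 60. The excerpt does not spell out that calculation, so the assumption of equal multiplicative steps across the range is ours, and the helper names are hypothetical rather than anything from the paper.

```python
import math


def bits_for_states(n_states: int) -> float:
    """Bits needed to label n_states equally likely, distinguishable states."""
    return math.log2(n_states)


def geometric_levels(size_ratio: float, step: float) -> float:
    """Number of multiplicative steps of (1 + step) needed to span size_ratio.

    Assumption (ours): sizes are evenly spaced on a logarithmic scale.
    """
    return math.log(size_ratio) / math.log(1.0 + step)


def implied_step(size_ratio: float, n_levels: int) -> float:
    """Fractional spacing between levels if n_levels equal steps span size_ratio."""
    return size_ratio ** (1.0 / n_levels) - 1.0


if __name__ == "__main__":
    print(f"bits for 26 sizes: {bits_for_states(26):.2f}")
    print(f"8% steps across a factor of 60: {geometric_levels(60, 0.08):.1f}")
    print(f"spacing implied by 26 levels: {implied_step(60, 26):.3f}")
```

Under this simple assumption, steps of exactly eight percent would give roughly twice as many levels as 26. Put the other way, 26 levels across a factor of 60 correspond to a spacing of about 17 percent. The gap presumably reflects whatever criterion the researchers used for telling two sizes apart, which the excerpt does not describe.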

“This is roughly an order of magnitude of precision more than anyone has ever imagined,” says Sejnowski.

What makes this precision puzzling is that hippocampal synapses are notoriously unreliable. When a signal travels from one neuron to another, it typically activates that second neuron only 10 to 20 percent of the time.

“We had often wondered how the remarkable precision of the brain can come out of such unreliable synapses,” says Bartol. One answer, it seems, is in the constant adjustment of synapses, averaging out their success and failure rates over time. The team used their new data and a statistical model to find out how many signals it would take a pair of synapses to get to that eight percent difference.

The researchers calculated that for the smallest synapses, about 1,500 events cause a change in their size/ability (20 minutes) and for the largest synapses, only a couple hundred signaling events (1 to 2 minutes) cause a change.

“This means that every 2 or 20 minutes, your synapses are going up or down to the next size. The synapses are adjusting themselves according to the signals they receive,” says Bartol.
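The averaging argument can be illustrated with a toy calculation. The sketch below assumes each signaling event is an independent success-or-failure trial with a fixed success probability, and asks how many events must be averaged before the estimated success rate is good to about eight percent. This is a textbook binomial model chosen for illustration, not the team's statistical model. The function name is hypothetical, and the 0.5 probability used for a large synapse is our assumption, not a figure from the article.

```python
import math


def events_for_precision(release_prob: float, target_cv: float = 0.08) -> float:
    """Events to average so the estimated success rate reaches the target CV.

    Assumes independent Bernoulli trials with success probability p.
    The mean of n trials has CV = sqrt((1 - p) / (n * p)); solving for n
    gives n = (1 - p) / (p * CV**2).
    """
    if not 0.0 < release_prob <= 1.0:
        raise ValueError("release_prob must be in (0, 1]")
    return (1.0 - release_prob) / (release_prob * target_cv ** 2)


if __name__ == "__main__":
    # 0.1 and 0.2 come from the article's "10 to 20 percent";
    # 0.5 is a hypothetical value for a large, more reliable synapse.
    for p in (0.1, 0.2, 0.5):
        n = events_for_precision(p)
        print(f"p = {p:.1f}: about {math.ceil(n)} events")
```

With a 10 percent success rate this toy model asks for roughly 1,400 events, close to the 1,500 quoted for the smallest synapses. A more reliable synapse needs far fewer, in the range of the "couple hundred" quoted for the largest ones. The agreement is suggestive only, since the model here is a simplification.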

…

The findings also offer a valuable explanation for the brain’s surprising efficiency. The waking adult brain generates only about 20 watts of continuous power, as much as a very dim light bulb. More.

The hope is to build computers that can think. The problem with that view lies in an equivocation in one sentence of the press release: “Our memories and thoughts are the result of patterns of electrical and chemical activity in the brain.”

No. Those patterns are the observable evidence that these ideas are passing through. In the same way, the rows of characters on a screen are evidence that the press release itself is passing through; in this case, the patterns were intended to be read for their meaning outside the brains in which they originated.

One wonders whether life consists mostly of patterns of information moving through time, perhaps more powerfully than we ever expected.

See also: Neuroscience tried wholly embracing naturalism, but then the brain got away

and

(from Salk Institute) Grafted plants can share epigenetic traits Researcher: Our study showed genetic information is actually flowing from one plant to the other. That’s the surprise to me.


Here’s the abstract:

Information in a computer is quantified by the number of bits that can be stored and recovered. An important question about the brain is how much information can be stored at a synapse through synaptic plasticity, which depends on the history of probabilistic synaptic activity. The strong correlation between size and efficacy of a synapse allowed us to estimate the variability of synaptic plasticity. In an EM reconstruction of hippocampal neuropil we found single axons making two or more synaptic contacts onto the same dendrites, having shared histories of presynaptic and postsynaptic activity. The spine heads and neck diameters, but not neck lengths, of these pairs were nearly identical in size. We found that there is a minimum of 26 distinguishable synaptic strengths, corresponding to storing 4.7 bits of information at each synapse. Because of stochastic variability of synaptic activation the observed precision requires averaging activity over several minutes. (Public access)

– Nanoconnectomic upper bound on the variability of synaptic plasticity, Bartol et al., November 30, 2015. Cite as eLife 2015;4:e10778