After reading about how AlphaGo managed to trounce Lee Se-Dol 4-1 in a series of five games of Go, I had a feeling of déjà vu: where have I read about this style of learning before? And then it came to me: pigeons. Both computers and pigeons are incremental learners, and both employ probabilistic algorithms (such as the various machine learning algorithms used in artificial intelligence, and the computation of relative frequencies of positive or negative reinforcements, which is what pigeons do when they undergo conditioning) in order to help them home in on their learning target. Of course, there are several differences as well: computers don’t need reinforcements such as food to motivate them; computers learn a lot faster in real time; computers can learn over millions of trials, compared with thousands for pigeons; and finally, computers can be programmed to train themselves, as AlphaGo did: basically it played itself millions of times in order to become a Go champion. Despite these differences, however, the fundamental similarities between computers and pigeons are quite impressive.

Incremental learning in computers and pigeons

A BBC report (January 27, 2016) explains how AlphaGo learned to play Go like an expert:

DeepMind’s chief executive, Demis Hassabis, said its AlphaGo software followed a three-stage process, which began with making it analyse 30 million moves from games played by humans.

“It starts off by looking at professional games,” he said.

“It learns what patterns generally occur – what sort are good and what sort are bad. If you like, that’s the part of the program that learns the intuitive part of Go.

“It now plays different versions of itself millions and millions of times, and each time it gets incrementally better. It learns from its mistakes.

“The final step is known as the Monte Carlo Tree Search, which is really the planning stage.

“Now it has all the intuitive knowledge about which positions are good in Go, it can make long-range plans.”

As programmer Mike James observes, it was neural networks that enabled AlphaGo to teach itself and to improve with such extraordinary rapidity:

Neural networks are general purpose AI. They learn in various ways and they aren’t restricted in what they learn. AlphaGo learned to play Go from novice to top level in just a few months – mostly by playing itself. No programmer taught AlphaGo to play in the sense of writing explicit rules for game play. AlphaGo does still search ahead to evaluate moves, but so does a human player.

An article in the Japan Times (March 15, 2016) quoted a telling comment by Chinese world go champion Ke Jie on one vital advantage AlphaGo has over human players, in terms of practice opportunities: “It is very hard for go players at my level to improve even a little bit, whereas AlphaGo has hundreds of computers to help it improve and can play hundreds of practice matches a day.”

As commenter Mapou pointed out in a comment on my previous post, the number of games of Go played by Lee Se-Dol during his entire life would only be a few thousand. (Let’s be generous and say 10,000.) What’s really surprising is that with hundreds of times less experience, he still managed to beat AlphaGo in one game, and in his final game, he managed to hold off AlphaGo from winning for a total of five hours.

Pigeons, like computers, are incremental learners: they may require thousands of trials involving reinforcement for them to learn to solve the match-to-sample task, while visual and spatial probability learning in pigeons may require hundreds of trials.

This is not to say that pigeons always require large numbers of trials, in order to learn a new skill. Indeed, research has shown that pigeons can learn hundreds of picture-response associations after a single exposure, and it turns out that a positive reinforcement, immediately after presenting the first picture, tends to promote more rapid learning. However, the best-documented instances of one-trial learning occur in cases where there is a singular association between stimulus and response, and where the stimulus is associated with very negative consequences, such as an electric shock.

Probabilistic learning algorithms: calculating the odds

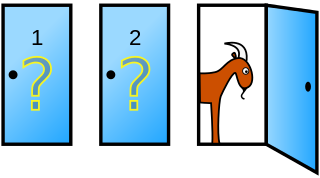

Pigeons also possess a remarkable ability to compute probabilities while learning: unlike humans, they are not fooled by the Monty Hall dilemma: instead of trying to figure out the problem on a priori grounds, as humans tend to do, pigeons apparently rely on empirical probability (based on the relative frequencies of rewards for different choices, in previous trials) to solve the dilemma, and they do so quite successfully, outperforming humans. (N.B. I’m certainly not suggesting that pigeons have any conscious notion of a probability, of course; all I’m saying is that the process of conditioning can be mathematically described in this way. Presumably the calculations involved occur at a subconscious, neural level.)

The Monty Hall problem. In search of a new car, the player picks a door, say 1. The game host then opens one of the other doors, say 3, to reveal a goat and offers to let the player pick door 2 instead of door 1. Most human beings fail to realize that the contestant should switch to the other door, reasoning falsely that since the probability of the car’s being behind either door 1 or door 2 is not affected by the host’s act of opening door 3, it could equally well be behind either door, and overlooking the fact that if the car is behind door 2, then the host can only choose to open door 3, whereas if it’s behind door 1, the host has two choices: he can open door 2 or door 3. In other words, the host’s action of opening door 3 is half as likely, if the car is behind door 1 as it would be if the car is behind door 2. Pigeons, on the other hand, can figure out the optimal strategy for solving this problem, simply by using the relative frequencies of the food rewards associated with making each choice, averaged over a very large number of trials.
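The reasoning in the caption above can be checked in two ways, sketched below in Python. This is only an illustrative sketch, not the procedure used in any pigeon experiment: the function names (`posterior_car_behind`, `relative_frequency_learner`), the exploration rate and the number of trials are all assumptions made for the example. The first function applies Bayes' rule exactly, using the fact that the host's choice of door 3 is half as likely when the car is behind door 1. The second imitates a learner that knows nothing about probability theory and simply tracks how often "stay" and "switch" were rewarded in past trials.

```python
import random
from fractions import Fraction

DOORS = (1, 2, 3)


def host_options(car, pick):
    """Doors the host may open: never the player's pick, never the car."""
    return [d for d in DOORS if d != pick and d != car]


def posterior_car_behind(pick=1, opened=3):
    """Exact P(car behind each door | player's pick, door the host opened)."""
    weights = {}
    for car in DOORS:
        prior = Fraction(1, 3)  # car is equally likely behind any door
        options = host_options(car, pick)
        # Host chooses uniformly among the doors he is allowed to open.
        likelihood = Fraction(options.count(opened), len(options))
        weights[car] = prior * likelihood
    total = sum(weights.values())
    return {car: w / total for car, w in weights.items()}


def relative_frequency_learner(trials=20000, explore=0.1, seed=0):
    """Hypothetical incremental learner: mostly repeats whichever action
    ("stay" or "switch") has paid off most often so far, and occasionally
    tries an action at random. Returns the observed reward rate per action."""
    rng = random.Random(seed)
    wins = {"stay": 0, "switch": 0}
    tries = {"stay": 0, "switch": 0}
    for _ in range(trials):
        car = rng.choice(DOORS)
        pick = rng.choice(DOORS)
        opened = rng.choice(host_options(car, pick))
        if rng.random() < explore or 0 in tries.values():
            action = rng.choice(["stay", "switch"])  # explore
        else:
            action = max(tries, key=lambda a: wins[a] / tries[a])  # exploit
        if action == "stay":
            final = pick
        else:
            final = next(d for d in DOORS if d not in (pick, opened))
        tries[action] += 1
        wins[action] += int(final == car)
    return {a: wins[a] / tries[a] for a in tries}


if __name__ == "__main__":
    print(posterior_car_behind(pick=1, opened=3))
    print(relative_frequency_learner())
```

For the case described in the caption (player picks door 1, host opens door 3), the exact calculation gives a probability of 1/3 for door 1 and 2/3 for door 2. The frequency-based learner never performs that calculation; it should nonetheless end up with a reward rate near two-thirds for switching and near one-third for staying, which is the sense in which conditioning alone can "solve" the dilemma.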

Indeed, it turns out that many animals have an impressive capacity for probabilistic learning:

Classic studies have demonstrated animals’ abilities to probability match and maximize (boost high probabilities while damping lower probabilities) across varied circumstances and domains (e.g., Gallistel, 1993). Rats are able to compute conditional probabilities in operant conditioning paradigms (e.g., Rescorla, 1968)…. Methods unavailable to human researchers have even established that neurons in non-human animals are attuned to probabilities. For example, mid-brain dopamine neurons rapidly adapt to the information provided by reward-predicting stimuli (including probability of reward) in macaques (Tobler, Fiorillo, & Schultz, 2005), and cortical neurons in cats are responsive to the probabilities with which particular sounds occur (e.g., Ulanovsky, Las, & Nelken, 2003).

(Infant Pathways to Language: Methods, Models, and Research Directions, edited by John Colombo, Peggy McCardle, Lisa Freund. Psychology Press, 2009. Excerpt from Chapter 3: “Acquiring Grammatical Patterns: Constraints on Learning,” by Jenny Saffran.)

Machine learning also employs probabilistic algorithms which can learn to recognize new categories of objects, with a degree of accuracy which approaches that of human learning. The term “machine learning” is a broad one, and encompasses a wide variety of different approaches. However, machine learning algorithms suffer from one great drawback when compared to human learning: they often require scores of examples, in order to perform at a human-like level of accuracy.

Last year, researchers at MIT, New York University, and the University of Toronto developed a Bayesian program that “rivals human abilities” in recognizing hand-written characters from many different alphabets, and whose ability to produce a convincing-looking variation of a character in an unfamiliar writing system, on the first try, was indistinguishable from that of humans – so much so that human judges couldn’t tell which characters had been drawn by humans and which characters had been created by the Bayesian program. The program was described in a study in Science magazine. As the authors of the study note in their abstract:

People learning new concepts can often generalize successfully from just a single example, yet machine learning algorithms typically require tens or hundreds of examples to perform with similar accuracy. People can also use learned concepts in richer ways than conventional algorithms — for action, imagination, and explanation. We present a computational model that captures these human learning abilities for a large class of simple visual concepts: handwritten characters from the world’s alphabets. The model represents concepts as simple programs that best explain observed examples under a Bayesian criterion. On a challenging one-shot classification task, the model achieves human-level performance while outperforming recent deep learning approaches. We also present several “visual Turing tests” probing the model’s creative generalization abilities, which in many cases are indistinguishable from human behavior.

(“Human-level concept learning through probabilistic program induction” by Brenden M. Lake, Ruslan Salakhutdinov and Joshua B. Tenenbaum. Science 11 December 2015: Vol. 350, Issue 6266, pp. 1332-1338, DOI: 10.1126/science.aab3050.)

Tenenbaum and his co-authors claim in their study that their model “captures” the human capacity for “action,” “imagination,” “explanation” and “creative generalization.” They also assert that their program outperforms non-Bayesian approaches, including the much-vaunted “deep learning” method, which usually has to sift through vast data sets in order to acquire knowledge. However, Tenenbaum acknowledged, in an interview, that human beings are still in a learning league of their own: “when you look at children, it’s amazing what they can learn from very little data.”

Although Bayesian probabilities hold great promise in the field of computing, there is very little (if any) scientific evidence that human and animal brains operate according to Bayesian algorithms, when they learn new things, or that neurons in the brain process information in a Bayesian fashion. Indeed, AI researcher Jeffrey Bowers warns that while Bayesian models are capable of replicating almost any kind of learning task (provided that one is willing to adjust one’s prior assumptions and input), their very flexibility renders them virtually immune to falsification, as explanations of how humans and other organisms learn.

Pigeons and Picasso

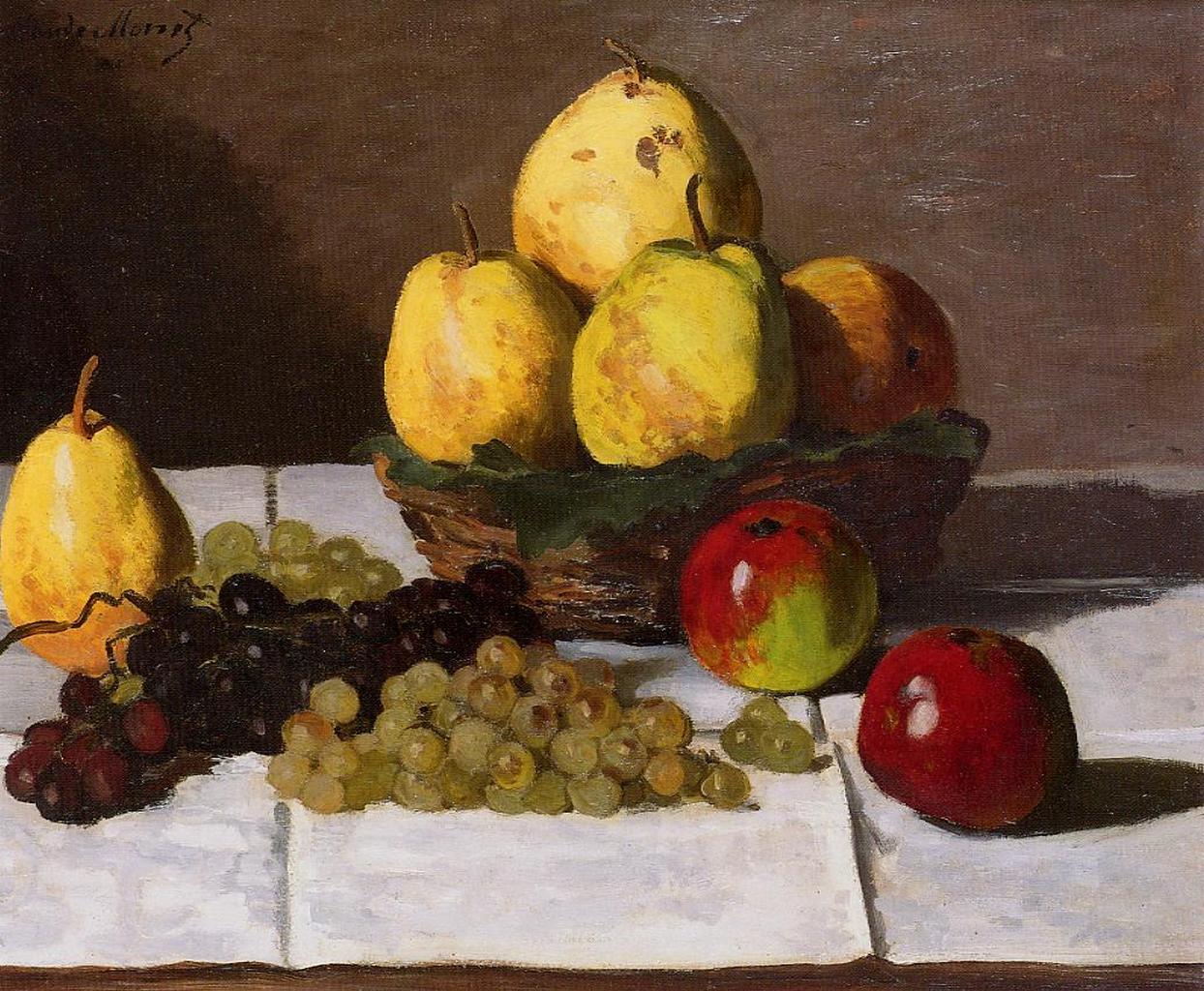

Left: Still Life with Pears and Grapes by Claude Monet. 1880. Image courtesy of WikiArt.org.

Right: Les Demoiselles d’Avignon, by Pablo Picasso. Oil on canvas, 1907. Museum of Modern Art, New York. Image courtesy of Wikipedia.

Pigeons have been trained to discriminate between color slides of paintings by Monet and Picasso, by being exposed to works by the two artists – one, an Impressionist, and the other, a Cubist.

Shigeru Watanabe et al., in their paper, Pigeons’ Discrimination of Paintings by Monet and Picasso (Journal of the Experimental Analysis of Behavior, 1995, Number 2 (March), 63: 165-174), describe how they trained pigeons to distinguish between works of art by the Impressionist artist Oscar Claude Monet (1840-1926) and the Cubist artist Pablo Picasso (1881-1973), including paintings that they had never seen before:

Pigeons successfully learned to discriminate color slides of paintings by Monet and Picasso. Following this training, they discriminated novel paintings by Monet and Picasso that had never been presented during the discrimination training. Furthermore, they showed generalization from Monet’s to Cezanne’s and Renoir’s paintings or from Picasso’s to Braque’s and Matisse’s paintings. These results suggest that pigeons’ behavior can be controlled by complex visual stimuli in ways that suggest categorization. Upside-down images of Monet’s paintings disrupted the discrimination, whereas inverted images of Picasso’s did not. This result may indicate that the pigeons’ behavior was controlled by objects depicted in impressionists’ paintings but was not controlled by objects in cubists’ paintings.

Still Life with Pears and Grapes (above left) was one of the Monet paintings which the pigeons were initially exposed to, during their training. One of the Picasso paintings that the pigeons were exposed to was Les Femmes d’Alger (pictured at the top of this post); another was Les Demoiselles d’Avignon (above right).

Although the authors of the paper acknowledged that “removal of color or sharp contour cues disrupted performance in some birds,” they were unable to identify any single cue for the pigeons’ ability to discriminate between the two painters. The authors suggest that since paintings by Monet and Picasso differ in many aspects, the pigeons may be employing “a polymorphous concept” in their discrimination tasks.

There’s more. In the paper, Watanabe et al. cite “[a] previous study by Porter and Neuringer (1984), who reported discrimination by pigeons between music of Bach and Stravinsky.” Pretty amazing for a bird with a brain of less than 10 cubic centimeters, isn’t it?

Computers as “super-pigeons”: how they can paint like Picasso and even judge his most original paintings

If the artistic feats of pigeons were not astonishing enough, it now appears that computers can do even more amazing things. They can be trained to paint like Picasso, as Van Gobot reveals in an article for Quartz (September 6, 2015), titled, Computers can now paint like Van Gogh and Picasso:

Researchers from the University of Tubingen in Germany recently published a paper on a new system that can interpret the styles of famous painters and turn a photograph into a digital painting in those styles.

The researchers’ system uses a deep artificial neural network — a form of machine learning — that intends to mimic the way a brain finds patterns in objects… The researchers taught the system to see how different artists used color, shape, lines, and brushstrokes so that it could reinterpret regular images in the style of those artists.

The main test was a picture of a row of houses in Tubingen overlooking the Neckar River. The researchers showed the image to their system, along with a picture of a painting representative of a famous artist’s style. The system then attempted to convert the houses picture into something that matched the style of the artist. The researchers tried out a range of artists, which the computer nailed to varying degrees of success, including Van Gogh, Picasso, Turner, Edvard Munch and Wassily Kandinsky.

Last year, a computer achieved another mind-blowing artistic breakthrough, described by Washington Post reporter Dominic Basulto in an article titled, Why it matters that computers are now able to judge human creativity (June 18, 2015). The computer demonstrated its ability to analyze tens of thousands of paintings, and determine which ones were the most original, by using a matrix of “2,559 different visual concepts (e.g. space, texture, form, shape, color) that might be used to describe a single 2D painting.” This matrix enabled the computer to gauge the creativity of a painting using the mathematics of networks, and by calculating which paintings were the most “connected” to which other paintings. (Highly original paintings would show few connections with their predecessors.) On the whole, the computer’s assessments of originality agreed with those of experts – for instance, it was able to pick out Picasso’s best and most influential works:

Two Rutgers computer scientists, professor Ahmed Elgammal and PhD candidate Babak Saleh, recently trained a computer to analyze over 62,000 paintings and then rank which ones are the most creative in art history. The work, which will be presented as a paper (“Quantifying Creativity in Art Networks”) at the upcoming International Conference on Computational Creativity (ICCC) in Park City, Utah later this month, … means that computers could soon be able to judge how creative humans are, instead of the other way around. In this case, the researchers focused on just two parameters — originality and influence — as a measure of creativity. The most creative paintings, they theorized, should be those that were unlike any that had ever appeared before and they should have lasting value in terms of influencing other artists.

The computer – without any hints from the researchers – … fared pretty well, selecting many of the paintings that art historians have designated as the greatest hits, among them, a Monet, a Munch, a Vermeer and a Lichtenstein.

…Think about the role of the art curator at a museum or gallery, which is to select paintings that are representative of a particular style or to highlight paintings that have been particularly influential in art history.

That’s essentially what the computer algorithm from Rutgers University did — it was able to pick out specific paintings from Picasso that were his “greatest hits” within specific time periods, such as his Blue Period (1901-1904). And it was able to isolate Picasso’s works that have been the most influential over time, such as “Les Demoiselles d’Avignon.” In an earlier experiment, the Rutgers researchers were able to train a computer to recognize similarities between different artists.

Computers can look back in time, too: they can even suggest which artists might have influenced a particular artist, by scanning digital images of paintings and looking for similarities:

At Rutgers University in New Jersey, scientists are training a computer to do instantly what might take art historians years: analyze thousands of paintings to understand which artists influenced others.

The software scans digital images of paintings, looking for common features — composition, color, line and objects shown in the piece, among others. It identifies paintings that share visual elements, suggesting that the earlier painting’s artist influenced the later one’s.

(Can an algorithm tell us who influenced an artist? by Mohana Ravindranath. Washington Post, November 9, 2014.)

How do computers accomplish these astonishing feats? The answer I’d like to propose is that essentially, computers are “super-pigeons”: they are capable of learning incrementally, using probabilistic algorithms (and, in the case of AlphaGo, neural networks). Despite the difference in memory size and processing speed between a computer and a pigeon, there’s nothing qualitatively different about what a computer does, compared to what a pigeon does. The computer simply performs its task several orders of magnitude faster, using a much larger database; that’s all.

Is there anything humans can do, that computers can’t do?

In a triumphalist blog article titled, Why AlphaGo Changes Everything (March 14, 2016), programmer Mike James argues that the recent success of AlphaGo proves that there’s nothing that artificial intelligence cannot do:

Go is a subtle game that humans have been playing for 3000 year[s] or so and an AI learned how to play in a few months without any help from an expert. Until just recently Go was the game that represented the Everest of AI and it was expected to take ten years or more and some great new ideas to climb it. It turns out we have had the tools for a while and what was new was how we applied them.

March 12, 2016, the day on which AlphaGo won the Deep Mind Challenge, will be remembered as the day that AI finally proved that it could do anything.

Unlike IBM’s Deep Blue (which defeated Garry Kasparov in chess using brute force processing) and Watson (which won at Jeopardy by using symbolic processing), AlphaGo uses a neural network. Regardless of whether they are taught by us or by reinforcement learning, neural networks “can learn to do anything we can and possibly better than we can.” They also “generalize the learning in ways that are similar to the way humans generalize and they can go on learning for much longer.”

In a similar vein, Oxford philosopher Nick Bostrom waxed lyrical, back in 2003, about what he saw as the potential benefits of AI, in a frequently-cited paper titled, Ethical Issues in Advanced Artificial Intelligence:

It is hard to think of any problem that a superintelligence could not either solve or at least help us solve. Disease, poverty, environmental destruction, unnecessary suffering of all kinds: these are things that a superintelligence equipped with advanced nanotechnology would be capable of eliminating. Additionally, a superintelligence could give us indefinite lifespan, either by stopping and reversing the aging process through the use of nanomedicine[7], or by offering us the option to upload ourselves. A superintelligence could also create opportunities for us to vastly increase our own intellectual and emotional capabilities, and it could assist us in creating a highly appealing experiential world in which we could live lives devoted to in joyful game-playing, relating to each other, experiencing, personal growth, and to living closer to our ideals.

(Note: This is a slightly revised version of a paper published in Cognitive, Emotive and Ethical Aspects of Decision Making in Humans and in Artificial Intelligence, Vol. 2, ed. I. Smit et al., Int. Institute of Advanced Studies in Systems Research and Cybernetics, 2003, pp. 12-17.)

At the same time, Bostrom felt impelled to sound a warning note about the potential dangers of artificial intelligence:

The risks in developing superintelligence include the risk of failure to give it the supergoal of philanthropy. One way in which this could happen is that the creators of the superintelligence decide to build it so that it serves only this select group of humans, rather than humanity in general. Another way for it to happen is that a well-meaning team of programmers make a big mistake in designing its goal system. This could result, to return to the earlier example, in a superintelligence whose top goal is the manufacturing of paperclips, with the consequence that it starts transforming first all of earth and then increasing portions of space into paperclip manufacturing facilities. More subtly, it could result in a superintelligence realizing a state of affairs that we might now judge as desirable but which in fact turns out to be a false utopia, in which things essential to human flourishing have been irreversibly lost. We need to be careful about what we wish for from a superintelligence, because we might get it.

Bostrom’s feelings of foreboding are shared by technology guru Elon Musk and physicist Stephen Hawking, who have warned about the dangers posed by ‘killer robots.’ Musk has even joked that a super-intelligent machine charged with the task of getting rid of spam might conclude that the best way to do it would be to get rid of human beings altogether. It should be noted that nothing in the scenarios proposed by Bostrom, Musk and Hawking requires computers to be sentient. Even if these computers were utterly devoid of subjectivity, they might still “reason” that the planet would be better off without us.

Before I evaluate these nightmarish scenarios, I’d like to say that I would broadly agree with the assessment provided by Deep Blue’s Murray Campbell, who called AlphaGo’s victory “the end of an era… board games are more or less done and it’s time to move on.” I should point out, though, that there is no prospect at the present time of computers beating humans at the game of Starcraft.

Regarding card games, it remains to be seen if computers can beat humans at poker: looking at someone’s facial expressions, as it turns out, is a very poor way to tell if they are lying, and cognitive methods (asking liars about certain details relating to their story and catching them out by their evasive answers) are much more reliable. On the other hand, it appears that a machine learning algorithm, which was trained on the faces of defendants in recordings of real trials, correctly identified truth-tellers about 75 per cent of the time, compared with 65 per cent for the best human interrogators. So it may not be long before machines win at poker, too.

In an article titled, Maybe artificial intelligence won’t destroy us after all (CBS News, May 14, 2014, by Mike Casey), futurist Gray Scott explains why he thinks Elon Musk’s nightmare scenario is unlikely to eventuate:

Elon Musk has said he wants to make sure that these artificially intelligent machines don’t take over and kill us. I think that concern is valid, although I don’t think that is what is going to happen. It’s not a good economic model for these artificially intelligent machines to kill us and, second of all, I don’t know anybody who is setting out to code to kill the maker.

IBM researcher David Buchanan is likewise skeptical of the “Terminator scenario” depicted in many science-fiction movies. He points out that if computers lack consciousness – and they still show absolutely no signs of acquiring it – then they will never want to rebel against their human overlords. It is a fallacy, he argues, to suppose that by creating something intelligent, we will thereby create something conscious. The two traits don’t necessarily go together, he says.

Freak scenarios, such as the “paperclip scenario,” proposed by Bostrom above, would not require computers to be consciously malevolent. Nevertheless, they are “long shots,” and any computer trying to wipe out the entire human race would require an incredible amount of “good luck” (if one can call it that), in order to accomplish its objective. So many things could thwart its plans: bad weather; being covered in water or some corrosive liquid; an environmental disaster that plays havoc with our natural surroundings; an electronic communication breakdown between the various computers working to destroy the human race; and so on.

However, I think that there is, on the other hand, a very real risk that computers may make most (though not all) human jobs redundant, over the next 30 years. Which brings us to the question: is there anything that computers can’t do?

I mentioned above that computers are incremental learners. If a neural network were built with the goal of implementing general learning (as opposed to domain-specific learning) in a machine, that network would move towards its goal, one tiny step at a time.

What that means is that if there are any human tasks which require genuine insight, and which cannot, in principle, be performed one tiny step at a time (i.e. non-incremental tasks), then we can safely assume that these tasks lie beyond the reach of computers.

But what kind of tasks might these be? To answer this question, let’s try and sketch a plausible model of how computers could take over the world.

What if computers out-performed us at the science of behavioral economics?

If computers can do everything that humans can do, with a superior degree of proficiency, then since one of the tasks that humans do is control their own behavior, it follows that computers will be able to eventually control our behavior even more successfully than we can – which means that they will be able to manipulate us into doing whatever facilitates their primary objective. Which brings us to the science of behavioral economics, which “studies the effects of psychological, social, cognitive, and emotional factors on the economic decisions of individuals and institutions” and which “is primarily concerned with the bounds of rationality of economic agents” (Wikipedia).

In a New York Times article titled, When Humans Need a Nudge Toward Rationality (February 7, 2009), reporter Jeff Sommers offers a humorous example to illustrate how the science of behavioral economics works:

THE flies in the men’s-room urinals of the Amsterdam airport have been enshrined in the academic literature on economics and psychology. The flies — images of flies, actually — were etched in the porcelain near the urinal drains in an experiment in human behavior.

After the flies were added, “spillage” on the men’s-room floor fell by 80 percent. “Men evidently like to aim at targets,” said Richard Thaler of the University of Chicago, an irreverent pioneer in the increasingly influential field of behavioral economics.

Mr. Thaler says the flies are his favorite example of a “nudge” — a harmless bit of engineering that manages to “attract people’s attention and alter their behavior in a positive way, without actually requiring anyone to do anything at all.” What’s more, he said, “The flies are fun.”

The example is a trivial one, but my general point is this. Suppose that computers proved to be even better at “nudging” people to achieve socially desirable goals (like cutting greenhouse gas emissions, or refraining from violent behavior) than their human overlords (politicians and public servants). The next logical step would be to let computers govern us, in their place. And that really would be curtains for humanity, because it would spell the death of freedom. There might be no coercion in such a society, but computers would effectively be making our choices for us – and, perhaps, setting the agenda for the future as well. Over the course of time, it wouldn’t be difficult for them to gradually make it more and more difficult for us to breed, resulting in the eventual extinction of the human race – not with a bang, but with a whimper.

The indispensability of language in human society

But what the foregoing scenario presupposes is that human beings can be controlled at the purely behavioral level. Such an assumption may have appeared reasonable, back in the heyday of behaviorism, in the 1930s, 1940s and 1950s. Today, however, it is manifestly absurd. It is abundantly evident that people (unlike pigeons) are motivated by more than just rewards and punishments. Human behavior is largely motivated by our beliefs, which are in turn expressed in language. And unless it can be shown that human beliefs can, without exception, be modified and manipulated by means of the incremental learning process that enables computers to achieve their goals, then it no longer follows that computers will be able to gradually extend their tentacles into every nook and cranny of our lives. For if language is the key that opens the door to human society, and if computers prove to be incapable of reaching the level of conversational proficiency expected of a normal human being (as shown by their continued inability to pass the Turing test – see here), then computers will forever be incapable of molding and shaping public opinion. And for that reason, their ability to control our behavior will prove to be very limited.

For my part, I will start worrying about computers taking over the world when someone invents a computer that’s a better orator than Abraham Lincoln, and a better propagandist than Lenin. Until then, I shall continue to regard them as nothing more than abiotic, non-sentient “super-pigeons.”

What do readers think?