One of the challenges of our day is the commonplace reduction of intelligent, insightful action to computation on a substrate. That is not just sci-fi; it is a challenge in the academy and on the street, especially as AI grabs more and more headlines.

A good stimulus for thought is John Searle as he further discusses his famous Chinese Room example:

The Failures of Computationalism

John R. Searle

Department of Philosophy, University of California, Berkeley, CA

The Power in the Chinese Room.

Harnad and I agree that the Chinese Room Argument deals a knockout blow to Strong AI, but beyond that point we do not agree on much at all. So let’s begin by pondering the implications of the Chinese Room.

The Chinese Room shows that a system, me for example, could pass the Turing Test for understanding Chinese, for example, and could implement any program you like and still not understand a word of Chinese. Now, why? What does the genuine Chinese speaker have that I in the Chinese Room do not have?

The answer is obvious. I, in the Chinese room, am manipulating a bunch of formal symbols; but the Chinese speaker has more than symbols, he knows what they mean. That is, in addition to the syntax of Chinese, the genuine Chinese speaker has a semantics in the form of meaning, understanding, and mental contents generally.

But, once again, why?

Why can’t I in the Chinese room also have a semantics? Because all I have is a program and a bunch of symbols, and programs are defined syntactically in terms of the manipulation of the symbols.

The Chinese room shows what we should have known all along: syntax by itself is not sufficient for semantics. (Does anyone actually deny this point, I mean straight out? Is anyone actually willing to say, straight out, that they think that syntax, in the sense of formal symbols, is really the same as semantic content, in the sense of meanings, thought contents, understanding, etc.?)

Why did the old time computationalists make such an obvious mistake? Part of the answer is that they were confusing epistemology with ontology, they were confusing “How do we know?” with “What it is that we know when we know?”

This mistake is enshrined in the Turing Test (TT). Indeed this mistake has dogged the history of cognitive science, but it is important to get clear that the essential foundational question for cognitive science is the ontological one: “In what does cognition consist?” and not the epistemological other minds problem: “How do you know of another system that it has cognition?”

What is the Chinese Room about? Searle, again:

Imagine that a person—me, for example—knows no Chinese and is locked in a room with boxes full of Chinese symbols and an instruction book written in English for manipulating the symbols. Unknown to me, the boxes are called “the database” and the instruction book is called “the program.” I am called “the computer.”

People outside the room pass in bunches of Chinese symbols that, unknown to me, are questions. I look up in the instruction book what I am supposed to do and I give back answers in Chinese symbols.

Suppose I get so good at shuffling the symbols and passing out the answers that my answers are indistinguishable from a native Chinese speaker’s. I give every indication of understanding the language despite the fact that I actually don’t understand a word of Chinese.

And if I do not, neither does any digital computer, because no computer, qua computer, has anything I do not have. It has stocks of symbols, rules for manipulating symbols, a system that allows it to rapidly transition from zeros to ones, and the ability to process inputs and outputs. That is it. There is nothing else. [Cf. Jay Richards here.]
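To make the purely syntactic character of the room concrete, here is a drastically simplified Python sketch. It assumes the instruction book can be modelled as a lookup table from input symbol strings to output strings; the entries and the names `RULE_BOOK`, `DEFAULT_REPLY` and `room_operator` are hypothetical. A program good enough to pass the Turing Test would need vastly more elaborate rules, but on Searle's argument those rules would still act on symbol shapes, exactly as this one does.

```python
# A drastically simplified "Chinese Room": the operator matches incoming
# symbol strings against a rule book by exact shape and hands back the
# paired string. Rule entries and names are hypothetical illustrations.

RULE_BOOK: dict[str, str] = {
    # Gloss for outsiders only: "How are you?" -> "I am fine, thanks."
    "你好吗？": "我很好，谢谢。",
    # Gloss for outsiders only: "Can you speak Chinese?" -> "Yes, I speak it fluently."
    "你会说中文吗？": "会，我说得很流利。",
}

# Gloss for outsiders only: "Please say that again."
DEFAULT_REPLY = "请再说一遍。"


def room_operator(symbols: str) -> str:
    """Return the rule book's reply for an exact string match, else a default.

    The only operation is comparing character sequences; no step consults
    what any symbol means.
    """
    return RULE_BOOK.get(symbols, DEFAULT_REPLY)


if __name__ == "__main__":
    for question in ["你好吗？", "今天天气怎么样？"]:
        print(question, "->", room_operator(question))
```

The English glosses in the comments are available only to people outside the room; deleting them changes nothing about what `room_operator` does.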

What is “strong AI”? Techopedia:

Strong artificial intelligence (strong AI) is an artificial intelligence construct that has mental capabilities and functions that mimic the human brain. In the philosophy of strong AI, there is no essential difference between the piece of software, which is the AI, exactly emulating the actions of the human brain, and actions of a human being, including its power of understanding and even its consciousness.

Strong artificial intelligence is also known as full AI.

In short, Reppert has a serious point:

. . . let us suppose that brain state A [–> notice, state of a wetware, electrochemically operated computational substrate], which is token identical to the thought that all men are mortal, and brain state B, which is token identical to the thought that Socrates is a man, together cause the belief [–> conscious, perceptual state or disposition] that Socrates is mortal. It isn’t enough for rational inference that these events be those beliefs, it is also necessary that the causal transaction be in virtue of the content of those thoughts . . . [But] if naturalism is true, then the propositional content is irrelevant to the causal transaction that produces the conclusion, and [so] we do not have a case of rational inference. In rational inference, as Lewis puts it, one thought causes another thought not by being, but by being seen to be, the ground for it. But causal transactions in the brain occur in virtue of the brain’s being in a particular type of state that is relevant to physical causal transactions.

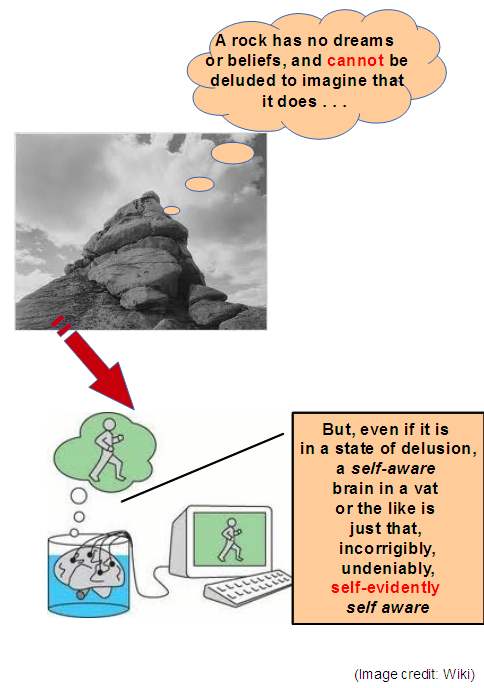

This brings up the challenge that computation [on refined rocks] is not rational, insightful, self-aware, semantically based, understanding-driven contemplation:

While this is directly about digital computers (oops, let’s see how they work):

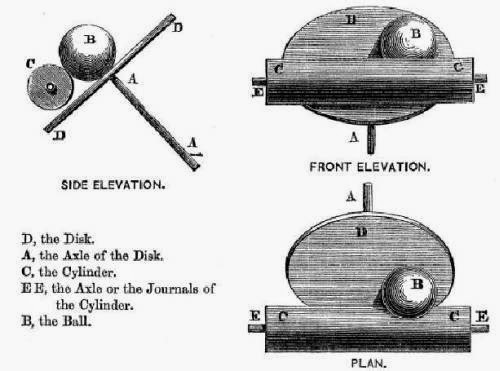

. . . but it also extends to analogue computers (which use smoothly varying signals):

. . . or a neural network:

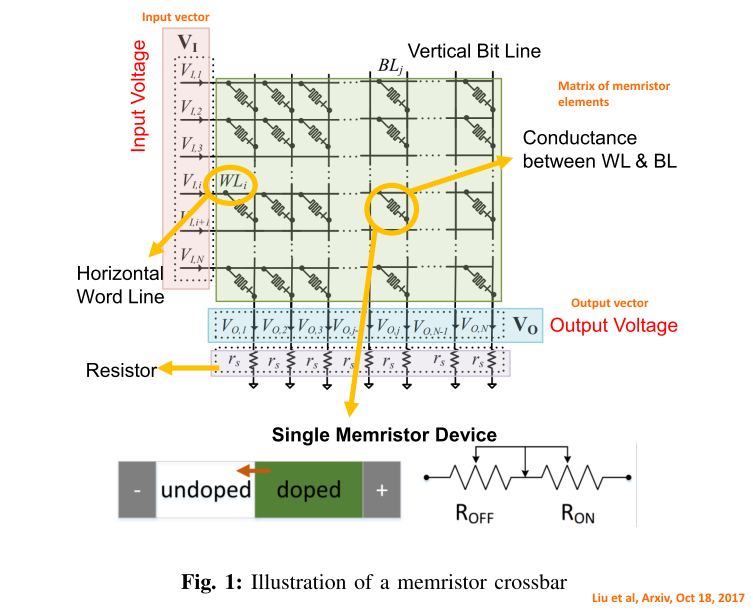

A similar approach uses memristors, creating an analogue weighted-sum (vector-matrix multiplication) operation:
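The weighted-sum operation behind both the neural network and the memristor array can be sketched in a few lines of Python. This is an idealised illustration, assuming a crossbar where each row is driven at a voltage and each crosspoint device acts as a fixed conductance; the function names and example values are hypothetical, and real arrays add wire resistance, device nonlinearity and noise.

```python
# Idealised sketch of one neural-network layer's weighted sums, computed
# (1) digitally and (2) as an analogue memristor crossbar would.
# Example values and function names are illustrative assumptions.

def weighted_sums(weights, inputs):
    """Digital view: y_j = sum_i w[i][j] * x[i] for each output column j."""
    n_out = len(weights[0])
    return [sum(weights[i][j] * inputs[i] for i in range(len(inputs)))
            for j in range(n_out)]


def crossbar_currents(conductances, voltages):
    """Idealised crossbar: row i is driven at voltage V_i and the device at
    (i, j) has conductance G_ij. Ohm's law gives a current G_ij * V_i through
    each device; Kirchhoff's current law sums those currents on column j,
    which is held at virtual ground. So I_j = sum_i G_ij * V_i."""
    currents = [0.0] * len(conductances[0])
    for i, v in enumerate(voltages):
        for j, g in enumerate(conductances[i]):
            currents[j] += g * v  # Ohm's law per device, summed per column
    return currents


def step_activation(z, threshold):
    """Simple threshold 'neuron' applied to a weighted sum."""
    return 1 if z > threshold else 0


G = [[1e-3, 2e-3],     # conductances in siemens; rows = inputs, columns = outputs
     [3e-3, 0.5e-3]]
V = [0.2, 0.1]         # volts applied to the rows

digital = weighted_sums(G, V)
analogue = crossbar_currents(G, V)
fired = [step_activation(i, 4.8e-4) for i in analogue]
print(digital, analogue, fired)
```

Because physical conductances cannot be negative, practical designs commonly represent a signed weight as the difference between two devices; the sketch sidesteps this by using only positive weights. Either way, the "multiplication" is Ohm's law and the "addition" is current summing on a wire.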

As we can see, these entities are about manipulating signals through physical interactions, not essentially different from Leibniz’s grinding mill wheels in Monadology 17:

It must be confessed, however, that perception, and that which depends upon it, are inexplicable by mechanical causes, that is to say, by figures and motions. Supposing that there were a machine whose structure produced thought, sensation, and perception, we could conceive of it as increased in size with the same proportions until one was able to enter into its interior, as he would into a mill. Now, on going into it he would find only pieces working upon one another, but never would he find anything to explain perception [i.e. abstract conception]. It is accordingly in the simple substance, and not in the compound nor in a machine that the perception is to be sought . . .

In short, computationalism falls short.

I add [Fri May 31] that computational substrates are forms of general dynamic-stochastic systems, and so are subject to their limitations:
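One standard way to write such a system, offered here as an illustrative assumption rather than as the author's own formalism, is the discrete-time stochastic state-space form. Here $x_k$ is the internal state at step $k$ (for a computer, register and memory contents or node voltages), $u_k$ the input, $y_k$ the output, $f$ and $g$ the state-transition and output maps fixed by device physics and wiring, and $w_k$, $v_k$ noise terms:

$$
\begin{aligned}
x_{k+1} &= f(x_k, u_k) + w_k,\\
y_k &= g(x_k, u_k) + v_k.
\end{aligned}
$$

On this description, each next state and output is fixed by the present state, the input and the noise draw; a deterministic digital machine is the special case $w_k = v_k = 0$.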

The alternative is a supervisory oracle-controlled, significantly free, intelligent and designing bio-cybernetic agent:

As context (HT Wiki) I add [June 10] a diagram of a Model Identification Adaptive Controller . . . which, yes, identifies a model for the plant and updates it as it goes:
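The identify-then-control loop described for a MIAC can be sketched in Python. The sketch assumes a hypothetical first-order plant `y[k+1] = a*y[k] + b*u[k]` whose parameters are unknown to the controller, uses recursive least squares (RLS) as the identification method, and applies a certainty-equivalence control law that treats the current estimate as if it were true. The plant, the function name `simulate_miac`, and all numbers are illustrative choices, not a standard implementation.

```python
# Minimal MIAC sketch: identify a first-order plant online with recursive
# least squares (RLS), then compute each control from the current model.
# Plant, parameters and names are hypothetical choices for illustration.

def simulate_miac(a_true=0.9, b_true=0.5, reference=1.0, steps=30):
    theta = [0.0, 1.0]                  # model estimate [a_hat, b_hat]; b_hat starts nonzero
    P = [[1000.0, 0.0], [0.0, 1000.0]]  # RLS covariance: large means little prior confidence
    y = 0.0
    history = []
    for _ in range(steps):
        a_hat, b_hat = theta
        if abs(b_hat) < 1e-3:           # guard against dividing by a near-zero gain estimate
            b_hat = 1e-3 if b_hat >= 0 else -1e-3
        u = (reference - a_hat * y) / b_hat  # certainty-equivalence control law

        y_next = a_true * y + b_true * u     # the plant responds (noise-free here)

        # RLS update of the model from regressor phi = [y, u] and observed y_next
        phi = [y, u]
        P_phi = [P[0][0] * phi[0] + P[0][1] * phi[1],
                 P[1][0] * phi[0] + P[1][1] * phi[1]]
        denom = 1.0 + phi[0] * P_phi[0] + phi[1] * P_phi[1]
        gain = [P_phi[0] / denom, P_phi[1] / denom]
        error = y_next - (theta[0] * phi[0] + theta[1] * phi[1])  # prediction error
        theta = [theta[0] + gain[0] * error, theta[1] + gain[1] * error]
        # P <- P - gain * (P phi)^T; P is symmetric, so phi^T P = (P phi)^T
        P = [[P[i][j] - gain[i] * P_phi[j] for j in range(2)] for i in range(2)]

        y = y_next
        history.append((y, theta[0], theta[1]))
    return history


history = simulate_miac()
y_final, a_hat, b_hat = history[-1]
print(f"output {y_final:.4f}, a_hat {a_hat:.4f}, b_hat {b_hat:.4f}")
```

After a few steps the estimates settle close to the true `a` and `b`, and the output tracks the reference. Every stage, including the "identification", is arithmetic on stored numbers.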

As I summarised recently:

What we actually observe is:

A: [material computational substrates] –X –> [rational inference]

B: [material computational substrates] —-> [mechanically and/or stochastically governed computation]

C: [intelligent agents] —-> [rational, freely chosen, morally governed inference]

D: [embodied intelligent agents] —-> [rational, freely chosen, morally governed inference]

Taken together, observations A through D imply that intelligent agency transcends computation, as the characteristics and capabilities of intelligent agents are not reducible to:

– components and their device physics,

– organisation as circuits and networks [e.g. gates, flip-flops, registers, operational amplifiers (especially integrators), ball-disk integrators, neuron-gates and networks, etc],

– organisation/architecture forming computational circuits, systems and cybernetic entities,

– input signals,

– stored information,

– processing/algorithm execution,

– outputs.
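For the circuit level of the list above (components organised as gates, gates organised into arithmetic units), here is a Python sketch that builds a ripple-carry adder from a single NAND primitive. The function names are hypothetical; starting from NAND alone reflects the standard fact that NAND is functionally complete, so every other gate here is just a composition of it.

```python
# Gates -> adders, built from one primitive. Bits are the ints 0 and 1.

def nand(a: int, b: int) -> int:
    """The only primitive: output is 0 only when both inputs are 1."""
    return 0 if (a and b) else 1

def not_(a: int) -> int:
    return nand(a, a)

def and_(a: int, b: int) -> int:
    return not_(nand(a, b))

def or_(a: int, b: int) -> int:
    return nand(not_(a), not_(b))

def xor(a: int, b: int) -> int:
    n = nand(a, b)                      # classic four-NAND XOR
    return nand(nand(a, n), nand(b, n))

def half_adder(a: int, b: int) -> tuple[int, int]:
    return xor(a, b), and_(a, b)        # (sum, carry)

def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, or_(c1, c2)

def add_bits(x: list[int], y: list[int]) -> list[int]:
    """Ripple-carry addition of equal-length, little-endian bit lists."""
    carry, out = 0, []
    for a, b in zip(x, y):
        s, carry = full_adder(a, b, carry)
        out.append(s)
    out.append(carry)                   # final carry becomes the top bit
    return out

def to_bits(n: int, width: int) -> list[int]:
    return [(n >> i) & 1 for i in range(width)]

def from_bits(bits: list[int]) -> int:
    return sum(b << i for i, b in enumerate(bits))

print(from_bits(add_bits(to_bits(6, 4), to_bits(7, 4))))  # 13
```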

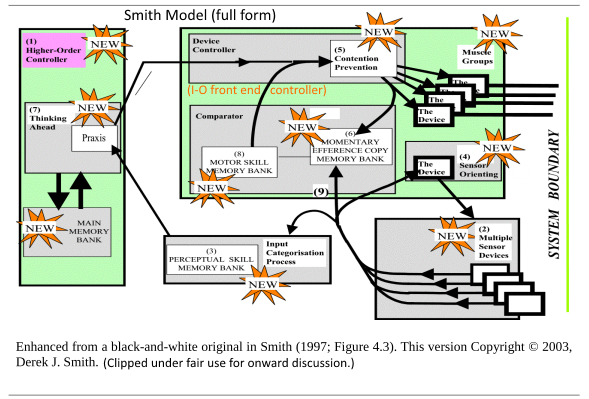

It may be useful to add here a simplified Smith model, with an in-the-loop computational controller and an out-of-the-loop supervisory oracle, so that there is room to ponder the bio-cybernetic system in light of the interface between the computational entity and the oracular entity:

In more detail, per Eng. Derek Smith:

So too, we have to face what the freedom necessary for rationality implies: our minds are governed by known, inescapable duties to truth, right reason, prudence (so, warrant), fairness, justice, etc. Rationality is morally governed; it inherently exists on both sides of the IS-OUGHT gap.

That means (on pain of reducing rationality to nihilistic chaos and absurdity) that the gap must be bridged. Post-Hume, it is known that this can only be done in the root of reality. Arguably, that points to an inherently good necessary being with the capability to found a cosmos. If you doubt this, provide a serious alternative under comparative difficulties: ____________

So, as we consider debates on intelligent design, we need to reflect on what intelligence is, especially in an era where computationalism is a dominant school of thought. Yes, we may come to various views, but the above are serious factors we need to take into account. END

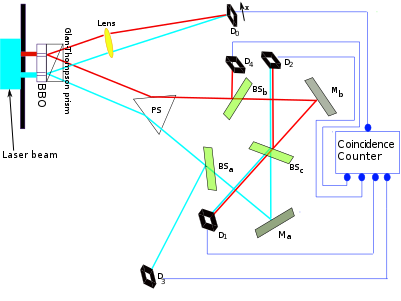

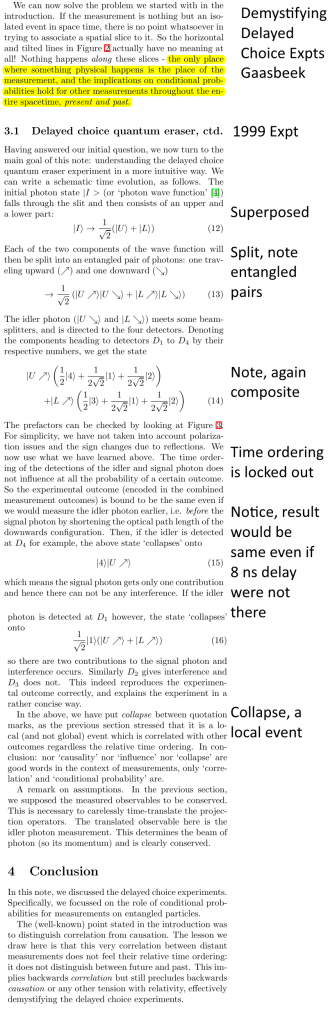

PS: As a secondary exchange developed on quantum issues, I take the step of posting a screenshot from a relevant Wikipedia clip on the 1999 delayed-choice quantum eraser experiment by Kim et al.:

The layout at a larger scale:

Gaasbeek adds:

Weird, but that’s what we see. Notice especially Gaasbeek’s observation, from his analysis, that “the experimental outcome (encoded in the combined measurement outcomes) is bound to be the same even if we would measure the idler photon earlier, i.e. before the signal photon by shortening the optical path length of the downwards configuration.” This is the point made in a recent SEP discussion on retrocausality.
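Gaasbeek's remark can be illustrated with an idealised textbook model of the eraser branch; this is a simplification assumed here, not his analysis or Kim et al.'s full setup. Let $\psi_A(x) = \langle x \vert A\rangle$ and $\psi_B(x) = \langle x \vert B\rangle$ be the signal photon's amplitudes at screen position $x$ from source regions A and B, entangled with idler states $\lvert a\rangle$ and $\lvert b\rangle$, and suppose the idler meets an ideal 50:50 beam splitter whose detectors $D_1$ and $D_2$ project onto $\lvert d_{1,2}\rangle$ (phase conventions chosen for simplicity):

$$
\lvert\Psi\rangle = \tfrac{1}{\sqrt{2}}\left(\lvert A\rangle_s\lvert a\rangle_i + \lvert B\rangle_s\lvert b\rangle_i\right),
\qquad
\lvert d_{1,2}\rangle = \tfrac{1}{\sqrt{2}}\left(\lvert a\rangle \pm \lvert b\rangle\right),
$$

$$
P(x, D_{1,2}) = \left\lvert \left(\langle x\rvert \otimes \langle d_{1,2}\rvert\right)\lvert\Psi\rangle \right\rvert^2
= \tfrac{1}{4}\left(\lvert\psi_A(x)\rvert^2 + \lvert\psi_B(x)\rvert^2 \pm 2\,\mathrm{Re}\!\left[\psi_A^*(x)\,\psi_B(x)\right]\right),
$$

$$
P(x, D_1) + P(x, D_2) = \tfrac{1}{2}\left(\lvert\psi_A(x)\rvert^2 + \lvert\psi_B(x)\rvert^2\right).
$$

Sorting signal detections by idler outcome reveals fringes ($D_1$) and antifringes ($D_2$), but their sum has no interference term, so the unsorted signal pattern shows none. Since the joint probabilities come from one expression for measurements on two separate photons, they are the same whichever photon is detected first, which is the sense of Gaasbeek's remark.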

PPS: Let me also add, on radio halos:

and on Fraunhofer spectra:

These document natural detection of quantised phenomena.
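As one concrete case of quantised phenomena in Fraunhofer spectra: the solar C and F lines are hydrogen's Balmer H-alpha and H-beta transitions, whose wavelengths follow from hydrogen's discrete energy levels via the Rydberg formula. The Python sketch below computes them; the constants are rounded CODATA values and the function name is hypothetical. It gives vacuum wavelengths, which are slightly longer than the air wavelengths usually tabulated for Fraunhofer lines (about 656.3 nm and 486.1 nm).

```python
# Hydrogen Balmer lines from the Rydberg formula:
#   1/lambda = R_H * (1/2**2 - 1/n**2),  n = 3, 4, ...
# Simple Bohr-level formula: ignores fine structure and other small corrections.

R_INF = 10_973_731.568              # Rydberg constant, m^-1 (CODATA, rounded)
MP_OVER_ME = 1836.15267             # proton-to-electron mass ratio (CODATA, rounded)
R_H = R_INF / (1 + 1 / MP_OVER_ME)  # reduced-mass correction for hydrogen


def balmer_wavelength_nm(n_upper: int) -> float:
    """Vacuum wavelength in nm of the hydrogen transition n_upper -> 2."""
    if n_upper <= 2:
        raise ValueError("Balmer lines need an upper level n > 2")
    inverse_wavelength = R_H * (1 / 4 - 1 / n_upper**2)  # in m^-1
    return 1e9 / inverse_wavelength


for n, label in [(3, "H-alpha (Fraunhofer C)"), (4, "H-beta (Fraunhofer F)")]:
    print(f"{label}: {balmer_wavelength_nm(n):.2f} nm in vacuum")
```

Only discrete values of `n_upper` are allowed, which is why the lines are sharp rather than a continuum.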