Just what is “chance”?

This point has proved contentious in recent UD discussions, so let me clip from the very first UD Foundations post and look at a paradigm example, a falling, tumbling die:

2 –>As an illustration, we may discuss a falling, tumbling die:

Heavy objects tend to fall under the law-like natural regularity we call gravity. If the object is a die, the face that ends up on the top from the set {1, 2, 3, 4, 5, 6} is for practical purposes a matter of chance.

But, if the die is cast as part of a game, the results are as much a product of agency as of natural regularity and chance. Indeed, the agents in question are taking advantage of natural regularities and chance to achieve their purposes!

[Also, the die may be loaded, so that it will be biased or even of necessity will produce a desired outcome. Or, one may simply set a die to read as one wills.]

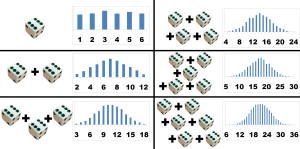

{We may extend this by plotting the (observed) distribution of dice . . . observing with Muelaner [here], how the sum tends to a normal curve as the number of dice rises:}
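For those who would like to try the dice-sum pattern at home, here is a minimal sketch in Python. It is not Muelaner's plot, just a quick simulation under stated assumptions: the dice are fair, the throws are independent, and the names `throw_sums` and `text_histogram` are made up for this illustration. For fair dice the theoretical mean of the total is 3.5 per die and the variance is 35/12 per die, so the simulated figures can be compared against those.

```python
import random
from collections import Counter
from statistics import mean, pvariance


def throw_sums(n_dice, n_throws=20000, seed=1):
    """Return the totals from n_throws casts of n_dice fair dice."""
    rng = random.Random(seed)  # fixed seed so a run can be repeated
    return [
        sum(rng.randint(1, 6) for _ in range(n_dice))
        for _ in range(n_throws)
    ]


def text_histogram(sums, width=50):
    """Render the observed distribution of totals as rows of '#' marks."""
    counts = Counter(sums)
    peak = max(counts.values())
    lines = []
    for total in range(min(counts), max(counts) + 1):
        bar = "#" * round(width * counts[total] / peak)
        lines.append(f"{total:3d} {bar}")
    return "\n".join(lines)


if __name__ == "__main__":
    for n in (1, 2, 5):
        sums = throw_sums(n)
        print(
            f"{n} dice: mean {mean(sums):.2f} (theory {3.5 * n:.2f}), "
            f"variance {pvariance(sums):.2f} (theory {35 * n / 12:.2f})"
        )
        print(text_histogram(sums))
```

With one die the bars should come out roughly level (effectively flat random), with two dice a triangle, and by five dice the familiar bell shape begins to show.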

Then, from No 21 in the series, we may bring out thoughts on the two types of chance:

Chance:

TYPE I: the clash of uncorrelated trains of events, such as is seen when a dropped fair die hits a table etc. and tumbles, settling to readings in the set {1, 2, . . . 6} in a pattern that is effectively flat random. In this sort of event, we often see sensitive dependence on initial conditions (aka chaos) intersecting with uncontrolled or uncontrollable small variations, yielding a result that is predictable in most cases only up to a statistical distribution, which need not be flat random.

TYPE II: processes, especially quantum ones, that are evidently random, such as the quantum tunnelling that explains alpha decay. Such randomness is used in, for instance, Zener noise sources that drive special counter circuits to give a random number source. These are sometimes used in lotteries or the like, or presumably in making the one-time message pads used to encode and decode messages.

{Let’s add a Quincunx or Galton Board demonstration, to see the sort of contingency we are speaking of in action and its results . . . here in a normal bell-shaped curve, note how the ideal math model and the stock distribution histogram align with the beads:}

[youtube AUSKTk9ENzg]
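The Galton Board can also be mimicked in a few lines of Python. This is only a sketch under an idealising assumption, not a model of the physical board in the video: each bead is taken to bounce left or right with equal odds at every row of pegs, independently of what went before. The slot a bead lands in is then just its count of rightward bounces, and the ideal slot counts follow the binomial pattern, which approaches the bell curve as rows are added. The function names are illustrative only.

```python
import random
from math import comb


def galton_board(n_rows=12, n_beads=5000, seed=2):
    """Drop beads through n_rows of pegs; return the bead count per slot."""
    rng = random.Random(seed)  # fixed seed so a run can be repeated
    bins = [0] * (n_rows + 1)
    for _ in range(n_beads):
        # Assumed: a 50/50 left-or-right bounce at each row of pegs.
        slot = sum(rng.random() < 0.5 for _ in range(n_rows))
        bins[slot] += 1
    return bins


def ideal_bins(n_rows=12, n_beads=5000):
    """Expected bead count per slot from the binomial model."""
    return [
        n_beads * comb(n_rows, k) / 2 ** n_rows
        for k in range(n_rows + 1)
    ]


if __name__ == "__main__":
    observed = galton_board()
    expected = ideal_bins()
    for slot, (obs, exp) in enumerate(zip(observed, expected)):
        print(f"slot {slot:2d}: observed {obs:5d}  ideal {exp:8.1f}")
```

No single bead's path is predictable, yet the pile as a whole settles reliably into the same overall shape.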

Why the fuss and feathers?

Because stating a clear enough understanding of what design thinkers are talking about when we refer to “chance” is now important given some of the latest obfuscatory talking points. So, bearing the above in mind, let us look afresh at a flowchart of the design inference process:

(So, we first envision nature acting by low contingency mechanical necessity, such as with F = m*a . . . think of a heavy unsupported object near the earth’s surface falling with initial acceleration g = 9.8 N/kg, or equivalently 9.8 m/s², or so. That is the first default. High contingency, by contrast, knocks out the first default: under similar starting conditions, there is a broad range of possible outcomes. If things are highly contingent in this sense, the second default is: CHANCE. That is only knocked out if an aspect of an object, situation, or process etc. exhibits, simultaneously: (i) high contingency, (ii) tight specificity of configuration relative to the possible configurations of the same bits and pieces, (iii) high complexity or information-carrying capacity, usually beyond 500 – 1,000 bits. For more context you may go back to the same first post, on the design inference. And yes, that will now also link this for an all in one go explanation of chance, so there!)
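To make the order of the defaults concrete, the steps just described can be laid out as a small Python sketch. This is an illustration of the sequence of decisions only, not an implementation of the flowchart itself. The function and parameter names are hypothetical, the yes/no judgements on contingency and specificity are simply passed in as given, and the 500-bit default is the lower end of the range mentioned above.

```python
def configurations(bits):
    """Number of distinct configurations that a given count of bits can hold."""
    return 2 ** bits


def design_filter(high_contingency, specific, info_bits, threshold_bits=500):
    """Walk the defaults in order: necessity, then chance, then design.

    All inputs are assumed to have been judged beforehand; this sketch
    only encodes the order in which the defaults are knocked out.
    """
    if not high_contingency:
        return "necessity"  # first default: law-like regularity
    if specific and info_bits > threshold_bits:
        return "design"     # all three conditions are met together
    return "chance"         # second default for highly contingent outcomes


if __name__ == "__main__":
    print(design_filter(False, False, 0))    # unsupported heavy object falls
    print(design_filter(True, False, 1000))  # contingent but not specific
    print(design_filter(True, True, 1000))   # contingent, specific, complex
    print(len(str(configurations(500))), "digits in 2**500")
```

The last line gives a feel for the threshold: 500 bits corresponds to 2^500 possible configurations, a number of 151 digits, or roughly 3.27 × 10^150.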

Okie, let us trust there is sufficient clarity for further discussion on the main point. Remember, whatever meanings you may wish to inject into “chance,” the above is more or less what design thinkers mean when we use it — and I daresay, it is more or less what most people (including most scientists) mean by chance in light of experience with dice-using games, flipped coins, shuffled cards, lotteries, molecular agitation, Brownian motion and the like. At least, when hair-splitting debate points are not being made. It would be appreciated if that common sense based usage by design thinkers is taken into reckoning. END