We usually think of basic mathematics, such as introductory calculus, as fairly solid. However, recent research by UD authors shows that calculus notation needs revising.

Many people complain about ID by saying, essentially, “ID can’t be true because all of biology depends on evolution.” This is obviously a gross overstatement (biology as a field was just fine even before Darwin), but I understand the sentiment. Evolution is a basic part of biology (taught in intro biology), and therefore it would be surprising to biologists to find that fundamental pieces of it were wrong.

However, the fact is that fundamental aspects of various fields are oftentimes wrong. Surprisingly, this sometimes has little impact on the field itself. If premise A is faulty and leads to faulty conclusions, a workaround B can often be invoked to avoid A’s problems. A then keeps working, as long as B is there to compensate for it.

Anyway, I wanted to share my own experience of this with calculus. Some of you know that I published a calculus book last year. My goal was mostly to counteract the dry, boring, and difficult-to-understand textbooks that dominate the field. However, when I came to the second derivative, I realized that not only is the notation unintuitive, but there is literally no explanation for it in any textbook I could find.

For those who don’t know, the notation for the first derivative is  . The first derivative is the ratio of the change in y (dy) compared to the change in x (dx). The notation for the second derivative is

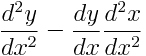

. The first derivative is the ratio of the change in y (dy) compared to the change in x (dx). The notation for the second derivative is  . However, there is not a cogent explanation for this notation. I looked through 20 (no kidding!) textbooks to find an explanation for why the notation was the way that it was.

. However, there is not a cogent explanation for this notation. I looked through 20 (no kidding!) textbooks to find an explanation for why the notation was the way that it was.

Additionally, I found that the notation itself is problematic. Although it is written as a fraction, its numerator and denominator cannot be separated and manipulated algebraically (for instance, cancelled in the chain rule, as is routinely done with the first derivative) without causing math errors. This problem is somewhat more widely known, and there is a workaround for it, known as Faà di Bruno’s formula.
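As a concrete sketch of the problem: if $\frac{d^2y}{dx^2}$ behaved like a fraction, one could write $\frac{d^2y}{dx^2} = \frac{d^2y}{du^2}\cdot\frac{du^2}{dx^2} = \frac{d^2y}{du^2}\left(\frac{du}{dx}\right)^2$. The correct second-order chain rule (Faà di Bruno’s formula for $n = 2$) has an extra term:

$$\frac{d^2y}{dx^2} = \frac{d^2y}{du^2}\left(\frac{du}{dx}\right)^2 + \frac{dy}{du}\frac{d^2u}{dx^2}.$$

The Python below checks this numerically with central differences for the illustrative choice $y = u^3$, $u = x^2$ (so $y = x^6$) at $x = 1$. The functions, the evaluation point, and the step sizes are assumptions made for this example, not anything taken from the paper.

```python
# Illustrative numerical check (functions and step sizes are hypothetical choices).


def first_derivative(f, x, h=1e-5):
    """Central-difference approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)


def second_derivative(f, x, h=1e-4):
    """Central-difference approximation of f''(x)."""
    return (f(x + h) - 2 * f(x) + f(x - h)) / (h * h)


def outer(u):
    return u ** 3  # y as a function of u


def inner(x):
    return x ** 2  # u as a function of x


def composite(x):
    return outer(inner(x))  # y = (x**2)**3 = x**6


x0 = 1.0
u0 = inner(x0)

# Second derivative of the composite computed directly.
true_value = second_derivative(composite, x0)

# "Cancelling" du**2 as if the second-derivative notation were a fraction.
naive = second_derivative(outer, u0) * first_derivative(inner, x0) ** 2

# Faa di Bruno's formula for n = 2 adds the missing dy/du * d2u/dx2 term.
faa_di_bruno = naive + first_derivative(outer, u0) * second_derivative(inner, x0)

print(f"true {true_value:.4f}, naive {naive:.4f}, Faa di Bruno {faa_di_bruno:.4f}")
```

The naive product comes out near 24, while the true second derivative of $x^6$ at $x = 1$ is 30; the extra term $\frac{dy}{du}\frac{d^2u}{dx^2} = 3 \cdot 2 = 6$ accounts for the difference.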

My goal was to present a reason for the notation to my readers/students, so that they could more intuitively grasp the purpose of the notation. So, I decided that since no one else was providing an explanation, I would try to derive the notation myself.

Well, when I tried to derive it directly, it turned out that the notation is simply wrong (footnote: many mathematicians don’t like my using the term “wrong”, but I would argue that a fraction that can’t be treated like a fraction *is* wrong, especially when there is an alternative that does work like a fraction). Most people forget that $\frac{dy}{dx}$ is, in fact, a quotient. Therefore, the proper rule to apply to it is the quotient rule (a first-year calculus rule). When you apply it to the first-derivative notation, the notation for the second derivative (the derivative of the derivative) is actually $\frac{d^2y}{dx^2} - \frac{dy}{dx}\frac{d^2x}{dx^2}$. This notation can be fully treated as a fraction, and requires no secondary formulas to work with.
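To spell out the quotient-rule step: treating $dy$ and $dx$ as quantities with their own differentials, $d(dy) = d^2y$ and $d(dx) = d^2x$, the quotient rule gives

$$d\left(\frac{dy}{dx}\right) = \frac{dx\,d^2y - dy\,d^2x}{(dx)^2},$$

and dividing by $dx$ yields

$$\frac{d\left(\frac{dy}{dx}\right)}{dx} = \frac{d^2y}{dx^2} - \frac{dy}{dx}\frac{d^2x}{dx^2},$$

where $dx^2$ means $(dx)^2$. When $x$ is the independent variable, $d^2x = 0$ and the extra term vanishes, which is presumably why the standard notation works in that case. The Python below is a minimal numerical sketch (not code from the paper) that checks the full expression on a parametric curve, assuming all differentials are taken with respect to a common parameter $t$ and approximated by central differences. The curve $x = t^2$, $y = t^3$ and the helper names `rate` and `rate2` are illustrative choices.

```python
# Minimal numerical sketch (illustrative, not from the paper).
# Curve chosen for the example: x = t**2, y = t**3 with t > 0, so y = x**1.5
# and the true second derivative is d2y/dx2 = 0.75 / sqrt(x).


def x_of(t):
    return t ** 2


def y_of(t):
    return t ** 3


def rate(f, t, h=1e-5):
    """Central-difference estimate of df/dt (a first differential per dt)."""
    return (f(t + h) - f(t - h)) / (2 * h)


def rate2(f, t, h=1e-4):
    """Central-difference estimate of d2f/dt2 (a second differential per dt**2)."""
    return (f(t + h) - 2 * f(t) + f(t - h)) / (h * h)


t0 = 2.0
x_t, y_t = rate(x_of, t0), rate(y_of, t0)      # dx/dt, dy/dt
x_tt, y_tt = rate2(x_of, t0), rate2(y_of, t0)  # d2x/dt2, d2y/dt2

# Each ratio below has matching powers of dt on top and bottom,
# so dt cancels and the per-dt values can be used directly.
dy_dx = y_t / x_t                 # dy/dx
d2y_over_dx_sq = y_tt / x_t ** 2  # literal ratio d2y / (dx)**2
d2x_over_dx_sq = x_tt / x_t ** 2  # literal ratio d2x / (dx)**2

bare_term = d2y_over_dx_sq
full_expression = d2y_over_dx_sq - dy_dx * d2x_over_dx_sq
true_value = 0.75 / x_of(t0) ** 0.5

print(f"bare ratio {bare_term:.4f}, full expression {full_expression:.4f}, "
      f"true value {true_value:.4f}")
```

Here $x$ is not the independent variable, so $d^2x \neq 0$. Reading $d^2y/(dx)^2$ literally as a ratio gives about 0.75, while the full expression matches the true value 0.375 (from $y = x^{3/2}$, so $\frac{d^2y}{dx^2} = \tfrac{3}{4}x^{-1/2}$ at $x = 4$).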

What does this have to do with Intelligent Design? Not much directly. However, it does illustrate three points. First, in any discipline, asking good questions about the fundamentals may lead to revising even some of the most basic aspects of the field. This is precisely what philosophy does, and I recommend the broader application of philosophy to science. Second, it shows that even newbies can make a contribution. In fact, I found this out precisely because I *was* a newbie. Finally, in a more esoteric fashion (but more directly applicable to ID), forcing everything into materialistic boxes limits the progress of all fields. I believe the reason this was not noticed before is that, since the 1800s, mathematicians have not wanted to believe that infinitesimals are valid entities. Therefore, they were not concerned when the second derivative did not operate as a fraction: it didn’t need to, because it indeed wasn’t a fraction. Infinities and infinitesimals are the non-materialistic aspects of mathematics, just as teleology, purpose, and desire are the non-materialistic aspects of biology.

Anyway, for those who want to read the paper, it is available here:

Bartlett, Jonathan, and Asatur Khurshudyan. 2019. “Extending the Algebraic Manipulability of Differentials.” *Dynamics of Continuous, Discrete and Impulsive Systems, Series A: Mathematical Analysis* 26(3): 217-230.

I would love any comments, questions, or feedback.