One of the saddest aspects of the debates over the design inference on empirically reliable signs such as FSCO/I is the way evolutionary materialist objectors and fellow travellers routinely insist on distorting the ID view, even after many corrections. (Kindly note the weak argument correctives, accessible under the UD Resources Tab, which address many of these.)

Indeed, the introduction to the just linked WACs is forced to remark:

. . . many critics mistakenly insist that ID, in spite of its well-defined purpose, its supporting evidence, and its mathematically precise paradigms, is not really a valid scientific theory. All too often, they make this charge on the basis of the scientists’ perceived motives.

We have noticed that some of these false objections and attributions, largely products of an aggressive Darwinist agenda, have found their way into institutions of higher learning, the press, the United States court system, and even the European Union policy directives. Routinely, they find expression in carefully-crafted talking points, complete with derogatory labels and personal caricatures, all of which appear to have been part of a disinformation campaign calculated to mislead the public.

Many who interact with us on this blog recycle this misinformation. Predictably, they tend to raise notoriously weak objections that have been answered thousands of times . . .

Overnight, long-term objector RDF provides a case in point, despite his having been corrected many, many, many times over months and even years. So, it is appropriate to showcase the case in point I responded to just now, here at 47 in the WJM on a roll thread, filling in a few images and the like:

___________

>>RDF:

Pardon, but this — after all this time — needs correction:

my point is that if ID is proposing that a known cause of complexity is responsible for biological complexity, then ID is proposing that human beings were responsible – clearly a poor hypothesis. Alternatively, ID can propose an unknown cause that somehow has the same sort of mental and physical abilities as human beings. But in that case, ID would need to show evidence that this sort of thing exists.

Let’s take in slices:

>> my point is that if ID is proposing that a known cause of complexity>>

1: Design theory does not address simple complexity, but specified complexity, and particularly functionally specified complexity that requires a cluster of correct, properly arranged and coupled parts to achieve a function, often in life forms at cell based level using molecular nanotech, codes and algorithms . . . such as the protein synthesis process.

>> . . . is responsible for biological complexity,>>

2: Biological, FUNCTIONALLY SPECIFIC complex organisation, e.g. the protein synthesis system etc. (More generally, functionally specified, complex organisation and/or associated information, FSCO/I, requires many well-matched components, correctly arranged and coupled to achieve function, such as the glyph strings in this English text, or the algorithmic function of strings in D/RNA used to guide protein assembly in the ribosome.)

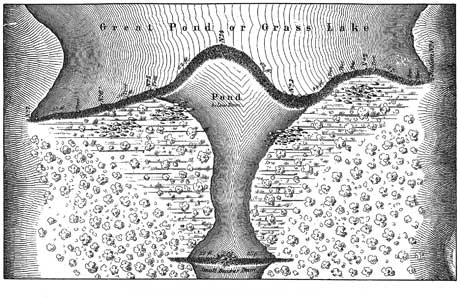

Where that constraint on configuration to achieve function locks us to isolated islands of function in the configuration space of possible arrangements of components. Thus, beyond 500 – 1,000 bits of specified complex arrangement to achieve function, we see a material blind search challenge on chance and mechanical necessity that is readily solved by intelligence, whether human [this text, underlying software and hardware] or beavers [dams adapted to stream specifics in a feat of impressive engineering] etc. Where we may simply measure FSCO/I using the Chi_500 threshold metric:

FSCO/I on the gamut of our solar system is detected when the following metric goes positive:

Chi_500 = I*S – 500, bits beyond the solar system threshold [with 1,000 bits being adequate for the observed cosmos]

in which I is a reasonable info metric, most easily seen as the string of Y/N questions to specify configuration in a field of possibilities, such as is commonly done with AutoCAD files or the like

with S a dummy variable defaulting to zero (chance as default explanation of high contingency, cheerfully accepting the possibilities of false negatives), and set high on noting good reason and evidence of functional specificity, e.g. key-lock fitting of proteins sensitive to sequence and folding

where 500 bits gives us a “haystack” sufficiently large to overwhelm the capacity of 10^57 atoms for 10^17 s, each making 10^14 observations per second [a fast chem rxn rate] of chance configs for 500 bits,

comparable to taking a one straw sized sample blindly from a cubical haystack of possible configs for 500 bits [3.27*10^150] that is 1,000 light years on the side, about as thick as our galaxy . . . light setting out when William the Conqueror attacked Saxon England in 1066 AD would still not have crossed the stack today

so that if S = 1 and I > 500 bits, Chi_500 going positive convincingly points to design as best explanation as such a blind search of a haystack superposed on our galactic neighbourhood would with moral certainty beyond reasonable doubt produce naught but the typical finding: a straw

but by contrast, on trillions of observed cases, design is the reliably known cause of FSCO/I
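For readers who want to check the arithmetic, the metric and the search-resource figures above can be put into a few lines of Python. This is only a rough sketch under stated assumptions: the function name `chi_500` and its parameter names are made up for illustration, S is treated as a simple 0/1 flag (as in "if S = 1" above), and how the information value I is estimated for a real case is left outside the sketch.

```python
from math import log10


def chi_500(info_bits: float, s: int, threshold_bits: int = 500) -> float:
    """Return I*S - threshold, i.e. bits beyond the threshold.

    info_bits: I, the information measure in bits (estimating it is
        outside the scope of this sketch).
    s: the dummy variable S, assumed here to be 0 (default, chance)
        or 1 (functional specificity judged to be present).
    threshold_bits: 500 for the solar system, 1000 for the observed cosmos.
    """
    if s not in (0, 1):
        raise ValueError("S is treated as a 0/1 dummy variable in this sketch")
    return info_bits * s - threshold_bits


# Search-resource figures quoted in the text.
ATOMS = 10**57            # atoms in the solar system
SECONDS = 10**17          # available time, in seconds
OBS_PER_SECOND = 10**14   # observations per atom per second

max_observations = ATOMS * SECONDS * OBS_PER_SECOND   # 10**88
config_space = 2**500                                  # about 3.27 * 10**150

# Work in log10 so the very large integers stay readable.
log10_fraction_sampled = log10(max_observations) - log10(config_space)

if __name__ == "__main__":
    print(chi_500(600, 1))   # 100: positive, beyond the threshold
    print(chi_500(600, 0))   # -500: S = 0 keeps chance as the default
    print(f"log10 of fraction sampled: {log10_fraction_sampled:.1f}")
```

On these figures the 10^88 possible observations cover roughly 3 parts in 10^63 of the 2^500 configurations, which is the one-straw-to-haystack ratio described above. Passing `threshold_bits=1000` gives the observed-cosmos version of the same test.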

3: The rhetorical substitution made here therefore dodges a substantial case and sets up a strawman caricature, for which — given longstanding, repeated corrections across months and years — the error involved unfortunately has to be willful.

>> then ID is proposing that human beings were responsible – clearly a poor hypothesis.>>

4: Strawman.

5: First, the very names involved are the design inference and the theory of intelligent design. At no point is there a process of inference to human action or any particular agent, only, to a process that is observed and known per observations to not only be adequate to produce the phenomenon FSCO/I, but on trillions of cases, the ONLY observed process to do this.

6: This, multiplied by needle in haystack blind search challenge analysis that points to the gross inadequacy of blind chance and mechanical necessity on the gamut of the solar system or observed cosmos to find relevant deeply isolated islands of function.

7: Where, starting with beavers and the like, we have no good reason to infer that humans exhaust actual much less possible intelligences capable of intelligently directed contingency or contrivance, i.e. design.

8: As a further level of misrepresentation, the design inference is about causal process not identification of specific classes of agents or particular agents. One first identifies that a factory fire is suspicious and then infers arson on signs, before going on to call in the detectives to try to detect the particular culprit. Signs, that indicate that more than blind chance and the mechanical necessities of starting and propagating a fire were at work.

9: This willful caricature, after years of correction, then sets up the next step:

>>Alternatively, ID can propose an unknown cause that somehow has the same sort of mental and physical abilities as human beings.>>

10: As has been pointed out to you, RDF, over and over again and stubbornly ignored in the rush to set up and knock over a favourite strawman caricature,

the design inference process sets up no unknown cause [here a synonym for an agent], but compares known, empirically evident causal factors and their characteristic or typical traces.

11: Mechanical necessity is noted for low contingency natural regularities, e.g. guavas and apples reliably drop from trees under an initial acceleration of 9.8 m/s^2 (a field strength of 9.8 N/kg), and, attenuating as the inverse square of distance out to the surface of a sphere at the distance to the moon, the same force field aptly accounts for the moon's centripetal acceleration, grounding Newtonian gravitation analysis.

12: Blind chance tends to cause high contingency, but stochastically controlled contingency similar to how a Monte Carlo simulation analysis explores reasonably likely clusters of possibilities in a highly contingent situation.

13: But, some needles can be too isolated and some haystacks too big relative to sampling resources, for us to reasonably expect to find one needle, much less the thousands that are in just the so-called simple cell, i.e. the cluster of proteins and the nanomachines involved.

14: So, we are epistemically entitled to infer that the only vera causa plausible process that accounts for the needles coming up trumps is design. That is, intelligently directed contingency or contrivance.

15: Where also, the base of trillions of observations showing that design is the reliably known — and ONLY actually observed — causal process accounting for such FSCO/I makes it also a very strong, reliable sign of design as key causal factor involved where it is observed.

16: This bit of inductive reasoning then exposes the selectively hyperskeptical rhetorical agenda in:

>>But in that case, ID would need to show evidence that this sort of thing exists.>>

17: Designers exist, human, beaver and more. Where, we have no good reason whatsoever to assume, assert, insinuate or imply that human and similar cases exhaust possible cases of designers. So, designers exist and are therefore possible.

18: Likewise, FSCO/I on very strong empirical basis, is a highly reliable index of design.

19: Therefore, until someone can reasonably show otherwise empirically, we are inductively entitled to take the occurrence of FSCO/I — even in unexpected or surprising contexts — as evidence of design as relevant causal process.

20: So, why the implicit demand for separate, direct empirical evidence of designers in the remote unobserved past of origins? And why, by contrast, the great willingness to assign causal success to very implausible mechanisms for FSCO/I such as chance and necessity, which are not needle in haystack plausible and have never been observed to account for FSCO/I?

21: Selective hyperskepticism joined to flip-side hypercredulity to substitute a drastically inferior explanation. In the wider context, typically for fear and loathing of the possibility of . . . shudder . . . “A Divine Foot” in the door of the halls of evolutionary materialism dominated science.

22: Of course, ever since 1984, with Thaxton et al, design theorists have been careful to be conservative, noting that in effect for the case of what we see in the living cell and wider biological life, a molecular nanotech lab some generations beyond Venter et al would be adequate. But so locked in a death-battle with bogeyman “Creationists” are the materialists and fellow travellers that they too often will refuse to acknowledge any point, regardless of warrant, that could conceivably give hope to Creationists.

23: So, the issues of duties to reason, truth and fairness are predictably given short shrift.

24: Oddly, most such activists are typically missing in action when we point out, from the thought of lifelong agnostic and Nobel-equivalent Prize-holding astrophysicist Sir Fred Hoyle and others, that the evidence of cosmological fine tuning, which sets up a world in which we can have C-chemistry, aqueous medium, protein-using, cell-based life on the five or six most abundant elements, points to cosmological design; most credibly by a powerful, skilled and purposeful designer who set up physics itself to be the basis for such a world.

25: Here’s a key comment — just one of several — by Sir Fred:

From 1953 onward, Willy Fowler and I have always been intrigued by the remarkable relation of the 7.65 MeV energy level in the nucleus of 12C to the 7.12 MeV level in 16O. If you wanted to produce carbon and oxygen in roughly equal quantities by stellar nucleosynthesis, these are the two levels you would have to fix, and your fixing would have to be just where these levels are actually found to be. Another put-up job? . . . I am inclined to think so. A common sense interpretation of the facts suggests that a super intellect has “monkeyed” with the physics as well as the chemistry and biology, and there are no blind forces worth speaking about in nature. [F. Hoyle, Annual Review of Astronomy and Astrophysics, 20 (1982): 16.]

It seems that ideology rules the roost in present day origins science thinking (and in science education), even at the price of clinging to the inductively implausible in order to repudiate anything that might conceivably hint that design best accounts for our world. >>

___________

It is high time to take duties of care to fairness, accuracy and truth seriously, and to actually address the inductive evidence and the needle in haystack analysis challenge on the merits. END