The concept of information is central to intelligent design. In previous discussions, we have examined the basic concept of information, we have considered the question of when information arises, and we have briefly dipped our toes into the waters of Shannon information. In the present post, I put forward an additional discussion regarding the latter, both so that the resource is out there front and center and also to counter some of the ambiguity and potential confusion surrounding the Shannon metric.

As I have previously suggested, much of the confusion regarding “Shannon information” arises from the unfortunate twin facts that (i) the Shannon measurement has come to be referred to by the word “information,” and (ii) many people fail to distinguish between information about an object and information contained in or represented by an object. Unfortunately, due to linguistic inertia, there is little to be done about the former. Let us consider today an example that I hope will help address the latter.

At least one poster on these pages takes regular opportunity to remind us that information must, by definition, be meaningful – it must inform. This is reasonable, particularly as we consider the etymology of the word and its standard dictionary definitions, one of which is: “the act or fact of informing.”

Why, then, the occasional disagreement about whether Shannon information is “meaningful”?

The key is to distinguish between information about an object and information contained in or represented by an object.

A Little String Theory

Consider the following two strings:

String 1:

kqsifpsbeiiserglabetpoebarrspmsnagraytetfs

String 2:

The first string is an essentially-random string of English letters. (Let’s leave aside for a moment the irrelevant question of whether anything is truly “random.” In addition, let’s assume that the string does not contain any kind of hidden code or message. Treat it for what it is intended to be: a random string of English letters.)

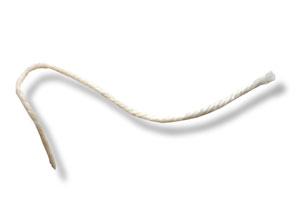

The second string is, well, a string. (Assume it is a real string, dear reader, not just a photograph – we’re dealing with an Internet blog; if we were in a classroom setting I would present students with a real physical string.)

There are a number of instructive similarities between these two strings. Let’s examine them in detail.

Information about a String

It is possible for us to examine a string and learn something about the string.

String of Letters

Regarding String 1, we can quickly determine some characteristics of the string and can make the following affirmative statements:

1. This string consists of forty-two English letters.

2. This string has only lower-case characters.

3. This string has no spaces, numerals, or special characters.

It is possible for us to determine the foregoing based on our understanding of English characters, and given certain parameters (for example, I have provided as a given in this case that we are dealing with English characters, rather than random squiggles on the page, etc.). It is also possible to generate these affirmative statements about the string because the creator of the statements has a framework within which to create such statements to convey those three pieces of information, namely, the English language.
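As a small illustration, the three statements above can be checked mechanically. The sketch below is only one way to do so; the helper name `describe` and the choice of the 26-letter lowercase English alphabet as the reference set are assumptions made for this example.

```python
import string

STRING_1 = "kqsifpsbeiiserglabetpoebarrspmsnagraytetfs"

def describe(s):
    """Hypothetical helper: report the three observations about a string."""
    lowercase_letters = set(string.ascii_lowercase)
    return {
        # Statement 1: how many characters the string has
        "length": len(s),
        # Statement 2: every character is a lower-case English letter
        "only_lowercase_letters": all(ch in lowercase_letters for ch in s),
        # Statement 3: any space, numeral, or special character present?
        "has_space_digit_or_special": any(
            ch not in string.ascii_letters for ch in s
        ),
    }

print(describe(STRING_1))
```

Each result depends on a convention supplied from outside the string: the alphabet we compare against and the categories we chose to check.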

In addition to the above quickly ascertainable characteristics of the string, we could identify others if we were to try.

For example, let’s assume that some enterprising fellow (we’ll call him Shannon) were to come up with an algorithm that allowed us to determine how much information could – in theory – be contained in a string with those three characteristics: a string with forty-two English letters, using only lower-case characters, and with no spaces, numerals, or special characters. Let’s even assume that Shannon’s algorithm required some additional given parameters in this particular case, such as the assumption that all possible letters occurred at least once, that all letters could occur with the relative frequency at which they show up in the string, and so forth. Shannon has also, purely as a convenience for discussing the results of his algorithm, given us a name for the unit of measurement resulting from his algorithm: the “bit.”

In sum, what Shannon has come up with is a series of parameters, a system for identifying and analyzing a particular characteristic of the string of letters. And within the confines of that system – given the parameters of that system and the particular algorithm put forward by Shannon – we can now plug in our string and create another affirmative statement about that characteristic of the string. In this case, we plug in the string, and Shannon’s algorithm spits out “one hundred sixty-eight bits.” As a result, based on Shannon’s system and based on our ability in the English language to describe characteristics of things, we can now write a fourth affirmative statement about how many bits are required to convey the string:

4. This string requires one hundred sixty-eight bits.
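One way to arrive at a figure of 168 bits is sketched below. It assumes that each letter’s probability is taken from its relative frequency in the string itself, and (this second part is an assumption of the sketch, not something spelled out above) that the per-letter entropy is rounded up to a whole number of bits before being multiplied by the length. The function names are hypothetical.

```python
import math
from collections import Counter

STRING_1 = "kqsifpsbeiiserglabetpoebarrspmsnagraytetfs"

def entropy_per_symbol(s):
    """Shannon entropy H = -sum(p * log2(p)), with p taken from
    the relative frequency of each letter in s."""
    n = len(s)
    return -sum((c / n) * math.log2(c / n) for c in Counter(s).values())

def bits_required(s):
    """Assumed convention: whole bits per symbol (entropy rounded up)
    multiplied by the number of symbols."""
    return math.ceil(entropy_per_symbol(s)) * len(s)

h = entropy_per_symbol(STRING_1)
print(f"{h:.2f} bits per letter")       # about 3.82
print(bits_required(STRING_1), "bits")  # 4 bits per letter x 42 letters = 168
```

Without the rounding step the same frequencies would give roughly 160 bits, which underlines that the number depends on the parameters we agree upon before we measure.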

Please note that the above 4 affirmative pieces of information about the string are by no means comprehensive. We could think of another half dozen characteristics of the string without trying too hard. For example, we could measure the string by looking at the number of characters of a certain height, or those that use only straight lines, or those that have an enclosed circle, or those that use a certain amount of ink, and on and on. This is not an idle example. Font makers right up to the present day still take into consideration these kinds of characteristics when designing fonts, and, indeed, publishers can be notoriously picky about which font they publish in. As long as we lay out with reasonable detail the particular parameters of our analysis and agree upon how we are going to measure them, then we can plug in our string, generate a numerical answer and generate additional affirmative statements about the string in question. And – note this well – it is every bit as meaningful to say “the string requires X amount of ink” as to say “the string requires X bits.”

Now, let us take a deep breath and press on by looking back to our statement #4 about the number of bits. Where did that statement #4 come from? Was it contained in or represented by the string? No. It is a statement that was (i) generated by an intelligent agent, (ii) using rules of the English language, and (iii) based on an agreed-upon measurement system created by and adopted by intelligent agents. Statement #4, “This string requires one hundred sixty-eight bits,” is information – information in the full, complete, meaningful, true sense of the word. But, and this is key, it was not contained in the artifact itself; rather, it was created by an intelligent agent, using the tools of analysis and discovery, and articulated using a system of encoded communication.

Much of the confusion in discussions of “Shannon information” arises because people reflexively assume that, by running a string through the Shannon algorithm and then creating (by use of that algorithm and agreed-upon communication conventions) an affirmative, meaningful, information-bearing statement about the string, we have somehow measured meaningful information contained in the string. We haven’t.

Some might argue that while this is all well and good, we should still say that the string contains “Shannon information” because, after all, that is the wording of convention. Fair enough. As I said at the outset, we can hardly hope to correct an unfortunate use of terminology and decades of linguistic inertia. But we need to be very clear that the so-called Shannon “information” is in fact not contained in the string. The only meaning we have anywhere here is the meaning Shannon has attached to the description of one particular characteristic of the string. It is meaning, in other words, created by an intelligent agent upon observation of the string and using the conventions of communication, not in the string itself.

Lest anyone remain unconvinced, let us hear from Shannon himself:

“The fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected at another point. Frequently the messages have meaning; that is they refer to or are correlated according to some system with certain physical or conceptual entities. These semantic aspects of communication are irrelevant to the engineering problem.” (bold added)*

Furthermore, in contrast to the string we have been reviewing, let us look at the following string:

“thisstringrequiresonehundredsixtyeightbits”

What makes this string different from our first string? If we plug this new string into the Shannon algorithm, we come back with a similar result: 168 bits. The difference is that in the first case we were simply ascertaining a characteristic of the string. In this new case the string itself contains or represents meaningful information.
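The comparison can be made concrete with a short sketch. It assumes that letter probabilities are taken from each string’s own letter frequencies and that the per-letter entropy is rounded up to whole bits; the helper name `shannon_bits` is hypothetical. The sketch also scrambles the meaningful string to show that a frequency-based measurement of this kind does not change when the letters are reordered.

```python
import math
import random
from collections import Counter

def shannon_bits(s):
    """Assumed convention: entropy per letter (from the string's own
    letter frequencies), rounded up to whole bits, times the length."""
    n = len(s)
    h = -sum((c / n) * math.log2(c / n) for c in Counter(s).values())
    return math.ceil(h) * n

random_string = "kqsifpsbeiiserglabetpoebarrspmsnagraytetfs"
meaningful = "thisstringrequiresonehundredsixtyeightbits"

# Scramble the meaningful string; the seed is fixed only for repeatability.
letters = list(meaningful)
random.Random(0).shuffle(letters)
scrambled = "".join(letters)

print(shannon_bits(random_string))  # 168
print(shannon_bits(meaningful))     # 168
print(shannon_bits(scrambled))      # 168
```

The measurement returns the same number whether or not the letters spell anything, which is the distinction at issue: the figure describes a characteristic of the string, not any meaning the string may carry.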

String of Cotton

Now let us consider String 2. Again, we can create affirmative statements about this string, such as:

1. This string consists of multiple smaller threads.

2. This string is white.

3. This string is made of cotton.

Now, critically, we can – just as we did with our string of letters – come up with other characteristics. Let’s suppose, for example, that some enterprising individual decides that it might be useful to know how long the string is. So we come up with a system that uses some agreed-upon parameters and a named unit of measurement. Hypothetically, let’s call it, say, a “millimeter.” So now, based on that agreed-upon system we can plug in our string and come up with another affirmative statement:

4. This string is one hundred sixty-eight millimeters long.

This is a genuine piece of information – useful, informative, meaningful. And it was not contained in the string itself. Rather, it was information about the string, created by an intelligent agent, using tools of analysis and discovery, and articulated in an agreed-upon communications convention.

It would not make sense to say that String 2 contains “Length information.” Rather, I assign a length value to String 2 after I measure it with agreed-upon tools and an agreed-upon system of measurement. That length number is now a piece of information which I, as an intelligent being, have created and which can be encoded and transmitted just like any other piece of information and communicated to describe String 2.

After all, where does the concept of “millimeter” come from? How is it defined? How is it spelled? What meaning does it convey? The concept of “millimeter” was not learned by examining String 2; it was not inherent in String 2. Indeed, everything about this “millimeter” concept was created by intelligent beings, by agreement and convention, and by using rules of encoding and transmission. Again, nothing about “millimeter” was derived from, or has anything inherently to do with, String 2. Even the very number assigned to the “millimeter” measurement has meaning only because we have imposed it from the outside.

One might be tempted to protest: “But String 2 still has a length, we just need to measure it!”

Of course, if by having a “length” we simply mean that it occupies a region of space. Yes, it has a physical property that we define as length, which, when understood at its most basic, simply means that we are dealing with a three-dimensional object existing in real space. That is, after all, what a physical object is. That is to say: the string exists. And that is about all we can say about the string unless and until we start to impose – from the outside – some system of measurement or comparison or evaluation. In other words, we can use information that we create to describe the object that exists before us.

Systems of Measurement

There is no special magic or meaning or anything inherently more substantive in the Shannon measurement than in any other system of measurement. It is no more substantive to say that String 1 contains “Shannon information” than to say String 2 contains “Length information.” This is true notwithstanding the unfortunate popularity of the former term and the blessed absence in our language of the latter term.

This may seem rather esoteric, but it is a critical point and one that, once grasped, will help us avoid no small number of rhetorical traps, semantic games, and logical missteps:

Information can be created by an intelligent being about an object or to describe an object; but information is not inherently contained in an object by its mere existence.

We need to avoid the intellectual trap of thinking that, just because a particular measurement system calls its units “bits” and has unfortunately come to be known in common parlance as Shannon “information,” such a system is any more substantive or meaningful or informative, or inherently contains more “information,” than a measurement system that uses units like “points” or “gallons” or “kilograms” or “millimeters.”

To be sure, if a particular measurement system gains traction amongst practitioners as an agreed-upon system, it can then prove useful to help us describe and compare and contrast objects. Indeed, the Shannon metric has proven very useful in the communications industry; so too, the particular size and shape and style of the characters in String 1 (i.e., the “font”) is very useful in the publishing industry.

The Bottom String Line

Intelligent beings have the known ability to generate new information by using tools of discovery and analysis, with the results being contained in or represented in a code, language, or other form of communication system. That information arises as a result of, and upon application of, those tools of discovery and can then be encoded. And that information is information in the straightforward, ordinary understanding of the word: that which informs and is meaningful. In contrast, an object by its mere existence, whether a string of letters or a string of cotton, does not contain information in and of itself.

So if we say that Shannon information is “meaningful,” what we are really saying is that the statement we made to describe a particular characteristic of the string – as intelligent agents, based on Shannon’s system and using our English language conventions – is meaningful. That is of course true, not because the string somehow contains that information, but because the statement we created is itself information – information created by us as intelligent agents and encoded and conveyed in the English language.

This is just as Shannon himself affirmed. Namely, the stuff in String 1 has, in and of itself, no inherent meaning. And the stuff that has the meaning (the statement we created about the number of bits) is meaningful precisely because it informs, because it contains information, encoded in the conventions of the English language, and precisely because it is not just “Shannon information.”

—–

* Remember that Shannon’s primary concern was communication or, more narrowly, the technology of communication systems. The act of communication and the practical requirements for communication are usually related to, but are not the same thing as, information. Remembering this can help keep things straight.