Dr Liddle, commenting on the Cambrian Robot thread (itself a takeoff on the Pre-Cambrian Rabbit thread), observes at comment no. 5:

the ribosome is part of a completely self-replicating entity.

The others aren’t.

The ribosome didn’t “make itself” alone but the organism that it is a component of was “made” by another almost identical organism, which copied itself in order to produce the one containing the ribosome in question.

It is probably true that the only non-self-replicating machines are those designed by the intelligent designers we call people.

But self-replication with modification, I would argue, is the alternate explanation for what would otherwise look like it was designed by an intelligent agent.

I don’t expect you to agree, but it seems to me it’s a point that at least needs to be considered . . .

The matter is important enough to be promoted to a full post — UD discussion threads can become very important. So, let us now proceed . . .

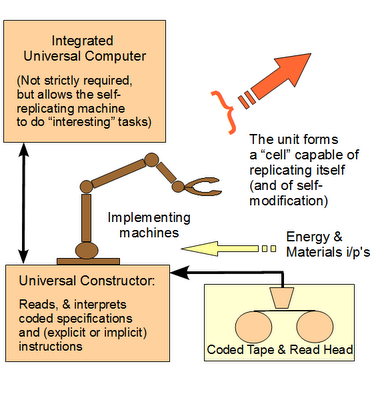

In fact, the living cell implements a kinematic von Neumann Self Replicator [vNSR], which is integrated into a metabolising automaton:

Fig. A: A kinematic vNSR, generally following Tempesti’s presentation (Source: KF/IOSE)

Why is that important?

First, such a vNSR is a code-based, algorithmic, irreducibly complex object that requires:

That is, we see here an irreducibly complex set of core components that must all be present in a properly organised fashion for a successful self-replicating machine to exist. [Take just one core part out, and self-replicating functionality ceases: the self-replicating machine is irreducibly complex (IC).]

This irreducible complexity is compounded by the requirement (i) for codes, requiring organised symbols and rules to specify both steps to take and formats for storing information, and (v) for appropriate material resources and energy sources.

Immediately, we are looking at islands of organised function for both the machinery and the information in the wider sea of possible (but mostly non-functional) configurations.

In short, outside such functionally specific — thus, isolated — information-rich hot (or, “target”) zones, want of correct components and/or of proper organisation and/or co-ordination will block function from emerging or being sustained across time from generation to generation. So, once the set of possible configurations is large enough and the islands of function are credibly sufficiently specific/isolated, it is unreasonable to expect such function to arise from chance, or from chance circumstances driving blind natural forces under the known laws of nature.

Q: Per our actual direct observation and experience, what is the best explanation for algorithms, codes and associated irreducibly complex clusters of implementing machines?

A: Design as causal process, and thus such entities serve as signs pointing to design and behind design, presumably one or more designers. Indeed, presence of such entities would normally count in our minds as reliable signs of design. And thence, as evidence pointing to designers, the known cause of design.

So, why is this case seen as so different?

Precisely because these cases are in self-replicating entities. That is, the focus is on the chain of descent from one generation to the next, and it is suggested that sufficient variation can be introduced by happenstance, and captured across time by reproductive advantages, for one or a few original ancestral cells to give rise to the biodiversity we have seen ever since the Cambrian period.

But is that really so, especially once we see the scope of involved information in the context of the available resources of the atoms in our solar system or the observed cosmos — the cosmos that can influence us in a world where the speed of light seems to be a speed limit?

William Paley, in Ch II of his Natural Theology (1806), provides a first clue. Of course, some will immediately object to the context, a work of theology. But in fact good science has been done by theologians, and good science can appear in works of theology [just as some very important economics first appeared in what have been called tracts for the times, e.g. Keynes’ General Theory], so let us look at the matter on the merits:

So, the proper foci are (i) the issue of self-replication as an ADDITIONAL capacity of a separately functional, organised complex entity, and (ii) the difference between generations 2, 3, 4 . . . and generation no 1. That is, first: once there is a sub-system with the stored information, the additional complex organisation and the step-by-step procedures to replicate an existing functional, complex, organised entity, then this is an additional case of FSCO/I to be explained.

Second, and hardly less important, the key issue is not the progress from one generation of self-replication to the next, but the origin of such an order of system: “the [original] cause of the relation of its parts to their use.”

Third, we do need to establish that cumulative minor variations, and selection on functional and/or reproductive advantages, would surmount the barrier of information generation without intelligent direction, especially where codes and algorithms are involved.

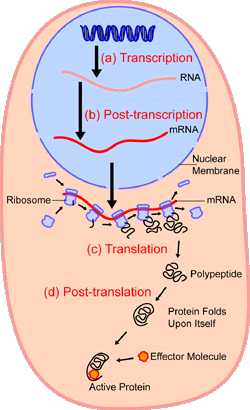

In the case of the Ribosome in the living cell these three levels interact:

Fig. B: Protein synthesis and the role of the ribosome as “protein amino acid chain factory” (Source: Wiki, GNU. See also a medically oriented survey here.)

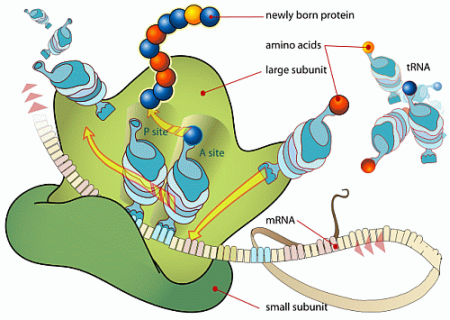

Fig. C: The Ribosome in action, as a digital code-controlled “protein amino acid chain factory.” Notice the role of the tRNA as an amino acid [AA] taxi and as a nano-scale position-arm device with a tool-tip, i.e. a robot-arm. Also, notice the role of mRNA as an incrementally advanced digitally coded step by step assembly control tape. (Source: Wiki, public domain.)

But — as Dr Liddle suggested — isn’t this just a matter of templates controlled by the chemistry, much as the pebbles on Chesil beach, UK, grade based on whether a new pebble will fit the gaps in the existing pile of pebbles? And, what is a “code” anyway?

Let us begin from what a code is, courtesy Collins English Dictionary:

code, n

1. (Electronics & Computer Science / Communications & Information) a system of letters or symbols, and rules for their association by means of which information can be represented or communicated for reasons of secrecy, brevity, etc.: binary code, Morse code. See also genetic code

2. (Electronics & Computer Science / Communications & Information) a message in code

3. (Electronics & Computer Science / Communications & Information) a symbol used in a code

4. a conventionalized set of principles, rules, or expectations: a code of behaviour

5. (Electronics & Computer Science / Communications & Information) a system of letters or digits used for identification or selection purposes . . .

[Collins English Dictionary – Complete and Unabridged © HarperCollins Publishers 1991, 1994, 1998, 2000, 2003]

Immediately, we see that codes are complex, functionally organised information-related constructs, designed to store or convey (and occasionally to conceal) information. They are classic artifacts of design, and given the conventional assignment of symbols and rules for their association to convey meaning by reference to something other than themselves, we see why: codes embed and express intent or purpose and what philosophers call intentionality.

But at the same time, codes are highly contingent arrangements of elements, so could they conceivably be caused by chance and/or natural affinities of things we find in nature? That is, couldn’t rocks falling off the cliff at the cliff end of Chesil beach spontaneously arrange themselves into a pile saying: “Welcome to Chesil beach?”

This brings up the issues of depth of isolation of islands of function in a space of possible configurations, and it brings up the issue of meaningfulness as a component of function. Also lurking is the question of what we deem logically possible as a prior causal state of affairs.

Of course, any particular arrangement of pebbles is possible, as pebbles are highly contingent. But if we were to see beach pebbles at Chesil (or at the fairly similar Palisadoes long beach in Jamaica, which leads out to Port Royal and forms the protecting arm of the port of Kingston) spelling out the message just given or a similar one, we would suspect design tracing to an intelligence as the most credible cause. This is because that sort of meaningful, functional configuration is so deeply isolated in the space of possibilities for tumbling pebbles that we instinctively distinguish mere logical possibility from what is practically observable on chance plus blind natural mechanisms, and we infer instead an act of art or design that points to an artist or designer with the requisite capacity.

But that is in the everyday world of observables, where we know that such artists are real. In the deep past of origins, some would argue, we have no independent means of knowing that such designers were possible, and it is better to infer to natural factors however improbable.

But in this lurks a cluster of errors. First, ALL of the deep past is unobservable, so we are inferring on best explanation from currently observed patterns to a reasonable model of the past.

Second, on infinite monkeys analysis grounds closely related to the foundations of the second law of thermodynamics, we know that such configurations are so exceedingly isolated in vast sets of possibilities that hitting on one by chance is utterly implausible on the gamut of the observed cosmos. Such a message is not sufficiently likely to be credibly observable by undirected chance and necessity. And from experience we know that the sets of symbols and rules making up a code are well beyond the complexity involved in 125 bytes (1,000 bits) of information. That is, 2^1000, or about 1.07 * 10^301, possibilities: more than ten times the square of the 10^150 or so possibilities for Planck time quantum states of the 10^80 atoms of our observed universe.
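The arithmetic behind the 125-byte figure is easy to check with exact integers. The sketch below is only a check on the numbers quoted above; the variable names and the helper `sci` are mine, and the 10^150 figure is simply taken as given from the text rather than derived.

```python
# Exact integer check of the 125-byte (1,000-bit) figures quoted in the text.
BITS = 125 * 8                   # 125 bytes = 1,000 bits
configs = 2 ** BITS              # number of distinct 1,000-bit strings
states = 10 ** 150               # round figure taken from the text, not derived here
ratio = configs // states ** 2   # integer quotient of 2^1000 by (10^150)^2


def sci(n: int, digits: int = 3) -> str:
    """Format a large positive integer in scientific notation, truncating (not rounding)."""
    s = str(n)
    return f"{s[0]}.{s[1:digits]} * 10^{len(s) - 1}"


print(sci(configs))                  # 1.07 * 10^301
print(ratio)                         # 10
print(configs > 10 * states ** 2)    # True
```

Python integers are arbitrary precision, so no floating-point rounding is involved: 2^1000 has 302 digits, and it exceeds ten times 10^300.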

Third, there is a lurking begging of the question: in effect the assertion of so-called methodological naturalism would here block the door to the possibility of evidence pointing to the logically possible state of affairs that life is the product of design. We can see this from a now notorious declaration by Lewontin:

To Sagan, as to all but a few other scientists, it is self-evident that the practices of science provide the surest method of putting us in contact with physical reality, and that, in contrast, the demon-haunted world rests on a set of beliefs and behaviors that fail every reasonable test . . . .

[From: “Billions and Billions of Demons,” NYRB, January 9, 1997. Bold emphasis added.]

That looks uncommonly like closed-minded question-begging, and — as Johnson pointed out — falls of its own weight, once it is squarely faced. We can safely lay this to one side.

But also, there is a chicken-egg problem. One best pointed out by clipping from a bit further along in the thread at 30 (which clips from Dr Liddle at 26):

the common denominator in the “robots” itemized in the OP, it seems to me, is that they are products of decision-trees in which successful prototypes are repeated,

a: as created by intelligent designers, in a context of coded programs used in their operation

usually with variation, and less successful prototypes are abandoned.

b: Again, by intelligently directed choice

In two of the cases, the process is implemented by intelligent human beings, who do the replicating externally, as it were (usually), set the criteria for success/failure, and only implement variants that have a pre-sifted high probability of success.

c: In short, you acknowledge the point, i.e. that it is intelligence that is seen empirically as capable of developing a robot (and presumably the arms and legs of Fig A are similar to the position arm device in B).

In the third case (the ribosome) I suggest that the replicating is intrinsic to the “robot” itself,

d: The problem here is the irreducible complexity in getting to function, as outlined again just above.

in that it is a component within a larger self-replicating “robot”;

e: It is a part of both the self-replicating facility and the metabolic mechanism, and uses a coded position-arm entity, the tRNA, that key-lock fits the mRNA tape, which is advanced step by step in protein assembly; it has as well a starting and a halting process.

the criteria for success/failure is simply whether the thing succeeds in replicating,

f: The entity has to succeed at making proteins, which are in turn essential to its operations, i.e. this is chicken-egg irreducible complexity. I gather something like up to 75 helper molecules are involved.

and the variants are not pre-sifted so that even variants with very little chance of success are produced, and replicate if they can.

g: If the ribosome does not work right the first time, there can be no living cell that can either metabolise or self-replicate.

h: For that to happen, the ribosome has to have functioning examples of the very item it produces — code based, functioning proteins.

i: In turn, the DNA codes for the proteins have to be right, and have to embrace the full required set, another case of irreducible complexity.

j: In short, absent the full core self replicating and metabolic system right from get-go, the system will not work.

But in both scenarios, the result is an increasingly sophisticated, responsive, and effective “robot”.

k: This sort of integrated irreducible complexity embedding massive FSCO/I has only one observed solution and cause: intelligent design.

l: In addition, the degree of complexity involved goes well beyond the search resources of the observed cosmos to credibly come up with a spontaneous initial working config.

m: So, we see here a critical issue for the existence of viable cell-based life, and it points to the absence of a root for the Darwinian-style tree of life.

So, once we rule out a priori materialism, and allow evidence that is an empirically reliable sign of intelligence to speak for itself, design becomes a very plausible explanation for the origin of life.

What about the origin of major body plans?

After all, isn’t this “just” a matter of descent with gradual modification?

The problem here is that this in effect assumes that all of life constitutes a vast connected continent of functional forms joined by fine incremental gradations. What is the directly observed evidence for that? NIL: the fossils, the only direct evidence of former life forms, are notoriously dominated by suddenness of appearance, stasis of form, and disappearance or continuation into the modern era. That’s why Gould et al. developed punctuated equilibria.

But the problem is deeper than that: we are dealing with code based, algorithmic entities.

Algorithms and codes are riddled with irreducible complexities and so don’t smoothly grade from, say, a Hello World to a full-bore operating system. Nor can we plausibly plead that more sophisticated code modules naturally emerge from the Hello World module through chance variation and trial-and-error discovery of novel function, so that the libraries can then be somehow linked together to form more sophisticated algorithms. Long before we got to that stage, the 125-byte threshold would have repeatedly been passed.

In short, origin of major new body plans by embryogenesis is not plausibly explained on chance plus necessity, i.e. we do not have a viable chance plus necessity path to novel body plans.

To my mind, this makes design a very plausible — and arguably the best — causal explanation for the origin of both life and novel body plans.

So, what do you think of this? Why? END