This is a post about two scientists, united in their passion for one crazy idea. Brain scientist and serial entrepreneur Jeff Stibel thinks that the Internet is showing signs of intelligence and may already be conscious, according to a recent BBC report. So does neuroscientist Christof Koch (pictured above). Koch, who has done a lot of pioneering work on the neural basis of consciousness, was the Lois and Victor Troendle Professor of Cognitive and Behavioral Biology at the California Institute of Technology from 1986 until September 2012, when he took up a new job as Chief Scientific Officer of the Allen Institute for Brain Science in Seattle.

Stibel, who is nothing if not passionate about his cause, refers to the Internet as a “global brain” and claims that it is starting to develop “real intelligence, not artificial intelligence.” Stibel wants to help build this global intelligence: that is what drives him. He even describes the Internet as a new life-form, which may one day evolve on its own. Readers can view him talking about his project here.

Koch also waxes poetic on the intelligence of the Internet. Here is what he said in a recent interview with Atlantic journalist Steve Paulson, in a report titled, The Nature of Consciousness: How the Internet Could Learn to Feel (August 22, 2012):

Are you saying the Internet could become conscious, or maybe already is conscious?

Koch: That’s possible. It’s a working hypothesis that comes out of artificial intelligence. It doesn’t matter so much that you’re made out of neurons and bones and muscles. Obviously, if we lose neurons in a stroke or in a degenerative disease like Alzheimer’s, we lose consciousness. But in principle, what matters for consciousness is the fact that you have these incredibly complicated little machines, these little switching devices called nerve cells and synapses, and they’re wired together in amazingly complicated ways. The Internet now already has a couple of billion nodes. Each node is a computer. Each one of these computers contains a couple of billion transistors, so it is in principle possible that the complexity of the Internet is such that it feels like something to be conscious. I mean, that’s what it would be if the Internet as a whole has consciousness. Depending on the exact state of the transistors in the Internet, it might feel sad one day and happy another day, or whatever the equivalent is in Internet space.

You’re serious about using these words? The Internet could feel sad or happy?

Koch: What I’m serious about is that the Internet, in principle, could have conscious states. Now, do these conscious states express happiness? Do they express pain? Pleasure? Anger? Red? Blue? That really depends on the exact kind of relationship between the transistors, the nodes, the computers. It’s more difficult to ascertain what exactly it feels. But there’s no question that in principle it could feel something.

In the course of the interview, Koch also expressed his personal sympathy with the idea that consciousness is a fundamental feature of the universe, like space, time, matter and energy. Koch is also open to the philosophy of panpsychism, according to which all matter has some consciousness associated with it, although the degree of consciousness varies enormously, depending on the complexity of the system. He acknowledges, however, that most neuroscientists don’t share his views on such matters.

After taking a pot shot at Cartesian dualism (which was espoused by the late neuroscientist and Nobel prizewinner Sir John Eccles), Koch argues that consciousness boils down to connectivity:

Unless you believe in some magic substance attached to our brain that exudes consciousness, which certainly no scientist believes, then what matters is not the stuff the brain is made of, but the relationship of that stuff to each other. It’s the fact that you have these neurons and they interact in very complicated ways. In principle, if you could replicate that interaction, let’s say in silicon on a computer, you would get the same phenomena, including consciousness.

So how does the Internet stack up against the human brain? Slate reporter Dan Falk, who interviewed Koch by phone recently, quotes Koch as calculating that the Internet already has 1000 times as many transistors as the human brain has synapses, in a report titled, Could the Internet Ever “Wake Up”? And would that be such a bad thing? (September 20, 2012):

In his book Consciousness: Confessions of a Romantic Reductionist, published earlier this year, he makes a rough calculation: Take the number of computers on the planet — several billion — and multiply by the number of transistors in each machine — hundreds of millions — and you get about a billion billion, written more elegantly as 10^18. That’s a thousand times larger than the number of synapses in the human brain (about 10^15)…

In a phone interview, Koch noted that the kinds of connections that wire together the Internet — its “architecture” — are very different from the synaptic connections in our brains, “but certainly by any measure it’s a very, very complex system. Could it be conscious? In principle, yes it can.”

…We can’t pin down the date when the Internet surpasses our brains in complexity, he says, “but clearly it is going to happen at some point.”

A neuron is not a transistor

Diagram of a typical myelinated vertebrate motoneuron. Image courtesy of LadyofHats and Wikipedia.

There’s an implied assumption in the foregoing discussion, that one neuron equals one transistor. I don’t think so. One blogger, who calls himself The Buckeye Monkey, puts it this way in a thoughtful article:

A transistor is not a neuron, not even close. A transistor is just a simple electronic switch…

See how it only has 3 contacts? The one on the left is the input, the one in the middle is the control, and the one on the right is the output. By applying a small voltage on the control (middle) you turn on the switch and allow current to flow from the input to the output…

Making out like 1 transistor = 1 neuron is beyond nonsense, it’s asinine…

The fact is our brains are not simply evolution’s version of electronic computers. Our brains are electro-chemical computing devices. Each neuron can have 1000 connections to other neurons, and the chemical soup of hormones sloshing around in our skulls can have drastic effects on how they processes information. Every neuron receives a vast array of input signals from other neurons and turn that into their own complicated firing pattern that is not fully understood. They are not simple on/off switches. They are a hell of a lot more sophisticated then that.

Flaws in the calculations

With the greatest respect to Professor Koch, I have to say that his calculations are badly wrong, too. I would refer him to an article by Professor David Deamer, of the Department of Biomolecular Engineering, University of California, entitled Consciousness and Intelligence in Mammals: Complexity thresholds, in the Journal of Cosmology, 2011, Vol. 14. The upshot of Deamer’s calculation is that even if you think that consciousness resides in matter (as Stibel, Koch and Deamer all do), then the Internet still falls a long way short of the human brain, in terms of its complexity. In fact, it falls 40 orders of magnitude short.

In the article, Deamer proposes a way to estimate complexity in the mammalian brain using the number of cortical neurons, their synaptic connections and the encephalization quotient. His calculation assumes that the following three (materialistic) postulates hold:

The first postulate is that consciousness will ultimately be understood in terms of ordinary chemical and physical laws…

The second postulate is that consciousness is related to the evolution of anatomical complexity in the nervous system…. The second postulate suggests that consciousness can emerge only when a certain level of anatomical complexity has evolved in the brain that is directly related to the number of neurons, the number of synaptic connections between neurons, and the anatomical organization of the brain…

This brings us to the third postulate, that consciousness, intelligence, self-awareness and awareness are graded, and have a threshold that is related to the complexity of nervous systems. I will now propose a quantitative formula that gives a rough estimate of the complexity of nervous systems. Only two variables are required: the number of units in a nervous system, and the number of connections (interactions) each unit has with other units in the system. The formula is simple: C(complexity)=log(N)*log(Z) where N is the number of units and Z is the average number of synaptic inputs to a single neuron.

Deamer gets his figures for the human brain (and other animal brains) from Roth and Dicke’s 2005 article, Evolution of the brain and intelligence (Trends Cognitive Sciences 9: 250-257). The human brain contains 11,500,000,000 cortical neurons. That’s N in his formula. Log(N) is about 10.1. Z, the number of synapses per neuron, is astonishingly high: “Each human cortical neuron has approximately 30,000 synapses per cell.” Thus log(Z) is about 4.5. According to Deamer’s complexity formula, the complexity of the human brain is 10.1 x 4.5, or 45.5.
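For readers who want to check the arithmetic, here is a minimal Python sketch of Deamer’s formula as quoted above. The function name `complexity` is just an illustrative label, not Deamer’s, and the logarithms are assumed to be base 10. With the rounded factors 10.1 and 4.5 the product is 45.45, which rounds to the 45.5 used in this post; with unrounded logarithms it comes out nearer 45.0.

```python
import math

def complexity(n_units: float, inputs_per_unit: float) -> float:
    """C = log10(N) * log10(Z), per the formula quoted from Deamer."""
    return math.log10(n_units) * math.log10(inputs_per_unit)

# Figures quoted in the text for the human brain.
N_HUMAN = 11_500_000_000   # cortical neurons
Z_HUMAN = 30_000           # synapses per cortical neuron

human_c = complexity(N_HUMAN, Z_HUMAN)
rounded_c = 10.1 * 4.5     # product of the rounded factors used in the text

print(f"unrounded logs:  {human_c:.2f}")
print(f"rounded factors: {rounded_c:.2f}")
```

The small difference between the two results comes only from rounding the logarithms before multiplying.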

What about other animals’ brains? How complex are they?

A dog looking at himself in the mirror. Like most animals, dogs show no sign of self-recognition when looking at themselves in the mirror. Image courtesy of Georgia Pinaud and Wikipedia.

How do non-human animals compare with us? The raw figures are as follows: elephant 45, chimpanzee 44.1, dolphin 43.6, gorilla 43.2, horse 39.1, dog 37.8, rhesus (monkey) 39.1, cat 32.7, opossum 31.8, rat 31, mouse 23.4. Deamer then makes an adjustment for these animals, based on their body sizes and encephalization quotients: “The complexity equation then becomes C=log(N*EQa/EQh)*log(Z), where EQa is the animal EQ and EQh is the human EQ, taken to be 7.6.”

The normalized complexity figures are now as follows: humans 45.5, dolphins 43.2, chimpanzees 41.8, elephant 41.8, gorilla 40.0, rhesus (monkey) 36.5, horse 34.8, dog 34.4, cat 32.7, rat 25.4, opossum 24.9, mouse 23.2.
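The EQ adjustment quoted above can be sketched in the same way. This is only an illustration of the adjusted formula: the function name is hypothetical, and the "half the human EQ" animal is an invented example, not a value from Deamer’s tables (the neuron and synapse counts for the individual species are not reproduced in this post).

```python
import math

EQ_HUMAN = 7.6  # human encephalization quotient, as quoted from Deamer

def normalized_complexity(n_units, inputs_per_unit, eq_animal, eq_human=EQ_HUMAN):
    """C = log10(N * EQa / EQh) * log10(Z), per the adjusted formula."""
    return math.log10(n_units * eq_animal / eq_human) * math.log10(inputs_per_unit)

# Sanity check: when EQa equals EQh, the adjustment vanishes and the
# result matches the unadjusted human figure.
human_c = normalized_complexity(11_500_000_000, 30_000, eq_animal=EQ_HUMAN)

# Hypothetical animal with the same N and Z but half the human EQ
# (illustrative values only, not taken from Deamer's data).
half_eq_c = normalized_complexity(11_500_000_000, 30_000, eq_animal=EQ_HUMAN / 2)

print(f"human:           {human_c:.2f}")
print(f"half-EQ example: {half_eq_c:.2f}")
```

Because the EQ ratio sits inside the first logarithm, halving the EQ lowers C by log(2) times log(Z), whatever the value of N.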

Commenting on the results, Deamer remarks:

If we asked a hundred thoughtful colleagues to rank this list of mammals according to their experience and observations, I predict that their lists, when averaged to reduce idiosyncratic choices, would closely reflect the calculated ranking. It is interesting that all six animals with normalized complexity values of 40 and above are self-aware according to the mirror test, the rhesus monkey is borderline at 36.5, while the animals with complexity values of 35 and below do not exhibit this behavior. This jump between C = 36.5 and 40 appears to reflect a threshold related to self-awareness.

Although mammals with normalized complexity values between 40 and 43.2 are self-aware and are perhaps conscious in a limited capacity, they do not exhibit what we recognize as human intelligence. It seems that a normalized complexity value of 45.5 is required for human consciousness and intelligence, that is, 10 – 20 billion neurons, each on average with 30,000 connections to other neurons, and an EQ of 7.6. Only the human brain has achieved this threshold.

Deamer concludes his article with a prediction:

…[B]ecause of the limitations of computer electronics, it will be virtually impossible to construct a conscious computer in the foreseeable future. Even though the number of transistors (N) in a microprocessor chip now approaches the number of neurons in a mammalian brain, each chip has a Z of 2, that is, its input-output response is directly connected to just two other transistors. This is in contrast to a mammalian neuron, in which function is modulated by thousands of synaptic inputs and output relayed to hundreds of other neurons. According to the quantitative formula described above, the complexity of the human nervous system is log(N)*log(Z)=45.5, while that of a microprocessor with 781 million transistors is 8.9*0.3=2.67, many orders of magnitude less… Interestingly, for the nematode the calculated complexity C=3.2, assuming an average of 20 synapses per neuron, so the functioning nervous system of this simple organism could very well be computationally modeled.

So there you have it. A microprocessor with around 1 billion transistors is in the same mental ballpark as … a worm. Rather an underwhelming result, don’t you think?

“What about the Internet as a whole?” you might ask. As we saw above, the number of transistors (N) in the entire Internet is 10^18, so log(N) is 18. log(Z) is log(2) or about 0.3, so C=(18*0.3)=5.4. That’s right: on Deamer’s scale, the complexity of the entire Internet is a miserable 5.4, or 40 orders of magnitude less than that of the human brain, which stands at 45.5.
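The two figures for silicon can be reproduced with a few lines of Python. This is a sketch under the assumptions stated in the text: Z is taken to be 2 for every transistor, N is 781 million for the microprocessor and 10^18 for the Internet, and logarithms are base 10. The helper name `complexity` is an illustrative label.

```python
import math

def complexity(n_units: float, inputs_per_unit: float) -> float:
    """C = log10(N) * log10(Z), per the formula quoted from Deamer."""
    return math.log10(n_units) * math.log10(inputs_per_unit)

Z_TRANSISTOR = 2  # Deamer's figure for transistor connectivity

chip_c = complexity(781e6, Z_TRANSISTOR)      # microprocessor from Deamer's example
internet_c = complexity(1e18, Z_TRANSISTOR)   # Koch's estimate of transistors online

print(f"microprocessor: {chip_c:.2f}")
print(f"Internet:       {internet_c:.2f}")
```

Note how little the extra nine orders of magnitude in N buy: with log(Z) stuck at about 0.3, C only rises from roughly 2.7 to roughly 5.4.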

Remember that Deamer’s formula is a logarithmic one, using logarithms to base 10. What that means is that the human brain is, in reality, 10,000,000,000,000,000,000,000,000,000,000,000,000,000 times more complex than the entire Internet! And that’s based on explicitly materialistic assumptions about consciousness.

I somehow don’t think we’re going to be seeing a conscious Internet for some time yet.

To be fair, Deamer does point out that “what the microprocessor lacks in connectivity can potentially be compensated in part by speed, which in the most powerful computers is measured in teraflops compared with the kilohertz activity of neurons.” For argument’s sake, I’m going to apply that figure to the Internet as a whole. 10^12 divided by 10^3 is 10^9, so let’s lop off nine zeroes. That still makes the human brain 10,000,000,000,000,000,000,000,000,000,000 or 10^31 times more complex than the entire Internet.
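The subtraction can be laid out explicitly. The sketch below takes as given the reading used in this post, namely that a difference in C can be treated as a count of orders of magnitude; that reading is an assumption of the argument here rather than part of Deamer’s formula. The variable names are illustrative.

```python
import math

BRAIN_C = 45.5      # human brain, from Deamer
INTERNET_C = 5.4    # whole Internet, as computed in this post

# Gap in C, read here as orders of magnitude (an assumption of this post).
gap_orders = round(BRAIN_C - INTERNET_C)

# Speed advantage of electronics: teraflops (1e12) versus kilohertz (1e3).
speed_orders = round(math.log10(1e12 / 1e3))

adjusted_gap = gap_orders - speed_orders

print(f"gap before speed adjustment: 10^{gap_orders}")
print(f"speed advantage:             10^{speed_orders}")
print(f"gap after speed adjustment:  10^{adjusted_gap}")
```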

Moore’s law: definitely no Internet consciousness before 2218, and probably never

Gordon Moore in 2006. Image courtesy of Steve Jurvetson and Wikipedia.

Moore’s law tells us that over the history of computing hardware, the number of transistors on integrated circuits doubles approximately every two years. How long will it take, at that rate, for the Internet to catch up with the human brain? Closing a gap of 31 orders of magnitude takes 31/log(2), or about 103, doublings. At one doubling every two years, that is about 206 years from now, or roughly the year 2218.
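The catch-up estimate is easy to check numerically. In the sketch below, dividing the gap (in orders of magnitude) by log(2) gives the number of doublings needed; turning doublings into years depends on the doubling period, so that is left as a parameter. The function names are illustrative, and the whole calculation assumes that complexity grows in step with transistor count.

```python
import math

def doublings_needed(orders_of_magnitude: float) -> float:
    """Number of doublings required to grow by 10**orders_of_magnitude."""
    return orders_of_magnitude / math.log10(2)

def years_needed(orders_of_magnitude: float, years_per_doubling: float) -> float:
    """Years required at a fixed doubling period."""
    return doublings_needed(orders_of_magnitude) * years_per_doubling

GAP = 31  # orders of magnitude, from the speed-adjusted comparison in this post

doublings = doublings_needed(GAP)
years_two = years_needed(GAP, years_per_doubling=2)   # Moore's law as stated
years_one = years_needed(GAP, years_per_doubling=1)   # a faster, hypothetical pace

print(f"doublings:                   {doublings:.0f}")
print(f"years (2 years per doubling): {years_two:.0f}")
print(f"years (1 year per doubling):  {years_one:.0f}")
```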

That’s assuming, of course, that Moore’s law continues to hold that long. Who says it won’t? Why, Moore himself! Here’s Wikipedia:

On 13 April 2005, Gordon Moore stated in an interview that the law cannot be sustained indefinitely: “It can’t continue forever. The nature of exponentials is that you push them out and eventually disaster happens.” He also noted that transistors would eventually reach the limits of miniaturization at atomic levels:

In terms of size [of transistors] you can see that we’re approaching the size of atoms which is a fundamental barrier, but it’ll be two or three generations before we get that far — but that’s as far out as we’ve ever been able to see. We have another 10 to 20 years before we reach a fundamental limit. By then they’ll be able to make bigger chips and have transistor budgets in the billions.

So it appears that even if we one day figured out how to build a conscious Internet, we’d never be able to afford to make one. I have to say I think that’s a rather fortunate thing. Although I don’t believe that the reflective consciousness which distinguishes human beings can be boiled down to neuronal connections (see my recent post, Is meaning located in the brain?), I nevertheless think that the primary consciousness which is found in most (and perhaps all) mammals and birds (and possibly also in cephalopods, such as octopuses) is the product of the interconnectivity of the neurons in their brains. An artificial entity which possessed all the world’s stored data and which had the same mental abilities as a dog or a dolphin could do quite a bit of mischief, if it had a mind to, and I think it’s dangerously naive to assume that such an entity would be benevolent. It’s far more likely that it would be amoral, spiteful or even insane (from loneliness). So I for one am somewhat relieved to discover that the Internet will never be out to get us.

Why the idea of a conscious Internet is biologically naive

Numerical calculations aside, Koch’s claim that the Internet could be conscious is biologically flawed, as was pointed out recently by Professor Daniel Dennett (who was also Jeff Stibel’s mentor) in the above-cited article (September 20, 2012) by Slate journalist Dan Falk:

“The connections in brains aren’t random; they are deeply organized to serve specific purposes,” Dennett says. “And human brains share further architectural features that distinguish them from, say, chimp brains, in spite of many deep similarities. What are the odds that a network, designed by processes serving entirely different purposes, would share enough of the architectural features to serve as any sort of conscious mind?”

Dennett also pointed out that while the Internet had a very high level of connectivity, the difference in architecture “makes it unlikely in the extreme that it would have any sort of consciousness.”

Physicist Sean Carroll, who was also interviewed for the article, was likewise dismissive of the idea that the Internet would ever be conscious, even as he acknowledged that there was nothing stopping the Internet from having the computational capacity of a conscious brain:

“Real brains have undergone millions of generations of natural selection to get where they are. I don’t see anything analogous that would be coaxing the Internet into consciousness…. I don’t think it’s at all likely.”

Even on Darwinian grounds, then, the idea of a conscious Internet appears to be badly flawed.

What are the neural requirements for consciousness?

Midline structures in the brainstem and thalamus necessary to regulate the level of brain arousal. Small, bilateral lesions in many of these nuclei cause a global loss of consciousness. Image (courtesy of Wikipedia) taken from Christof Koch (2004), The Quest for Consciousness: A Neurobiological Approach, Roberts, Denver, CO, with permission from the author under license.

While we’re about it, we might ask: what are the neural requirements for consciousness? Well, that depends on what kind of consciousness you’re talking about. Neuroscientists distinguish two kinds of consciousness – primary consciousness and higher-order consciousness – according to a widely cited paper by Dr. James Rose, entitled, The Neurobehavioral Nature of Fishes and the Question of Awareness and Pain (Reviews in Fisheries Science, 10(1): 1–38, 2002):

Although consciousness has multiple dimensions and diverse definitions, use of the term here refers to two principal manifestations of consciousness that exist in humans (Damasio, 1999; Edelman and Tononi, 2000; Macphail, 1998): (1) “primary consciousness” (also known as “core consciousness” or “feeling consciousness”) and (2) “higher-order consciousness” (also called “extended consciousness” or “self-awareness”). Primary consciousness refers to the moment-to-moment awareness of sensory experiences and some internal states, such as emotions. Higher-order consciousness includes awareness of one’s self as an entity that exists separately from other entities; it has an autobiographical dimension, including a memory of past life events; an awareness of facts, such as one’s language vocabulary; and a capacity for planning and anticipation of the future. Most discussions about the possible existence of conscious awareness in non-human mammals have been concerned with primary consciousness, although strongly divided opinions and debate exist regarding the presence of self-awareness in great apes (Macphail, 1998)…

Although consciousness has been notoriously difficult to define, it is quite possible to identify its presence or absence by objective indicators. This is particularly true for the indicators of consciousness assessed in clinical neurology, a point of special importance because clinical neurology has been a major source of information concerning the neural bases of consciousness. From the clinical perspective, primary consciousness is defined by: (1) sustained awareness of the environment in a way that is appropriate and meaningful, (2) ability to immediately follow commands to perform novel actions, and (3) exhibiting verbal or nonverbal communication indicating awareness of the ongoing interaction (Collins, 1997; Young et al., 1998). Thus, reflexive or other stereotyped responses to sensory stimuli are excluded by this definition. (PDF, p. 5)

According to a paper by A. K. Seth, B. J. Baars and D. B. Edelman, entitled, Criteria for consciousness in humans and other mammals (Consciousness and Cognition, 14 (2005), 119–139), primary consciousness has three distinguishing features at the neurological level:

Physiologically, three basic facts stand out about consciousness.

2.1. Irregular, low-amplitude brain activity

Hans Berger discovered in 1929 that waking consciousness is associated with low-level, irregular activity in the raw EEG, ranging from about 20–70 Hz (Berger, 1929). Conversely, a number of unconscious states—deep sleep, vegetative states after brain damage, anesthesia, and epileptic absence seizures—show a predominance of slow, high-amplitude, and more regular waves at less than 4 Hz (Baars, Ramsoy, & Laureys, 2003). Virtually all mammals studied thus far exhibit the range of neural activity patterns diagnostic of both conscious states…

2.2. Involvement of the thalamocortical system

In mammals, consciousness seems to be specifically associated with the thalamus and cortex (Baars, Banks, & Newman, 2003)… To a first approximation, the lower brainstem is involved in maintaining the state of consciousness, while the cortex (interacting with thalamus) sustains conscious contents. No other brain regions have been shown to possess these properties… Regions such as the hippocampal system and cerebellum can be damaged without a loss of consciousness per se.

2.3. Widespread brain activity

Recently, it has become apparent that conscious scenes are distinctively associated with widespread brain activation (Srinivasan, Russell, Edelman, & Tononi, 1999; Tononi, Srinivasan, Russell, & Edelman, 1998c). Perhaps two dozen experiments to date show that conscious sensory input evokes brain activity that spreads from sensory cortex to parietal, prefrontal, and medial-temporal regions; closely matched unconscious input activates mainly sensory areas locally (Dehaene et al., 2001). Similar findings show that novel tasks, which tend to be conscious and reportable, recruit widespread regions of cortex; these tasks become much more limited in cortical representation as they become routine, automatic and unconscious (Baars, 2002)…

Together, these first three properties indicate that consciousness involves widespread, relatively fast, low-amplitude interactions in the thalamocortical core of the brain, driven by current tasks and conditions. Unconscious states are markedly different and much less responsive to sensory input or endogenous activity.

The neural requirements for higher-order consciousness, which is only known to occur in human beings, are even more demanding, as Rose points out in his article, where he contrasts the neurological prerequisites for primary and higher-order consciousness:

Primary consciousness appears to depend greatly on the functional integrity of several cortical regions of the cerebral hemispheres especially the “association areas” of the frontal, temporal, and parietal lobes (Laureys et al., 1999, 2000a-c). Primary consciousness also requires the operation of subcortical support systems such as the brainstem reticular formation and the thalamus that enable a working condition of the cortex. However, in the absence of cortical operations, activity limited to these subcortical systems cannot generate consciousness (Kandel et al., 2000; Laureys et al., 1999, 2000a; Young et al., 1998). Wakefulness is not evidence of consciousness because it can exist in situations where consciousness is absent (Laureys et al., 2000a-c). Dysfunction of the more lateral or posterior cortical regions does not eliminate primary consciousness unless this dysfunction is very anatomically extensive (Young et al., 1998).

Higher-order consciousness depends on the concurrent presence of primary consciousness and its cortical substrate, but the additional complexities of this consciousness require functioning of additional cortical regions. For example, long-term, insightful planning of behavior requires broad regions of the “prefrontal” cortex. Likewise, awareness of one’s own bodily integrity requires activity of extensive regions of parietal lobe cortex (Kolb and Whishaw, 1995). In general, higher-order consciousness appears to depend on fairly broad integrity of the neocortex. Widespread degenerative changes in neocortex such as those accompanying Alzheimer’s disease, or multiple infarcts due to repeated strokes, can cause a loss of higher-order consciousness and result in dementia, while the basic functions of primary consciousness remain (Kandel et al., 2000; Kolb and Whishaw, 1995). (PDF, pp. 5-6)

No consciousness without a neocortex: another reason why the Internet will never wake up

Anatomical subregions of the cerebral cortex. The neocortex is the outer layer of the cerebral hemispheres. It is made up of six layers, labelled I to VI (with VI being the innermost and I being the outermost). The neocortex is part of the brain of mammals. A homologous structure also exists in birds. Image (courtesy of Wikipedia) taken from Patrick Hagmann et al. (2008) “Mapping the Structural Core of Human Cerebral Cortex,” PLoS Biology 6(7): e159. doi:10.1371/journal.pbio.0060159.

“That’s all very well,” some readers may object, “but who says that the Internet has to have a brain like ours? Why couldn’t it still be conscious, even with a very different architecture?” But that’s extremely unlikely, according to the above-cited article by Dr. James Rose:

It is a well-established principle in neuroscience that neural functions depend on specific neural structures. Furthermore, the form of those structures, to a great extent, dictates the properties of the functions they subserve. If the specific structures mediating human pain experience, or very similar structures, are not present in an organism’s brain, a reasonably close approximation of the pain experience can not be present. If some form of pain awareness were possible in the brain of a fish, which diverse evidence shows is highly improbable, its properties would necessarily be so different as to not be comparable to human-like experiences of pain and suffering…

… There may be other cortical regions and processes that are important for the totality of the pain experience. The most important point here is that the absolute dependence of pain experience on neocortical functions is now well established (Price, 1999; Treede et al., 1999).

It is also revealing to note that the cortical regions responsible for the experience of pain are essentially the same as the regions most vital for consciousness. Functional imaging studies of people in a persistent vegetative state due to massive cortical dysfunction (Laureys et al., 1999, 2000a,b) showed that unconsciousness resulted from a loss of brain activity in widespread cortical regions, but most specifically the frontal lobe, especially the cingulate gyrus, and parietal lobe cortex. (PDF, pp. 27, 18)

Thus according to Rose, a system whose structure differs radically from that of our neocortex would have a different function: whatever it would be for, it wouldn’t be consciousness. Therefore the odds that two structures as radically different as the human brain and the Internet would both be capable of supporting consciousness are very low indeed.

The human brain: skilfully engineered over a period of 3.5 million years

Many neo-Darwinists seem to be under the completely false impression that the human brain is merely a scaled-up, more powerful version of the chimpanzee brain. Nothing could be further from the truth: the two brains are radically different. In addition to the massive growth in the human brain over the last three million years, there have also been massive reorganizational changes in the human brain, which are not easy to account for on a Darwinian paradigm. The major reorganizational changes, which are listed in the paper, “Evolution of the Brain in Humans – Paleoneurology” by Ralph Holloway, Chet Sherwood, Patrick Hof and James Rilling (in The New Encyclopedia of Neuroscience, Springer, 2009, pp. 1326-1334), include the following:

(1) Reduction of primary visual striate cortex, area 17, and relative increase in posterior parietal cortex, between 2.0 and 3.5 million years ago;

(2) Reorganization of the frontal lobe (Third inferior frontal convolution, Broca’s area, widening prefrontal), between 1.8 and 2.0 million years ago;

(3) Cerebral asymmetries in the left occipital, right-frontal petalias, arising between 1.8 and 2.0 million years ago; and

(4) Refinements in cortical organization to a modern Homo pattern (1.5 million years ago to present).

Concerning the second reorganization, which affected Broca’s region, Holloway et al. write:

Certainly, the second reorganizational pattern, involving Broca’s region, cerebral asymmetries of a modern human type and perhaps prefrontal lobe enlargement, strongly suggests selection operating on a more cohesive and cooperative social behavioral repertoire, with primitive language a clear possibility. By Homo erectus times, ca. 1.6–1.7 MYA [million years ago – VJT], the body plan is essentially that of modern Homo sapiens – perhaps somewhat more lean-muscled bodies but statures and body weights within the modern human range. This finding indicates that relative brain size was not yet at the modern human peak and also indicates that not all of hominid brain evolution was a simple allometric exercise…

But that’s not all. A little over half a million years ago, the brains of our ancestors underwent a revolution which made it possible for them to make long-term commitments and control their impulses much better than ever before. Was this the crucial step that made us morally aware beings?

Homo heidelbergensis: one of us?

(Left: An artistic depiction of Heidelberg man, courtesy of Jose Luis Martinez Alvarez and Wikipedia.

Right: A hand-axe made by Heidelberg man 500,000 years ago in Boxgrove, England. Image courtesy of Midnightblueowl and Wikipedia.)

An additional reorganization of the brain occurred over 500,000 years ago, with the emergence of Homo heidelbergensis (Heidelberg man), according to Benoit Dubreuil’s article, Paleolithic public goods games: why human culture and cooperation did not evolve in one step (abstract only available online) (Biology and Philosophy, 2010, 25:53–73, DOI 10.1007/s10539-009-9177-7). Heidelberg man, who emerged in Africa and who also lived in Europe and Asia, was certainly a skilled hunter: a recent report by Alok Jha in The Guardian (15 November 2012) reveals that he was hunting animals with extra-lethal stone-tipped wooden spears, as early as half a million years ago. He also had a brain size of 1100-1400 cubic centimeters, which fell within the modern human range. Some authorities believe that Homo heidelbergensis possessed a primitive form of language. No forms of art or sophisticated artifacts by Heidelberg man other than stone tools have been discovered, although red ocher, a mineral that can be used to create a red pigment, has been found at a site in France.

In his article, Paleolithic public goods games: why human culture and cooperation did not evolve in one step, Benoit Dubreuil argues that around 500,000 years ago, big-game hunting (which is highly rewarding in terms of food, if successful, but is also very dangerous for the hunters, who might easily get gored by the animals they are trying to kill) and life-long monogamy (for the rearing of children whose prolonged infancy and whose large, energy-demanding brains would have made it impossible for their mothers to feed them alone, without a committed husband who would provide for the family) became entrenched features of human life. Dubreuil refers to these two activities as “cooperative feeding” and “cooperative breeding,” and describes them as “Paleolithic public good games” (PPGGs).

Dubreuil points out that for both of these activities, there would have been a strong temptation to defect when the going got tough: to run away from a big mammoth or walk out on one’s spouse and children. Preventing this anti-social behavior would have required extensive reorganization of the pre-frontal cortex (PFC), which plays a vital role in impulse control in human beings. Thus it is likely that Homo heidelbergensis had a well-developed brain with a large pre-frontal cortex that allowed him to keep his selfish impulses in check, for the good of his family and his tribe:

I present evidence that Homo heidelbergensis became increasingly able to secure contributions from others in two demanding Paleolithic public good games (PPGGs): cooperative feeding and cooperative breeding. I argue that the temptation to defect is high in these PPGGs and that the evolution of human cooperation in Homo heidelbergensis is best explained by the emergence of modern-like abilities for inhibitory control and goal maintenance. These executive functions are localized in the prefrontal cortex and allow humans to stick to social norms in the face of competing motivations. This scenario is consistent with data on brain evolution that indicate that the largest growth of the prefrontal cortex in human evolution occurred in Homo heidelbergensis and was followed by relative stasis in this part of the brain. One implication of this argument is that subsequent behavioral innovations, including the evolution of symbolism, art, and properly cumulative culture in modern Homo sapiens, are unlikely to be related to a reorganization of the prefrontal cortex, despite frequent claims to the contrary in the literature on the evolution of human culture and cognition. (Abstract)

…Homo heidelbergensis was able to stick to very demanding cooperative arrangements in connection with feeding and breeding. The fact that such behaviors appear in our evolution much before art or symbolism, I contend, implies that human culture and cooperation did not evolve in one step… (pp. 54-55)

We know that the prefrontal cortex plays a central role in executive functions. The dorsolateral cortex, more particularly, one of the latest maturing parts of the prefrontal cortex in children, is associated with inhibitory control and goal maintenance in all kind of social tasks (Sanfey et al. 2003; van ‘t Wout et al. 2006; Knoch et al. 2006). I explain in [the] Section “Brain evolution and the case for a change in the PFC” why I think that a change in this part of the brain can be parsimoniously linked to the behavioral evolution found in Homo heidelbergensis. (p. 57)

There are serious and well-known limitations to the reconstruction of brain evolution… Consequently, I will not claim that there has been a single reorganization of the PFC in the human lineage and that it happened in Homo heidelbergensis. I will rather contend that, if there is only one point in our lineage where such reorganization happened, it was in all likelihood there. (p. 64)

Holloway et al. concur with Dubreuil’s assessment that the pre-frontal cortex, which plays an important part in impulse control, has not changed much in the last half million years, for they acknowledge that it was the same in Neanderthal man as in Homo sapiens:

…The only difference between Neandertal and modern human endocasts is that the former are larger and more flattened. Most importantly, the Neandertal prefrontal lobe does not appear more primitive.

It appears that Heidelberg man (Homo heidelbergensis) had not only the moral capacity for self-restraint, but certain limited artistic capacities as well. In his monograph, The First Appearance of Symmetry in the Human Lineage: where Perception meets Art (careful: large file!) (Symmetry, 2011, 3, 37-53; doi:10.3390/3010037), Dr. Derek Hodgson argues that later Acheulean handaxes have distinctively aesthetic features – in particular, a concern for symmetry. I would invite the reader to have a look at the handaxes in Figure 1 on page 40, which date back to 750,000 years ago – either at or just before the time when Heidelberg man emerged. Hodgson comments:

The fact that the first glimmerings of an “aesthetic” concern occurred at least 500,000 BP [years before the present – VJT] in a species that was not fully modern (either late Homo erectus or Homo heidelbergensis) suggests that the aesthetic sensibility of modern humans has extremely ancient beginnings. (p. 47)

The emergence of modern man (200,000 years ago)

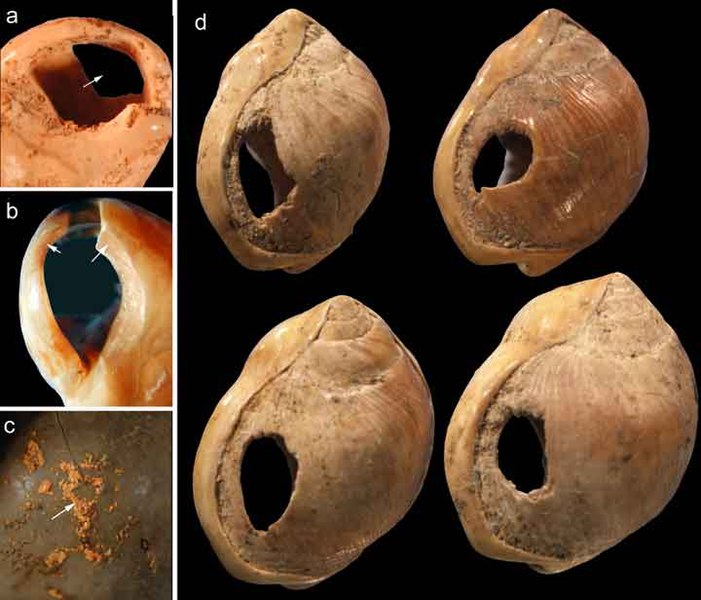


75,000 year old shell beads from Blombos Cave, South Africa, made by Homo sapiens. Image courtesy of Chris Henshilwood, Francesco d’Errico and Wikipedia.

Nevertheless, it seems that in terms of his symbolic and artistic abilities, Heidelberg man fell short of what modern human beings could do. To quote Dubreuil again:

The relative stability of the PFC [prefrontal cortex] during the last 500,000 years can be contrasted with changes in other brain areas. One of the most distinctive features of Homo sapiens’ cranium morphology is its overall more globular structure. This globularization of Homo sapiens’ cranium occurred between 300,[000] and 100,000 years ago and has been associated with the relative enlargement of the temporal and/or parietal lobes (Lieberman et al. 2002; Bruner et al. 2003; Bruner 2004, 2007; Lieberman 2008). (p. 67)

The temporoparietal cortex is certainly involved in many complex cognitive tasks. It plays a central role in attention shifting, perspective taking, episodic memory, and theory of mind (as mentioned in Section “The role of perspective taking”), as well as in complex categorization and semantic processing (that is where Wernicke’s area is located)…

I have argued elsewhere (Dubreuil 2008; Henshilwood and Dubreuil 2009) that a change in the attentional abilities underlying perspective taking and high-level theory of mind best explains the behavioral changes associated with modern Homo sapiens, including the evolution of symbolic and artistic components in material culture. (p. 68)

So there we have it. The human brain is “fearfully and wonderfully made,” in the words of Psalm 139. It is extremely doubtful whether prideful man, with his much-vaunted Internet, will ever approach the level of skill and complexity shown by the Intelligent Designer who carefully engineered his brain, over a period of several million years.