
I have greatly enjoyed reading three recent posts on memory by Professor Michael Egnor, an accomplished neurosurgeon with more than 20 years’ experience. In his first post, Recalling Nana’s Face: Does Your Brain Store Memories?, Professor Egnor criticized what he regards as two pernicious myths regarding memory: first, the popular notion that the brain stores actual memories themselves; and second, the more sophisticated (but equally mistaken) notion that what the brain stores is coded information which enables us to retrieve memories at will. Neurologist and skeptic Dr. Steven Novella, who is an assistant professor at Yale University School of Medicine, published a reply, accusing Dr. Egnor of faulty reasoning – a claim echoed by Dr. P.Z. Myers on his blog. Dr. Egnor responded in a second post, More Serious Problems with Representation in the Brain: Remembering the Battle of Hastings. Dr. Novella then published a second reply, to which Dr. Egnor wrote a final response titled Brains on Fire: Dr. Steven Novella Explains, “The Mind Is the Fire of the Brain”.

I have no background in neuroscience; my background is in philosophy. However, the issues raised in Dr. Egnor’s posts piqued my interest, and as my Ph.D. was on the subject of animal minds, I’d like to offer a few comments of my own that may serve to sharpen the philosophical discussion of memory. I should state at the outset that I think some of Dr. Egnor’s arguments against materialism could have been expressed more clearly. Personally, I have absolutely no problem with the notion that the brain stores information (in the form of representations) which enables me to remember facts and episodes in my life. As to how I access and decode this information, in the majority of cases I would say that I do not find the information: it comes to me, whenever I give my brain a little time to do its job. However, on those occasions when I am unable to readily access certain information that I have previously committed to memory, I can resort to meta-cognitive strategies – a process which is properly described as recollecting (or conscious recall), rather than remembering. And because a strategy is essentially a rule, I would argue that it cannot have a physical location in my brain. Thus I would fully endorse Dr. Egnor’s conclusion that our capacity to recall semantic information at will is not a physical capacity.

What is a memory, anyway?

I’d like to begin with Dr. Egnor’s definition of memory. He writes:

It’s helpful to begin by considering what memory is — memory is retained knowledge. Knowledge is the set of true propositions. Note that neither memory nor knowledge nor propositions are inherently physical. They are psychological entities, not physical things. Certainly memories aren’t little packets of protein or lipid stuffed into a handy gyrus, ready for retrieval when needed for the math quiz.

Dr. Egnor is making two claims here: first, that memory is a kind of knowledge (namely, retained knowledge); and second, that knowledge is inherently propositional. In his reply, Dr. Novella objects to this definition:

Memories don’t have to be true, and they don’t have to be propositions. You can remember an image, a sound, an idea (true or false), an association, a feeling, facts, and skills, including specific motor tasks.

A far more accurate and useful definition of memory would be stored information.

It seems to me that Dr. Novella has a valid point here. Professor Robert Feldman, the author of a leading psychology textbook, Essentials of Understanding Psychology (University of Massachusetts, 10th edition, 2011), defines memory as “the process whereby we encode, store and retrieve information” (Chapter 20, p. 209). That’s a fairly standard definition.

As Dr. Novella remarks, memory comes in many different varieties. In their article, “The Role of Consciousness in Memory,” in the online journal Brains, Minds and Media (July 4, 2005), Franklin, Baars, Ramamurthy and Ventura distinguish several kinds of human memory systems. In their classification scheme, short-term systems include: (i) sensory memory, which “holds incoming sensory data in sensory registers and is relatively unprocessed,” and decays very quickly, in just “hundreds of milliseconds”; (ii) working memory, or “the manipulable scratchpad of the mind,” which “holds sensory data, both endogenous (for example, visual images and inner speech) and exogenous (sensory), together with their interpretations” over a period of seconds; and (iii) transient episodic memory (TEM), defined as “an episodic memory with a decay rate measured in hours.” In addition, there are various kinds of long-term memory: (i) procedural memory (commonly defined as “knowing how” as opposed to “knowing that”), which we use when we remember how to ride a bicycle or tie our shoelaces; (ii) perceptual memory, which plays a role in the recognition, categorization and interpretation of sensory stimuli; and (iii) declarative memory, which refers to memories that can be consciously recalled. Declarative memory comes in two varieties: (a) episodic memory for particular events, which some authors call autobiographical memory (e.g. “Where did you go on vacation last summer?”) and which requires one to recall events as having occurred at a specific time and place; and (b) semantic memory for general facts about the world (e.g. zebras have four legs; the Battle of Hastings was in 1066). In the interests of accuracy, I should add that while a few of the categories proposed by Franklin et al. (namely, sensory memory, perceptual memory and transient episodic memory) aren’t universally accepted in the field of memory research, most of their categories are generally recognized.

Obviously, sensory memory, which holds relatively unprocessed sensory data, cannot be described as a form of knowledge. Nor can working memory, which includes visual images and inner speech in addition to exogenous sensory data, properly be called knowledge. If we look at the various kinds of long-term memory, then we can indeed speak of knowledge, but only in the case of semantic memory and autobiographical memory could this knowledge be called propositional. Procedural memory, which involves knowing how to perform tasks such as riding a bike, is not propositional; nor is perceptual memory, which allows animals to categorize sensory stimuli.

I conclude that any satisfactory definition of “memory” will have to be a broad one, and that most of what we remember cannot be described as propositional knowledge.

Can the brain store memories?

Next, Dr. Egnor asserts that it makes no sense to speak of the brain as storing actual memories. As he puts it (I’m conflating material from two posts here):

The brain is a physical thing. A memory is a psychological thing. A psychological thing obviously can’t be “stored” in the same way a physical thing can. It’s not clear how the term “store” could even apply to a psychological thing…

Memories are not the kind of things that can be stored. Representations of memories can be stored, … but memories can’t be stored.

…[M]emories are psychological things. They have neither mass nor volume nor location, and the assertion that they can actually be stored in anything is unintelligible — no less unintelligible than the assertion that the square root of a number can be a color or that mumbles can be stuffed in pockets.

Dr. Egnor’s argument is somewhat unclear to me at this point. He could be arguing that memory is a psychological state, and that psychological states cannot have a physical location. But this claim seems to be simply mistaken. Take my psychological state of seeing an orange, which is on a table in front of me. Does this psychological state of mine have a physical location? Unquestionably: it takes place within my body – more specifically, within my eyes, nervous system and brain. And as Dr. James Rose points out in his highly informative article, The Neurobehavioral Nature of Fishes (Reviews in Fisheries Science, 10(1): 1–38, 2002), it is only when the incoming sensory signals reach my neocortex that I will become conscious of an object in front of me. So we could perhaps say that my conscious state of seeing an orange resides in my neocortex. And if this conclusion strikes some readers as odd, then I would ask them: what about a dog’s psychological state of seeing an orange? Is anyone going to deny that it has a physical location?


Or again, take an animal’s feeling of anger at another animal that provoked it. The animal’s anger is a psychological state, but at the same time, we can re-describe it as a physical state. Indeed, the Greek philosopher Aristotle (384-322 B.C., pictured above, courtesy of Wikipedia) famously defined anger as “a boiling of the blood or warm substance surrounding the heart” in his De Anima, Book I, Part 1, and even today, we commonly use the phrase, “That makes my blood boil,” to refer to something that makes us feel mad. And while his knowledge of the brain (which he mistakenly believed was for cooling the heart) was quite primitive, his insight that emotions are physical as well as psychological states was surely correct. Although it would sound strange to say that my brain feels angry, it would be perfectly appropriate for me to say that I feel the emotion of anger with my entire body.

Alternatively, Dr. Egnor could be arguing that memory is a form of knowledge, and that knowledge cannot have a physical location. Once again, this seems to be incorrect. For there seems to be nothing wrong with the statement that my knowledge of how to ride a bicycle resides in my head – more specifically, in my cerebellum. And we would surely say that an animal’s knowledge of some new motor skill that it had acquired by training resided in its brain. So it seems that at least some forms of knowledge can have a physical location.

Finally, Dr. Egnor could be arguing that propositions cannot have a physical location. This seems to be what he actually means, judging from the helpful example he gives in another post:

Right now I remember that I have an appointment at noon….

My memory is my thought that I have an appointment at noon.

Now, Dr. Egnor is surely correct when he asserts that propositions cannot have a physical location, any more than they can have a color or a shape. To ascribe a location to a proposition would be to commit a category mistake. But in order to argue that declarative memory (which we use to retrieve propositional knowledge) cannot have a physical location, Dr. Egnor needs to establish an additional premise: namely, that whenever we commit facts or episodes in our lives to memory, we store them as propositions. This is precisely what Dr. Steven Novella denies, in his first reply to Dr. Egnor: “Memories … don’t have to be propositions,” he writes. If I understand him aright, Dr. Novella is proposing that the brain stores information that we assemble into propositions when we retrieve it.

I conclude, then, that all Dr. Egnor has shown is that we do not store propositions in our memory. However, it may well be that we store information which, when retrieved, enables us to formulate propositions that we can utter aloud (or think silently). Of course, that still leaves us with the obvious question: who is it that assembles this information into propositions? There seems to be no way to eliminate all references to the “self” from explanations of how we manage to express our ideas in language. That poses a conundrum for reductionist materialism. However, an emergent materialist might argue that the self is a higher-level reality that somehow emerges from the activities of the brain.

Can the brain store representations?

We have seen that propositions as such cannot be stored in the brain. The fallback position for a materialist would be to say that the brain stores representations instead. Dr. Egnor is quite willing to grant that the brain can store representations:

Now you may believe — as most neuroscientists and too many philosophers (who should know better) mistakenly believe — that although of course memories aren’t “stored” in brain tissue per se, engrams of memories are stored in the brain, and are retrieved when we remember the knowledge encoded in the engram. Indeed neuroscientists believe that they have found things in the brain very much like engrams of some sort, that encode a memory like a code encodes a message…

Representations of memories can [indeed] be stored, and representations of all kinds are stored on computers and in books and in photo albums all the time…

But there’s an immediate problem: how does the brain represent propositions – or for that matter, the abstract concepts that these propositions describe?

For most of our memories and thoughts — those that are not pure images — the concept of representation in the brain simply doesn’t make sense…

When I remember that the Battle of Hastings was in 1066, I am not remembering an image at all. I am simply remembering that the Battle of Hastings was in 1066 — I’m remembering a fact, not any kind of image.

How could a fact — not an image — be represented in the brain? How can a concept be represented in the brain? How could synapses represent mercy or justice or humility? How can logical and mathematical concepts be represented in the brain? What would the brain representation for imaginary numbers be?

The assertion that the brain stores memories makes no sense.


However, Dr. Novella could reply that computers like IBM’s Watson (pictured above, image courtesy of Wikipedia), which are purely physical entities, can certainly answer questions posed in natural language, such as: “When was the Battle of Hastings?” and “What’s an imaginary number?” The fact that Watson can answer these questions in full sentences demonstrates that it must be possible, after all, for a physical entity to encode information from which propositions can be put together. And if Watson can do it, why can’t the human brain? Of course, Dr. Egnor could reply that Watson has no understanding of what it is doing, which is certainly true. Nevertheless, the indisputable fact is that Watson is outputting novel propositions – often about very abstract topics such as logic and mathematics – on the basis of the information it stores in its memory.

So, how does the brain encode the information relating to the Battle of Hastings? This morning, as I lay in bed, I pondered this problem, and mentally reviewed what I knew about the battle. Here’s what I came up with:

The Battle of Hastings was a battle BETWEEN the army of France (more precisely, Normandy) and England, IN the year 1066, AT a town called Hastings, on the (south? / east? / south-east?) coast of England. The Norman army, led by ?????, was the VICTOR. The English army, led by Harold (Harald?) LOST the battle. [What happened to Harold, I wonder?]

It should be quite clear that my memory of the battle rested on a web of associations, organized by the mental schema that I use to store information about battles: a battle is an event between two armies (each led by one individual), at a particular time and place, with one (and only one) victor. It isn’t difficult to imagine how a network of visual and auditory associations in the brain could encode this information. The trickier question is how we retrieve it, a question I’ll discuss below.
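To make the notion of a schema concrete, here is a minimal Python sketch of the battle schema just described, treated as a record with fixed slots. The class names `Army` and `Battle` and the `gaps` helper are hypothetical illustrations; the sketch shows only that such a schema is an ordinary data structure, and makes no claim about how neurons actually encode it.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Army:
    name: str
    leader: Optional[str] = None  # None marks a slot the rememberer cannot yet fill


@dataclass
class Battle:
    name: str
    year: int
    place: str
    sides: Tuple[Army, Army]  # a battle is between exactly two armies
    victor: Army

    def __post_init__(self) -> None:
        # The schema allows one (and only one) victor, drawn from the two sides.
        if not any(self.victor is side for side in self.sides):
            raise ValueError("victor must be one of the two sides")

    def loser(self) -> Army:
        first, second = self.sides
        return second if self.victor is first else first

    def gaps(self) -> List[str]:
        # List the slots that recall has left empty (cf. "led by ?????").
        return [f"leader of {side.name}" for side in self.sides if side.leader is None]


normans = Army("Normandy")  # leader not yet recalled
english = Army("England", "Harold")
hastings = Battle("Battle of Hastings", 1066, "Hastings", (normans, english), normans)

print(hastings.loser().name)  # England
print(hastings.gaps())        # ['leader of Normandy']
```

Leaving the Norman leader empty mirrors the “led by ?????” gap in the notes above: the schema enforces the one-victor rule, but by itself says nothing about how an empty slot gets filled.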

As I lay in bed this morning, I felt very annoyed with myself for not being able to remember the king who led the Norman army. So I fell back on an old trick: I went through each letter of the alphabet, in the hope that this would trigger a memory of the king’s name. I paused at L … Louis? But the only famous King Louis whom I could think of from around that time was Louis IX of France – and he lived in the 13th century, far too late for him to have fought in the Battle of Hastings. I stopped again at P … Philip? No; Philip the Fair lived in the fourteenth century. Then I came to R … Robert? No; that didn’t ring a bell. OK. What about S … Stephen? That didn’t sound right, either. Finally, I came to W … of course! William the Conqueror! How stupid of me to have forgotten that.
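The alphabet trick is an explicit rule, so it can be written down as a procedure. The Python sketch below assumes a hypothetical store (`ASSOCIATIONS`) linking each name to a few remembered features; the store's contents are invented for illustration, and `required` stands for what was already known about the missing leader. Each letter acts as a cue that surfaces candidate names, and each candidate is then checked against those known features.

```python
import string
from typing import Dict, Optional, Set

# Hypothetical associative store: each name is linked to a few remembered features.
ASSOCIATIONS: Dict[str, Set[str]] = {
    "Louis IX": {"France", "13th century"},
    "Philip the Fair": {"France", "14th century"},
    "Robert": set(),  # "didn't ring a bell": no associations at all
    "Stephen": {"England", "12th century"},
    "William the Conqueror": {"Normandy", "11th century", "Hastings"},
}


def recall_by_alphabet(required: Set[str]) -> Optional[str]:
    """Cue memory with each initial letter in turn, as in the alphabet trick."""
    for letter in string.ascii_uppercase:
        for name, features in ASSOCIATIONS.items():
            # A letter cue only surfaces names beginning with that letter...
            if name.startswith(letter):
                # ...and each surfaced candidate is checked against what is already known.
                if required <= features:
                    return name
    return None  # the strategy can fail if nothing in the store fits


print(recall_by_alphabet({"Normandy", "11th century"}))  # William the Conqueror
```

The rule (iterate over letters, test each candidate) is separate from the store it operates on; the sketch does not settle where such a rule resides.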

I then turned to the English king. Probably his name was Harold, I decided: Harald was the Norwegian spelling. (I found out later that Harold actually had a brother named Harald.) But what happened to Harold? Was he killed? Or did he abdicate? If so, what did he do after that? I couldn’t remember, even though I had previously seen a replica of the Bayeux Tapestry somewhere. Bryan Talbot, a British comic book artist, has aptly called it “the first known British comic strip.” (I found out later that Harold had been killed in battle.)


I then remembered an old rhyme that I’d read in Sir Walter Scott’s Ivanhoe as a child, which encapsulated the resentment felt by the Saxons towards their Norman conquerors:

Norman saw on English oak

On English neck a Norman yoke

Norman spoon to English dish

And England ruled as Normans wish

Blithe world in England never will be more

Till England’s rid of all the four.

I had to piece the third and fourth lines of the rhyme together, as my memory was a little rusty, and as it turned out, my memory of the first part of the fifth line was faulty, too. This memory of mine was (at least partly) an episodic memory, relating to something I’d read 40 years previously. But the connections in my brain were still there.

Representations of what?

But at this point a problem arises. If the brain stores representations, then what are they representations of? Dr. Egnor thinks that they must be representations of propositions, and for this reason, he finds the notion that such representations are stored in the brain utterly absurd:

How could a fact — not an image — be represented in the brain?

He continues (bold emphases mine):

The difference between a memory and a representation of a memory is obvious. Right now I remember that I have an appointment at noon. I’m writing down “appointment at noon” on my calendar.

My memory is my thought that I have an appointment at noon.

The representation of my memory is the written note on my calendar.

Does my brain contain a representation of my memory that I have an appointment at noon?

If my brain state is a representation of my memory that I have an appointment at noon, what in the brain state maps to what in the memory? More incisively, what in the brain state could map to anything in the memory? How could my brain state represent my memory of my appointment? An actual written note in my cortex? A little calendar in my hippocampus? A tiny alarm set to go off in my auditory area? How, pray tell, could a brain state map to a thought, especially a thought that is not an image?

But the materialist has a ready reply here: he/she could argue that what the brain represents is not propositions but events. There is nothing inherently nonsensical in the idea that my brain can store a representation of an event such as the Battle of Hastings: all that is required is a suitable mental schema. I have already sketched the mental schema whereby a battle might be represented; other kinds of events will have their own unique schemas.

Now of course, Dr. Egnor could reply that this pushes the problem upstairs: who (or what) is creating the schemas for representing these different kinds of events? And how does this “Somebody” (or Something) convert events into propositions that can be couched in human language? It seems that we are forced to postulate a mysterious “agent in charge,” who makes these key decisions. Furthermore, it appears that these decisions cannot be identified with any physical process, because no material process can be equated with a purely formal activity such as propositional reasoning. My own view is that there is indeed a “self” or “I” which is capable of engaging in non-bodily activities (such as reasoning) in addition to bodily acts. I’ll say more about human agency below. However, the point I want to make here is that while we cannot meaningfully speak of the brain encoding facts or propositions, there is nothing inherently absurd about the notion that the brain can represent events.

Dr. Egnor also writes:

Materialists are also incoherent when they claim that the representation just is the memory. If the representation is the memory, it’s not a representation.

As we saw above, for Dr. Egnor, paradigmatic cases of human memories involve propositions: memories that something is the case (or is not the case). As he puts it in his illustration: “My memory is my thought that I have an appointment at noon.” And something cannot simultaneously be both a proposition and a representation of a proposition. A materialist would reject this account: he/she would say that there are events that take place in the external world, that the brain encodes representations of those events, and that a memory of an event is simply a representation of that event in the brain. Propositions are descriptions of those events, which we formulate after we have retrieved them from our memories. Hence on the materialist account, memories are quintessentially of events rather than that such-and-such events happened.

To be as fair as possible to the materialist, I think that the foregoing account is an intelligible one. It is coherent, and there is nothing obviously wrong with it.

Can the brain store and retrieve representations?

Finally, Dr. Egnor argues that even if the brain can store representations of memories, the brain cannot explain our ability to retrieve these representations at will (I’m conflating material from all three of his posts here):

…[L]et’s imagine via some materialist miracle (materialism is shot through with miracles) that I can map my memory of my appointment at noon to a brain state. But of course the map in my brain would need two things: it would have to be located and it would need to be read (maps don’t read themselves). So I would have to have some (unconscious) memory of where the brain map was and some (unconscious) memory of how to read the map, as well as some entity (homunculus?) who would read the map.

So even if my memory that I have an appointment at noon were represented in a brain state, I still have not solved the problem of memory. It still remains unexplained how the representation is accessed, decoded, and read.

Note: hand waving about “integrated… overlapping… massive parallel processing” and other neurobabble won’t do. Any map needs to be accessed and read, or it can’t be a map.

The concept of representation of memory in the brain is unintelligible.

…[R]epresentation in the brain is a highly problematic concept, because the act of representation presupposes memory and intentionality and intellect and will and all sorts of mental acts that are precisely the kind of things that materialists claim are explained by representation. If a memory in the brain were stored as a representation, one would have to presuppose a memory of the code or map that linked the representation to the memory and a memory of the location of the representation in the brain so it could be accessed. Representation presupposes memory, so it can’t explain memory.

Two responses a materialist might make to Egnor’s argument

(1) We don’t locate our memories; they locate us

After reading Dr. Egnor’s argument, a couple of thoughts occurred to me. The first was that Dr. Egnor was assuming (on the basis of introspection) that we can actively retrieve memories, at least sometimes. But a materialist might argue that it is not we who locate our memories; rather, it is our memories which locate us. In reality, memories “come to” us, and even when we think we are actively retrieving them, what is really happening is that the brain’s network of associations automatically triggers their recovery, without our having to do anything. If this picture is correct, then the question of how we manage to locate our memories simply does not arise.

A recent experience of mine nicely illustrates this point. I wanted to telephone a family member whom I hadn’t called in a while. I tried to remember their number and drew a blank. I didn’t panic. Instead, I decided to wait a few seconds, as I knew from past experience that if I waited, the number would come to mind. And sure enough, it did. I didn’t find the memory; the memory found me.
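The materialist claim that memories “come to” us can be given a toy mechanical form. The Python sketch below is in the spirit of what psychologists call spreading activation, but the network, weights, update rule and threshold are all invented for illustration: a cue is held active, activation leaks along weighted links, and any item whose activation crosses a threshold “comes to mind.” Nothing in the loop searches for a target or knows where the phone number is stored.

```python
from typing import Dict, List

# Hypothetical association weights between memory items (illustrative only).
LINKS: Dict[str, Dict[str, float]] = {
    "family member": {"their voice": 0.9, "their street": 0.6},
    "their street": {"their phone number": 0.9},
}


def spread(cue: str, steps: int, rate: float = 0.5) -> Dict[str, float]:
    """Let activation accumulate along links while the cue is held on."""
    nodes = set(LINKS) | {n for targets in LINKS.values() for n in targets}
    act = {n: 0.0 for n in nodes}
    act[cue] = 1.0
    for _ in range(steps):
        old = dict(act)  # synchronous update: every link reads the previous state
        for source, targets in LINKS.items():
            for target, weight in targets.items():
                if target != cue:
                    # Activation accumulates over time but is capped at 1.0.
                    act[target] = min(1.0, act[target] + old[source] * weight * rate)
    return act


def comes_to_mind(cue: str, steps: int, threshold: float = 0.5) -> List[str]:
    """Items (other than the cue) whose activation has crossed the threshold."""
    act = spread(cue, steps)
    return sorted(n for n, a in act.items() if n != cue and a >= threshold)


print(comes_to_mind("family member", steps=2))  # ['their street', 'their voice']
print(comes_to_mind("family member", steps=4))  # ['their phone number', 'their street', 'their voice']
```

With two update steps the phone number stays below threshold (a blank); after four it crosses it, loosely echoing the experience of waiting a few seconds for a number to surface. The sketch shows only that automatic retrieval is mechanically describable, not how the brain actually does it.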

(2) The argument relies on faulty assumptions

The genesis of the argument


The Cartesian Theater: Objects experienced are represented within the mind of the observer. Image courtesy of Wikipedia.

The second thought I had (much later on) was that Dr. Egnor’s argument sounded familiar: I’d read it somewhere before. In fact, I was pretty sure that I’d actually made the same argument on a previous occasion. And sure enough, I had – five years ago, as it turned out. Back in 2009, I was very impressed with an essay I’d recently read, written by the philosopher and parapsychology researcher Dr. Stephen Braude, and titled “Memory without a trace” (European Journal of Parapsychology 21, Special Issue (2006): 182-202), which put forward arguments very similar to Dr. Egnor’s. I was even more heartened to find that the philosopher Dr. John Sutton had put forward some of the same arguments as Dr. Braude in his article “Memory” in the Stanford Encyclopedia of Philosophy, where he wrote:

How does the postulated trace come to play a part in the present act of recognition or recall? Trace theorists must resist the idea that it is interpreted or read by some internal homunculus who can match a stored trace with a current input, or know just which trace to seek out for a given current purpose. Such an intelligent inner executive explains nothing (Gibson 1979, p. 256; Draaisma 2000, pp.212-29), or gives rise to a vicious Rylean regress in which further internal mechanisms operate in some “corporeal studio” (Ryle 1949/1963, p. 36; Malcolm 1970, p. 64).

But then the trace theorist is left with a dilemma. If we avoid the homunculus by allowing that the remembering subject can just choose the right trace, then our trace theory is circular, for the abilities which the memory trace was meant to explain are now being invoked to explain the workings of the trace (Bursen 1978, pp. 52-60; Wilcox and Katz 1981, pp. 229-232; Sanders 1985, pp. 508-10). Or if, finally, we deny that the subject has this circular independent access to the past, and agree that the activation of traces cannot be checked against some other veridical memories, then (critics argue) solipsism or scepticism results. There seems to be no guarantee that any act of remembering does provide access to the past at all: representationist trace theories thus cut the subject off from the past behind a murky veil of traces (Wilcox and Katz 1981, p. 231; Ben-Zeev 1986, p. 296).

Epistemic problems relating to the specificity and accuracy of memory

I excitedly broadcast these arguments in a comment on Clive Hayden’s November 2009 post, Robert Wright and the Evolution of Compassion, in response to long-time commenter Mark Frank (who sometimes blogs at The Skeptical Zone). I argued as follows:

Let’s return to the argument cited above. The epistemic problems we face here are that: (i) current traces in the brain cannot uniquely specify the past events that generated them; and (ii) there is no general guarantee of their accuracy.

You [Mark Frank] write that there must be something wrong with Braude’s argument because “it applies equally to computer memory.” I disagree. Computers don’t do epistemology. They don’t ask themselves, “How do I know my memories are accurate?” Of course, we can ask that question about a computer’s memory, because we can trace the processes according to which computers encode, store and retrieve data. These processes sometimes generate errors, and we can usually rectify these.

I then noted that one way of attempting to rebut these epistemic arguments was to bite the bullet and reject the demand for incorrigible access to the past. This is what Dr. Sutton seemed to suggest in his essay, where he wrote:

The past is not uniquely specified by present input, and there is no general guarantee of accuracy: but the demand for incorrigible access to the past can be resisted.

In his essay, Dr. Sutton had also been honest enough to admit that there are weighty problems associated with the question of how memories can represent anything at all. As he put it:

How can memory traces represent past events or experiences? How can they have content? …In stating the causal theory of memory, Martin and Deutscher argued that an analysis of remembering should include the requirement that (in cases of genuine remembering) “the state or set of states produced by the past experience must constitute a structural analogue of the thing remembered” (1966, pp. 189-191), although they denied that the trace need be a perfect analogue, “mirroring all the features of a thing”. But is there a coherent notion of structural isomorphism to be relied on here? If memory traces are not seen as images in the head, somehow directly resembling their objects, and if we are to cash out unanalysed and persistent metaphors of imprinting, engraving, copying, coding, or writing (Krell 1990, pp. 3-7), then what kind of “analogue” is the trace?

Now, I was aware that cognitive scientists had a reply to these objections – namely, that our memories of past events are actually reconstructions rather than recordings. But this solution, I argued, only raised further questions regarding how we can trust the accuracy of our memories:

To deal with these problems, cognitive scientists are forced to suppose that memory is a constructive process, and that the traces are “dynamic” – i.e. in a state of perpetual flux. Although Sutton maintains that “there is no reason to think that ‘constructed memories’ must be false,” I feel compelled to respond that that is no reason why we should trust them.

I might add that structural isomorphism is far less problematic for computer memory than it is for human memory. But then, computers do a lot fewer things with their memories than we do, and in a much more humdrum, routine fashion. And they don’t ask skeptical questions.

In short: the notion of a “memory trace” in the brain that mirrors the past event that originally caused it does not hold up to philosophical and scientific scrutiny. There are good grounds for thinking such a trace could not exist. And whatever “traces” do reside in the brain carry no assurance of their reliability, even in general terms. If that’s not a problem for materialism, I don’t know what is.

Further problems relating to semantic memory

I then concluded by quoting a passage from Braude’s essay, arguing that the materialistic “trace theory” could at best explain episodic memories of past experiences, and would not work for semantic information:

If trace theory has any plausibility at all, it seems appropriate only for those situations where remembering concerns past experiences, something which apparently could be represented and which also could resemble certain triggering objects or events later on. But we remember many things that aren’t experiences at all, and some things that aren’t even past — for example, the day and month of my birth, the time of a forthcoming appointment, that the whale is a mammal, the sum of a triangle’s interior angles, the meaning of “anomalous monism.” Apparently, then, Kohler’s point about trace activation and the need for similarity between trace, earlier event, and triggering event, won’t apply to these cases at all. So even if trace theory was intelligible, it wouldn’t be a theory about memory generally. (Emphasis mine – VJT.)

I ended on a triumphant note:

…[I]f materialism cannot even account for memory, then why should we believe that it can account for higher-order mental acts, such as human compassion, or reasoning?

Content-addressable computer memory: what Braude, Sutton and I had overlooked


Steely Dan performing in 2007. Image courtesy of Wikipedia.

As they say, pride comes before a fall. It wasn’t long before materialists commenting on the same thread came up with a reply to my arguments. One skeptic, scrofulous, patiently pointed out that Braude and Sutton had relied on the same false assumptions about the nature of human memory (emphases mine):

Braude and Sutton are making the same mistake: each is assuming that an independent mechanism is necessary in order to identify the appropriate trace and “read it out”.

That is how conventional computer memory works. The items to be remembered are stored in particular locations, each of which has a unique numerical address. To retrieve a datum, the appropriate numerical address must be presented to the memory system. Braude and Sutton are effectively asking “Who remembers the address? And if the address itself is stored in memory, who remembers the address where the address is stored? And what about the address where the address of the address is stored? And so on…”

Apparently, neither Braude nor Sutton is aware that there is another kind of memory, known as content-addressable memory (or CAM), wherein memory locations are activated not by address, but by virtue of their contents. In this scheme, an input pattern is presented to all of the memory locations at once. A particular memory location will activate itself based on how closely its contents match the input pattern.

Human memory is clearly much more like CAM than it is like ordinary computer memory.

For example, this morning I saw a reference to the Rio Grande in an article I was reading. Immediately, the song King of the World by Steely Dan started playing in my mind. It contains the line “Any man left on the Rio Grande is the king of the world, as far as I know” (great song, by the way. You can listen to it here).

Obviously, my brain didn’t go through a list of each song in its memory, one by one, playing them back and looking for one containing the phrase “Rio Grande”. That would have taken forever. Instead, the appropriate memory trace was activated because of its similarity to the input pattern — in this case the phrase “Rio Grande”.

Further evidence that human memory is like a CAM is that the input pattern doesn’t have to be identical to the stored pattern in order to activate a trace. I ran across the name “Sebastian Cote” during a web search yesterday, and I immediately thought of Sebastian Coe, a British distance runner who held the world record for the mile in the 1980s. I had never seen the name “Sebastian Cote” before, so there was clearly nothing in my brain that said “when you see the name ‘Sebastian Cote’, go and activate the trace stored at this location” (the one containing Sebastian Coe). Instead, that trace was activated based on the similarity between the input pattern “Sebastian Cote” and the stored pattern “Sebastian Coe”.

CAM can also explain some of the confusions our memories generate. A friend of mine once attributed the song “Hello It’s Me” to Dolph Lundgren. Todd Rundgren is the singer who did that song; Dolph Lundgren is an actor. If our memories were accessed by location, this sort of confusion would not occur.

Human memory is clearly CAM-like, and CAMs do not suffer from the problem raised by Braude and Sutton.
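To make scrofulous’ contrast concrete, here is a minimal Python sketch. It assumes that a string-similarity score (the standard library’s `difflib.SequenceMatcher` ratio) can stand in for “how closely its contents match the input pattern”; the stored items and function names are hypothetical illustrations, not a model of the brain. A hardware CAM compares the probe against every location at once, whereas this sketch simulates that with a loop.

```python
from difflib import SequenceMatcher

# Address-based memory: contents can be read back only if the caller
# already knows the numerical address (index) where they were stored.
ADDRESSED = ["Steely Dan", "Sebastian Coe", "Dolph Lundgren"]


def read_by_address(memory: list[str], address: int) -> str:
    """Conventional RAM-style read: the address must be supplied."""
    return memory[address]


def similarity(stored: str, probe: str) -> float:
    """Character-level similarity in [0, 1]; 1.0 means identical (ignoring case)."""
    return SequenceMatcher(None, stored.lower(), probe.lower()).ratio()


def read_by_content(memory: list[str], probe: str) -> tuple[int, str, float]:
    """Content-addressable read: compare the probe with every location and
    return (address, contents, score) for the closest match.

    A hardware CAM performs these comparisons in parallel; this sketch
    simulates that with a sequential scan over all locations.
    """
    score, address, item = max(
        (similarity(item, probe), address, item)
        for address, item in enumerate(memory)
    )
    return address, item, score


if __name__ == "__main__":
    print(read_by_address(ADDRESSED, 1))                 # needs address 1
    print(read_by_content(ADDRESSED, "Sebastian Cote"))  # never-seen probe, near match
    print(read_by_content(ADDRESSED, "Rundgren"))        # similar name is retrieved
```

A probe the memory has never seen (“Sebastian Cote”) still retrieves the closest stored item, and a probe for a name that was never stored (“Rundgren”) retrieves a similar-looking one (“Dolph Lundgren”), which mirrors the kind of confusion described above.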

How computers can check the accuracy of their memories

In response to my argument that a materialist account of memory fails to guarantee its reliability, a commenter named Mr. Nakashima pointed out that even computers have a mechanism which enables them to check the reliability of their memories:

I think if we can analogize to computer memory at all, then there are analogs to epistemology in the computer arena. One is the checksum, which is used to verify that a number of memory cells have not changed. The error correcting code is similar. At another level of analogy, we have the database journal.

When these are combined, we have systems that know when they have suffered a ‘stroke’, such as a cosmic ray changing the values stored in memory, and a way of recovering the correct version of that memory.

We also have systems of reasoning that can explain their conclusions going back to ground facts such as “I observed it.” “I was taught it.” “I deduced it through this logical operation.”
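Mr. Nakashima’s checksum and error-correction analogy can be sketched in a few lines of Python. This is a minimal illustration under simple assumptions: it uses the standard library’s CRC-32 (`zlib.crc32`) for detection, and a triple-redundancy majority vote (a basic repetition code, chosen here for simplicity rather than the Hamming-style codes typical of ECC memory) for recovery. The helper names are hypothetical.

```python
import zlib


def checksum(data: bytes) -> int:
    """CRC-32 of the stored bytes, kept alongside them for later verification."""
    return zlib.crc32(data)


def is_intact(data: bytes, stored_checksum: int) -> bool:
    """Detect corruption: recompute the CRC and compare it with the stored one."""
    return zlib.crc32(data) == stored_checksum


def flip_bit(data: bytes, bit_index: int) -> bytes:
    """Simulate a 'cosmic ray' by flipping a single bit."""
    buf = bytearray(data)
    buf[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(buf)


def majority_vote(a: bytes, b: bytes, c: bytes) -> bytes:
    """Recover data from three stored copies with a bitwise majority vote.

    Each output bit takes the value held by at least two of the copies, so
    any error that affects only one copy at a given bit position is corrected.
    """
    return bytes((x & y) | (x & z) | (y & z) for x, y, z in zip(a, b, c))


if __name__ == "__main__":
    original = b"I went to the cinema last night"
    crc = checksum(original)
    copies = [original, original, original]
    copies[0] = flip_bit(copies[0], 13)  # corrupt one copy

    print(is_intact(copies[0], crc))           # False: the damage is detected
    print(majority_vote(*copies) == original)  # True: the original is restored
```

The checksum only tells the system that something has changed; it is the redundant copies that let it restore the original value.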

Why a non-materialistic account of memory is even worse than a materialistic one

In addition, a commenter named Seversky argued that a non-materialistic account of memory was even more problematic than a materialistic one:

If we assume the alternative, that memory is not stored locally in the brain but elsewhere, with the brain being some sort of interface device or transceiver, the problems are multiplied. Not only does the problem of explaining how memory works remain, but [we] also have to explain where and in what form memories are stored. If it is some kind of transcendental common mass storage domain, how do we locate and retrieve just our memories from all the others? Whatever the problems inherent in any explanation of brain-based storage, they are multiplied massively with such an alternative.

Memory: fallible but usually correct

Finally, skeptical commenter Mark Frank addressed my concerns about the reliability of memory, and about how the brain might store semantic memories:

You ask why should we trust [our memories]? This seems rather easy to answer. Because in practice we find that they are mostly reasonably accurate as determined by consistency with our other memories, other people’s memories, and current observations. But in any case there seems to be no reason why adding a dash of the supernatural should make them more trustworthy.

A slightly different question is why do we trust our memories? This is different from why should we trust them. When I remember going to the cinema last night it is not based on a careful evaluation of its consistency with other events. I am certain because I remember it so vividly. There is also the phenomenon of recognition. I may be sure I have seen a face or situation before without knowing when or where (and this process may also be in error sometimes – déjà vu). This strikes me as an evolved facet of the way our mind/brain works. As in many other areas we have evolved a propensity to trust our senses and memories without stopping to evaluate them…

Finally let’s deal with non-episodic memory. Remembering is many different things and these different things may have different physical explanations. Semantic memory is broadly the ability to use certain facts (e.g. that the ipconfig command will give me the IP address of a router) in a wide variety of situations. The physical explanation is that there is something in our brain that causes me to reconstruct that fact when I need to. I see no reason why it has to correspond to a past experience – although clearly past experiences have created this ability in my brain. Indeed this is what learning in the cognitive brain is all about.

That’s quite enough.

Readers who are interested in current scientific hypotheses regarding the location of semantic information in the brain might like to have a look here.

My response to the skeptics

In my response to the substantive points made by these skeptical commenters, I graciously conceded that they had done a good job of defending a materialistic account of memory, although some questions remained unaddressed:

After reading all your comments, I have to acknowledge that Braude’s original argument that memories cannot possibly be stored in the brain, which I cited above in #13 and #23, is by no means as compelling as I had imagined, although I still think it has a good deal of merit, for reasons I shall outline below.

Scrofulous’ suggestion (#24) that memories stored in the brain may be content-addressable certainly makes a lot of sense, and I think it’s a satisfactory response to Braude’s infinite regress argument.

I will also take on board Mr. Nakashima’s point that computers can be said to check their own memories, using algorithms such as checksums, and can also recover them. However, it would be a category mistake to attribute the cognitive attitude of skepticism to a computer purely on the basis of its ability to detect and correct its own faults – and I don’t think you would wish to do that, anyway.

Seversky makes the excellent point that difficult as it is to suppose that memories are stored in the brain, our problems are multiplied many times over if we suppose them to be stored somewhere else – e.g. in some immaterial realm. I agree – and so does Braude. That was his whole point – memory isn’t stored anywhere…

All of you seem to have agreed (or conceded) that if memories are stored in the brain, they are not isomorphic to the events that they are supposed to be memories of.

In that case, it seems we may still legitimately ask:

(1) What makes them representational, if isomorphism is absent?

(2) What makes them memories of one particular event in the past, rather than a host of similar events resembling it, which might have happened instead?

(3) Why should we trust them, if there is no guarantee of their accuracy?

(4) How far should we trust them, if there is no such guarantee? …

Mark Frank’s answer to the warrant problem is a practical one… I presume Mark Frank would add that if our memories weren’t generally reliable, we wouldn’t be here now. Animals that mis-remember tend to die young, leaving no progeny.

Well, my response is: it’s not that simple. Showing that our memories work and have worked in our evolutionary past isn’t the same as showing why we should trust them. I might have a justified belief that my memory is probably accurate at any given time. But the causal and structural nexus between a memory M and the event E that it’s supposed to be a memory of remains as mysterious as ever. In effect, the pragmatic justification amounts to saying: “Don’t ask me how it works. It just does, that’s all.”…

While human memory clearly has much more in common with content-addressable memory than with other kinds of computer memory, it behooves us to be skeptical. Where’s the pattern in the brain? That’s the point at issue. And if it does exist, it’s in flux. Sure, there may be a causal chain from the original event to the current memory – but causal chains can sometimes be wayward. (See here.) It seems that a purely physicalist account of memory offers no way in principle of distinguishing a bona fide causal chain from a wayward causal chain.

Finally, the philosophical problem of how memories stored in the brain could be said to be representations has not been addressed at all.

Despite my misgivings about the materialist account of memory, I think a fair-minded referee would have judged that the skeptics had the better of the argument, and that dualists like myself had failed to provide a better alternative theory.

So, what’s really wrong with the materialist theory of memory?

Five years have passed since then, and I have had time to reflect. In the final part of today’s post, I’d like to highlight what I see as the two main flaws in the materialist account of memory. Both of these flaws relate to the notion of a rule.

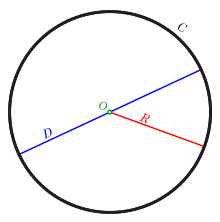


Circle with circumference (C) in black, diameter (D) in cyan, radius (R) in red, and center or origin (O) in magenta. Image courtesy of Wikipedia.

The first major problem with the materialist account is its inability to explain human concepts. Now, a naive materialist might be tempted to equate concepts with mental images. But that cannot be right: as the philosopher Aristotle pointed out 2,300 years ago in his De Anima, my image of the Sun is less than a foot across, yet in reality I know it to be larger than the Earth. Thomist philosopher Ed Feser puts forward another related argument: my mental image of a 999-sided regular polygon is identical with my mental image of a 1,000-sided regular polygon, yet my concepts of the two figures are fundamentally different: the former is a figure with an odd number of sides, which can be divided into three equal slices (since 3 x 333 = 999), while the latter is a figure with an even number of sides, which can be divided into two or five equal slices, but not three.
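Feser’s point can be checked arithmetically. A minimal Python sketch, assuming that “equal slices” means identical pie slices cut from the center, which is possible exactly when rotating the polygon by 360/k degrees maps it onto itself, i.e. when k divides the number of sides. The function names are hypothetical. The interior angles of the two figures differ by well under a thousandth of a degree, which is why the images cannot be told apart, yet their divisibility properties differ sharply.

```python
def interior_angle(n_sides: int) -> float:
    """Interior angle of a regular polygon, in degrees."""
    return (n_sides - 2) * 180 / n_sides


def slice_counts(n_sides: int, max_slices: int = 10) -> list[int]:
    """Slice counts k (2..max_slices) for which rotating a regular polygon by
    360/k degrees maps it onto itself, so k identical pie slices can be cut
    from its center; this happens exactly when k divides n_sides."""
    return [k for k in range(2, max_slices + 1) if n_sides % k == 0]


if __name__ == "__main__":
    print(interior_angle(999), interior_angle(1000))  # differ by ~0.00036 degrees
    print(slice_counts(999))   # [3, 9]
    print(slice_counts(1000))  # [2, 4, 5, 8, 10]
```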

A more sophisticated materialist might try to identify concepts with mental schemas, which have a clearly defined internal structure. For example, the concept of a battle is connected to the attributes of a place (where the battle took place), a time (when it occurred), a winning side (headed by a leader), and a losing side (also headed by a leader). Similarly, the concept of a circle is connected with the attributes of a radius, a diameter (which is double the radius), a circumference and an area.
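A schema of this kind is essentially a record with named slots. As a hypothetical illustration (the class and field names are invented for this sketch, not drawn from any particular cognitive-science model), the battle schema might look like this in Python, filled in for the Battle of Hastings:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Side:
    name: str
    leader: str


@dataclass(frozen=True)
class Battle:
    """Hypothetical 'battle' schema: place, time, winning side, losing side."""
    place: str
    year: int
    winner: Side
    loser: Side


hastings = Battle(
    place="near Hastings, England",
    year=1066,
    winner=Side(name="Normans", leader="William of Normandy"),
    loser=Side(name="English", leader="Harold Godwinson"),
)

print(hastings.winner.leader)  # William of Normandy
```

Each slot holds a value, and a slot such as `winner` can itself hold another structured item with its own attributes.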

What the foregoing account overlooks, however, is that human concepts – as distinct from the more basic concepts possessed by other animals – are defined by rules. For instance, the concept of a circle is defined by the rules that the circumference of a circle is 2 times pi times the radius of the circle, while the circle’s area is equal to pi times the square of the radius. In order to reason properly about circles, we have to observe these and other similar rules.
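The two rules cited above can be written down as executable formulas. Here is a minimal Python sketch, with hypothetical names, that derives a circle’s diameter, circumference and area from its radius, and checks whether a set of measurements agrees with the rules:

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Circle:
    radius: float

    @property
    def diameter(self) -> float:
        return 2 * self.radius  # the diameter is double the radius

    @property
    def circumference(self) -> float:
        return 2 * math.pi * self.radius  # C = 2 * pi * r

    @property
    def area(self) -> float:
        return math.pi * self.radius ** 2  # A = pi * r^2


def obeys_circle_rules(radius: float, circumference: float, area: float) -> bool:
    """True if the measured values agree with both rules (within float tolerance)."""
    ideal = Circle(radius)
    return (math.isclose(circumference, ideal.circumference)
            and math.isclose(area, ideal.area))
```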

The difficulty for a materialist account of memory here is not how the content of the rule (e.g. C = 2πr, or A = πr²) can be stored in the brain: we commit rules to memory (and forget them) all the time, and I am sure that a neuroscientist could explain why we forget rules so easily, in purely materialistic terms. The real difficulty, though, is the notion of a rule itself. A rule is a prescription, and the language of scientific explanations is essentially descriptive rather than prescriptive. A neuroscientist can tell me how I store a formula in my brain, but in so doing, (s)he has failed to explain how I come to regard that formula not merely as a fact that happens to be true about all the circles I’ve ever come across, but as a norm that tells me how I ought to think about circles. For as the philosopher David Hume famously observed, you cannot derive an “ought” from an “is.” A formula, as such, is simply a descriptive statement; it does not come with a “must” or an “ought” attached to it. We add those when we attempt to understand how Nature works.


The second flaw in the materialist account is that it completely ignores the meta-cognitive strategies that we use to retrieve items from memory. And that brings me to Rameses the Great, the Egyptian pharaoh who is the subject of today’s article. Some archaeologists consider him to be the Pharaoh of the Exodus; others identify his successor Merneptah as the Exodus pharaoh, while a few archaeologists even opt for a pharaoh from the fifteenth or sixteenth century B.C.

Anyway, a few days ago, I was trying to recall the date when Rameses’ reign began. No luck. I vaguely remembered that it was prior to 1,300 B.C., but no date “clicked” in my head. That was disconcerting. I have a very poor memory for names and faces, but I have an excellent memory for figures. Still, it had been decades since I’d last read anything about Rameses, so it wasn’t altogether surprising that my memory had drawn a blank.

But all was not lost. I seemed to recall that the reign of Rameses the Great had ended in 1,234 B.C., which is not a difficult date to remember. I also vaguely recalled that Rameses’ reign had lasted for an extraordinary 67 years. I was then able to deduce that Rameses’ reign must have begun in 1,301 B.C. And as soon as I calculated that date, it suddenly felt familiar to me. The number had lain in my memory all along, but the neural connection between the date 1,301 B.C. and the pharaoh Rameses the Great had grown faint. Nevertheless, I had been able to retrieve this vague memory, using a meta-cognitive strategy – a feat, I might add, which only humans seem to be capable of. In so doing, I had employed a simple rule, which enabled me to deduce a piece of missing information: the beginning of a monarch’s reign, when added to the length of that reign, equals the end of the monarch’s reign (and since B.C. years count downward, that meant adding 67 to 1,234 to obtain 1,301). (I have since found out that Egyptologists don’t all agree on the date of Rameses the Great’s reign: some sources list it as lasting from 1,279 B.C. to 1,213 B.C. Who’s right? I have no idea.)
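The arithmetic behind that recollection is easy to get backwards, because B.C. years count downward. A minimal Python sketch (the function names are hypothetical) of the rule as applied above, plus the same rule with B.C. years written as negative numbers, where “start + length = end” holds literally:

```python
def reign_start_bc(end_bc: int, length_years: int) -> int:
    """B.C. years count downward, so start = end + length."""
    return end_bc + length_years


def bc_to_signed(year_bc: int) -> int:
    """Write a B.C. year as a negative number. This is safe as long as we stay
    within B.C.; there is no year zero between 1 B.C. and A.D. 1."""
    return -year_bc


start = reign_start_bc(1234, 67)
print(start)  # 1301
# In signed form the rule reads literally: start + length == end.
print(bc_to_signed(start) + 67 == bc_to_signed(1234))  # True
```

Applied to the alternative dates, 1,279 minus 1,213 gives 66 by plain subtraction; whether such a reign counts as 66 or 67 years depends on how partial years are reckoned, which this sketch does not try to settle.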

The problem for materialism here is that the language that scientists use when talking about the brain is purely descriptive, whereas rules are essentially prescriptive. Once again, Hume’s “is-ought” divide rears its head: no matter how minutely I describe what my brain is doing right now, you will never be able to deduce what result I ought to come up with. To deduce that, you need to know the rule that I am trying to follow.

Of course, in real life, rule-following is an everyday occurrence: we have no trouble at all in correcting other people’s addition mistakes, for instance. But the more fundamental question that we need to ask, and which the materialist is incapable of answering, is: what makes it possible for us to follow a rule in the first place? The descriptive language that scientists use to talk about the human brain can no more explain the existence of prescriptions (or rules) than an objective, “third-person” account of color, analyzing it in terms of its constituent wavelengths, can explain the subjective, “first-person” experience of the color green. Rules, like subjective experiences, are an irreducible fact of life.

Now, it might be objected that computers (which are purely material entities) follow the rules of logic and arithmetic. But this is simply incorrect. A computer calculates as it is programmed to – and even when it checks the result of its own calculation, it does so because it was programmed to do that, too. Computers behave in accordance with rules, but they do not follow rules. A computer is a man-made device that is designed to mimic rule-following – but when a computer produces an incorrect result, we do not remonstrate with it and say, “You ought to have done better.” Nor does a computer ever ask itself: “Am I doing what I ought to do?” let alone the question, “What ought I to do?” Only genuine rule-followers are capable of doing that.

Why the notion of “self” is essential to the enterprise of following a rule

And this brings me to my final point: a rule-follower needs to have a concept of self before it can formulate the question, “Am I acting as I should?” Rule-following and subjectivity are thus intertwined: the former presupposes the latter. The notion of the “self” is therefore not a “confabulation” of the brain (as contemporary materialists would have us believe), for as we have seen, the processes occurring in the brain are incapable of explaining the enterprise of following a rule. Rather, the notion of “self” is an ineliminable, fundamental concept, without which we would be unable to think at all.

Now at least we can see why the adage “Mind is what matter does,” cited by Dr. Steven Novella in his last post on memory, in reality explains nothing. Material processes can be described, but they do not yield any prescriptions.

Putting it more precisely, the reason why our mental acts are capable of conforming to these norms is that we have the ability to attend to and follow a rule – which is quite different from merely behaving in conformity with a rule. As the philosopher John Searle explains, when one merely conforms to a rule, it is sufficient that the rule somehow determine one’s behavior, but when one follows a rule, something additional is required:

“In order that the rule be followed, the meaning of the rule has to play some causal role in the behavior.” (Minds, Brains and Science, Penguin Books, London, 1984, p. 47, italics mine.)

Searle’s comment suggests how we might formulate a fairly robust argument against materialism:

1. Human beings typically follow rules when they think.

2. Whenever human beings think, their behavior is explained (and caused) by the meaning of the rule they are following.

3. Whenever human beings’ behavior is caused by some physical process, their behavior is entirely explained by the physical properties of the process; meaning plays no causal role in this account.

4. Therefore, whenever human beings think, their behavior is not entirely caused by physical processes.
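Whatever one makes of the premises, the step from 2 and 3 to 4 is formally valid, and that can be checked mechanically. Below is a minimal Lean 4 sketch, assuming premise 3 is read as “if a piece of behavior is entirely physically caused, the meaning of a rule plays no causal role in it”; the predicate names are hypothetical labels. Premise 1 is not needed for the inference itself; it only indicates how often the conclusion applies. The check establishes only that 4 follows from 2 and 3, not that those premises are true.

```lean
theorem not_entirely_physical {Behavior : Type}
    (Thinks MeaningCauses EntirelyPhysical : Behavior → Prop)
    -- Premise 2: when we think, the meaning of the rule plays a causal role.
    (p2 : ∀ b, Thinks b → MeaningCauses b)
    -- Premise 3: entirely physical causation leaves no causal role for meaning.
    (p3 : ∀ b, EntirelyPhysical b → ¬ MeaningCauses b) :
    -- Conclusion 4: thinking is not entirely physically caused.
    ∀ b, Thinks b → ¬ EntirelyPhysical b :=
  fun b hThink hPhys => p3 b hPhys (p2 b hThink)
```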

I should emphasize that Searle himself, who is a materialist, does not draw these anti-materialistic conclusions in his 1984 book Minds, Brains and Science; on the contrary, he avows that mental processes are “entirely caused, by processes going on inside the brain” (1984, p. 39). What Searle argues instead is that computers, which are designed to calculate in conformity to some rule(s), cannot truly be said to think, and that a computational account of mind is therefore false. Nevertheless, it seems to me that his arguments expose a cardinal difficulty facing any materialistic theory of mind.

What Thomistic dualism is – and isn’t


In his first post on memory, Dr. Egnor declared himself to be a Thomistic dualist, rather than a Cartesian dualist. Dr. Novella’s response was dismissive:

Thomistic dualism is just another flavor of dualistic nonsense, the notion that there are two kinds of stuff, material stuff and spiritual stuff. Egnor’s preferred version actually solves nothing, it simply asserts that the soul and the material body work together. (OK, problem solved, I guess.)

The foregoing remark reveals that Dr. Novella completely misunderstands Thomistic dualism: he evidently mistakes it for Cartesian dualism. Thomists do not maintain that “spiritual stuff” acts on “material stuff”; what they maintain instead is that when I act, some of my actions (including remembering, as opposed to the conscious recall that I used when retrieving the date of Rameses the Great’s reign) are physical acts that I perform with my body, while other actions of mine (including reasoning, in which I follow certain rules, or prescriptions) are non-bodily acts. It’s as simple as that. On the Thomistic account, every human being is a unity. An organism’s soul is simply its underlying principle of unity. The human soul, with its ability to reason, does not distinguish us from animals; it distinguishes us as animals. The unity of a human being’s actions is actually deeper and stronger than that underlying the acts of a non-rational animal: rationality allows us to bring together our past, present and future acts when we formulate plans. When Aquinas argues that the act of intellect is not the act of a bodily organ, he is not showing that there is a non-animal act engaged in by human beings. He is showing, rather, that not every act of an animal is a bodily act. The human animal is capable of non-bodily acts in addition to bodily ones. In order to better appreciate the distinction between Cartesian dualism (which is similar to St. Augustine’s personal view of the relationship between body and soul) and Thomistic dualism, Dr. Novella would do well to read the essay From Augustine’s Mind to Aquinas’ Soul by Fr. John O’Callaghan, S.J.

Of course, I realize that Dr. Novella will want to ask: if thinking and choosing are immaterial acts, then how does my act of will make my body move? I have addressed this question in other posts, notably here (https://uncommondescent.com/intelligent-design/how-is-libertarian-free-will-possible/) and here, and also in this post, where I address the “interaction problem.” For an alternative view of the interaction problem, defended by a leading contemporary Thomist philosopher, see here. Finally, for a fairly comprehensive list of philosophical and empirical arguments against materialism, see here.

I would like to conclude by wishing Dr. Michael Egnor, Dr. Steven Novella and all my readers a very happy New Year.