
Professor Larry Moran has kindly responded to my recent post questioning whether he, or anyone else, understands macroevolution. In the course of his response, titled, What do Intelligent Design Creationists really think about macroevolution?, Professor Moran posed a rhetorical question:

I recently wrote up a little description of the differences between the human and chimpanzee/bonobo genomes showing that those differences are perfectly consistent with everything we know about mutation rates and the fixation of alleles in populations [Why are the human and chimpanzee/bonobo genomes so similar?]. In other words, I answered Vincent Torley’s question [about whether there was enough time for macroevolution to have occurred – VJT].

That post was met with deafening silence from the IDiots. I wonder why?

I’ve taken the trouble to read Professor Moran’s post on the genetic similarity between humans, chimpanzees and bonobos, and I’d like to make the following points in response.

1. Personally, I accept the common ancestry of humans, chimpanzees and bonobos. Of course, I am well aware that many Intelligent Design theorists don’t accept common ancestry, but some prominent ID advocates do. Why do I accept common descent? Because I think it’s the best explanation for the pattern of similarities we find between humans, chimpanzees and bonobos. Young-earth creationist Todd Wood (who is also a geneticist) has freely acknowledged that it is difficult to explain these similarities without assuming common ancestry, in his 2006 article, The Chimpanzee Genome and the Problem of Biological Similarity (Occasional Papers of the BSG, No. 7, 20 February 2006, pp. 1-18). Referring to studies which highlight these similarities, he writes:

Creationists have responded to these studies in a variety of ways. A very popular argument is that similarity does not necessarily indicate common ancestry but could also imply common design (e.g. Batten 1996; Thompson and Harrub 2005; DeWitt 2005). While this is true, the mere fact of similarity is only a small part of the evolutionary argument. Far more important than the mere occurrence of similarity is the kind of similarity observed. Similarity is not random. Rather, it forms a detectable pattern with some groups of species more similar than others. As an example consider a 200,000 nucleotide region from human chromosome 1 (Figure 2). When compared to the chimpanzee, the two species differ by as little as 1-2%, but when compared to the mouse, the differences are much greater. Comparison to chicken reveals even greater differences. This is exactly the expected pattern of similarity that would result if humans and chimpanzees shared a recent common ancestor and mice and chickens were more distantly related. The question is not how similarity arose but why this particular pattern of similarity arose. To say that God could have created the pattern is merely ad hoc. The specific similarity we observe between humans and chimpanzees is not therefore evidence merely of their common ancestry but of their close relationship.

Evolutionary biologists also appeal to specific similarities that would be predicted by evolutionary descent. Max’s (1986) argument for shared errors in the human and chimpanzee genome is an example of a specific similarity expected if evolution were true. This argument could be significantly amplified from recent findings of genomic studies. For example, Gilad et al. (2003) surveyed 50 olfactory receptor genes in humans and apes. They found that the open reading frame of 33 of the human genes were interrupted by nonsense codons or deletions, rendering them pseudogenes. Sixteen of these human pseudogenes were also pseudogenes in chimpanzee, and they all shared the exact same substitution or deletion as the human sequence. Eleven of the human pseudogenes were shared by chimpanzee, gorilla, and human and had the exact same substitution or deletion. While common design could be a reasonable first step to explain similarity of functional genes, it is difficult to explain why pseudogenes with the exact same substitutions or deletions would be shared between species that did not share a common ancestor.

Nevertheless, Wood feels compelled to reject common ancestry, since he believes the Bible clearly teaches the special creation of human beings (Genesis 1:26-27; 2:7, 21-22). Personally, I’d say that depends on how you define “special creation.” Does the intelligent engineering of a pre-existing life-form into a human being count as “creation”? In my book it certainly does.

2. In his post, Professor Moran (acting as devil’s advocate) proposes the intelligent design hypothesis that “the intelligent designer created a model primate and then tweaked it a little bit to give chimps, humans, orangutans, etc.” However, he argues that this hypothesis fails to explain “the fact that humans are more similar to chimps/bonobos than to gorillas and all three are about the same genetic distance from orangutans.” On the contrary, I think it’s very easy to explain that fact: all one needs to posit is three successive acts of tweaking, over the course of geological time: a first act, which led to the divergence of African great apes from orangutans; a second act, which caused the African great apes to split into two lineages (the line leading to gorillas and the line leading to humans, chimps and bonobos); and finally, a third act, which led humans to split off from the ancestors of chimps and bonobos.

“Why would a Designer do it that way?” you ask. “Why not just make a human being in a single step?” The short answer is that the Designer wasn’t just making human beings, but the entire panoply of life-forms on Earth, including all of the great apes. Successive tweakings would have meant less work on the Designer’s part, whereas a single tweaking causing a simultaneous radiation of orangutans, gorillas, chimps, bonobos and humans from a common ancestor would have necessitated considerable duplication of effort (e.g. inducing identical mutations in different lineages of African great apes), which would have been uneconomical. If we suppose that the Designer operates according to a “minimum effort” principle, then successive tweakings would have been the way to go.

3. But Professor Moran has another ace up his sleeve, for he argues that the number of mutations that have accumulated since humans and chimps diverged is just what one would expect from the mutation rate over the last few million years. In other words, time is all that is required to generate the differences we observe between human beings and chimpanzees, without any need for an Intelligent Designer:

The average generation time of chimps and humans is 27.5 years. Thus, there have been 185,200 generations since they last shared a common ancestor if the time of divergence is accurate. (It’s based on the fossil record.) This corresponds to a substitution rate (fixation) of 121 mutations per generation and that’s very close to the mutation rate as predicted by evolutionary theory.

Now, I suppose that this could be just an amazing coincidence. Maybe it’s a fluke that the intelligent designer introduced just the right number of changes to make it look like evolution was responsible. Or maybe the IDiots have a good explanation that they haven’t revealed?
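Moran’s arithmetic can at least be checked for internal consistency. The sketch below takes the figures from the passage just quoted (27.5 years per generation, 185,200 generations, and 22.4 million substitutions in the human line) and recomputes the implied divergence time and the per-generation substitution rate. The genome size of 3.2 billion base pairs and the 1.4% divergence (100% minus the 98.6% identity Moran cites) are assumptions added here, used only as one plausible way to reconstruct the 22.4 million figure; they do not appear in the quoted passage, and the function names are hypothetical.

```python
# Figures taken from Moran's quoted passage.
GENERATION_TIME_YEARS = 27.5
GENERATIONS = 185_200
HUMAN_LINE_SUBSTITUTIONS = 22.4e6

# ASSUMPTION (not in the quote): haploid genome of ~3.2 billion bp and
# 1.4% human-chimp divergence (100% - 98.6%), split equally between lineages.
GENOME_SIZE_BP = 3.2e9
DIVERGENCE = 1 - 0.986


def implied_divergence_years(generations: int, gen_time: float) -> float:
    """Years elapsed for a given number of generations."""
    return generations * gen_time


def substitutions_per_generation(substitutions: float, generations: int) -> float:
    """Average number of fixed mutations per generation in one lineage."""
    return substitutions / generations


def human_line_substitutions(genome_size: float, divergence: float) -> float:
    """Differences between the two genomes, halved because they
    accumulated along both the human and the chimpanzee lineage."""
    return genome_size * divergence / 2


years = implied_divergence_years(GENERATIONS, GENERATION_TIME_YEARS)
rate = substitutions_per_generation(HUMAN_LINE_SUBSTITUTIONS, GENERATIONS)
reconstructed = human_line_substitutions(GENOME_SIZE_BP, DIVERGENCE)

print(f"implied divergence: {years / 1e6:.2f} million years")
print(f"substitutions per generation: {rate:.0f}")
print(f"reconstructed human-line total: {reconstructed / 1e6:.1f} million")
```

On these inputs the figures hang together: roughly 5.09 million years, about 121 substitutions per generation, and 22.4 million substitutions in the human line. A check of this kind says nothing about whether the inputs themselves are right, which is what the objections below dispute.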

Some mathematical objections to Professor Moran’s argument


Professor Moran makes the remarkable claim that 130 mutations are fixed in the human population in each generation. Here are a few reasons why I’m doubtful, even after reading his posts on the subject (see here, here and here):

(a) most mutations will be lost due to drift, so a mutation will have to appear many times before it gets fixed in the population;

(b) necessarily, the mutation rate will always be much greater than the fixation rate;

(c) nearly neutral mutations cannot be fixed except by a bottleneck.

I owe the above points to a skeptical biologist who kindly offered me some advice about fixation. As I’m not a scientist, I shall pursue the matter no further. Instead, I’d like to invite other readers to weigh in. Is Professor Moran’s figure credible?
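For readers who want to weigh in, it may help to state the textbook neutral-theory argument (due to Motoo Kimura) on which figures like Moran’s rest. In a diploid population of N individuals, with a neutral mutation rate of μ per genome copy per generation, about 2Nμ new mutations arise each generation, and each has a probability of only 1/(2N) of eventually being fixed by drift. The expected substitution rate is therefore k = 2Nμ × 1/(2N) = μ: most new mutations are lost, as point (a) says, yet over the long run the rate of fixation per generation equals the mutation rate. The result applies to strictly neutral mutations only, so it does not speak to point (c). The Python sketch below is a minimal Wright-Fisher simulation, assuming a constant population size, no selection, and a deliberately tiny population (N = 10) so that it runs quickly; the function name is hypothetical. It estimates the fixation probability of a single new neutral mutant and compares it with 1/(2N).

```python
import random


def fixation_probability(n_diploid: int, trials: int, seed: int = 0) -> float:
    """Estimate the chance that one new neutral mutation drifts to fixation
    in a Wright-Fisher population of constant size with no selection."""
    rng = random.Random(seed)
    copies = 2 * n_diploid  # gene copies in a diploid population
    fixed = 0
    for _ in range(trials):
        count = 1  # the mutation starts as a single copy
        while 0 < count < copies:
            p = count / copies
            # Each of the 2N copies in the next generation is drawn at random
            # from the current generation (binomial sampling = pure drift).
            count = sum(1 for _ in range(copies) if rng.random() < p)
        if count == copies:
            fixed += 1
    return fixed / trials


N = 10
est = fixation_probability(N, trials=20_000)
print(f"simulated: {est:.3f}   expected 1/(2N): {1 / (2 * N):.3f}")
```

With 20,000 trials the estimate should land close to 0.05. A larger N lowers the fixation probability of each mutation but raises the number of new mutations per generation in the same proportion, which is why N cancels out of k.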

Professor Moran is also assuming that chimps and humans diverged a little over five million years ago. He might like to read the online articles, What is the human mutation rate? (November 4, 2010) and A longer timescale for human evolution (August 10, 2012), by paleoanthropologist John Hawks, who places the human-chimp divergence at about ten million years ago, but I’ll let that pass for now.

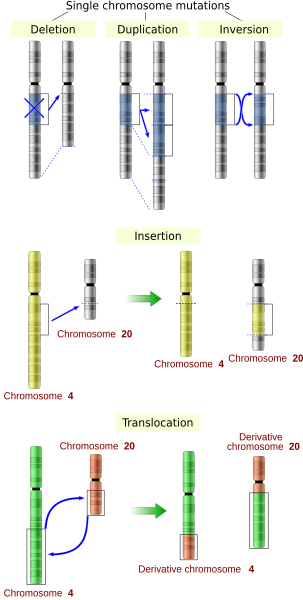

I shall also overlook the fact that Professor Moran severely underestimates the genetic differences between humans and chimps. As Jon Cohen explains in an article in Science (Vol. 316, 29 June 2007) titled, Relative Differences: The Myth of 1%, these differences include “35 million base-pair changes, 5 million indels in each species, and 689 extra genes in humans,” although he adds that many of these may have no functional meaning, and he points out that many of the extra genes in human beings are probably the result of duplication. Cohen comments: “Researchers are finding that on top of the 1% distinction, chunks of missing DNA, extra genes, altered connections in gene networks, and the very structure of chromosomes confound any quantification of ‘humanness’ versus ‘chimpness.’” Indeed, Professor Moran himself acknowledges in another post that “[t]here are about 90 million base pair differences as insertion and deletions (Marques-Bonet et al., 2009),” but he goes on to add that the indels (insertions and deletions) “may only represent 90,000 mutational events if the average length of an insertion/deletion is 1kb (1000 bp).” Still, 90,000 is a pretty small number compared to his estimate of 22.4 million mutations that have occurred in the human line.

I could also point out that the claim made by Professor Moran that the DNA of humans and chimps is 98.6% identical in areas where it can be aligned is misleading, taken on its own: what it overlooks is the fact that, as creationist geneticist Jeffrey Tomkins (who obtained his Ph.D. from Clemson University) has recently demonstrated, the chromosomes of chimpanzees display “an overall genome average of only 70 percent similarity to human chromosomes” (Human and Chimp DNA–Nearly Identical, Acts & Facts 43 (2)).

I might add (h/t StephenB) that Professor Moran has overlooked the fact that humans have 23 pairs of chromosomes, whereas chimpanzees (and other great apes) have 24. The standard evolutionary explanation is that human chromosome 2 arose from the end-to-end fusion of two ancestral ape chromosomes. However, Dr. Jeffrey Tomkins has published an article titled, Alleged Human Chromosome 2 “Fusion Site” Encodes an Active DNA Binding Domain Inside a Complex and Highly Expressed Gene—Negating Fusion (Answers Research Journal 6 (2013):367–375). Allow me to quote from the abstract:

A major argument supposedly supporting human evolution from a common ancestor with chimpanzees is the “chromosome 2 fusion model” in which ape chromosomes 2A and 2B purportedly fused end-to-end, forming human chromosome 2. This idea is postulated despite the fact that all known fusions in extant mammals involve satellite DNA and breaks at or near centromeres. In addition, researchers have noted that the hypothetical telomeric end-to-end signature of the fusion is very small (~800 bases) and highly degenerate (ambiguous) given the supposed 3 to 6 million years of divergence from a common ancestor. In this report, it is also shown that the purported fusion site (read in the minus strand orientation) is a functional DNA binding domain inside the first intron of the DDX11L2 regulatory RNA helicase gene, which encodes several transcript variants expressed in at least 255 different cell and/or tissue types. Specifically, the purported fusion site encodes the second active transcription factor binding domain in the DDX11L2 gene that coincides with transcriptionally active histone marks and open active chromatin. Annotated DDX11L2 gene transcripts suggest complex post-transcriptional regulation through a variety of microRNA binding sites. Chromosome fusions would not be expected to form complex multi-exon, alternatively spliced functional genes. This clear genetic evidence, combined with the fact that a previously documented 614 Kb genomic region surrounding the purported fusion site lacks synteny (gene correspondence) with chimpanzee on chromosomes 2A and 2B (supposed fusion sites of origin), thoroughly refutes the claim that human chromosome 2 is the result of an ancestral telomeric end-to-end fusion.

If Professor Moran believes that Dr. Tomkins’ article on chromosome fusion is flawed, then he owes his readers an explanation as to why he thinks so.

The vital flaw in Moran’s reasoning

Leaving aside these points, the real flaw in Professor Moran’s analysis is that he assumes that the essential differences between humans and chimpanzees reside in the 22.4 million-plus mutations – for the most part, neutral or near-neutral – that have occurred in the human line since our ancestors split off from chimpanzees. This is where I must respectfully disagree with him.

In my recent post, Does Professor Larry Moran (or anyone else) understand macroevolution? (March 19, 2014), I wrote:

No scientist can credibly claim to have a proper understanding of macroevolution unless they can produce at least a back-of-the-envelope calculation showing that it is capable of generating new species, new organs and new body plans, within the time available. So we need to ask: is there enough time for macroevolution?

I didn’t ask for a demonstration that macroevolution is capable of generating the neutral or near-neutral mutations that distinguish one lineage from another. Rather, what I wanted was something more specific.

In the post cited above, I endorsed the claim made by Dr. Branko Kozulic, in his 2011 viXra paper, Proteins and Genes, Singletons and Species, that the essential differences between species reside not in the neutral mutations they may have accumulated over the course of time, but in the hundreds of chemically unique genes and proteins they possess, which have no analogue in other species. What Professor Moran really needs to show, then, is that a process of random genetic drift acting on neutral mutations is capable of generating these chemically unique genes and proteins.

In an article titled, All alone (New Scientist, 19 January 2013), Helen Pilcher (whose hypothesis for the origin of orphan genes I critiqued in my last post) writes:

Curiously, orphan genes are often expressed in the testes – and in the brain. Lately, some have even dared speculate that orphan genes have contributed to the evolution of the biggest innovation of all, the human brain. In 2011, Long and his colleagues identified 198 orphan genes in humans, chimpanzees and orang-utans that are expressed in the prefrontal cortex, the region of the brain associated with advanced cognitive abilities. Of these, 54 were specific to humans. In evolutionary terms, the genes are young, less than 25 million years old, and their arrival seems to coincide with the expansion of this brain area in primates. “It suggests that these new genes are correlated with the evolution of the brain,” says Long.


These are the genes that I’m really interested in. Can a neutral theory of evolution, such as the one espoused by Professor Moran, account for their origin? Creationist geneticist Jeffrey Tomkins thinks not. In a recent blog article titled, Newly Discovered Human Brain Genes Are Bad News for Evolution, he writes:

Did the human brain evolve from an ape-like brain? Two new reports describe four human genes named SRGAP2A, SRGAP2B, SRGAP2C, and SRGAP2D, which are located in three completely separate regions on chromosome number 1.(1) They appear to play an important role in brain development.(2) Perhaps the most striking discovery is that three of the four genes (SRGAP2B, SRGAP2C, and SRGAP2D) are completely unique to humans and found in no other mammal species, not even apes.

Dr. Tomkins then summarizes the evolutionary hypothesis regarding the origin of these genes:

While each of the genes share some regions of similarity, they are all clearly unique in their overall structure and function when compared to each other. Evolutionists claim that an original version of the SRGAP2 gene inherited from an ape-like ancestor was somehow duplicated, moved to completely different areas of chromosome 1, and then altered for new functions. This supposedly occurred several times in the distant past after humans diverged from an imaginary ancestor in common with chimps.

However, this hypothesis faces two objections, which Dr. Tomkins considers fatal:

But this story now wields major problems. First, when compared to each other, the SRGAP2 gene locations on chromosome 1 are each very unique in their protein coding arrangement and structure. The genes do not look duplicated at all. The burden of proof is on the evolutionary paradigm, which must explain how a supposed ancestral gene was duplicated, spliced into different locations on the chromosome, then precisely rearranged and altered with new functions—all without disrupting the then-existing ape brain and all by accidental mutations.

The second problem has to do with the exact location of the B, C, and D versions of SRGAP2. They flank the chromosome’s centromere, which is a specialized portion of the chromosome, often near the center, that is important for many cell nucleus processes, including cell division and chromatin architecture.(3) As such, these two regions near the centromere are incredibly stable and mutation-free due to an extreme lack of recombination. There is no precedent for duplicated genes even being able to jump into these super-stable sequences, much less reorganizing themselves afterwards.

Professor Moran asks some more questions about species

In his latest post, What do Intelligent Design Creationists really think about macroevolution? (March 20, 2014), Professor Moran writes:

I’m not very clear on the "Theory" of Intelligent Design Creationism. Maybe it also predicts that it will be difficult to decide whether Neanderthals and Denisovans are separate species or part of Homo sapiens. Does anyone know how Intelligent Design Creationism deals with these problems? Can it tell us whether lions and tigers are different species or whether brown bears and polar bears are different species?

That’s a fair question, and I’ll do my best to answer it.

(a) Why modern humans, Neandertals and Denisovans are all one species

Modern humans, Neandertals and Denisovans (the last of whom broke off from the lineages leading to Neandertal man and modern man at least 800,000 years ago) are all known to have had 23 pairs of chromosomes in their body cells (46 chromosomes altogether), as opposed to the other great apes, which have 24 pairs (48 altogether).

What’s more, the genetic differences between modern man, Neandertal man and Denisovan man are now known to have been slight – so slight that it has been suggested that they be grouped in one species, Homo sapiens (see here, here, here, here, but see also here).

Finally, Dr. Jeffrey Tomkins addressed the genome of Neandertal man in a 2012 blog post titled, Neanderthal Myth and Orwellian Double-Think (16 August 2012):

Modern humans and Neanderthals are essentially genetically identical. Neanderthals are unequivocally fully human based on a number of actual genetic studies using ancient DNA extracted from Neanderthal remains.

An excursus regarding fruit flies and the identification of species

We noted above that Denisovans diverged from the lineages leading to Neandertals and modern humans some 800,000 years ago, even though all three are thought to belong to the same species. However, a recent article by Nicola Palmieri et al., titled, The life cycle of Drosophila orphan genes (eLife 2014;3:e01311, 19 February 2014), indicates that orphan genes have been gained and lost in different species of the fruit-fly genus Drosophila. According to Timetree, Drosophila persimilis and Drosophila pseudoobscura diverged 0.9 million years ago. Drosophila pseudoobscura possesses no fewer than 228 orphan genes.

It seems prudent to conclude, then, that lineages which are known to have diverged more than 1 million years ago are indeed bona fide species.

N.B. A Science Daily press release at the time of publication of the article makes the following extravagant claim: “Recent work in another group has shown how orphan genes can arise: Palmieri and Schlötterer’s work now completes the picture by showing how and when they disappear.” It appears that this “other group” is actually a group of researchers at the University of California, Davis, who have shown in a recent study that new genes are being continually created from non-coding DNA, more rapidly than expected. Here’s the reference: Li Zhao, Perot Saelao, Corbin D. Jones, and David J. Begun. Origin and Spread of de Novo Genes in Drosophila melanogaster Populations. Science, 2013; DOI: 10.1126/science.1248286. I haven’t read the article, but judging from the press release, it seems that the authors haven’t yet identified a mechanism for the creation of these genes: “Zhao said that it’s possible that these new genes form when a random mutation in the regulatory machinery causes a piece of non-coding DNA to be transcribed to RNA.”

Dr. Jeffrey Tomkins provides a hilarious send-up of this logic in his article, Orphan Genes and the Myth of De Novo Gene Synthesis:

The circular form of illogical reasoning for the evolutionary paradigm of orphan genes and its counterpart ‘de novo gene synthesis’, goes like this. Orphan genes have no ancestral sequences that they evolved from. Therefore, they must have evolved suddenly and rapidly from non-coding DNA via de novo gene synthesis. And, are you ready? De novo gene synthesis must be true because orphan genes exist – orphan genes exist because of de novo gene synthesis. As you can see, one aspect of this supports the other in a circular fashion of total illogic – called a circular tautology.

At this stage, I think that press claims that scientists have solved the origin of orphan genes look decidedly premature, to say the least.

(b) Lions, tigers and leopards


What about lions and tigers? According to Timetree, lions and leopards diverged only 2.9 million years ago, while lions and tigers diverged 3.7 million years ago. All of these “big cats” represent different species of the genus Panthera. By comparison, humans and chimps (which are unquestionably different species) are said to have diverged 6.3 million years ago.

A recent article by Yun Sung Cho et al. in Nature Communications (Vol. 4, Article number 2433, doi:10.1038/ncomms3433, published 17 September 2013), titled, The tiger genome and comparative analysis with lion and snow leopard genomes, makes the following observations:

The Amur tiger genome is the first reference genome sequenced from the Panthera lineage and the second from the Felidae species. For comparative genomic analyses of big cats, we additionally sequenced four other Panthera genomes and tried to predict possible big cats’ molecular adaptations consistent with the obligatory meat eating and muscle strength of the predatory Panthera lineage. The tiger and cat genomes showed unexpectedly similar repeat compositions and high genomic synteny, and these indicated strong genomic conservation in Felidae. These results could be supported by the recency of the 37 species-Felidae radiation (<11 MYA)(15) and well-known hybridizations in captivity among subspecies in Felidae lineage such as liger and tigon. By contrast, the ratio of repeat components for the great apes was considerably different among species, especially between human and orang-utan(28), which diverged about the same time as felines. The breaks in synteny that we observed are likely occasional rare sporadic exchanges that accumulated over this short period (<11 MYA) of evolutionary time. The paucity of exchanges across the mammalian radiations (by contrast to more reshuffled species such as Canidae, Gibbons, Ursidae and New World monkeys) is a hallmark of evolutionary constraints.

Figure 1b in the article reveals that tigers have certain genes which domestic cats lack. However, I was unable to ascertain whether tigers have any chemically unique orphan genes that lions or leopards lack.

A Science Daily report titled, Tiger genome sequenced: Tiger, lion and leopard genomes compared (September 20, 2013) which discussed the findings in the above-cited article, added the following information:

Researchers also sequenced the genomes of other Panthera (a white Bengal tiger, an African lion, a white African lion, and a snow leopard) using next-gen sequencing technology, and aligned them using the genome sequences of tiger and domestic cat. They discovered a number of Panthera lineage-specific and felid-specific amino acid changes that may affect the metabolism pathways. These signals of amino-acid metabolism have been associated with an obligatory carnivorous diet.

Furthermore, the team revealed the evidence that the genes related to muscle strength as well as energy metabolism and sensory nerves, including olfactory receptor activity and visual perception, appeared to be undergoing rapid evolution in the tiger.

I should add that although lions and tigers can interbreed, the male offspring (ligers and tigons) are sterile, even though both parent species have the same number of chromosomes; female hybrids are only occasionally fertile.

From the above evidence, it appears likely that lions, tigers and leopards are genuinely different species, and that each species was intelligently engineered.

(c) Brown bears and polar bears


The case of brown bears and polar bears is much more difficult to decide, as there appear to be no online articles on orphan genes in these animals. However, Timetree indicates that they diverged 1.2 million years ago, which is a little earlier than the time when Drosophila persimilis and Drosophila pseudoobscura diverged (0.9 million years ago). It therefore seems likely that these two bears belong to different species.

Conclusion

I shall stop there for today. In conclusion, I’d like to point out that Professor Moran nowhere addressed the problem of the origin of orphan genes in his reply, so he didn’t really answer the first argument in my previous post, which was that we cannot claim to understand macroevolution until we ascertain the origin of the hundreds of chemically unique proteins and orphan genes that characterize each species.

To Professor Moran’s credit, he did attempt to answer my second argument (why is there so much stasis in the fossil record?), by suggesting that even large populations will still change slowly in their diversity, as new alleles increase in frequency and old ones are lost, but that morphological change is “more likely to occur during speciation events when the new daughter population (species) is quite small and rapid fixation of rare alleles is more likely.” But as I argued previously, why, during the times of environmental upheaval described by Professor Prothero, don’t we see a diversification of niches? Why don’t species branch off? Why do we instead see morphological stasis persisting for millions of years? That remains an unsolved mystery.

Finally, it seems to me that Professor Moran has solved the “time” question (my third argument) only in a trivial sense: he has calculated that the requisite number of mutations separating humans and chimps could have become fixed in the human line. I have to say that I found utterly astonishing his claim that 22.4 million mutations have become fixed in the lineage leading to human beings in the last five million years. But even supposing that this figure is correct, what it overlooks is that the mutations accounting for the essential differences between humans and chimps aren’t your ordinary, run-of-the-mill mutations. Many of them seem to have involved orphan genes, which means that until we can explain how these genes arise, we lack an adequate account of macroevolution.