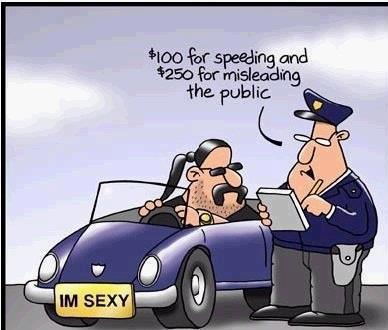

It seems to be time to call in the energy audit police.


Let us explain, in light of an ongoing sharp exchange on “compensating” arguments in the illusion of organising energy thread. This morning Piotr, an objector (BTW — and this is one time where expertise base is relevant — a Linguist), at 288 dismissed Niwrad:

Stop using the term “2nd law” for something that is your private misconception. You’ve got it all backwards . . .

This demands correction, as Niwrad has done little more than appropriately point out that functionally specific complex organisation and associated information cannot cogently be explained away by making appeals to irrelevant energy flows elsewhere. Organisation is not properly to be explained on spontaneous energy flows and hoped for statistical miracles.

Not, in a world where something like random Brownian motion (HT: Wiki) is a common, classical case of spontaneous effects of energy and particle interactions at micro level:

In effect, pollen grains in the fluid on a microscope slide act as giant molecules, jostled through their interaction with the invisible molecules of the fluid in which they are embedded. Indeed, Einstein's analysis of this motion, one of his famous 1905 Annalen der Physik papers, became key empirical evidence for the atomic-molecular theory once it was confirmed experimentally. (His 1921 Nobel Prize, however, was awarded chiefly for the photoelectric effect.)
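A toy model, not Einstein's own derivation, makes the diffusive signature visible: treat each molecular collision as a random unit kick left or right in one dimension. The mean squared displacement then grows in proportion to the number of kicks (i.e. to elapsed time), so the typical distance wandered grows only as its square root. The function name, seed and walker counts are illustrative assumptions.

```python
import random

def mean_square_displacement(n_walkers: int, n_steps: int, seed: int = 0) -> float:
    """Average squared net displacement of n_walkers independent 1-D random walks.

    Each step is a unit kick left or right with equal probability, a crude stand-in
    for the unbalanced molecular collisions that jostle a suspended grain.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    total = 0
    for _ in range(n_walkers):
        x = 0
        for _ in range(n_steps):
            x += 1 if rng.random() < 0.5 else -1
        total += x * x
    return total / n_walkers

# Quadrupling the number of kicks roughly quadruples the mean squared displacement.
for steps in (25, 100, 400):
    print(steps, mean_square_displacement(1000, steps))
```

No single kick is directed anywhere in particular; the spread arises purely from accumulated random jostling.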

At 289, I responded to Piotr and drew the attention of KS, DNA_Jock and others. I think the response should be headlined, and of course I will take advantage of the facilities of an OP to augment it:

__________________

>> Perhaps it has not dawned on you how dismissively saying “private misconception” comes across in front of someone who long since studied thermodynamics in light of the microstate underpinnings of macrostates, rooted in the work of Gibbs and Boltzmann.

Ill-informed, ill-advised posturing.

FYI, it is the observational facts, reasoning and underlying first plausibles that decide a scientific issue, not opinions and a united ideological front.

In direct terms, FYFI, appeals to irrelevant energy flows and entropy changes, as are commonly trotted out in “compensation” arguments, are fallacious.

FYYFI, 2LOT (which has multiple formulations, as it was arrived at from several directions . . . Clausius being most important in my view) is rooted in the statistics of systems of large numbers of particles (typically we can start at, say, 10^12 and run to 10^19 – 10^26 atoms or molecules etc. for analyses that spring to mind), and in effect sums up that, for isolated systems, the spontaneous trend is towards clusters of microstates with statistical dominance among the possibilities under given macro-conditions.

[Clipping from ID Foundations no 2, on Counterflow, open systems, FSCO/I and self-moved agents in action:]

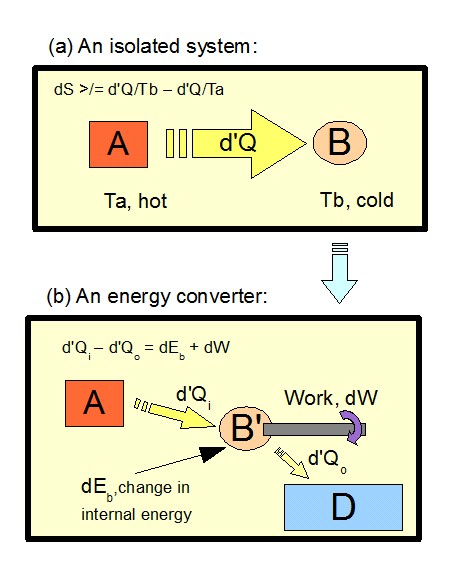

On peeking within conceptually (somewhat oddly, as strict isolation means no energy or mass moves across the border, so we technically cannot look in from outside . . . we are effectively taking a God’s-eye view . . . ), Clausius set up two subsystems, A and B, at differing temperatures and pondered a small heat flow d’q from A to B. He then took the ratios d’q/T and showed that, as Ta > Tb, the net entropy rises when we do the sums.
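In symbols, if A at Ta gives up a small heat d’q to B at Tb, the entropy changes are -d’q/Ta for A and +d’q/Tb for B, and their sum is positive whenever Ta > Tb. A minimal numerical sketch (the function name and the sample values of 1 J, 400 K and 300 K are illustrative assumptions, and d’q is taken small enough that neither temperature shifts appreciably):

```python
def clausius_net_entropy_change(dq: float, t_a: float, t_b: float) -> float:
    """Net entropy change (J/K) when heat dq (J) flows from body A at t_a to body B at t_b (K).

    Assumes dq is small enough that both temperatures stay effectively constant.
    """
    if t_a <= 0 or t_b <= 0:
        raise ValueError("absolute temperatures must be positive")
    ds_a = -dq / t_a   # A loses the heat at the higher temperature
    ds_b = dq / t_b    # B gains the same heat at the lower temperature
    return ds_a + ds_b

print(clausius_net_entropy_change(1.0, 400.0, 300.0))  # positive, about 8.33e-4 J/K
```

B's gain outweighs A's loss because the same heat is divided by a smaller temperature; with equal temperatures the sum is exactly zero.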

A direct implication is that raw energy importation tends to increase entropy. The micro view indicates this is because the number of ways micro level mass and energy can be arranged consistent with gross macro state has risen.

Hence the point I clipped at 169: importation of raw energy into a system leads to a trend of increased entropy. And, as G N Lewis and others have highlighted, a useful view of entropy is that it measures the average missing information needed to specify the particular microstate consistent with a macroscopic, lab-level gross state. [Let me clip from Wikipedia speaking against known interest, c. April 2011, on Information Entropy and the links to Thermodynamics:]

At an everyday practical level the links between information entropy and thermodynamic entropy are not close. Physicists and chemists are apt to be more interested in changes in entropy as a system spontaneously evolves away from its initial conditions, in accordance with the second law of thermodynamics, rather than an unchanging probability distribution. And, as the numerical smallness of Boltzmann’s constant kB indicates, the changes in S / kB for even minute amounts of substances in chemical and physical processes represent amounts of entropy which are so large as to be right off the scale compared to anything seen in data compression or signal processing.

But, at a multidisciplinary level, connections can be made between thermodynamic and informational entropy, although it took many years in the development of the theories of statistical mechanics and information theory to make the relationship fully apparent. In fact, in the view of Jaynes (1957), thermodynamics should be seen as an application of Shannon’s information theory: the thermodynamic entropy is interpreted as being an estimate of the amount of further Shannon information needed to define the detailed microscopic state of the system, that remains uncommunicated by a description solely in terms of the macroscopic variables of classical thermodynamics. For example, adding heat to a system increases its thermodynamic entropy because it increases the number of possible microscopic states that it could be in, thus making any complete state description longer. (See article: maximum entropy thermodynamics.[Also, another article remarks: >>in the words of G. N. Lewis writing about chemical entropy in 1930, “Gain in entropy always means loss of information, and nothing more” . . . in the discrete case using base two logarithms, the reduced Gibbs entropy is equal to the minimum number of yes/no questions that need to be answered in order to fully specify the microstate, given that we know the macrostate.>>]) Maxwell’s demon can (hypothetically) reduce the thermodynamic entropy of a system by using information about the states of individual molecules; but, as Landauer (from 1961) and co-workers have shown, to function the demon himself must increase thermodynamic entropy in the process, by at least the amount of Shannon information he proposes to first acquire and store; and so the total entropy does not decrease (which resolves the paradox).
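The “yes/no questions” reading in the clip can be made concrete. For W equally likely microstates the missing information is log2 W bits, and multiplying by kB ln 2 converts bits into thermodynamic units of J/K. A minimal sketch, assuming discrete, equiprobable microstates (the helper name is hypothetical):

```python
import math

def entropy_bits(probs):
    """Shannon entropy H = -sum(p * log2 p) in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# A macrostate compatible with W = 1024 equally likely microstates:
uniform = [1 / 1024] * 1024
print(entropy_bits(uniform))   # 10.0 bits: ten yes/no questions pin down the microstate

# Converting bits of missing information into thermodynamic units: S = k_B * ln(2) * H
K_B = 1.380649e-23  # Boltzmann constant, J/K (exact 2019 SI value)
print(K_B * math.log(2) * entropy_bits(uniform))
```

The conversion factor is tiny, which is the quoted point that everyday entropy changes in J/K correspond to astronomically many bits.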

Now, too, work can be understood as forced, ordered motion at macro or micro levels, generally measured by the dot product F·dx.
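As a small illustration of work as F·dx, here is a sketch for a constant force over a straight displacement (the helper is hypothetical; for a varying force or curved path one would sum F·dx over many small steps):

```python
def work(force, displacement):
    """Work W = F . dx (joules) for a constant force (N) over a straight displacement (m)."""
    if len(force) != len(displacement):
        raise ValueError("force and displacement must have the same dimension")
    return sum(f * d for f, d in zip(force, displacement))

print(work((10.0, 0.0, 0.0), (2.0, 0.0, 0.0)))  # 20.0 J: force along the motion
print(work((0.0, 10.0, 0.0), (2.0, 0.0, 0.0)))  # 0.0 J: force perpendicular to the motion
```

Only the component of force along the motion counts, which is why the dot product, rather than a plain product of magnitudes, is the measure.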

[Courtesy HyperPhysics:]

Energy conversion devices such as heat engines couple energy inflows to structures that generate such forced, ordered work, commonly shaft work that moves a shaft and the loads connected to it. In order to operate, they exhaust degraded energy, often as waste heat to a heat sink.
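The exhaust is not optional. A standard textbook bound (the Carnot limit, not spelled out in the post) says an engine drawing heat Qh from a source at Th and rejecting heat at Tc can deliver at most Qh(1 - Tc/Th) as work, so at least Qh·Tc/Th must go to the sink. A minimal sketch, with illustrative temperatures and a hypothetical function name:

```python
def carnot_limits(q_hot: float, t_hot: float, t_cold: float):
    """Upper bound on work and lower bound on exhausted heat for an engine taking
    q_hot (J) from a reservoir at t_hot and rejecting heat to a sink at t_cold (K)."""
    if not 0 < t_cold < t_hot:
        raise ValueError("need 0 < t_cold < t_hot (kelvin)")
    efficiency = 1.0 - t_cold / t_hot          # Carnot efficiency
    max_work = efficiency * q_hot              # shaft work can be no larger than this
    min_waste_heat = q_hot - max_work          # first law: q_hot = work + q_cold
    # Second-law check: entropy dumped into the sink must at least match entropy drawn from the source.
    entropy_generated = min_waste_heat / t_cold - q_hot / t_hot
    return max_work, min_waste_heat, entropy_generated

w, qc, ds = carnot_limits(1000.0, 500.0, 300.0)
print(w, qc, ds)  # about 400 J of work, 600 J of waste heat, ~0 J/K generated at the ideal limit
```

Real engines fall short of this ideal, exhausting more heat and generating positive entropy.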

[A backhoe is a useful example showing controlled, programmed, forced, ordered motion that derives energy from fuel through a heat engine and effects intelligently directed configuration, thus constructive work and FSCO/I-rich entities, while exhausting waste heat etc. to the atmosphere at ambient temperature. The backhoe is of course itself in turn a capital example of FSCO/I and of its known source:]

[An illustration of a von Neumann Kinematic self replicator [vNSR] will help bridge this to the world of self replicating automata, which includes the living cell:]

The pivotal issue comes up here: relevant energy conversion devices (especially in cell-based life, such as ATP synthase driving ATP synthesis, the photosynthetic apparatus, or the machinery that goes on to synthesise proteins) are FSCO/I rich, composed of many interacting parts in specific arrangements that work together.


[ATP Synthase illustration:]

[An outline on photosynthesis via Wiki and Somepics will also help underscore the point:]

[Finally, let us observe the protein synthesis process:]

[ . . . and the wider metabolic framework of the cell:]

At the origin of life (OOL), such machinery is supposed to have come about spontaneously through diffusion, chemical kinetics etc.

But the same statistics that have underpinned 2LOT, and been integral to it, for over 100 years highlight that this amounts to expecting randomising forces or phenomena such as diffusion to do complex, specific patterns of constructive work. The relevant statistics and their upshot are massively against such. The non-functional, clumped-at-random possibilities vastly outnumber the functionally specific ones, and the scattered possibilities outnumber them by far more.

[Notionally, we can look at the needle in haystack blind search challenge based on:]

[Where the search challenge can be represented:]


Hence the thought exercise I clipped at 242 above.


[U/D, Mar 18, 2015: I think a clip from 123 below in this thread, for record, will help draw out the significance of the direct statistical underpinnings of the second law of thermodynamics [2LOT]. For it will help us see how the micro-level arrangements linked to FSCO/I at something like the level of the living cell, though many orders of magnitude smaller in entropy terms than the numbers from typical heat transfer exercises, are so deeply isolated in the field of molecular contingencies or configurations that the blind search resources of the solar system or observed cosmos will be deeply challenged to find them under pre-biotic conditions, such as a typical Darwin’s warm salty pond struck by lightning scenario:

123: >> . . . the root [of the second law of thermodynamics] turned out to be [revealed by] an exploration of the statistical behaviour of systems of particles where various distributions of mass and energy at micro level are possible, consistent with given macro-conditions such as pressure, temperature, etc. that define the macrostate of the system. The second law [then] turns out to be a consequence of the strong tendency of systems to drift towards statistically dominant clusters of microstates.

As a simple example that effectively models a diffusion situation, used by Yavorsky and Pinsky in their Physics (MIR, Moscow, USSR, 1974, Vol I, pp. 279 ff.), a text of approximately A Level or first-year college standard, and discussed in my always-linked note as particularly clear, we may consider

. . . a simple model of diffusion, let us think of ten white and ten black balls in two rows in a container. There is of course but one way in which there are ten whites in the top row; the balls of any one colour being for our purposes identical. But on shuffling, there are 63,504 ways to arrange five each of black and white balls in the two rows, and 6-4 distributions may occur in two ways, each with 44,100 alternatives. So, if we for the moment see the set of balls as circulating among the various different possible arrangements at random, and spending about the same time in each possible state on average, the time the system spends in any given state will be proportional to the relative number of ways that state may be achieved. Immediately, we see that the system will gravitate towards the cluster of more evenly distributed states. In short, we have just seen that there is a natural trend of change at random towards the more thermodynamically probable macrostates, i.e. the ones with higher statistical weights. So “[b]y comparing the [thermodynamic] probabilities of two states of a thermodynamic system, we can establish at once the direction of the process that is [spontaneously] feasible in the given system. It will correspond to a transition from a less probable to a more probable state.” [p. 284.] This is in effect the statistical form of the 2nd law of thermodynamics. Thus, too, the behaviour of the Clausius isolated system . . . is readily understood: importing d’Q of random molecular energy increases the number of ways energy can be distributed at micro-scale in B so much that the resulting rise in B’s entropy swamps the fall in A’s entropy. Moreover, given that FSCI-rich micro-arrangements are relatively rare in the set of possible arrangements, we can also see why it is hard to account for the origin of such states by spontaneous processes in the scope of the observable universe.
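The counts in the ball example can be checked directly. A minimal sketch (the function name is hypothetical) counts arrangements by the number of whites in the top row using binomial coefficients: the 5-5 cluster alone takes about a third of the 184,756 possible arrangements, while the all-white top row has just one.

```python
from math import comb

def arrangements(whites_on_top: int, per_row: int = 10) -> int:
    """Distinct arrangements with the given number of white balls in the top row.

    Assumes 10 identical white and 10 identical black balls in two rows of 10:
    choose which top-row slots hold whites, then which bottom-row slots hold the rest.
    """
    return comb(per_row, whites_on_top) * comb(per_row, per_row - whites_on_top)

total = sum(arrangements(k) for k in range(11))
for k in range(10, -1, -1):
    print(f"{k:2d} whites on top: {arrangements(k):6d} ways, weight {arrangements(k) / total:.4f}")
```

The weights fall off steeply away from the even split, which is the "gravitation" towards evenly distributed states described in the clip.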
(Of course, since it is as a rule very inconvenient to work in terms of statistical weights of macrostates [i.e. W], we instead move to entropy, through

S = k ln W.

Part of how this is done can be seen by imagining a system in which there are W ways accessible, and imagining a partition into parts 1 and 2.

W = W1*W2,

as for each arrangement in 1, all accessible arrangements in 2 are possible, and vice versa. But it is far more convenient to have an additive measure, i.e. we need to go to logs: ln W = ln W1 + ln W2, so that S = S1 + S2. The constant of proportionality, k, is the famous Boltzmann constant; it is in effect the universal gas constant, R, on a per-molecule basis, i.e. we divide R by the Avogadro number, NA, to get k = R/NA.

The two approaches to entropy, by Clausius and by Boltzmann, of course correspond. In real-world systems of any significant scale, the relative statistical weights are usually so disproportionate that the classical observation that entropy naturally tends to increase is readily apparent.)
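A short sketch of two points in the parenthetical above: k obtained as R/NA, and the additivity that motivates taking logs (W = W1·W2 gives S = S1 + S2). The constant values are the exact 2019 SI figures; the sample weights simply reuse the ball-example counts, and the helper name is hypothetical.

```python
import math

N_A = 6.02214076e23        # Avogadro constant, 1/mol (exact in the 2019 SI)
R = 8.31446261815324       # molar gas constant, J/(mol K), equal to N_A * k_B
k = R / N_A                # Boltzmann constant on a per-molecule basis, J/K

def boltzmann_entropy(w: float) -> float:
    """S = k ln W for a macrostate with statistical weight W."""
    return k * math.log(w)

# Additivity: for independent parts W = W1 * W2, so ln W = ln W1 + ln W2 and S = S1 + S2.
w1, w2 = 63504, 44100      # any two statistical weights will do
s_joint = boltzmann_entropy(w1 * w2)
s_sum = boltzmann_entropy(w1) + boltzmann_entropy(w2)
print(k, s_joint, s_sum)
```

Multiplicative weights become additive entropies, which is the convenience the logarithm buys.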

[ . . . . ]

A closely parallel first example, by L K Nash, ponders the likely outcome of 1,000 coins tossed at random, per the binomial distribution. This turns out to be a sharply peaked bell curve centred on 50-50 H-T, as has been discussed. The dominant cluster will be just this, with the coins in no particular sequence.
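Nash's coin example can be computed exactly with integer binomial counts. A minimal sketch (function name hypothetical) reports what fraction of all 2^1000 sequences fall in a given band of head counts; the 450-550 band captures nearly all of them, while a single specified sequence such as all heads is a fraction of about 10^-301.

```python
from math import comb

N = 1000
TOTAL = 2 ** N                 # number of possible H/T sequences, about 1.07e301

def fraction_with_heads_between(lo: int, hi: int, n: int = N) -> float:
    """Fraction of all 2**n equally likely sequences whose head count lies in [lo, hi]."""
    favourable = sum(comb(n, k) for k in range(lo, hi + 1))
    return favourable / 2 ** n  # exact integer counts, converted to float only at the end

print(f"{float(TOTAL):.3e}")                    # size of the configuration space
print(fraction_with_heads_between(450, 550))    # near 1: the dominant near-50-50 cluster
print(fraction_with_heads_between(1000, 1000))  # one sequence: all heads
```

Working with exact integers avoids the rounding trouble floating-point products would have at this scale.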

But if instead we were to see all H, or all T, or alternating H and T, or the first 143 characters of this comment in ASCII code (143 seven-bit characters being about 1,000 bits), we can be assured that, of the 1.07*10^301 possibilities, such highly specific, “simply describable” sets of outcomes are maximally unlikely to come about by blind chance or mechanical necessity, but are readily explained on design. That sort of pattern is a case of complex specified information and, in the case of the ASCII code, of functionally specific complex organisation and associated information (FSCO/I), particularly digitally coded functionally specific information (dFSCI).

This example draws out the basis of the design inference on FSCO/I: the observed cosmos of 10^80 atoms or so, each having a tray of 1,000 coins flipped and observed every 10^-14 s, would in a reasonable lifespan to date of 10^17 s look at 10^111 possibilities. That is an upper limit on the number of chemical-reaction-speed, atomic-scale events in the observed cosmos to date. It is a large number, but one utterly dwarfed by the 10^301 or so possibilities. If the former were reduced to a single hypothetical straw, the cubical haystack it would be pulled from would reduce our observed cosmos to a small blob by comparison.
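Because the numbers are far too large to handle directly, the comparison is easiest in base-10 logarithms. A sketch of the arithmetic, using only the figures given in the text (10^80 atoms, one observation per 10^-14 s, 10^17 s, 1,000 bits); the variable names are illustrative:

```python
import math

ATOMS_LOG10 = 80   # ~10^80 atoms in the observed cosmos
RATE_LOG10 = 14    # one observation per 10^-14 s, i.e. 10^14 per second per atom
TIME_LOG10 = 17    # ~10^17 s elapsed
BITS = 1000        # 1,000 coins = 1,000 bits of configuration

samples_log10 = ATOMS_LOG10 + RATE_LOG10 + TIME_LOG10  # log10 of total observations
space_log10 = BITS * math.log10(2)                     # log10 of 2^1000
fraction_log10 = samples_log10 - space_log10           # log10 of fraction of space sampled

print(samples_log10)             # 111
print(round(space_log10, 2))     # 301.03
print(round(fraction_log10, 2))  # about -190
```

Multiplying the resources becomes adding exponents, and the sampled fraction is their difference.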

That is, any reasonably isolated, special and definable cluster of possible configs will be maximally unlikely to be found by such a blind search. Far too much haystack, too few needles, and no effective scale of search appreciably different from no search.

On the scope of events we can observe, then, we can only reasonably expect to see cases from the overwhelming bulk.

This, with further development, is the core statistical underpinning of 2LOT.

And, as Prof Sewell pointed out, the statistical challenge does not go away when you open up a system to generic, non-functionally-specific inflows of mass or energy etc. In opened-up systems of appreciable size (and a system whose state can be specified by 1,000 bits of info is small indeed; yes, a coin is a 1-bit information storage register), the statistically miraculous will still be beyond plausibility unless something in particular is happening that makes it much more plausible: something like organised, forced motion that sets up special configs. >>

Consequently, while something like a molecular nanotech lab, perhaps some generations beyond Venter et al, could plausibly (or at least conceivably, as a thought exercise that extrapolates what routinely goes on in genetic engineering) use atom and molecule manipulation to construct carefully programmed molecules and manipulate them to form a metabolising, encapsulated, gated automaton with an integral code-using von Neumann kinematic self-replicator, that would be very different from hoping for the chain of statistical miracles in typical suggested OOL scenarios. These typically hope for much the same to arise spontaneously from something like a self-replicating molecule that somehow evolves into such an automaton.

For instance, this is how Wikipedia summed up such scenarios a few years back:

There is no truly “standard model” of the origin of life. Most currently accepted models draw at least some elements from the framework laid out by the Oparin-Haldane hypothesis. Under that umbrella, however, are a wide array of disparate discoveries and conjectures such as the following, listed in a rough order of postulated emergence:

- Some theorists suggest that the atmosphere of the early Earth may have been chemically reducing in nature [[NB: a fairly controversial claim, as others argue that the geological evidence points to an oxidising or neutral composition, which is much less friendly to Miller-Urey-type syntheses], composed primarily of methane (CH4), ammonia (NH3), water (H2O), hydrogen sulfide (H2S), carbon dioxide (CO2) or carbon monoxide (CO), and phosphate (PO4^3-), with molecular oxygen (O2) and ozone (O3) either rare or absent.

- In such a reducing atmosphere [[notice the critical dependence on a debatable assumption], electrical activity can catalyze the creation of certain basic small molecules (monomers) of life, such as amino acids. [[Mostly, the very simplest ones, which had to be rapidly trapped out lest they be destroyed by the same process that created them] This was demonstrated in the Miller–Urey experiment by Stanley L. Miller and Harold C. Urey in 1953.

- Phospholipids (of an appropriate length) can spontaneously form lipid bilayers, a basic component of the cell membrane.

- A fundamental question is about the nature of the first self-replicating molecule. Since replication is accomplished in modern cells through the cooperative action of proteins and nucleic acids, the major schools of thought about how the process originated can be broadly classified as “proteins first” and “nucleic acids first”.

- The principal thrust of the “nucleic acids first” argument is as follows:

- The polymerization of nucleotides into random RNA molecules might have resulted in self-replicating ribozymes (RNA world hypothesis) [[Does not account for the highly specific nature of observed self-replicating chains, nor the problem of hydrolysis by which ever-present water molecules could relatively easily break chains]

- Selection pressures for catalytic efficiency and diversity might have resulted in ribozymes which catalyse peptidyl transfer (hence formation of small proteins), since oligopeptides complex with RNA to form better catalysts. The first ribosome might have been created by such a process, resulting in more prevalent protein synthesis.

- Synthesized proteins might then outcompete ribozymes in catalytic ability, and therefore become the dominant biopolymer, relegating nucleic acids to their modern use, predominantly as a carrier of genomic information. [[Does not account for the origin of codes, the information in the codes, the algorithms to put it to use, or the co-ordinated machines to physically execute the algorithms.]

As of 2010, no one has yet synthesized a “protocell” using basic components which would have the necessary properties of life (the so-called “bottom-up-approach”). Without such a proof-of-principle, explanations have tended to be short on specifics. [[Acc.: Aug 5, 2010, coloured emphases and parentheses added.]

Such scenarios require information-rich macromolecules to arise spontaneously from molecular noise and the blind chemical kinetics of equilibrium (which, as Thaxton et al showed in the 1980s . . . cf. here and here, are extremely adverse), as well as codes (i.e. language) and complex algorithms we are only now beginning to understand.

There is no significant observed evidence that such cumulative constructive work is feasible by chance on the gamut of our solar system or the observed cosmos, within the some 10^17 s since the big bang on the conventional timeline as commonly discussed, in light of the very strong statistical tendencies and expected outcomes just outlined.

No wonder, then, that some years ago leading OOL researchers Robert Shapiro and Leslie Orgel had the following exchange of mutual ruin on metabolism-first vs genes-first/RNA world OOL speculative models:

The only empirically, observationally warranted adequate cause of such FSCO/I at macro or micro levels (recall that classic pic of atoms arranged to spell IBM?) is intelligently directed configuration. Which of course will use energy converting devices to carry out constructive work in a technology cascade. It takes a lot of background work to carry out the work in hand just now, as a rule.


Such is not a violation of 2LOT, as e.g. Szilard’s analysis of Maxwell’s Demon shows. There is a relevant heat or energy flow and degradation process that compensates the reduction in freedom of possibilities implied in constructing an FSCO/I rich entity.

But, RELEVANT is a key word; the compensating flow needs to credibly be connected to the constructive wiring diagram assembly work in hand to create an FSCO/I rich entity. It cannot just be free floating out there in a cloud cuckooland dream of getting forces of dissipation and disarrangement such as Brownian motion and diffusion to do a large body of constructive work.

That is the red herring-strawman fallacy involved in typical “compensation” arguments. There ain’t no “paper trail” that connects the claimed “compensation” to the energy transactions involved in the detailed construction work required to create FSCO/I.

[Or, let me clip apt but often derided remarks of Mathematics Professor Granville Sewell, an expert on (the highly relevant!) subject of differential equations, from http://www.math.utep.edu/Faculty/sewell/articles/appendixd.pdf:

. . . The second law is all about probability, it uses probability at the microscopic level to predict macroscopic change: the reason carbon distributes itself more and more uniformly in an insulated solid is, that is what the laws of probability predict when diffusion alone is operative. The reason natural forces may turn a spaceship, or a TV set, or a computer into a pile of rubble but not vice-versa is also probability: of all the possible arrangements atoms could take, only a very small percentage could fly to the moon and back, or receive pictures and sound from the other side of the Earth, or add, subtract, multiply and divide real numbers with high accuracy. The second law of thermodynamics is the reason that computers will degenerate into scrap metal over time, and, in the absence of intelligence, the reverse process will not occur; and it is also the reason that animals, when they die, decay into simple organic and inorganic compounds, and, in the absence of intelligence, the reverse process will not occur.

The discovery that life on Earth developed through evolutionary “steps,” coupled with the observation that mutations and natural selection — like other natural forces — can cause (minor) change, is widely accepted in the scientific world as proof that natural selection — alone among all natural forces — can create order out of disorder, and even design human brains, with human consciousness. Only the layman seems to see the problem with this logic. In a recent Mathematical Intelligencer article [“A Mathematician’s View of Evolution,” The Mathematical Intelligencer 22, number 4, 5-7, 2000] I asserted that the idea that the four fundamental forces of physics alone could rearrange the fundamental particles of Nature into spaceships, nuclear power plants, and computers, connected to laser printers, CRTs, keyboards and the Internet, appears to violate the second law of thermodynamics in a spectacular way.1 . . . .

What happens in a[n isolated] system depends on the initial conditions; what happens in an open system depends on the boundary conditions as well. As I wrote in “Can ANYTHING Happen in an Open System?”, “order can increase in an open system, not because the laws of probability are suspended when the door is open, but simply because order may walk in through the door…. If we found evidence that DNA, auto parts, computer chips, and books entered through the Earth’s atmosphere at some time in the past, then perhaps the appearance of humans, cars, computers, and encyclopedias on a previously barren planet could be explained without postulating a violation of the second law here . . . But if all we see entering is radiation and meteorite fragments, it seems clear that what is entering through the boundary cannot explain the increase in order observed here.” Evolution is a movie running backward, that is what makes it special.

THE EVOLUTIONIST, therefore, cannot avoid the question of probability by saying that anything can happen in an open system, he is finally forced to argue that it only seems extremely improbable, but really isn’t, that atoms would rearrange themselves into spaceships and computers and TV sets . . . [NB: Emphases added. I have also substituted in isolated system terminology as GS uses a different terminology. Cf as well his other remarks here and here.]]

Call in the energy auditors!

Arrest that energy embezzler!

In short, simple terms, with all due respect: you simply don’t know what you are talking about, and yet you traipse in to announce that others who do have a clue misunderstand.

That does not compute, as the fictional Mr Spock was so fond of saying.

Such does not exactly commend evolutionary materialist ideology as the thoughtful man’s view of the world. But then, long since, that view has been known to be self-referentially incoherent. E.g. per Haldane’s subtle retort:

“It seems to me immensely unlikely that mind is a mere by-product of matter. For if my mental processes are determined wholly by the motions of atoms in my brain I have no reason to suppose that my beliefs are true. They may be sound chemically, but that does not make them sound logically. And hence I have no reason for supposing my brain to be composed of atoms. In order to escape from this necessity of sawing away the branch on which I am sitting, so to speak, I am compelled to believe that mind is not wholly conditioned by matter.” [“When I am dead,” in Possible Worlds: And Other Essays [1927], Chatto and Windus: London, 1932, reprint, p.209.]

I suggest to you that you would be well advised “tae think again.”>>

__________________

Time tae think again, objectors. END

PS: For the benefit of Z at 17 below, here’s an exploded view of a garden pond fountain pump:

. . . and yes, just the drive motor alone would be FSCO/I.