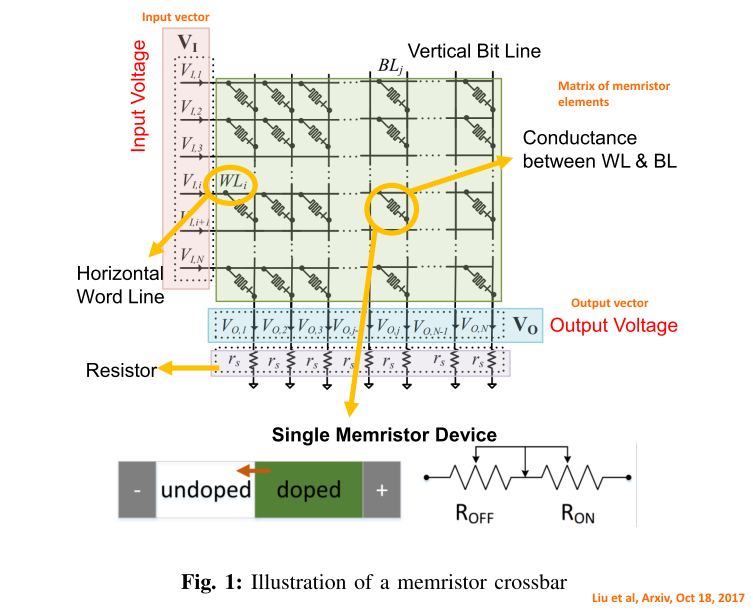

Memristors are in effect tunable resistors, whose resistive state can be programmed [and changed, so far only a very finite number of times]. This means they can store and process information, especially by carrying out weighted-product summations and, across arrays of devices, vector-matrix product summations. These are very powerful, physically instantiated mathematical operations. For example, here is a memristor crossbar matrix:

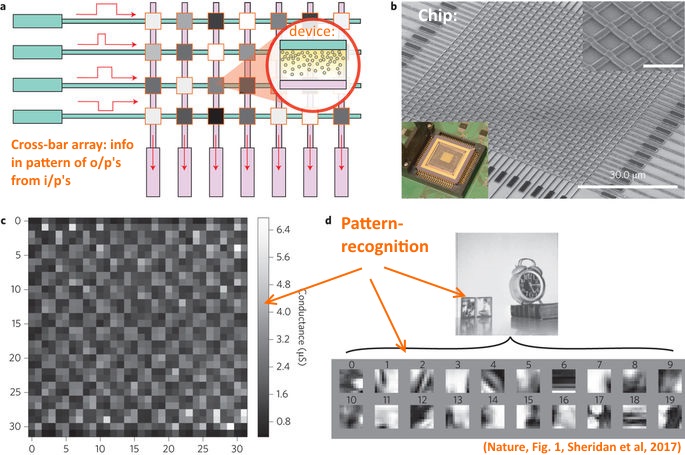

. . . and here is one in use to recognise patterns:
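An idealised sketch of that kind of use, in Python, may help show why a crossbar "computes". This is not a model of any particular published device. It assumes perfect two-level devices, no wire resistance or sneak-path currents, and made-up conductance and voltage values. The 3×3 patterns and the names `PATTERNS`, `column_currents` and `recognise` are hypothetical. Each stored pattern is programmed into one column of conductances. Applying an input as row voltages makes each column current the weighted-product sum $I_j = \sum_i V_i G_{ij}$ (Ohm's law at each cross-point, Kirchhoff's current law on each column), and the column drawing the most current is taken as the match.

```python
# Idealised memristor crossbar used as a template matcher (a sketch, not a device model).
# Assumptions: ideal devices, no wire resistance or sneak paths, two conductance levels.

G_ON = 1e-4    # siemens, assumed low-resistance state (10 kOhm)
G_OFF = 1e-6   # siemens, assumed high-resistance state (1 MOhm)
V_READ = 0.2   # volts, assumed read voltage applied to rows for "1" pixels

# Hypothetical 3x3 stored patterns, flattened row by row; each has three lit pixels.
PATTERNS = {
    "vertical":   [0, 1, 0, 0, 1, 0, 0, 1, 0],
    "horizontal": [0, 0, 0, 1, 1, 1, 0, 0, 0],
    "diagonal":   [1, 0, 0, 0, 1, 0, 0, 0, 1],
}
LABELS = list(PATTERNS)

# Program the crossbar: row i = input pixel i, column j = stored pattern j.
CONDUCTANCES = [
    [G_ON if PATTERNS[label][i] else G_OFF for label in LABELS]
    for i in range(9)
]


def column_currents(voltages, conductances):
    """I_j = sum_i V_i * G_ij: Ohm's law per device, Kirchhoff's current law per column."""
    n_cols = len(conductances[0])
    return [sum(v * row[j] for v, row in zip(voltages, conductances)) for j in range(n_cols)]


def recognise(pixels):
    """Apply the input as row voltages; return the label of the column drawing most current."""
    voltages = [V_READ if p else 0.0 for p in pixels]
    currents = column_currents(voltages, CONDUCTANCES)
    best = max(range(len(currents)), key=currents.__getitem__)
    return LABELS[best], currents


label, currents = recognise([0, 1, 0, 0, 1, 0, 1, 1, 0])  # a noisy vertical line
print(label)  # vertical
```

Because the winner is chosen on raw current, a stored pattern with more lit pixels would tend to win regardless of shape. That is why the three hypothetical patterns each have the same number of lit pixels. A practical design would have to deal with this (for example by normalising the column currents), which this sketch does not attempt.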

They hold promise for AI, high-density storage units and more. How they work turns out to be a bit of a challenge, as IEEE Spectrum reported in 2015:

>>Over the last decade researchers have produced two commercially promising types of memristors: electrochemical metallization memory (ECM) cells, and valence change mechanism memory (VCM) cells.

In ECM cells, which have a copper electrode (called the active electrode), the copper atoms are oxidized (stripped of an electron) by the “write” voltage. The resulting copper ions migrate through a solid electrolyte towards a platinum electrode. When they reach the platinum they get an electron back. Other copper ions arrive and pile on, eventually forming a pure metallic filament linking both electrodes, thus lowering the resistance of the device.

In VCM cells, both negatively charged oxygen ions and positively charged metal ions result from the “write” voltage. Theoretically, the oxygen ions are taken out of the solid electrolyte, contributing to a filament consisting of semiconducting material that builds up between the electrodes.>>
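To make "tunable resistor" concrete, here is a toy state-variable model in Python. It is not a physical model of ECM or VCM switching. A single normalised state `w` stands in for how far a conducting filament has grown, and resistance is taken as a linear mix between assumed `r_on` and `r_off` limits, a common textbook simplification. The threshold (below which a pulse only reads the state) and the per-pulse rate are likewise illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class ToyMemristor:
    """Toy tunable resistor with a normalised state w in [0, 1].

    w = 0: no conducting filament (high resistance); w = 1: filament bridges
    the electrodes (low resistance). All parameter values are assumptions.
    """
    r_on: float = 100.0       # ohms, fully formed filament (assumed)
    r_off: float = 16_000.0   # ohms, no filament (assumed)
    threshold: float = 0.5    # volts; pulses at or below this only read (assumed)
    rate: float = 0.1         # change in w per volt of an above-threshold pulse (assumed)
    w: float = 0.0

    def resistance(self) -> float:
        # Linear mix between the two limiting resistances.
        return self.r_on * self.w + self.r_off * (1.0 - self.w)

    def pulse(self, volts: float) -> float:
        """Apply one pulse; above-threshold pulses move w. Returns the current in amps."""
        if abs(volts) > self.threshold:
            # Positive pulses grow the filament (SET); negative pulses dissolve it (RESET).
            self.w = min(1.0, max(0.0, self.w + self.rate * volts))
        return volts / self.resistance()


m = ToyMemristor()
m.pulse(0.2)            # small read pulse: state unchanged
for _ in range(20):     # repeated write pulses drive the device to its low-resistance limit
    m.pulse(1.0)
print(m.resistance())
```

The threshold is what lets the same device be both written (programmed) and read without disturbing what it stores, which is the property the crossbar arithmetic relies on.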

However, some recent work bridges the two suggested mechanisms:

>>Valov and coworkers in Germany, Japan, Korea, Greece, and the United States started investigating memristors that had a tantalum oxide electrolyte and an active tantalum electrode. “Our studies show that these two types of switching mechanisms in fact can be bridged, and we don’t have a purely oxygen type of switching as was believed, but that also positive [metal] ions, originating from the active electrode, are mobile,” explains Valov.>>

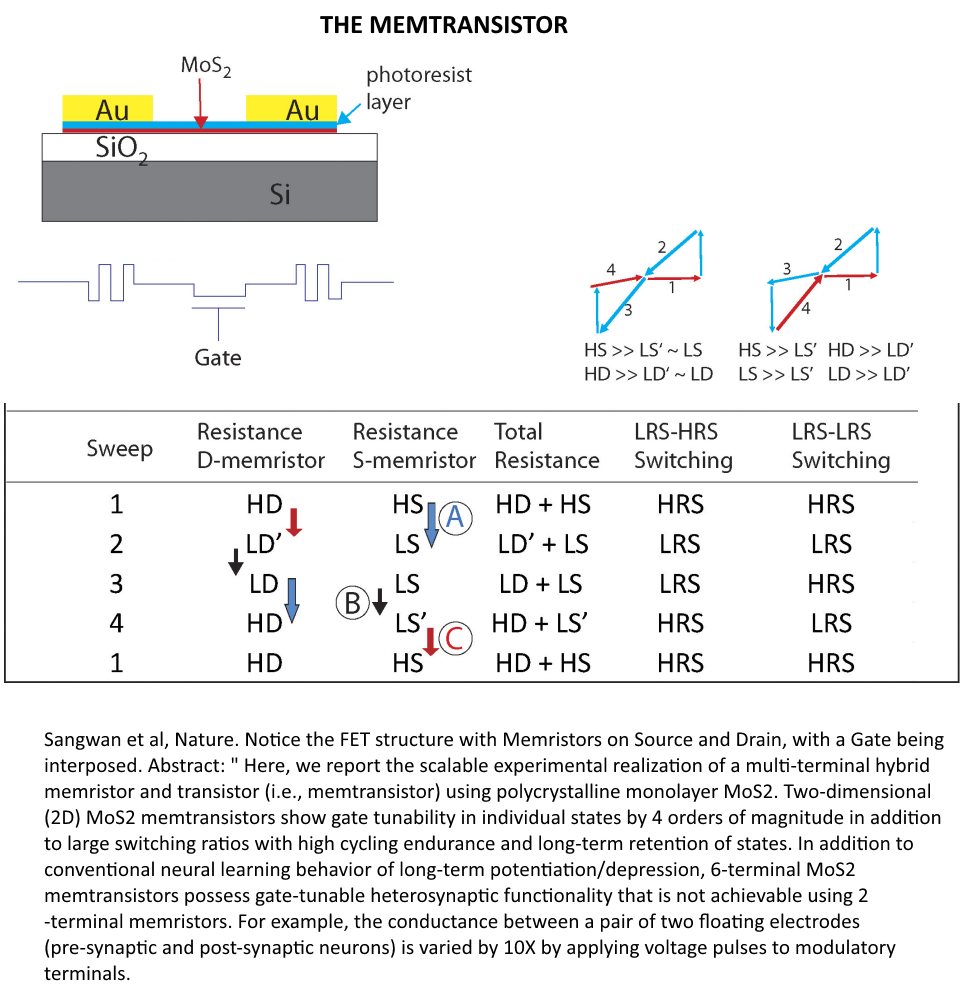

Of course, UD’s News just brought up an “onward” device, the memtransistor:

Hersam, a Walter P. Murphy Professor of Materials Science and Engineering in Northwestern’s McCormick School of Engineering, and his team are bringing the world closer to realizing [neuromorphic, i.e. brain-like, computing].

The research team has developed a novel device called a “memtransistor,” which operates much like a neuron by performing both memory and information processing. With combined characteristics of a memristor and transistor, the memtransistor also encompasses multiple terminals that operate more similarly to a neural network.>>

How does this one work? Phys dot org gives a word-picture:

>>Hersam’s memtransistor makes use of a continuous film of polycrystalline MoS2 that comprises a large number of smaller flakes. This enabled the research team to scale up the device from one flake to many devices across an entire wafer.

“When length of the device is larger than the individual grain size, you are guaranteed to have grain boundaries in every device across the wafer,” Hersam said. “Thus, we see reproducible, gate-tunable memristive responses across large arrays of devices.”

After fabricating memtransistors uniformly across an entire wafer, Hersam’s team added additional electrical contacts. Typical transistors and Hersam’s previously developed memristor each have three terminals. In their new paper, however, the team realized a seven-terminal device, in which one terminal controls the current among the other six terminals.

“This is even more similar to neurons in the brain,” Hersam said, “because in the brain, we don’t usually have one neuron connected to only one other neuron. Instead, one neuron is connected to multiple other neurons to form a network. Our device structure allows multiple contacts, which is similar to the multiple synapses in neurons.”>>

Of course, mention of neurons is a big clue to where this is headed: neural network AI, which is then held to be a brain-mind-like system. IEEE has another article on this strong AI perspective, one with a telling title: “MoNETA: A Mind Made from Memristors”:

>> . . . why should you believe us when we say we finally have the technology that will lead to a true artificial intelligence? Because of MoNETA, the brain on a chip. MoNETA (Modular Neural Exploring Traveling Agent) is the software we’re designing at Boston University’s department of cognitive and neural systems, which will run on a brain-inspired microprocessor under development at HP Labs in California. It will function according to the principles that distinguish us mammals most profoundly from our fast but witless machines. MoNETA (the goddess of memory—cute, huh?) will do things no computer ever has. It will perceive its surroundings, decide which information is useful, integrate that information into the emerging structure of its reality, and in some applications, formulate plans that will ensure its survival. In other words, MoNETA will be motivated by the same drives that motivate cockroaches, cats, and humans.

Researchers have suspected for decades that real artificial intelligence can’t be done on traditional hardware, with its rigid adherence to Boolean logic and vast separation between memory and processing. But that knowledge was of little use until about two years ago, when HP built a new class of electronic device called a memristor. Before the memristor, it would have been impossible to create something with the form factor of a brain, the low power requirements, and the instantaneous internal communications. Turns out that those three things are key to making anything that resembles the brain and thus can be trained and coaxed to behave like a brain. In this case, form is function, or more accurately, function is hopeless without form.

Basically, memristors are small enough, cheap enough, and efficient enough to fill the bill. Perhaps most important, they have key characteristics that resemble those of synapses. That’s why they will be a crucial enabler of an artificial intelligence worthy of the term.>>

Lessee: mind as an emergent property of a brain-like, sufficiently powerful neural network, check. A machine with motivation, check. Decision-making, learning, an emerging worldview termed “its reality,” check. Strong AI, check. And of course, now that another great hope is on the horizon, we can admit that former approaches have been persistently disappointing, check. Charlie B, THIS time I won’t pull away the football, check.

In this case, there seems to be a suggestion that the principle that any competent digital architecture can execute any computable function (so that what remains is a hardware-software-performance tradeoff) is failing. More likely, it is a badly phrased claim that nothing else has had the cost-effective power to do the job, and that memristor-based machines will be able to move the game ahead. (Sounds suspiciously like a funding proposal to me, but then maybe I am a little cynical about project proposals in general, including research ones. Still, some people have enough “risk appetite” to try a kick at the football. Yet again. I think we may get some useful storage and processing devices, and may see the rebirth of analogue computing in a new wave of memristor-based machines. Pulse train processing technology is especially promising.)

I think there is a fundamental ideological assumption at work that ends up hoping for mind to emerge from hardware once it reaches some higher level of complexity. What is overlooked, of course, is that 500 – 1,000 bits of functionally specific, complex organisation and associated information [FSCO/I] already challenge the blind search resources of our observed cosmos. So just getting to a first cell by blind watchmaker mechanisms is utterly implausible, much less a sophisticated nervous system.
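For scale, here is the number of distinct configurations that 500 and 1,000 bits can specify. This is straightforward arithmetic only; the comparison with the search resources of the cosmos is the post's own claim and is not derived here.

$$
2^{500} = 10^{500\log_{10}2} \approx 10^{150.5} \approx 3.27 \times 10^{150},
\qquad
2^{1000} = 10^{1000\log_{10}2} \approx 10^{301.0} \approx 1.07 \times 10^{301}
$$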

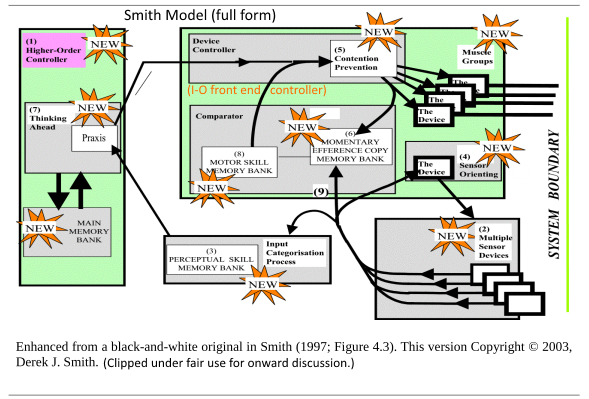

I again draw attention to Eng Derek Smith’s two-tier controller cybernetic loop, here in full form:

To better understand, let’s simplify:

We see that a cybernetic loop can be supervised, with a shared information store, so that flexible, goal-directed behaviour and adaptive/learning behaviour can be imposed on it.
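As a minimal sketch of such a two-tier loop, here is a Python illustration under stated assumptions. It is not Smith's own model. It uses a toy process with an unmodelled constant disturbance, a lower proportional loop that tracks whatever goal sits in a shared store, and a supervisor that sets successive goals and periodically writes a learned bias back into that store. The names (`SharedStore`, `lower_loop`, `supervisor`), the gain, the window size and the learning rate are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SharedStore:
    """Hypothetical shared information store that both tiers read and write."""
    goal: float = 0.0
    bias: float = 0.0                      # learned correction, written by the supervisor
    errors: list = field(default_factory=list)


def plant_step(state: float, u: float, disturbance: float = -0.5) -> float:
    """Toy process: the state moves by the control signal plus an unmodelled constant disturbance."""
    return state + u + disturbance


def lower_loop(store: SharedStore, sensed: float, gain: float = 0.5) -> float:
    """Tier 1: proportional feedback toward the goal in the store, plus the learned bias."""
    error = store.goal - sensed
    store.errors.append(error)
    return gain * error + store.bias


def supervisor(store: SharedStore, window: int = 5, rate: float = 0.5) -> None:
    """Tier 2: nudge the bias by the recent average error, so a persistent offset is learned away."""
    recent = store.errors[-window:]
    store.bias += rate * sum(recent) / len(recent)


def run(goals, steps_per_goal: int = 60, supervised: bool = True):
    store = SharedStore()
    state = 0.0
    for goal in goals:                      # goal-directed: the upper tier sets successive goals
        store.goal = goal
        for t in range(steps_per_goal):
            state = plant_step(state, lower_loop(store, state))
            if supervised and t % 5 == 4:   # supervisor reviews the shared store every 5 steps
                supervisor(store)
    return state, store


print(run([1.0, 3.0], supervised=False)[0])  # settles about 1.0 short of the final goal
print(run([1.0, 3.0])[0])                    # ends close to the final goal of 3.0
```

Run without the supervisor, the proportional loop settles one unit short of each goal, because a pure proportional controller cannot cancel a constant disturbance. With the supervisor reading averaged errors from the store and writing back a bias, the offset is learned, and the learned bias carries over when the goal changes.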

Scott Calef adds, from a quantum-influence perspective:

>>Keith Campbell writes, “The indeterminacy of quantum laws means that any one of a range of outcomes of atomic events in the brain is equally compatible with known physical laws. And differences on the quantum scale can accumulate into very great differences in overall brain condition. So there is some room for spiritual activity even within the limits set by physical law. There could be, without violation of physical law, a general spiritual constraint upon what occurs inside the head.” (p.54). Mind could act upon physical processes by “affecting their course but not breaking in upon them.” (p.54). If this is true, the dualist could maintain the conservation principle but deny a fluctuation in energy because the mind serves to “guide” or control neural events by choosing one set of quantum outcomes rather than another. Further, it should be remembered that the conservation of energy is designed around material interaction; it is mute on how mind might interact with matter. After all, a Cartesian rationalist might insist, if God exists we surely wouldn’t say that He couldn’t do miracles just because that would violate the first law of thermodynamics, would we? [Article, “Dualism and Mind,” Internet Encyclopedia of Philosophy.] >>

Where does this all come out?

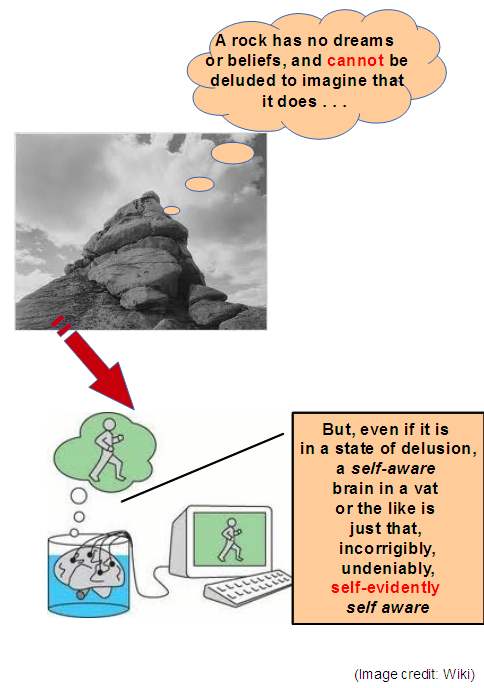

Even with memristors and memtransistors in play, we are back at the issue that refined and suitably organised rocks can compute. But such computation is a blind, essentially mechanical, GIGO-limited process that manipulates signals in ways a clever designer finds useful; it does not work by rational insight and inference.

As Reppert memorably puts it:

>>. . . let us suppose that brain state A, which is token identical to the thought that all men are mortal, and brain state B, which is token identical to the thought that Socrates is a man, together cause the belief that Socrates is mortal. It isn’t enough for rational inference that these events be those beliefs, it is also necessary that the causal transaction be in virtue of the content of those thoughts . . . [But] if naturalism is true, then the propositional content is irrelevant to the causal transaction that produces the conclusion, and [so] we do not have a case of rational inference. In rational inference, as Lewis puts it, one thought causes another thought not by being, but by being seen to be, the ground for it. But causal transactions in the brain occur in virtue of the brain’s being in a particular type of state that is relevant to physical causal transactions. [Emphases added. Also cf. Reppert’s summary of Barefoot’s argument here.]>>

Or as I have so often put it here at UD:

We are dealing with a categorical distinction between computation and rational contemplation, where the former is an example of a dynamic-stochastic system that physically, mechanically implements chained operations,

. . . rather than what we experience ourselves to be: conscious, insightful, inferring, learning and acting agents who are significantly, responsibly rational and free. Memristor technology in the end exemplifies the difference. And hence, Haldane’s remark carries redoubled force:

>>“It seems to me immensely unlikely that mind is a mere by-product of matter. For if my mental processes are determined wholly by the motions of atoms in my brain I have no reason to suppose that my beliefs are true. They may be sound chemically, but that does not make them sound logically. And hence I have no reason for supposing my brain to be composed of atoms. In order to escape from this necessity of sawing away the branch on which I am sitting, so to speak, I am compelled to believe that mind is not wholly conditioned by matter.” [“When I am dead,” in Possible Worlds: And Other Essays [1927], Chatto and Windus: London, 1932, reprint, p.209. (Highlight and emphases added.)] >>

Food for thought going forward. END