Here, it is helpful to headline an update to L&FP 62, as we need to return to a rich vein of thought that lets us approach science in light of systems engineering (SE) perspectives:

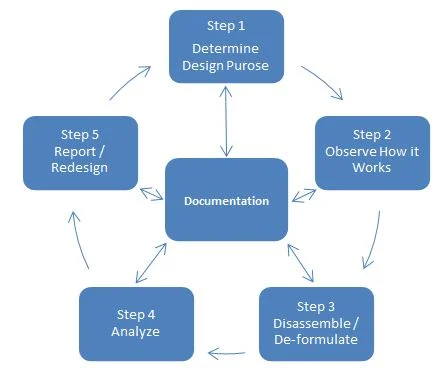

[[We may add a chart on a key subset of SE, reverse engineering (RE):

One of the most significant Reverse Engineering-Forward Engineering exercises was the clean room duplication of the IBM PC's BIOS, the firmware core of its operating framework. That allowed lawsuit-proof clones to be built, which then led to the explosion of PC-compatible machines. By the time this was over, IBM had sold its PC business to Lenovo and gone back to its core competency, mainframes. Today, a mainframe is in effect a high-end packaged server farm; the microprocessor now rules the world, including the supercomputer space.

Here, let us add a Wikipedia confession, as yet another admission against interest:

Reverse engineering (also known as backwards engineering or back engineering) is a process or method through which one attempts to understand through deductive reasoning [–> actually, a poor phrase for inference to the best explanation, i.e., abductive reasoning] how a previously made device, process, system, or piece of software accomplishes a task with very little (if any) [–> initial] insight into exactly how it does so. It is essentially the process of opening up or dissecting [–> telling metaphor] a system [–> so, SE applies] to see how it works, in order to duplicate or enhance it. Depending on the system under consideration and the technologies employed, the knowledge gained during reverse engineering can help with repurposing obsolete objects, doing security analysis, or learning how something works.[1][2]

Although the process is specific to the object on which it is being performed, all reverse engineering processes consist of three basic steps: Information extraction, Modeling, and Review. Information extraction refers to the practice of gathering all relevant information [–> telling word: identify the FSCO/I present in the entity; and of course TRIZ is highly relevant, esp. its library of key design strategies] for performing the operation. Modeling refers to the practice of combining the gathered information into an abstract model [–> that is, the inferred best explanation], which can be used as a guide for designing the new object or system. [–> guess why I think within this century we should be able to build a cell de novo?] Review refers to the testing of the model to ensure the validity of the chosen abstract.[1] Reverse engineering is applicable in the fields of computer engineering, mechanical engineering, design, electronic engineering, software engineering, chemical engineering,[3] and systems biology.[4] [More serious discussion, here.]
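The three steps named in that passage (information extraction, modeling, review) can be illustrated on a toy "black box". What follows is a minimal Python sketch under stated assumptions, not a standard procedure: the names `black_box`, `extract_information`, `build_model` and `review` are hypothetical, and the modeling step simply hypothesizes a linear rule, which the review step then either confirms or refutes on fresh inputs.

```python
from fractions import Fraction
from typing import Callable, List, Tuple

Observation = Tuple[int, int]

def black_box(x: int) -> int:
    # Hypothetical device; in a real exercise its internals would be hidden.
    return 3 * x + 7

def extract_information(device: Callable[[int], int], probes: List[int]) -> List[Observation]:
    """Step 1, information extraction: record input/output behaviour."""
    return [(x, device(x)) for x in probes]

def build_model(obs: List[Observation]) -> Callable[[int], Fraction]:
    """Step 2, modeling: infer y = a*x + b from the first two observations.
    Linearity is an assumed hypothesis, not something we know in advance."""
    (x0, y0), (x1, y1) = obs[0], obs[1]
    a = Fraction(y1 - y0, x1 - x0)
    b = y0 - a * x0
    return lambda x: a * x + b

def review(model: Callable[[int], Fraction], device: Callable[[int], int],
           test_inputs: List[int]) -> bool:
    """Step 3, review: test the model on inputs it was not built from."""
    return all(model(x) == device(x) for x in test_inputs)

if __name__ == "__main__":
    model = build_model(extract_information(black_box, [0, 1]))
    print(review(model, black_box, [10, -4, 123]))  # True: the linear model holds

    def squarer(x: int) -> int:
        # A second hypothetical device that the linear hypothesis cannot capture.
        return x * x

    wrong = build_model(extract_information(squarer, [0, 1]))
    print(review(wrong, squarer, [10]))  # False: review rejects the model
```

The point of the second case is that review is what keeps modeling honest: a model that fits the extracted data can still fail on new probes.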

We can see that

one paradigm for science is reverse engineering nature.

This connects directly to technology as the use of insights from RE of nature to forward engineer [FE] our own useful systems. And of course, that takes us to a theme of the founders of modern science: that they were “thinking God’s thoughts after him.”]]

This approach is obviously design-friendly and reflects views that were commonplace among many founders of modern science. For example, Johannes Kepler is commonly said to have written:

“I was merely thinking God’s thoughts after him. Since we astronomers are priests of the highest God in regard to the book of nature, it benefits us to be thoughtful, not of the glory of our minds, but rather, above all else, of the glory of God.”

Even were this apocryphal, it would accurately reflect the views of many scientists then and now. However, the reverse engineering view is not merely of historical or philosophy-of-science interest. For, thanks to the works of such pioneers, we have a rich body of results that gives us confidence that such an approach builds on a framework of rational principles, unfolded as we noted patterns in nature, inferred laws of nature and built explanatory models, aka theories.

As a telling instance, observe the ribosome in protein synthesis, which invites direct comparison with the role of punched paper tape and magnetic tape in older generations of computers.

This is machine language in action. As Wikipedia confesses:

In computer programming, machine code is any low-level programming language, consisting of machine language instructions, which are used to control a computer’s central processing unit (CPU). Each instruction causes the CPU to perform a very specific task, such as a load, a store, a jump, or an arithmetic logic unit (ALU) operation on one or more units of data in the CPU’s registers or memory. Machine code is a strictly numerical language which is designed to run as fast as possible, and may be considered as the lowest-level representation of a compiled or assembled computer program or as a primitive and hardware-dependent programming language.
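To make the quoted description concrete, here is a minimal Python sketch of a toy accumulator machine whose "machine code" is a strictly numerical list of (opcode, operand) pairs, with loads, stores, jumps and arithmetic (ALU) operations. The opcode numbering and instruction set (HALT, LOAD, STORE, ADD, SUB, JZ, JMP) are hypothetical, chosen only for illustration; they do not correspond to any real CPU.

```python
from typing import List

# Hypothetical opcode numbering for a toy accumulator machine.
HALT, LOAD, STORE, ADD, SUB, JZ, JMP = range(7)

def run(program: List[int], memory: List[int], max_steps: int = 10_000) -> List[int]:
    """Execute a flat list of numbers as (opcode, operand) pairs.
    Mutates and returns `memory`; `acc` plays the role of a CPU register."""
    acc, pc = 0, 0
    for _ in range(max_steps):
        op, arg = program[pc], program[pc + 1]
        pc += 2
        if op == HALT:
            return memory
        elif op == LOAD:        # acc <- memory[arg]
            acc = memory[arg]
        elif op == STORE:       # memory[arg] <- acc
            memory[arg] = acc
        elif op == ADD:         # ALU operation
            acc += memory[arg]
        elif op == SUB:         # ALU operation
            acc -= memory[arg]
        elif op == JZ:          # conditional jump if acc is zero
            if acc == 0:
                pc = arg
        elif op == JMP:         # unconditional jump
            pc = arg
        else:
            raise ValueError(f"unknown opcode {op} at address {pc - 2}")
    raise RuntimeError("step limit reached")

# Sum n + (n-1) + ... + 1. Memory layout: [0] counter n, [1] running total, [2] constant 1.
SUM_TO_N = [
    LOAD, 0,    # 0:  acc = counter
    JZ, 18,     # 2:  if counter == 0, jump to HALT
    LOAD, 1,    # 4:  acc = total
    ADD, 0,     # 6:  acc += counter
    STORE, 1,   # 8:  total = acc
    LOAD, 0,    # 10: acc = counter
    SUB, 2,     # 12: acc -= 1
    STORE, 0,   # 14: counter = acc
    JMP, 0,     # 16: loop back
    HALT, 0,    # 18: stop
]

if __name__ == "__main__":
    print(run(SUM_TO_N, [4, 0, 1]))  # → [0, 10, 1]
```

Printed as raw numbers, `SUM_TO_N` is just `[1, 0, 5, 18, 1, 1, 3, 0, ...]`: the meaning lies entirely in how the machine reads each number in position.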

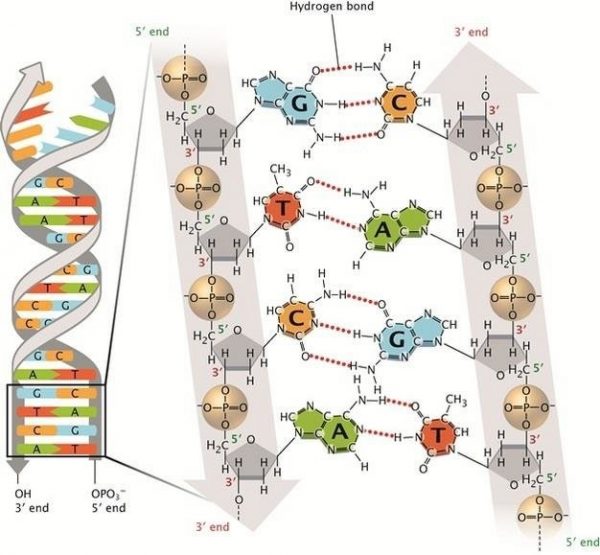

This is directly parallel to the function of mRNA in the ribosome, not merely a loose, dismissible analogy. Once the mRNA is threaded and aligned, we have AUG, START (and load methionine), EXTEND (and load another specified amino acid, AA), EXTEND . . . STOP. This is an algorithmic procedure, and the code is based on a four-state, prong-height element similar to a Yale-type pin tumbler lock, but of course implemented in molecular nanotech, with C-G and A-T (A-U in RNA) as complementary pairs.
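The START, EXTEND . . . STOP procedure can be sketched in a few lines of Python. This is a deliberately simplified illustration, not a model of the real ribosome: it ignores tRNA charging, initiation factors, release factors and reading-frame subtleties, and simply starts at the first AUG. The function names `transcribe` and `translate` are hypothetical, and `transcribe` assumes the DNA template strand is supplied in the order it is read (3' to 5'), so each base maps directly to its complement. The codon table is the standard genetic code.

```python
from itertools import product

# Complementary pairing from DNA template base to mRNA base (RNA uses U for T).
DNA_TO_MRNA = {"A": "U", "T": "A", "C": "G", "G": "C"}

# Standard genetic code, codons enumerated in U, C, A, G order at each position.
# '*' marks the three STOP codons (UAA, UAG, UGA).
BASES = "UCAG"
AMINO = ("FFLLSSSSYY**CC*W" "LLLLPPPPHHQQRRRR"
         "IIIMTTTTNNKKSSRR" "VVVVAAAADDEEGGGG")
CODON_TABLE = {"".join(c): aa for c, aa in zip(product(BASES, repeat=3), AMINO)}

def transcribe(template: str) -> str:
    """Build mRNA by base-pairing each template base with its complement."""
    return "".join(DNA_TO_MRNA[b] for b in template)

def translate(mrna: str) -> str:
    """START at the first AUG, EXTEND codon by codon, halt at a STOP codon.
    Returns one-letter amino acid codes; '' if there is no AUG."""
    start = mrna.find("AUG")
    if start == -1:
        return ""
    protein = []
    for i in range(start, len(mrna) - 2, 3):
        aa = CODON_TABLE[mrna[i:i + 3]]
        if aa == "*":           # STOP: release the chain
            break
        protein.append(aa)      # START (M) or EXTEND with the specified AA
    return "".join(protein)

if __name__ == "__main__":
    mrna = transcribe("CCTACAAACCGATTCG")
    print(mrna)             # → GGAUGUUUGGCUAAGC
    print(translate(mrna))  # → MFG  (Met, Phe, Gly, then UAA = STOP)
```

The table lookup is the code in the strict sense: nothing in the chemistry of a codon string by itself says "methionine"; the mapping is carried by the translation machinery.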

This approach points onward to Wigner’s wonder at the “unreasonable effectiveness” of mathematics in the natural sciences. To this, my answer has been [see popular article here] that the logic of structure and quantity for possible worlds puts a core of Math into the structure of any feasible world. It only remains for us to explore and reverse engineer that structure, then elaborate our own onward synthesis of bodies of knowledge.

Yes, Mathematics too can, in key part, be approached through reverse engineering; it’s not just Science here, and indeed even logic as a technical discipline fits in. END