Our civilisation is haunted by the ghost of the Rev. Thomas Malthus: his core vision of resource exhaustion and population crashes still grips our imaginations. As the BBC profiles him in brief:

Malthus’ most well known work ‘An Essay on the Principle of Population’ was published in 1798, although he was the author of many pamphlets and other longer tracts including ‘An Inquiry into the Nature and Progress of Rent’ (1815) and ‘Principles of Political Economy’ (1820). The main tenets of his argument were radically opposed to current thinking at the time. He argued that increases in population would eventually diminish the ability of the world to feed itself and based this conclusion on the thesis that populations expand in such a way as to overtake the development of sufficient land for crops. Associated with Darwin, whose theory of natural selection was influenced by Malthus’ analysis of population growth, Malthus was often misinterpreted, but his views became popular again in the 20th century with the advent of Keynesian economics.

A key assumption in this thinking is that cropland can at best be extended linearly, by adding land, and that even this faces diminishing returns as cultivation is driven into increasingly marginal areas. Meanwhile, population growth tends to be exponential, so it overtakes the growth in land, forcing either voluntary limitation of reproduction or a crash on hitting the limits:

That the increase of population is necessarily limited by the means of subsistence,

That population does invariably increase when the means of subsistence increase, and,

That the superior power of population is repressed by moral restraint, vice and misery.

[Malthus, Thomas Robert. An Essay on the Principle of Population. Oxfordshire, England: Oxford World’s Classics. p. 61.]
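In the Essay, Malthus illustrates the two growth laws by having population rise as 1, 2, 4, 8, ... and subsistence as 1, 2, 3, 4, ... per 25-year period. The Python below is a minimal sketch of that arithmetic. The helper names are hypothetical, and the last line adds an assumed case (not from Malthus) in which technology makes subsistence itself grow geometrically, slightly faster than population.

```python
from typing import Callable, Optional

Series = Callable[[int], float]


def arithmetic(start: float, step: float) -> Series:
    """Linear growth: a fixed increment per period (start, start+step, ...)."""
    return lambda t: start + step * t


def geometric(start: float, ratio: float) -> Series:
    """Exponential growth: a fixed multiplier per period (start, start*ratio, ...)."""
    return lambda t: start * ratio ** t


def first_shortfall(population: Series, subsistence: Series,
                    horizon: int = 50) -> Optional[int]:
    """First period in which population exceeds subsistence, or None within the horizon."""
    for t in range(horizon + 1):
        if population(t) > subsistence(t):
            return t
    return None


# Malthus's illustrative series, one step per 25-year period.
population = geometric(1.0, 2.0)    # 1, 2, 4, 8, ...
subsistence = arithmetic(1.0, 1.0)  # 1, 2, 3, 4, ...

print([population(t) for t in range(9)])   # ends at 256.0
print([subsistence(t) for t in range(9)])  # ends at 9.0
print(first_shortfall(population, subsistence))  # 2

# Hypothetical: technology makes subsistence grow geometrically, a bit faster.
print(first_shortfall(population, geometric(1.0, 2.1)))  # None
```

What matters here is the shape of the curves, not the particular numbers. Any fixed increment is eventually overtaken by any fixed multiplier greater than one, so the whole argument turns on whether subsistence really is confined to linear growth.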

This line of thought should seem familiar: it lies behind the nightmare of a global “population bomb.” Indeed, even Darwin’s theory turns on similar dynamics. The difference, of course, is that moral restraint does not apply. Instead, extinction and replacement through descent with modification, preserving “favoured races,” is held to drive unlimited variation, leading to ecosystems filled with the various life forms we see.

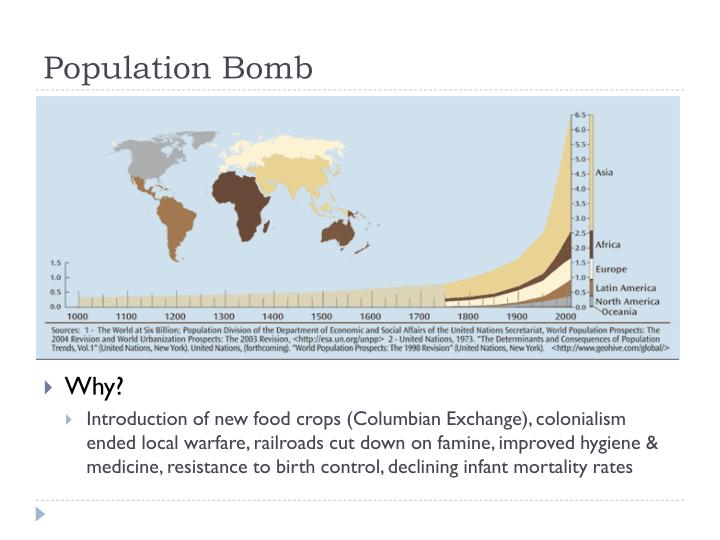

Currently, the view is more or less as follows:

This chart brings out a key flaw in much Malthusian thinking: it assumes that resources and technologies are more or less static, or even face diminishing returns, so exponential population growth will inevitably overtake resources unless a radical solution is implemented. Similarly, in the pollution-population variant, despoliation of our environment multiplies the challenge . . . with, of course, anthropogenic climate change as the currently headlined villain of the piece. As a rule, the “radical solution[s]” being put forward favour centralised national and global control by largely unaccountable bureaucracies.

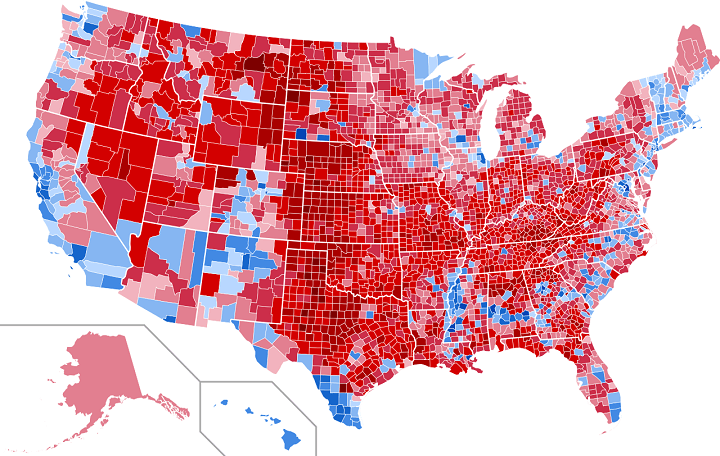

(And BTW, arguably, the current wave of Anglo-American Atlantic-basin “populism” that is widely decried and “resisted” by media elites and established power circles is a C21 version of old-fashioned peasant uprisings. So far it has been expressed by ballot box rather than by pitchfork and pruning bill, or AR-15. Let’s show a couple of maps.

Peasant uprising 1, US Election 2016:

Peasant uprising 2, UK Election 2019 (the Brexit Election):

See the pattern of urban areas vs hinterlands?)

A better view is that we are in an era where generation-long (Kondratiev) waves of technological, economic and financial transformation dramatically shift the carrying capacity of both regions and our planet as a whole. For example, one of the arguments British officials used to restrict Jewish settlement in Mandatory Palestine was carrying capacity. Yet almost 10 million people now live in the same zone, at a far higher standard of living than eighty years ago and at least three to four times the former population.

Here, natural population growth is triggered by public health improvements, which especially reduce infant mortality. But as standards of education and living rise, a demographic transition leads to declining birth rates. Currently, the North is trending well below replacement level (~2.1 children per woman). From some reports out there, China seems to be at about 1.5 and India at 2.1, both following the downward trend. These are the two most populous countries, and they are also shortly going to dominate global GDP growth. On current trends (and trends are made to be broken), we could peak at 9+ billion around mid-century and decline thereafter. That decline would bring the attendant issues of ageing and the loss of the energy and optimism of youth.
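Replacement-level arithmetic gives a rough sense of what sub-replacement fertility means over time. The Python below is a minimal sketch that uses the fertility figures reported above. It assumes constant fertility, no migration and unchanged mortality, which is far simpler than real age-structured projections, and its function names are hypothetical.

```python
REPLACEMENT_TFR = 2.1  # replacement-level fertility, children per woman (as cited above)


def generation_ratio(tfr: float, replacement: float = REPLACEMENT_TFR) -> float:
    """Rough size of a generation relative to its parents' generation.

    Assumes (hypothetically) constant fertility, no migration and unchanged
    mortality; real projections use full age-structured models.
    """
    return tfr / replacement


def relative_size_after(tfr: float, generations: int,
                        replacement: float = REPLACEMENT_TFR) -> float:
    """Compound the per-generation ratio over several generations."""
    return generation_ratio(tfr, replacement) ** generations


for label, tfr in [("China (reported ~1.5)", 1.5), ("India (reported ~2.1)", 2.1)]:
    print(f"{label}: {generation_ratio(tfr):.3f} per generation, "
          f"{relative_size_after(tfr, 3):.3f} after three generations")
```

This ratio says nothing about timing. A young age structure gives a population momentum, so totals can keep rising for decades after fertility drops below replacement. That is consistent with a mid-century peak rather than an immediate decline.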

All of this is in a sense preliminary.

The key issue is that technology transforms carrying capacity, and that energy is a key resource driver for economies and technological possibilities. The industrial revolution was fed by coal and steam; then electricity and oil drove the post-war era. So, the logical breakthrough point is energy transformation. For it is energy that allows us to use organised force to transform raw materials into the products that allow us to operate in a C21 economy.

There is, of course, a green energy push highlighting renewables. However, by and large, those renewables are intermittent and fluctuating, which has disruptive effects as we try to match production to consumption; ponder, for example, solar photovoltaic and wind. That leaves two major dispatchable renewable sources on the table: geothermal and large-scale hydroelectricity. The former is high-risk in exploration; the latter is targeted as environmentally destructive. (Of course, we have also tended to forget that flood control was a material factor in promoting large dams.)
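The mismatch between intermittent production and steady consumption can be illustrated with a toy model. In the Python sketch below, the solar profile is a hypothetical idealised clear day (a half-sine from 06:00 to 18:00) sampled hourly. Demand is assumed flat and sized so that total daily production equals total daily consumption. No real grid data is used.

```python
import math

HOURS = range(24)


def solar_output(hour: int) -> float:
    """Hypothetical clear-day PV profile for 1 unit of nameplate capacity.

    A half-sine from 06:00 to 18:00 and zero at night; real output also
    varies with cloud, season and latitude.
    """
    return max(0.0, math.sin(math.pi * (hour - 6) / 12))


def daily_balance(demand: float) -> tuple[float, float, int]:
    """Hourly surplus, deficit (both in unit-hours) and count of deficit hours
    against a flat demand."""
    surplus = deficit = 0.0
    deficit_hours = 0
    for hour in HOURS:
        diff = solar_output(hour) - demand
        if diff >= 0:
            surplus += diff
        else:
            deficit += -diff
            deficit_hours += 1
    return surplus, deficit, deficit_hours


daily_energy = sum(solar_output(h) for h in HOURS)  # unit-hours, hourly samples
capacity_factor = daily_energy / 24
# Flat demand sized so that, over the day, production exactly equals consumption.
surplus, deficit, deficit_hours = daily_balance(capacity_factor)

print(f"capacity factor on an ideal clear day: {capacity_factor:.3f}")
print(f"hours in deficit: {deficit_hours}")
print(f"energy that must be stored and shifted: {deficit:.2f} of {daily_energy:.2f} unit-hours")
```

Even on a perfect day, more than half of the energy in this toy model has to pass through storage or be backed by another source. Clouds, winter and wind lulls only widen the gap.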

The other megawatt- and gigawatt-class dispatchable, baseload and load-following energy source/technology is even more objected to: nuclear energy. In the form of fusion, it runs the universe, stars being fusion reactors. Indeed, the atoms in our bodies and in the planet underfoot by and large (hydrogen being the main exception) came out of stellar furnaces; at least, that is the message of both cosmology and astrophysics. Fission is what has driven reactor technologies since the 1940s, and it is targeted for its radioactive wastes and the risk of meltdowns etc.
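The scale of stellar energy release follows from E = mc²: converting four hydrogen-1 atoms into one helium-4 atom loses a little mass. The Python below is a minimal sketch of that calculation using standard atomic masses, rounded. The constant and function names are our own.

```python
M_H1_U = 1.00782503    # atomic mass of hydrogen-1, unified atomic mass units (u)
M_HE4_U = 4.00260325   # atomic mass of helium-4, u
MEV_PER_U = 931.494    # energy equivalent of 1 u, MeV (from E = mc^2)


def hydrogen_burning_release() -> tuple[float, float]:
    """Energy (MeV) and fraction of rest mass released by net 4 H-1 -> He-4.

    Using atomic masses folds in the energy from the emitted positrons
    annihilating with electrons; in stars a small share escapes as neutrinos.
    """
    defect_u = 4 * M_H1_U - M_HE4_U
    return defect_u * MEV_PER_U, defect_u / (4 * M_H1_U)


energy_mev, fraction = hydrogen_burning_release()
print(f"~{energy_mev:.1f} MeV per helium-4 nucleus, {fraction:.2%} of the original mass")
```

Under one percent of the mass is converted, yet that fraction is what powers the stars.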

We could get into endless arguments on this, but it is more profitable to point out that while fusion is still under development, we have two major reactor technologies that across this century could drive global prosperity, break the water crisis [e.g. through desalination] and power the first phases of solar system colonisation.

We have already seen an example of how reduction of key metals can be transformed, once we have the electricity. And, currently, electric and hybrid electric vehicles are a major trend. In short, technology again looks set to drive a breakthrough that takes Malthusian-style population crashes off the table.

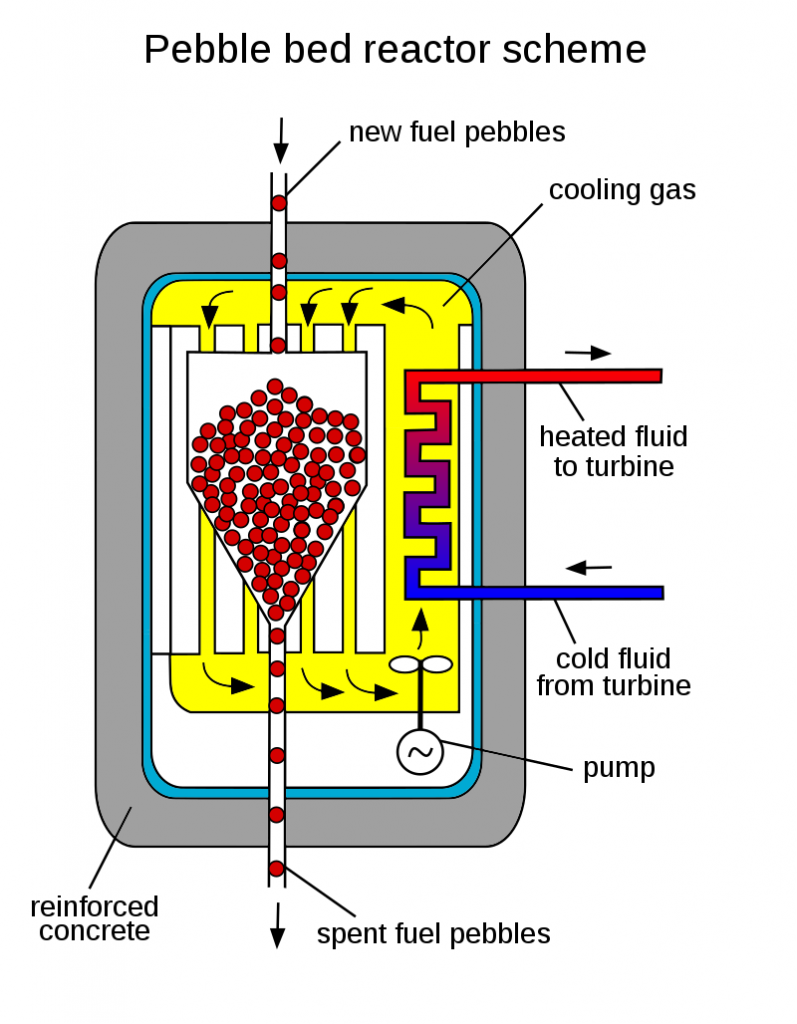

A first technology is a development of the High Temperature Gas Reactors of the 1960s to 80s: pebble-bed [modular] reactors. As Wikipedia summarises:

The pebble-bed reactor (PBR) is a design for a graphite-moderated, gas-cooled nuclear reactor. It is a type of very-high-temperature reactor (VHTR), one of the six classes of nuclear reactors in the Generation IV initiative. The basic design of pebble-bed reactors features spherical fuel elements called pebbles. These tennis ball-sized pebbles are made of pyrolytic graphite (which acts as the moderator), and they contain thousands of micro-fuel particles called TRISO particles. These TRISO fuel particles consist of a fissile material (such as ²³⁵U) surrounded by a coated ceramic layer of silicon carbide for structural integrity and fission product containment. In the PBR, thousands of pebbles are amassed to create a reactor core, and are cooled by a gas, such as helium, nitrogen or carbon dioxide, that does not react chemically with the fuel elements.

This type of reactor is claimed to be passively safe;[1] that is, it removes the need for redundant, active safety systems. Because the reactor is designed to handle high temperatures, it can cool by natural circulation and still survive in accident scenarios, which may raise the temperature of the reactor to 1,600 °C. Because of its design, its high temperatures allow higher thermal efficiencies than possible in traditional nuclear power plants (up to 50%) and has the additional feature that the gases do not dissolve contaminants or absorb neutrons as water does, so the core has less in the way of radioactive fluids.
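Higher temperatures allow higher efficiency because of the Carnot limit, 1 − T_cold/T_hot, with temperatures in kelvin. The Python below is a minimal sketch comparing that ceiling for roughly 315 °C PWR coolant and an assumed 950 °C gas-reactor outlet. The 30 °C heat-rejection temperature is also an assumption for illustration.

```python
def carnot_limit(hot_c: float, cold_c: float) -> float:
    """Upper bound on heat-engine efficiency, 1 - T_cold/T_hot, with inputs in Celsius."""
    hot_k, cold_k = hot_c + 273.15, cold_c + 273.15
    if hot_k <= cold_k:
        raise ValueError("hot side must be hotter than cold side")
    return 1.0 - cold_k / hot_k


COLD_SIDE_C = 30.0  # hypothetical heat-rejection temperature

for label, hot_c in [("PWR coolant, ~315 C", 315.0),
                     ("high-temperature gas reactor outlet, assumed 950 C", 950.0)]:
    print(f"{label}: Carnot ceiling {carnot_limit(hot_c, COLD_SIDE_C):.0%}")
```

Real plants fall well short of these ceilings; typical light-water plants convert roughly a third of their heat to electricity. Still, raising the hot-side temperature is what lifts the ceiling, which is why figures of up to 50% become plausible for high-temperature designs.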

Where the coolant is helium-4 (in effect, alpha particles that have each collected two electrons to form a gas), the coolant itself will not become radioactive.

Modular designs have been proposed, and it is easy to envision designs scaled to various needs: hundreds of kW, 1–100 MW, etc.

Transformative.

Similarly, when nuclear power plants were first developed, the US Navy played a key role. A major consideration was the wish for bomb-making materials, U-235 and/or Pu. That led away from a major alternative, thorium, which is a common byproduct of mining rare earth metals (key for modern motors, magnetic devices and even IC designs). But thorium-based molten salt reactors were in fact demonstrated in the 1960s and are quite feasible. Illustrating:

(This type of reactor was actually envisioned for a so-called atomic aircraft, i.e. it is again highly scalable and modular.)

As the World Nuclear Association summarises and suggests:

Molten salt reactors operated in the 1960s.

They are seen as a promising technology today principally as a thorium fuel cycle prospect or for using spent LWR fuel.

A variety of designs is being developed, some as fast neutron types.

Global research is currently led by China.

Some have solid fuel similar to HTR fuel, others have fuel dissolved in the molten salt coolant.

Molten salt reactors (MSRs) use molten fluoride salts as primary coolant, at low pressure. This itself is not a radical departure when the fuel is solid and fixed. But extending the concept to dissolving the fissile and fertile fuel in the salt certainly represents a leap in lateral thinking relative to nearly every reactor operated so far. However, the concept is not new, as outlined below.

MSRs may operate with epithermal or fast neutron spectrums, and with a variety of fuels. Much of the interest today in reviving the MSR concept relates to using thorium (to breed fissile uranium-233), where an initial source of fissile material such as plutonium-239 needs to be provided. There are a number of different MSR design concepts, and a number of interesting challenges in the commercialisation of many, especially with thorium.

The salts concerned as primary coolant, mostly lithium-beryllium fluoride and lithium fluoride, remain liquid without pressurization from about 500°C up to about 1400°C, in marked contrast to a PWR which operates at about 315°C under 150 atmospheres pressure.

The main MSR concept is to have the fuel dissolved in the coolant as fuel salt, and ultimately to reprocess that online. Thorium, uranium, and plutonium all form suitable fluoride salts that readily dissolve in the LiF-BeF2 (FLiBe) mixture, and thorium and uranium can be easily separated from one another in fluoride form. Batch reprocessing is likely in the short term, and fuel life is quoted at 4-7 years, with high burn-up. Intermediate designs and the AHTR have fuel particles in solid graphite and have less potential for thorium use.

Graphite as moderator is chemically compatible with the fluoride salts.

Background

During the 1960s, the USA developed the molten salt breeder reactor concept at the Oak Ridge National Laboratory, Tennessee (built as part of the wartime Manhattan Project). It was the primary back-up option for the fast breeder reactor (cooled by liquid metal) and a small prototype 8 MWt Molten Salt Reactor Experiment (MSRE) operated at Oak Ridge over four years to 1969 (the MSR program ran 1957-1976). In the first campaign (1965-68), uranium-235 tetrafluoride (UF4) enriched to 33% was dissolved in molten lithium, beryllium and zirconium fluorides at 600-700°C which flowed through a graphite moderator at ambient pressure. The fuel comprised about one percent of the fluid.

The coolant salt in a secondary circuit was lithium + beryllium fluoride (FLiBe). There was no breeding blanket, this being omitted for simplicity in favour of neutron measurements. Fuel salt melting point 434°C, coolant salt melting point 455°C. See Wong & Merrill 2004 reference.

The original objectives of the MSRE were achieved by March 1965, and the U-235 campaign concluded. A second campaign (1968-69) used U-233 fuel which was then available, making MSRE the first reactor to use U-233, though it was imported and not bred in the reactor. This program prepared the way for building a MSR breeder utilising thorium, which would operate in the thermal (slow) neutron spectrum.

According to NRC 2007, the culmination of the Oak Ridge research over 1970-76 resulted in a MSR design that would use LiF-BeF2-ThF4-UF4 (72-16-12-0.4) as fuel. It would be moderated by graphite with a four-year replacement schedule, use NaF-NaBF4 as the secondary coolant, and have a peak operating temperature of 705°C . . .
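The thorium route runs through a short decay chain. Thorium-232 captures a neutron to become thorium-233, which decays (half-life about 22 minutes) to protactinium-233. That in turn decays (half-life about 27 days) to fissile uranium-233. The Python below is a minimal sketch of how quickly bred U-233 appears. It assumes a single batch of Th-233, rounded half-lives and no further neutron captures along the chain, and the function name is hypothetical.

```python
import math

# Approximate half-lives (rounded): Th-233 ~22 minutes, Pa-233 ~27 days.
TH233_HALF_LIFE_DAYS = 22.0 / (24 * 60)
PA233_HALF_LIFE_DAYS = 27.0


def u233_fraction(t_days: float) -> float:
    """Fraction of a batch of freshly formed Th-233 that has become U-233 after t_days.

    Two-step Bateman solution for Th-233 -> Pa-233 -> U-233, treating U-233 as
    stable and ignoring further neutron captures (a simplifying assumption).
    """
    l1 = math.log(2) / TH233_HALF_LIFE_DAYS  # decay constant of Th-233, per day
    l2 = math.log(2) / PA233_HALF_LIFE_DAYS  # decay constant of Pa-233, per day
    return 1.0 - (l2 * math.exp(-l1 * t_days) - l1 * math.exp(-l2 * t_days)) / (l2 - l1)


for days in (1, 27, 90, 180):
    print(f"after {days:>3} days: {u233_fraction(days):.1%} has become U-233")
```

The pace is set by the roughly month-long protactinium step, not by the thorium decay. This is one reason salt processing features so prominently in thorium molten salt reactor concepts.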

Yes, there are significant technical challenges and there are major politics and perception issues. No surprise. The real point, though, is that we have to break the global energy bottleneck, and the sooner we do so, the better. That is, we face a familiar, Machiavellian change challenge:

Further onward, of course, such technologies can open up solar system colonisation, the long-term solution for the human population. To give an idea, here is Cosmos Magazine on high-thrust relatives of the ion drive:

Plasma propulsion engine

These engines are like high-octane versions of the ion drive. Instead of a non-reactive fuel, magnetic currents and electrical potentials accelerate ions in plasma to generate thrust. It’s an idea half a century old, but it’s not yet made it to space.

The most powerful plasma rocket in the world is currently the Variable Specific Impulse Magnetoplasma Rocket (VASIMR), being developed by the Ad Astra Rocket Company in Texas. Ad Astra calculates it could power a spacecraft to Mars in 39 days.[ . . . ]

Thermal fission

A conventional fission reactor could heat a propellant to extremely high temperatures to generate thrust. Though no nuclear thermal rocket has yet flown, the concept came close to fruition in the 1960s and 1970s, with several designs built and tested on the ground in the US.

The Nuclear Engine for Rocket Vehicle Application (NERVA) was deemed ready for integration into a spacecraft, before the Nixon administration shelved the idea of sending people to Mars and decimated the project’s funding . . .
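The Tsiolkovsky rocket equation, Δv = Isp·g₀·ln(m₀/m_f), shows why specific impulse matters. The Python below is a minimal sketch comparing the mass ratio needed for the same velocity change. The 6 km/s budget and the rounded specific impulses are assumptions for illustration, not figures from Cosmos or Ad Astra.

```python
import math

G0 = 9.80665  # standard gravity, m/s^2


def mass_ratio(delta_v: float, isp_s: float) -> float:
    """Tsiolkovsky rocket equation: initial/final mass = exp(delta_v / (Isp * g0))."""
    exhaust_velocity = isp_s * G0  # effective exhaust velocity, m/s
    return math.exp(delta_v / exhaust_velocity)


DELTA_V = 6_000.0  # hypothetical mission delta-v budget, m/s

# Rounded, assumed specific impulses for each engine class.
ENGINES = {
    "chemical (LOX/LH2, ~450 s)": 450.0,
    "nuclear thermal (NERVA-class, ~850 s)": 850.0,
    "plasma (VASIMR-class, assumed 5,000 s)": 5_000.0,
}

for name, isp in ENGINES.items():
    print(f"{name}: mass ratio {mass_ratio(DELTA_V, isp):.2f}")
```

Higher specific impulse means far less propellant for the same velocity change. The catch for plasma engines is that high exhaust velocity at useful thrust demands a great deal of electrical power, which is where compact reactors would come in.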

Later, DV. END