The UK Independent carries Stephen Hawking's comment on the AI film Transcendence (plot summary here at wiki): ‘Transcendence looks at the implications of artificial intelligence – but are we taking AI seriously enough?’

First off, I think “implications” is probably over the top; what we seem to be looking at is more a matter of materialist yearnings for eternity, resurrection and paradise.

(As in: on the presumption that intelligence boils down to evolutionarily written software running on wetware neural networks, we can upload ourselves to a machine of sufficient sophistication, reconstitute our bodies as we will, then amplify intelligence and create paradise. Anyone who understands why power tends to corrupt will understand why, even were that possible, paradise all but certainly would not happen; a quasi-eternal, power-drunk madman with effectively unlimited power would create a disaster, not a utopia.)

Also, the “strong” concept of artificial intelligence pivots on the notion that machines can be made to mimic and then surpass human intelligence, not only in narrow areas but eventually globally.

As wiki summarises at 101 level:

The central problems (or goals) of AI research include reasoning, knowledge, planning, learning, natural language processing (communication), perception and the ability to move and manipulate objects.[6] General intelligence (or “strong AI”) is still among the field’s long term goals.[7] Currently popular approaches include statistical methods, computational intelligence and traditional symbolic AI. There are a large number of tools used in AI, including versions of search and mathematical optimization, logic, methods based on probability and economics, and many others.

The field was founded on the claim that a central property of humans, intelligence, the sapience of Homo sapiens, “can be so precisely described that a machine can be made to simulate it.”[8] This raises philosophical issues about the nature of the mind and the ethics of creating artificial beings endowed with human-like intelligence, issues which have been addressed by myth, fiction and philosophy since antiquity.[9] Artificial intelligence has been the subject of tremendous optimism[10] but has also suffered stunning setbacks.[11] Today it has become an essential part of the technology industry, providing the heavy lifting for many of the most challenging problems in computer science.[12]

The basic problem with this?

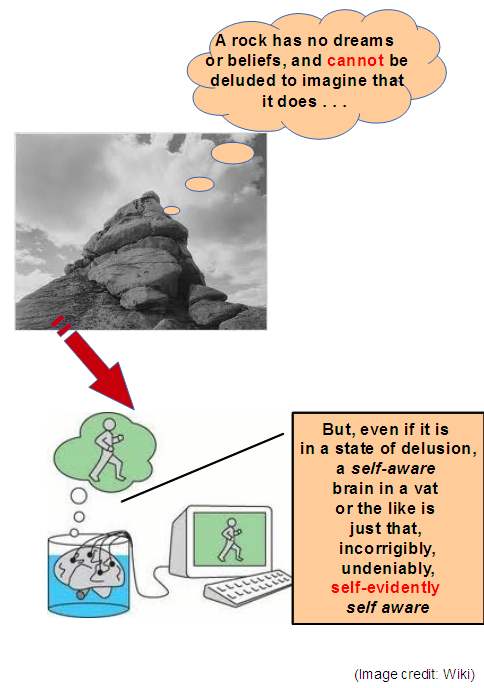

It confuses computation with contemplation, and effectively invites the assumption that consciousness will emerge from sufficiently complex computation. That brings up the point that a rock has no dreams:

A conscious, self-aware entity is just that: to be appeared to redly is not the same as having sensors detect light of, say, 680 nm. To compute “the sensor reports light in the red range, so output or store: red” is not the same as experiencing red. And, despite many attempts, we have nothing like an idea of how to specify the difference, that is, how to bridge the computation-contemplation gap.
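For concreteness, here is a minimal Python sketch of the sort of computation described above: a sensor reading comes in, a label goes out and is stored. The function name `label_wavelength` and the band limits are my own assumptions for illustration (the 620 to 750 nm range is only a rough convention for "red"), not anything taken from a real sensor library.

```python
# Hypothetical illustration only: names and band limits are assumptions.
# Roughly 620-750 nm is a common convention for "red"; it is not a standard.
RED_BAND_NM = (620.0, 750.0)


def label_wavelength(wavelength_nm: float) -> str:
    """Map a reported wavelength (in nm) to a stored label."""
    low, high = RED_BAND_NM
    return "red" if low <= wavelength_nm <= high else "not red"


# "Output or store: red" for a 680 nm reading.
stored = []
stored.append(label_wavelength(680.0))
print(stored)
```

Nothing in these few lines is appeared to redly. The function maps a number to a string and files it away, and making the mapping more elaborate only gives us a more elaborate mapping, so the gap remains.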

That is itself a point we should reflect on together.

But the movie plot brings out a much more troubling set of issues, once we do a bit of simple decoding.

Let’s clip:

Dr. Will Caster (Johnny Depp) is a scientist motivated by curiosity about the nature of the universe, part of a team working to create a sentient computer. [–> computation-contemplation gap, and the materialist hope to create an informational soul] He predicts that such a computer will create a technological singularity, where everything will change, an event which Will calls “Transcendence”. [–> We are sentient, and use the internet, so why has this not happened already?] His wife Evelyn (Rebecca Hall), who he loves deeply, supports him in his efforts. However, a gang of luddite terrorists [–> As in, guess who are the likely enemies of science, progress and utopia?] shoot Will with a bullet laced with radioactive materials which will quickly kill him.

In desperation, Evelyn comes up with a plan to upload Will’s consciousness into the quantum computer [–> uploading the soul . . . as software] that the project is working on. His best friend Max Waters (Paul Bettany), also a researcher, questions the wisdom of this choice.

Will’s likeness survives his body’s death [–> as in the great materialist hope for eternity beyond bodily death, which is itself revealing] and requests that he be connected to the Internet so as to grow in capability and knowledge [–> And, the collectives in a great university do not constitute a transformational critical mass? No wonder the film was criticised as lacking in logic]. Max panics, insisting that the computer intelligence is not Will. Evelyn forcibly ejects Max from the building and connects the computer intelligence to the Internet.

Max is almost immediately confronted by Bree (Kate Mara), the leader of Revolutionary Independence From Technology (R.I.F.T.), the extremist group which carried out the terror attacks on the AI scientists and laboratories. [–> As in, we have to displace terrorism and project it onto groups that better fit our expectations, fears and, frankly, hatreds] Max is captured by the terrorists and eventually persuaded to join them. The government is also deeply suspicious of what Will’s uploaded person will do, and plans to use the terrorists to take the blame for the government’s actions to stop Will.

Will uses his new-found intelligence to build a technological utopia [–> And why aren’t unis such paradises already?] in a remote desert town called Brightwood, but even Evelyn, who joins him there, begins to suspect his motives . . .

So now, we see the thinly disguised ideological morality play.

We need to ask some very serious questions as to why materialists so often view those who question their agendas and fantasies as being against science, against progress, and the likely locus of terrorism. (Compare that fantasy with what is going on in Ukraine and elsewhere, noting how the best and brightest seem yet again to be caught flat-footed.)

Food for thought. END