As we continue to mark up the Wiki article on ID, the next thing to notice is how the anonymous contributors have projected onto ID an accusation of trying to redefine science and its methods in service to supernaturalistic creationism:

Intelligent design (ID) is a form of creationism promulgated by the Discovery Institute . . . .

Scientific acceptance of Intelligent Design would require redefining science to allow supernatural explanations of observed phenomena, an approach its proponents describe as theistic realism or theistic science. It puts forth a number of arguments in support of the existence of a designer, the most prominent of which are irreducible complexity and specified complexity.[5] The scientific community rejects the extension of science to include supernatural explanations in favor of continued acceptance of methodological naturalism,[n 3][n 4][6][7] and has rejected both irreducible complexity and specified complexity for a wide range of conceptual and factual flaws.[8][9][10][11] Intelligent design is viewed as a pseudoscience by the scientific community, because it lacks empirical support, offers no tenable hypotheses, and aims to describe natural history in terms of scientifically untestable supernatural causes.

This is a target-rich environment (and things are so polarised that too many will be disinclined to listen until real damage has been done), so, we are going to have to take some time to look at this in steps of thought.

Perhaps the best place to begin is to point out that this set of assertions is part of a much broader cultural agenda of imposing a priori materialistic scientism and secularism, dressed up in the prestigious (one could even say, holy) lab coat. For instance, we can very clearly see this in the well-known cat-out-of-the-bag 1997 Lewontin NYRB review of Sagan’s The Demon-Haunted World:

. . . the problem is to get [the general public] to reject irrational and supernatural explanations of the world, the demons that exist only in their imaginations, and to accept a social and intellectual apparatus, Science, as the only begetter of truth . . . .

To Sagan, as to all but a few other scientists, it is self-evident that the practices of science provide the surest method of putting us in contact with physical reality . . . .

It is not that the methods and institutions of science somehow compel us to accept a material explanation of the phenomenal world, but, on the contrary, that we are forced by our a priori adherence to material causes [[–> another major begging of the question . . . ] to create an apparatus of investigation and a set of concepts that produce material explanations, no matter how counter-intuitive, no matter how mystifying to the uninitiated. Moreover, that materialism is absolute [[–> i.e. here we see the fallacious, indoctrinated, ideological, closed mind . . . ], for we cannot allow a Divine Foot in the door.

In attempting to defend this a priori ideological imposition, Lewontin then cites the eminent philosopher Lewis White Beck — oops, we thought that “science [is to be seen as] the only begetter of truth” — to the effect that:

. . . anyone who could believe in God could believe in anything. To appeal to an omnipotent deity is to allow that at any moment the regularities of nature may be ruptured, that miracles may happen

Of course, C S Lewis, in his famous essay, Miracles (and in several other places), long ago pointed out why this is patent nonsense. A world in which miracles are possible is not a chaotic world, but one ruled by an observable and intelligible general order. For, if all were chaos, something that is extraordinary — classically and pivotally, the resurrection of Jesus in fulfillment of prophecies and as witnessed by 500 who could not be shaken by dungeon, fire or sword (and which was recorded as history in multiple sources within their lifetime) — could not stand out as a sign pointing beyond the usual order to the intervention of a higher order of reality, UNLESS there is precisely that: “a usual order.”

That is why, as Dan Petersen recorded in his well-known article on ID (What’s the big deal about Intelligent Design?) from several years ago:

The attempt to equate science with materialism is a quite recent development, coming chiefly to the fore in the 20th century. Contrary to widespread propaganda, science is not something that arose after the dark, obscurantist forces of religion were defeated by an “enlightened” nontheistic worldview. The facts of history show otherwise.

IN HIS RECENT BOOK For the Glory of God, Rodney Stark argues “not only that there is no inherent conflict between religion and science, but that Christian theology was essential for the rise of science.” (His italics.) While researching this thesis, Stark found to his surprise that “some of my central arguments have already become the conventional wisdom among historians of science.” He is nevertheless “painfully aware” that most of the arguments about the close connection between Christian belief and the rise of science are “unknown outside narrow scholarly circles,” and that many people believe that it could not possibly be true.

Sometimes the most obvious facts are the easiest to overlook. Here is one that ought to be stunningly obvious: science as an organized, sustained enterprise arose only once in the history of Earth. Where was that? Although other civilizations have contributed technical achievements or isolated innovations, the invention of science as a cumulative, rigorous, systematic, and ongoing investigation into the laws of nature occurred only in Europe; that is, in the civilization then known as Christendom. Science arose and flourished in a civilization that, at the time, was profoundly and nearly exclusively Christian in its mental outlook.

That little bit of history is a big hint. He continues:

Recent scholarship in the history of science reveals that this commitment to rational, empirical investigation of God’s creation is not simply a product of the “scientific revolution” of the 16th and 17th centuries, but has profound roots going back at least to the High Middle Ages. The development of the university system in medieval times was, of course, almost entirely a product of the Church. Serious students of the period know that this was neither a time of stagnation, nor of repression of inquiry in favor of dogma. Rather, it was a time of great intellectual ferment and discovery, and the universities fostered rational, empirical, systematic inquiry . . . .

WHEN THE DISCOVERIES of science exploded in number and importance in the 1500s and 1600s, the connection with Christian belief was again profound. Many of the trailblazing scientists of that period when science came into full bloom were devout Christian believers, and declared that their work was inspired by a desire to explore God’s creation and discover its glories. Perhaps the greatest scientist in history, Sir Isaac Newton, was a fervent Christian who wrote over a million words on theological subjects. Other giants of science and mathematics were similarly devout: Boyle, Descartes, Kepler, Leibniz, Pascal. To avoid relying on what might be isolated examples, Stark analyzed the religious views of the 52 leading scientists from the time of Copernicus until the end of the 17th century. Using a methodology that probably downplayed religious belief, he found that 32 were “devout”; 18 were at least “conventional” in their religious belief; and only two were “skeptics.” More than a quarter were themselves ecclesiastics: “priests, ministers, monks, canons, and the like.”

Down through the 19th century, many of the leading figures in science were thoroughgoing Christians. A partial list includes Babbage, Dalton, Faraday, Herschel, Joule, Lyell, Maxwell, Mendel, and Thomson (Lord Kelvin). A survey of the most eminent British scientists near the end of the 19th century found that nearly all were members of the established church or affiliated with some other church.

In short, scientists who were committed Christians include men often considered to be fathers of the fields of astronomy, atomic theory, calculus, chemistry, computers, electricity, genetics, geology, mathematics, and physics. In the late 1990s, a survey found that about 40 percent of American scientists believe in a personal God and an afterlife — a percentage that is basically unchanged since the early 20th century. A listing of eminent 20th-century scientists who were religious believers would be far too voluminous to include here — so let’s not bring coals to Newcastle, but simply note that the list would be large indeed, including Nobel Prize winners.

Far from being inimical to science, then, the Judeo-Christian worldview is the only belief system that actually produced it.

In short, the notion that “supernaturalism” is inimical to science is simply false, false on grounds of sheer raw historical fact and related worldview trends. But, those who have been indoctrinated in the name of education in today’s schools probably will not know that, so the well-poisoning tactic Wiki and Lewontin indulged will not ring false to the already indoctrinated.

But it is not just a matter of Wikipedia or Richard Lewontin. The debates over the definition of science to be taught in schools in Kansas drew a joint letter from the US National Academy of Sciences (NAS) and the National Science Teachers Association (NSTA). In key excerpts:

“. . . the members of the Kansas State Board of Education who produced Draft 2-d of the KSES [[Kansas Science Education Standards] have deleted text defining science as a search for natural explanations of observable phenomena, blurring the line between scientific and other ways of understanding. Emphasizing controversy in the theory of evolution — when in fact all modern theories of science are continually tested and verified — and distorting the definition of science are inconsistent with our Standards and a disservice to the students of Kansas. Regretfully, many of the statements made in the KSES related to the nature of science and evolution also violate the document’s mission and vision. Kansas students will not be well-prepared for the rigors of higher education or the demands of an increasingly complex and technologically-driven world if their science education is based on these standards. Instead, they will put the students of Kansas at a competitive disadvantage as they take their place in the world.” [[Source: excerpt of retort. Emphases and explanatory parentheses added.]

Boiled down, parents and politicians in Kansas were being told their children were being held hostage to a secularist, a priori materialist definition of science, and were also expected (under threat of blacklisting their children, notice) to pretend that the obvious, that evolutionism has been controversial as a theory for many reasons for 150 years, is not so. No, no, no, science has proved (when, where, how, by the way?) that the world is matter and energy moving by forces of chance and necessity in space and time, and so the very definition of science must reflect that, and while we are at it, you will not be ready for higher education or good paying jobs unless you toe this party-line.

What was it that elicited such a harsh, threat-laced retort?

The 2005 corrective definition of science that sought to restore a more traditional, historically balanced and less ideologically loaded view than the one that had been imposed in 2001:

So, we see here institutions at the highest level in science and science education trying not only to impose a priori materialism on the definition of science [cf here also], but to pretend that an attempt to restore a more balanced definition would so cripple children that they could not function in jobs or in College.

To put this in balance, let us examine typical definitions of science from high quality dictionaries (yes, yes, science is broader and deeper than such definitions can convey, but that is a challenge faced by all basic science education, so I will provide a sample of what an informed summary looks like . . . ) in the years before the design controversy:

science: a branch of knowledge conducted on objective principles involving the systematized observation of and experiment with phenomena, esp. concerned with the material and functions of the physical universe. [Concise Oxford Dictionary, 1990 — and yes, they used the “z” Virginia!]

scientific method: principles and procedures for the systematic pursuit of knowledge [“the body of truth, information and principles acquired by mankind”] involving the recognition and formulation of a problem, the collection of data through observation and experiment, and the formulation and testing of hypotheses. [Webster’s 7th Collegiate Dictionary, 1965]

These in turn trace back to the sort of thoughts Newton famously put on record in his 1704 Query 31 to his Opticks:

As in Mathematicks, so in Natural Philosophy, the Investigation of difficult Things by the Method of Analysis, ought ever to precede the Method of Composition. This Analysis consists in making Experiments and Observations, and in drawing general Conclusions from them by Induction, and admitting of no Objections against the Conclusions, but such as are taken from Experiments, or other certain Truths. For Hypotheses are not to be regarded in experimental Philosophy. And although the arguing from Experiments and Observations by Induction be no Demonstration of general Conclusions; yet it is the best way of arguing which the Nature of Things admits of, and may be looked upon as so much the stronger, by how much the Induction is more general. And if no Exception occur from Phaenomena, the Conclusion may be pronounced generally. But if at any time afterwards any Exception shall occur from Experiments, it may then begin to be pronounced with such Exceptions as occur. By this way of Analysis we may proceed from Compounds to Ingredients, and from Motions to the Forces producing them; and in general, from Effects to their Causes, and from particular Causes to more general ones, till the Argument end in the most general. This is the Method of Analysis: And the Synthesis consists in assuming the Causes discover’d, and establish’d as Principles, and by them explaining the Phaenomena proceeding from them, and proving the Explanations. [[Emphases added.]

The language is more complex (and points to deep underlying issues) but the pattern reflected in the 2005 KSES definition and the high quality dictionaries is obvious. Science seeks to describe our world and its phenomena of interest accurately and systematically, understand/explain reliably, predict correctly, and guide sound technology and policy. To do so, we use “O, HI PET” — observe, hypothesise, infer & predict, make empirical tests. The resulting provisional but tested and found reliable body of knowledge has, for over 350 years now, made increasing contributions to development and prosperity.

Obviously, successful science simply does not need to make question-begging a priori ideological commitments to doctrinaire materialism.

What, then, lies behind what Wiki is doing, and what Lewontin, the NAS and the NSTA did?

Let us look in steps:

1 –> The attempt to tag Intelligent Design as a form of Creationism promoted by the Discovery Institute, is a case of Saul Alinsky Rules for Radicals name-calling and polarisation by selecting, framing, freezing and polarising a target; including by misleading labelling.

2 –> Indeed, Creationists themselves reject the idea that ID is Creationism, and they think it SHOULD become creationism. Not exactly the vaunted NPOV.

( . . . See how it pinches when the polarisation tactic is on the other foot? And, never mind, my observation is patently accurate, as I will go on to show.)

3 –> What the naive reader would not know is what I just had reason to highlight in a UD comment response to the “ID is Creationism in a cheap tuxedo” type accusation so often promoted by Ms Barbara Forrest of the NCSE and the Louisiana Humanists, and co. (Yes, motive mongering also pinches tightly when it is on the other foot.) Namely, the actual roots of design thought in developments in astrophysics, cosmology, molecular biology and origin of life research since the 1940’s and 50’s; pardon my details:

Thaxton . . . working with Bradley and Olsen, in 1984 — three years before the relevant US Supreme Court decision that is usually cited in “Creationism in a cheap tuxedo” narratives — developed the first technical design theory work . . . .

As in The Mystery of Life’s Origin, in which argumentation on thermodynamics, Geology, and related chemistry, polymer science, information issues and atmosphere science etc in the context of a prelife earth led them to conclude based on the unfavourable equilibria, that formation of relevant information-rich protein or RNA polymers in such a pre-life matrix was maximally implausible? Then, who went on to discuss the various protocell theories at the time critically and concluded that none of them were plausible?

Thence, concluded that the best explanation of formation of life was design, refusing to infer whether there was a “Creator” of such life within or beyond the cosmos, on grounds that the empirical evidence did not warrant such?

So, we are left to infer from Forrest’s graph [where, BTW, the relevant publishers of TMLO were not allowed to speak for themselves in the courtroom . . . ], that the only plausible explanation is an attempt to avoid the implications of a court ruling? . . . .

But if one is committed to the notion championed in the Wikipedia article I am currently marking up, that there is no technical merit to design arguments, there only remains sociological-psychological and political ones to account for its rise . . . .

I guess I should start at the level that Thaxton et al did not address as beyond the scope of their investigations, which is the level [of design theory] that does [appropriately] point beyond the cosmos [i.e. cosmological design theory].

For this, let me cite here a certain scientific hero of mine, the lifelong agnostic, Sir Fred Hoyle:

From 1953 onward, Willy Fowler and I have always been intrigued by the remarkable relation of the 7.65 MeV energy level in the nucleus of ¹²C to the 7.12 MeV level in ¹⁶O. If you wanted to produce carbon and oxygen in roughly equal quantities by stellar nucleosynthesis, these are the two levels you would have to fix, and your fixing would have to be just where these levels are actually found to be. Another put-up job? . . . I am inclined to think so. A common sense interpretation of the facts suggests that a super intellect [–> as in, a Cosmos-building super intellect] has “monkeyed” with the physics as well as the chemistry and biology, and there are no blind forces worth speaking about in nature. [F. Hoyle, Annual Review of Astronomy and Astrophysics, 20 (1982): 16.]

This seems to have been part of the conclusion of a talk he gave at Caltech in 1981. Let’s clip a little earlier:

The big problem in biology, as I see it, is to understand the origin of the information carried by the explicit structures of biomolecules. The issue isn’t so much the rather crude fact that a protein consists of a chain of amino acids linked together in a certain way, but that the explicit ordering of the amino acids endows the chain with remarkable properties, which other orderings wouldn’t give. The case of the enzymes is well known . . . If amino acids were linked at random, there would be a vast number of arrangements that would be useless in serving the purposes of a living cell. When you consider that a typical enzyme has a chain of perhaps 200 links and that there are 20 possibilities for each link, it’s easy to see that the number of useless arrangements is enormous, more than the number of atoms in all the galaxies visible in the largest telescopes. This is for one enzyme, and there are upwards of 2000 of them, mainly serving very different purposes. So how did the situation get to where we find it to be? This is, as I see it, the biological problem – the information problem . . . .

I was constantly plagued by the thought that the number of ways in which even a single enzyme could be wrongly constructed was greater than the number of all the atoms in the universe. So try as I would, I couldn’t convince myself that even the whole universe would be sufficient to find life by random processes – by what are called the blind forces of nature . . . . By far the simplest way to arrive at the correct sequences of amino acids in the enzymes would be by thought, not by random processes . . . .

Now imagine yourself as a superintellect working through possibilities in polymer chemistry. Would you not be astonished that polymers based on the carbon atom turned out in your calculations to have the remarkable properties of the enzymes and other biomolecules? Would you not be bowled over in surprise to find that a living cell was a feasible construct? Would you not say to yourself, in whatever language supercalculating intellects use: Some supercalculating intellect must have designed the properties of the carbon atom, otherwise the chance of my finding such an atom through the blind forces of nature would be utterly minuscule. Of course you would, and if you were a sensible superintellect you would conclude that the carbon atom is a fix.
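The arithmetic behind Hoyle's remark can be checked in a few lines. What follows is only a rough sketch: the 200 links and 20 possibilities per link come from the quotation, while the figure of about 10^80 atoms in the observable universe is a commonly cited order-of-magnitude estimate that I am assuming for comparison. Note also that the code counts all possible chains, not specifically the "useless" ones; it says nothing about what fraction of chains would be functional.

```python
import math

# Figures taken from the Hoyle quotation above.
CHAIN_LENGTH = 200    # links in a "typical enzyme"
ALPHABET_SIZE = 20    # amino-acid possibilities per link

# Assumption (not from the quotation): a commonly cited order-of-magnitude
# estimate for the number of atoms in the observable universe, as log10.
ATOMS_IN_UNIVERSE_LOG10 = 80


def sequence_space_log10(length: int, alphabet: int) -> float:
    """Return log10 of the number of distinct chains of the given length."""
    return length * math.log10(alphabet)


def sequence_space_bits(length: int, alphabet: int) -> float:
    """Return the same count expressed in bits (log base 2)."""
    return length * math.log2(alphabet)


log10_sequences = sequence_space_log10(CHAIN_LENGTH, ALPHABET_SIZE)
bits = sequence_space_bits(CHAIN_LENGTH, ALPHABET_SIZE)

print(f"chains of {CHAIN_LENGTH} links: about 10^{log10_sequences:.0f}")
print(f"raw information capacity: about {bits:.0f} bits")
print(f"exceeds 10^{ATOMS_IN_UNIVERSE_LOG10} atoms: "
      f"{log10_sequences > ATOMS_IN_UNIVERSE_LOG10}")
```

On these figures the space of possible chains comes out near 10^260, or roughly 864 bits of raw capacity, which is far beyond the assumed atom count.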

OF COURSE, IT IS WORSE THAN THIS.

It turns out that on many dimensions of fine tuning, our cosmos spits out the following first four atoms: H, He, C, O, with N nearly 5th overall, and 5th for our galaxy. That gets us to stars, the rest of the periodic table, organic chemistry, water, terrestrial rocks [oxides or oxygen rich ceramics] and proteins.

That I find is a big clue.

Where, we must then see what Hoyle also said:

I do not believe that any physicist who examined the evidence could fail to draw the inference that the laws of nuclear physics have been deliberately designed with regard to the consequences they produce within stars. [[“The Universe: Past and Present Reflections.” Engineering and Science, November, 1981. pp. 8–12]

In short, the numbers do not add up as Ms Forrest would have us believe, and the personality who is actually pivotal — evidently including for Thaxton et al — is not by any means a Christian, but a lifelong agnostic.

And remember, the cosmological ID thinking emerged first, from the 1950’s to 70’s. It ties naturally into the issues being run into by OOL researchers who had by the 1970’s realised they had to account for functionally specific complex information in biology.

Here we need to remember Orgel and Wicken (it’s all there in the IOSE, folks):

ORGEL, 1973: . . . In brief, living organisms are distinguished by their specified complexity. Crystals are usually taken as the prototypes of simple well-specified structures, because they consist of a very large number of identical molecules packed together in a uniform way. Lumps of granite or random mixtures of polymers are examples of structures that are complex but not specified. The crystals fail to qualify as living because they lack complexity; the mixtures of polymers fail to qualify because they lack specificity. [[The Origins of Life (John Wiley, 1973), p. 189.]

WICKEN, 1979: ‘Organized’ systems are to be carefully distinguished from ‘ordered’ systems. Neither kind of system is ‘random,’ but whereas ordered systems are generated according to simple algorithms [[i.e. “simple” force laws acting on objects starting from arbitrary and commonplace initial conditions] and therefore lack complexity, organized systems must be assembled element by element according to an [[originally . . . ] external ‘wiring diagram’ with a high information content . . . Organization, then, is functional complexity and carries information. It is non-random by design or by selection, rather than by the a priori necessity of crystallographic ‘order.’ [[“The Generation of Complexity in Evolution: A Thermodynamic and Information-Theoretical Discussion,” Journal of Theoretical Biology, 77 (April 1979): p. 353, of pp. 349-65. (Emphases and notes added. Nb: “originally” is added to highlight that for self-replicating systems, the blue print can be built-in.)]

The source of my descriptive term, functionally specific complex information [and related organisation], per the conduit of TMLO, should be obvious.

In short, [the] whole complex collapses, collapses on the grounds that its timeline is wrong and the forebears of intelligent design thought as a scientific research programme are not as [Wiki and others of like ilk] imagine.

4 –> So, clearly, we are seeing the willfully negligent — at the level of Ms Forrest (or even Wiki, given how influential it is as a reference web site) there are pretty serious duties of care to truth and fairness — substitution of a false history for an actual one, in order to enable rules for radicals polarisation, framing and smearing tactics.

5 –> That would already be enough to indict the article as irresponsible and in need of severe correction and permanent acknowledgement by a prominent notice of apology that they have had to be corrected. (Not that I am holding my breath in expectation of their acting in light of basic broughtupcy.)

6 –> What about “theistic realism” or “theistic science”? In another article, Wiki informs:

Theistic science, also referred to as theistic realism[1], is the viewpoint that methodological naturalism should be replaced by a philosophy of science that is informed by supernatural revelation[2], which would allow occasional supernatural explanations particularly in topics that impact theology; as for example evolution.[3] Supporters of this viewpoint include intelligent design creationism proponents J. P. Moreland, Alvin Plantinga, Stephen C. Meyer[4][5] and Phillip E. Johnson.[1][6]

7 –> Notice, first, the label and dismiss tactics, where there are no movements that would accept the label “intelligent design creationists” as an accurate or fair or “neutral point of view” characterisation. That is, we see just how far and wide the rules for radicals polarisation and well poisoning tactics are spread in Wiki.

8 –> But when we see a red herring distractor led away to a strawman caricature soaked in ad hominems and set alight, clouding, choking, poisoning and polarising the atmosphere, we need to go looking for the inconvenient truth that must be distracted from by any means deemed “necessary.”

9 –> And, it is not too hard to find: methodological naturalism (the backdoor a priori imposition of Lewontinian a priori materialism through the seemingly innocuous suggestion that this is the long term, successful method of science) has to be guarded at all costs.

10 –> But obviously, if you a priori rule that science can only operate in an evolutionary materialist circle of naturalistic explanations, you are begging big questions. The very word “science” gives a warning, as it is a slightly modified form of the Latin for knowledge: warranted, credibly true beliefs.

11 –> That is, what is being sacrificed here is the key concept that science should as far as possible be an open-minded, fair investigation of the truth about our world, in light of empirical evidence from observation, experiment, logically (and wherever possible, mathematically) driven analysis and open, uncensored but respectful discussion among the informed, etc.

12 –> That is, science is being taken captive to a priori, question-begging materialist ideology.

13 –> So, it is unsurprising to see that eminent philosophers of the ilk of a Plantinga (or even a Moreland etc), would point that out. To tag and dismiss them with a pejorative label is thus inexcusable.

14 –> The obsession with projecting the “Intelligent Design Creationism” smear by mis-labelling, then leads on to a strawman caricature of the purpose of empirically based, design detection methods. Not, to “support . . . the existence of a designer,” but instead to identify whether and how reliably, we may detect from observable characteristics of objects and phenomena, to what extent they were produced by processes traceable to the default — chance and/or mechanical necessity — on the one hand, or design on the other. That is, as the contrasted NWE online encyclopedia article begins:

Intelligent design (ID) is the view that it is possible to infer from empirical evidence that “certain features of the universe and of living things are best explained by an intelligent cause, not an undirected process such as natural selection” . . .

15 –> In short, the issue here is, whether there are features of objects and phenomena in nature that may be empirically investigated and which will — per inductive testing (with potential for falsification) — reliably indicate that they are caused by design. These signs, of course, include specified complexity and irreducible complexity, among others.

16 –> Which immediately puts them in Wiki’s cross-hairs:

The scientific community rejects the extension of science to include supernatural explanations in favor of continued acceptance of methodological naturalism,[n 3][n 4][6][7] and has rejected both irreducible complexity and specified complexity for a wide range of conceptual and factual flaws . . .

17 –> “No true Scotsman [Scientist . . . ].” Of course, the evolutionary materialism dominated school of thought rejects CSI and IC as credible indicators of design, but in a context where the methods used to make that determination are tainted by a priori materialism, such an appeal to consensus is immediately tainted.

18 –> On a more objective view, it should be clear that especially functionally specific complex organisation and associated information is a well-known, reliable and strong indicator of design as causal process.

19 –> For a simple instance, consider the text of this post: it is complex, specific and functional as script in the English Language. No-one in his or her right mind would dream of assigning it by definition to chance and necessity spewing forth lucky noise that then propagated across the Internet and voila, on pain of deeming those who dare suggest such “pseudoscientific.”

20 –> So, why is it that, once the question of origins is on the table, to look at the functionally specific digital code in the genome of the living cell and notice that it is a linguistic and algorithmic fact, which cries out for proper explanation on causes known to be adequate to write code to execute algorithms, leads to such accusations? (Where algorithms are step by step finite procedures that work to practically attain specific targeted end states. That is, they are purposeful. Goal-targeted behaviour being a well-known characteristic of mind.)

21 –> Do we have credible cases where from scratch and without intelligent design and direction (even, coded in a genetic algorithm or the like), purely by blind chance and mechanical necessity, codes, algorithms and data structures have organised themselves out of the chaos of odds and ends that are just lying around and have in so doing exceeded 500 – 1,000 bits of complexity?

22 –> Despite a lot of huffing and puffing, puffs of smoke and flashing mirrors with abracadabra hand waving to the contrary, NO.

23 –> What we do have is a growing global industry where skilled and knowledgeable designers are paid very well thank you, to create such coded algorithms and data structures to carry out algorithms.

24 –> In addition, we have a world full of libraries and a whole Internet full of billions of further cases on the point. Namely, such FSCO/I, especially digitally coded functionally specific, complex information (dFSCI) as we have focussed on, is indeed a reliable sign of design. (And one that can be tested and in principle overturned by contrary observations, though the related needle in the haystack analysis shows why that is going to be quite hard to do.)

25 –> The FSCI in or implied by an object can be quantified and measured as well, e.g. by the simplified Chi metric that has often been discussed here at UD:

Chi = Ip*S – 500, in bits beyond a “complex enough” threshold

- NB: If S = 0 [–> the default, it is only where there is positive and observable reason to infer that something is functionally specific that S = 1], this locks us at Chi = – 500; and, if Ip is less than 500 bits, Chi will be negative even if S is positive.

- E.g.: a string of 501 coins tossed at random will have S = 0, but if the coins are arranged to spell out a message in English using the ASCII code [[notice independent specification of a narrow zone of possible configurations, T], Chi will — unsurprisingly — be positive.

- Following the logic of the per aspect necessity vs chance vs design causal factor explanatory filter, the default value of S is 0, i.e. it is assumed that blind chance and/or mechanical necessity are adequate to explain a phenomenon of interest.

- S goes to 1 when we have objective grounds — to be explained case by case — to assign that value.

- That is, we need to justify why we think the observed cases E come from a narrow zone of interest, T, that is independently describable, not just a list of members E1, E2, E3 . . . ; in short, we must have a reasonable criterion that allows us to build or recognise cases Ei from T, without resorting to an arbitrary list.

- A string at random is a list with one member, but if we pick it as a password, it is now a zone with one member. (Where also, a lottery is a sort of inverse password game where we pay for the privilege; and where the complexity has to be carefully managed to make it winnable.)

- An obvious example of such a zone T, is code symbol strings of a given length that work in a programme or communicate meaningful statements in a language based on its grammar, vocabulary etc. This paragraph is a case in point, which can be contrasted with typical random strings ( . . . 68gsdesnmyw . . . ) or repetitive ones ( . . . ftftftft . . . ); where we can also see by this case how such a case can enfold random and repetitive sub-strings.

- Arguably — and of course this is hotly disputed — DNA protein and regulatory codes are another. Design theorists argue that the only observed adequate cause for such is a process of intelligently directed configuration, i.e. of design, so we are justified in taking such a case as a reliable sign of such a cause having been at work. (Thus, the sign then counts as evidence pointing to a perhaps otherwise unknown designer having been at work.)

- So also, to overthrow the design inference, a valid counter example would be needed, a case where blind mechanical necessity and/or blind chance produces such functionally specific, complex information. (Points xiv – xvi above outline why that will be hard indeed to come up with. There are literally billions of cases where FSCI is observed to come from design.)
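The bullet points above can be sketched in a few lines of Python. This is only a minimal illustration of the simplified metric as stated, not a definitive implementation: the function name `chi_500` and its parameter names are hypothetical, and the value of S must still be justified case by case, as noted above.

```python
def chi_500(ip_bits: float, s: int = 0, threshold: int = 500) -> float:
    """Simplified Chi metric: Chi = Ip*S - threshold, in bits beyond the threshold.

    ip_bits   : the information measure Ip, in bits
    s         : specificity flag; 0 by default, 1 only where there are
                objective grounds to assign it
    threshold : the "complex enough" threshold (500 bits in the text)
    """
    if s not in (0, 1):
        raise ValueError("S must be 0 or 1")
    return ip_bits * s - threshold


# 501 coins tossed at random: S stays at its default of 0, so Chi = -500.
random_coins = chi_500(501)

# 501 coins arranged to spell an English message in ASCII: S = 1, so Chi = 1.
message_coins = chi_500(501, s=1)

# A specific but short string (under 500 bits): Chi is negative even with S = 1.
short_string = chi_500(200, s=1)

print(random_coins, message_coins, short_string)
```

Keeping S as an explicit argument with a default of 0 mirrors the explanatory filter's default: the metric stays locked below zero unless a positive reason to set S = 1 is supplied.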

xxii: So, we have some reason to suggest that if something, E, is based on specific information describable in a way that does not just quote E and requires at least 500 specific bits to store the specific information, then the most reasonable explanation for the cause of E is that it was designed. The metric may be directly applied to biological cases:

Using Durston’s Fits values — functionally specific bits — from his Table 1, to quantify I, so also accepting functionality on specific sequences as showing specificity giving S = 1, we may apply the simplified Chi_500 metric of bits beyond the threshold:

RecA: 242 AA, 832 fits, Chi: 332 bits beyond

SecY: 342 AA, 688 fits, Chi: 188 bits beyond

Corona S2: 445 AA, 1285 fits, Chi: 785 bits beyond
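As a check on the arithmetic, the three Chi values quoted above follow directly from subtracting the 500-bit threshold from the Fits values, taking S = 1. A minimal sketch, in which the names `proteins` and `bits_beyond` are illustrative assumptions and the figures are simply those quoted in the text from Durston's Table 1:

```python
THRESHOLD_BITS = 500

# (amino-acid length, Fits) as quoted in the text from Durston's Table 1
proteins = {
    "RecA": (242, 832),
    "SecY": (342, 688),
    "Corona S2": (445, 1285),
}


def bits_beyond(fits: int, s: int = 1) -> int:
    """Simplified Chi_500: Fits*S minus the 500-bit threshold."""
    return fits * s - THRESHOLD_BITS


results = {name: bits_beyond(fits) for name, (_aa, fits) in proteins.items()}

for name, chi in results.items():
    aa, fits = proteins[name]
    print(f"{name}: {aa} AA, {fits} fits, Chi: {chi} bits beyond")
```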

xxiii: And, this raises the controversial question that biological examples such as DNA — which in a living cell is much more complex than 500 bits — may be designed to carry out particular functions in the cell and the wider organism.

26 –> We are entitled to take such results seriously in scientific investigations, and accordingly it is reasonable to view the process of inferring to best, empirically grounded scientific explanation in that light, e.g.:

27 –> What is happening on matters of origins, especially of cell based life and of body plans, is that there is an a priori imposition of materialism, so the materialists are committed to the idea that there CANNOT be a designer present. So, they see no harm in locking in that idea through imposing the methodological constraint that science must explain naturalistically, seeing only the despised, suspect possibility, “the supernatural.”

28 –> But obviously, this sort of question-begging only leads them to lock out the evidence before it can speak. And in particular, they need to ask themselves whether they have listened to the point made ever since Plato in The Laws, Bk X, 2350 years ago, that there is another alternative to “natural” (= blind chance plus mechanical necessity acting on matter and energy in space and time) in causal explanations, i.e. the ARTificial. That is, design.

29 –> Design is obviously an empirical phenomenon, and it often leaves characteristic traces, such as FSCO/I. So, why not allow the evidence to speak for itself, through its characteristic signs? Surely, if science were concerned to discover the truth about our world in light of observable, factual evidence evaluated fairly and logically (which is a big part of the reason why the public respects it), that would be a no-brainer.

30 –> Sadly, and obviously on what we have already seen, that is not the case today.

31 –> Which is another way of saying, science has been taken ideological captive, and is being corrupted by that captivity. In turn, that implies that what is at stake in the design theory debates, is the restoration of the integrity of science.

32 –> But, what about irreducible complexity?

33 –> This concept is closely connected to the idea (easily confirmed by anyone who has had to put together a complex circuit board or troubleshoot a car with a mysterious fault) that when we have multi-part function that is strongly dependent on how the parts are arranged and coupled together, then for a complicated object, not just any old way of dashing bits and pieces or sub-assemblies together will work. That is, complex, specific, multipart function dependent on well-matched and properly arranged parts naturally comes in deeply isolated islands of function within broader spaces of possible configurations.

34 –> That poses a serious challenge for proposed mechanisms of evolution that depend on creating novel function based on such configurations, for the intervening seas of non-function between relevant islands could well make it all but impossible to practically bridge from one working island to another.

35 –> And, the sort of incremental changes within such islands of function as we find in body plans, do not answer to this problem. That is, an explanatory mechanism that plausibly accounts for varying proportions of white and black moths in an area or the size of finch beaks or the loss of eyes in blind cave fish, does not easily account for the origin of the body plans for moths, birds and fish by simple extrapolation of gradually branching incremental changes tracing back to some remote unicellular organism. (And the complex arrangements of molecules to make such a living cell with metabolic and replication facilities also needs to be accounted for.)

36 –> Why that is so, can be seen from Menuge’s criteria C1 – 5, which highlight the iconic case of the bacterial flagellum but are broadly applicable:

C1: Availability. Among the parts available for recruitment to form the flagellum, there would need to be ones capable of performing the highly specialized tasks of paddle, rotor, and motor, even though all of these items serve some other function or no function.

C2: Synchronization. The availability of these parts would have to be synchronized so that at some point, either individually or in combination, they are all available at the same time.

C3: Localization. The selected parts must all be made available at the same ‘construction site,’ perhaps not simultaneously but certainly at the time they are needed.

C4: Coordination. The parts must be coordinated in just the right way: even if all of the parts of a flagellum are available at the right time, it is clear that the majority of ways of assembling them will be non-functional or irrelevant.

C5: Interface compatibility. The parts must be mutually compatible, that is, ‘well-matched’ and capable of properly ‘interacting’: even if a paddle, rotor, and motor are put together in the right order, they also need to interface correctly.

( Agents Under Fire: Materialism and the Rationality of Science, pgs. 104-105 (Rowman & Littlefield, 2004). HT: ENV.)

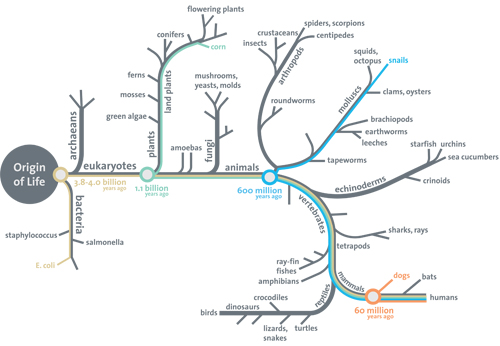

37 –> That is, the irreducible complexity issue challenges darwinists to provide empirical warrant for the detailed pattern of rooting — OOL — and major branches — origin of body plans — for the tree of life promoted ever since Darwin as the best explanation for the origin and diversity of life forms:

38 –> On fair comment, this classic icon of evolution (it is in fact the ONLY illustration in Darwin’s Origin of Species) presents as though it were established fact the incremental pattern of rooting and branching that would be necessary to actually empirically ground the macroevolutionary picture, but fails to provide actual empirical warrant. It makes plausible what is not, after 150 years, actually shown as so. And, particularly, it fails to show that blind chance variations and differential success in ecological niches account for the body plan level diversity of life.

39 –> To overturn IC, of course, all that would be needed is to actually provide observational evidence of the incremental origin of such body plans. But ever since the days when Darwin puzzled over the Cambrian life revolution, that evidence has been conspicuously missing. Never mind the 150 years of scouring the relevant fossil beds, which have simply underscored the pattern that was already evident in Darwin’s day, and which has been aptly summarised by Gould in a classic citation:

“The extreme rarity of transitional forms in the fossil record persists as the trade secret of paleontology. The evolutionary trees that adorn our textbooks have data only at the tips and nodes of their branches; the rest is inference, however reasonable, not the evidence of fossils. Yet Darwin was so wedded to gradualism that he wagered his entire theory on a denial of this literal record:

The geological record is extremely imperfect and this fact will to a large extent explain why we do not find intermediate varieties, connecting together all the extinct and existing forms of life by the finest graduated steps [[ . . . . ] He who rejects these views on the nature of the geological record will rightly reject my whole theory.[[Cf. Origin, Ch 10, “Summary of the preceding and present Chapters,” also see similar remarks in Chs 6 and 9.]

Darwin’s argument still persists as the favored escape of most paleontologists from the embarrassment of a record that seems to show so little of evolution. In exposing its cultural and methodological roots, I wish in no way to impugn the potential validity of gradualism (for all general views have similar roots). I wish only to point out that it was never “seen” in the rocks.

Paleontologists have paid an exorbitant price for Darwin’s argument. We fancy ourselves as the only true students of life’s history, yet to preserve our favored account of evolution by natural selection we view our data as so bad that we never see the very process we profess to study.” [[Stephen Jay Gould, ‘Evolution’s erratic pace’. Natural History, vol. LXXXVI(5), May 1977, p.14. (Kindly note, that while Gould does put forward claimed cases of transitions elsewhere, that cannot erase the facts that he published in the peer reviewed literature in 1977 and was still underscoring in 2002, 25 years later, as well as what the theory he helped co-found set out to do. Sadly, this needs to be explicitly noted, as some would use such remarks to cover over the points just highlighted. Also, note that this is in addition to the problem of divergent molecular trees and the top-down nature of the Cambrian explosion.)] [[HT: Answers.com]

___________________

So, we can easily see that the concluding dismissal of design theory in the wiki article is quite misleading and tendentious:

Intelligent design is viewed as a pseudoscience by the scientific community, because it lacks empirical support, offers no tenable hypotheses, and aims to describe natural history in terms of scientifically untestable supernatural causes.

These claims are simply not true, are lacking in proper warrant, and run counter to the evident facts as summarised at introductory level above. They should be withdrawn and apologised for, and a permanent notice of that need to retract and apologise should be affixed at the head of the article. END

PS: To comment on this post, please go to the discussion thread here, for the root article.

F/N: It bears noting that the above (though it is a bit longer than the stated number of words) is about the length of the challenge essay I have called for to show the warrant for the Darwinist account of origin and diversification of life, from OOL on.