AI — artificial intelligence — is emerging as a future-driver. For example, we have been hearing of driver-less cars, and now we have helmsman-less barges:

>>The world’s first fully electric, emission-free and potentially crewless container barges are to operate from the ports of Antwerp, Amsterdam, and Rotterdam from this summer.

The vessels, designed to fit beneath bridges as they transport their goods around the inland waterways of Belgium and the Netherlands, are expected to vastly reduce the use of diesel-powered trucks for moving freight.

Dubbed the “Tesla of the canals”, their electric motors will be driven by 20-foot batteries, charged on shore by the carbon-free energy provider Eneco.

The barges are designed to operate without any crew, although the vessels will be manned in their first period of operation as new infrastructure is erected around some of the busiest inland waterways in Europe.

In August, five barges – 52 metres long and 6.7m wide, and able to carry 24 20ft containers weighing up to 425 tonnes – will be in operation. They will be fitted with a power box giving them 15 hours of power. As there is no need for a traditional engine room, the boats have up to 8% extra space, according to their Dutch manufacturer, Port Liner.

About 23,000 trucks, mainly running on diesel, are expected to be removed from the roads as a result . . . >>

Of course, such articles tend to leave off “minor” details such as just how dirty the semiconductor manufacturing business is. As for “carbon [emissions] free” I will believe it when I see it demonstrated. Let’s simply say instead, renewables.

The significant point for us is that AI is emerging into the marketplace as a potential future-driver: a good slice of the technologies likely to drive the next generation of economy-dominating industries that will shape our future. That brings the economy-driving long wave thinking of Kondratiev, Schumpeter and successors to the fore as the world moves beyond the great recession from 2008 on, which still has lingering impacts. And BTW, I have been told of huge former office-cubicle spaces that now host racks of computers running AI-driven trading programs for big-ticket investment houses.

Let’s pause and ponder a bit on the Long-wave view of the global economy, using here a “live” working chart:

Someone will doubtless ask: that’s all well and good as part of an ongoing UD Sci-Tech watch, but how is this relevant to the core ID focus of this blog?

Glad you asked.

Here is Science Daily, citing a somewhat older version of Wikipedia in a sci-tech backgrounder piece:

>>The modern definition of artificial intelligence (or AI) is “the study and design of intelligent agents” where an intelligent agent is a system that perceives its environment and takes actions which maximizes its chances of success.

John McCarthy, who coined the term in 1956, defines it as “the science and engineering of making intelligent machines.”

Other names for the field have been proposed, such as computational intelligence, synthetic intelligence or computational rationality.

The term artificial intelligence is also used to describe a property of machines or programs: the intelligence that the system demonstrates.

AI research uses tools and insights from many fields, including computer science, psychology, philosophy, neuroscience, cognitive science, linguistics, operations research, economics, control theory, probability, optimization and logic.

AI research also overlaps with tasks such as robotics, control systems, scheduling, data mining, logistics, speech recognition, facial recognition and many others.

Computational intelligence

Computational intelligence involves iterative development or learning (e.g., parameter tuning in connectionist systems).

Learning is based on empirical data and is associated with non-symbolic AI, scruffy AI and soft computing.

Subjects in computational intelligence as defined by IEEE Computational Intelligence Society mainly include:

Neural networks: trainable systems with very strong pattern recognition capabilities.

Fuzzy systems: techniques for reasoning under uncertainty, have been widely used in modern industrial and consumer product control systems; capable of working with concepts such as ‘hot’, ‘cold’, ‘warm’ and ‘boiling’.

Evolutionary computation: applies biologically inspired concepts such as populations, mutation and survival of the fittest to generate increasingly better solutions to the problem.

These methods most notably divide into evolutionary algorithms (e.g., genetic algorithms) and swarm intelligence (e.g., ant algorithms).

With hybrid intelligent systems, attempts are made to combine these two groups.

Expert inference rules can be generated through neural network or production rules from statistical learning such as in ACT-R or CLARION.

It is thought that the human brain uses multiple techniques to both formulate and cross-check results.>>

The current version of Wikipedia says outright: “In computer science AI research is defined as the study of ‘intelligent agents’: any device that perceives its environment and takes actions that maximize its chance of success at some goal.[1]” It adds: “Colloquially, the term ‘artificial intelligence’ is applied when a machine mimics ‘cognitive’ functions that humans associate with other human minds, such as ‘learning’ and ‘problem solving’.[2]”
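To make the "intelligent agent" definition concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption and nothing comes from Wikipedia or Science Daily: the thermostat, the 21 degree target, and the names `choose_action`, `thermostat_score` and `run_agent` are made up for the purpose. The agent perceives one number, scores each available action, and takes the one that scores best:

```python
from typing import Callable, Dict, Sequence

def choose_action(percept: float,
                  actions: Sequence[str],
                  score: Callable[[float, str], float]) -> str:
    """Return the action with the highest estimated success for this percept."""
    return max(actions, key=lambda a: score(percept, a))

# Hypothetical example: a thermostat whose goal is a room near 21 degrees C.
TARGET_C = 21.0
EFFECT_C: Dict[str, float] = {"heat": +1.0, "idle": 0.0, "cool": -1.0}

def thermostat_score(temp_c: float, action: str) -> float:
    """Higher is better: negative distance from the target after acting."""
    return -abs((temp_c + EFFECT_C[action]) - TARGET_C)

def run_agent(temp_c: float, steps: int) -> float:
    """Perceive, choose, act, repeat; return the final temperature."""
    for _ in range(steps):
        action = choose_action(temp_c, list(EFFECT_C), thermostat_score)
        temp_c += EFFECT_C[action]
    return temp_c
```

Starting from 18 degrees, `run_agent(18.0, 10)` heats for three steps and then idles at the target.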

In short, surprise — NOT, we are back at a triple-point fuzzy inter-disciplinary border between [a] sci-tech, [b] mathematics (including computing) and [c] philosophy.

That should be duly noted for when the usual objections come up that we must focus on “Science” or aren’t focussing enough on “Science.” Science isn’t just Science — and it never was. There was a reason why Newton’s key work was on The Mathematical Principles of Natural Philosophy, and why “Science” [meaning knowledge] originally meant more or less, the body of established results and findings of a methodical, objective field of study.

Backing up a tad, here is Merriam-Webster on AI:

Definition of artificial intelligence

1 : a branch of computer science dealing with the simulation of intelligent behavior in computers

2 : the capability of a machine to imitate intelligent human behavior

Of course one of the sharks lurking here is that in evolutionary materialistic scientism, it is assumed that our brains and central nervous systems evolved as natural computers that somehow threw up consciousness and rationality. Sir Francis Crick in The Astonishing Hypothesis (1994) is perhaps the most frank in saying this:

“You”, your joys and your sorrows, your memories and your ambitions, your sense of personal identity and free will, are in fact no more than the behaviour of a vast assembly of nerve cells and their associated molecules. As Lewis Carroll’s Alice might have phrased: “You’re nothing but a pack of neurons.” This hypothesis is so alien to the ideas of most people today that it can truly be called astonishing.

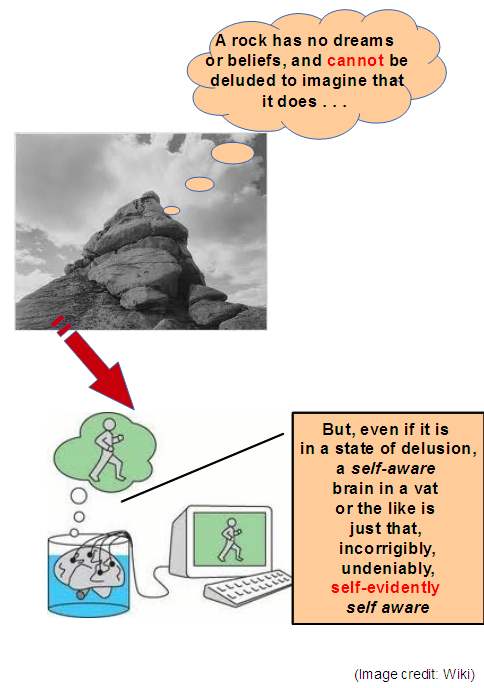

The scare-quoted “You,” the “in fact” assertion and the You’re “nothing but a pack of neurons” tell the story. Indeed, we can see the view as reducing mindedness to:

Engineer Derek Smith has given us a less philosophically loaded framework, and we can present it in simplified form:

Here, we can see ourselves as biological cybernetic systems that interact with the external world under the influence of a two-tier controller. The lower level in-the-loop input-output controller is itself further directed by a supervisory controller that allows room for intent, strategy, decision beyond ultimately deterministic and/or stochastic branch programming, and more. Is such “just” software? Is such an extra-dimensional entity that interfaces through say quantum influences? And more? (We can leave that open to further discussions.)
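For those who think in code, the two-tier arrangement can be sketched roughly as follows. This is a toy under stated assumptions, not Smith's model: the class names `LoopController` and `Supervisor`, the proportional gain, the waypoints and the one-line "plant" are all hypothetical. The lower tier only tracks whatever setpoint it is handed; the upper tier decides what the setpoint should be.

```python
from dataclasses import dataclass

@dataclass
class LoopController:
    """Lower tier: in-the-loop proportional controller."""
    gain: float = 0.5
    setpoint: float = 0.0

    def command(self, measured: float) -> float:
        return self.gain * (self.setpoint - measured)

@dataclass
class Supervisor:
    """Upper tier: sets goals for the loop controller, never the plant."""
    waypoints: tuple = (1.0, 3.0)
    tolerance: float = 0.05
    index: int = 0

    def direct(self, loop: LoopController, measured: float) -> None:
        # Move on to the next goal once the current one has been reached.
        reached = abs(measured - self.waypoints[self.index]) < self.tolerance
        if reached and self.index < len(self.waypoints) - 1:
            self.index += 1
        loop.setpoint = self.waypoints[self.index]

def simulate(steps: int = 40) -> float:
    """Run both tiers against a trivial plant; return the final position."""
    position = 0.0
    loop, boss = LoopController(), Supervisor()
    for _ in range(steps):
        boss.direct(loop, position)
        position += loop.command(position)  # plant: a simple integrator
    return position
```

With the default settings the plant closes in on the first waypoint, the supervisor then switches the goal, and the loop drives on to the second. Of course the supervisor here is itself only a few lines of branch programming, so the sketch illustrates the architecture and leaves the open questions above exactly where they were.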

Oh, let me add this, as a tiny little first push-back on the gap between computational substrates and conscious, responsibly and rationally significantly free, morally governed agency:

The skinny is that what an intelligence is, what agency is, what responsible, rational freedom is and what computational substrates can do are all up for grabs, and this will get more and more involved as AI systems make it into the economy.

All of this leads to an interesting technology, memristors.

Memory + resistors.

Resistors with programmable memories that can be used in a more or less digital mode as storage units or as multiple level or continuous state elements amenable to neural network programming.

As storage units, for some years there has been talk of 100 Tera Byte storage units. Yes, 1 * 10^14 Bytes, or 8 * 10^14 bits. We’ll see on that soon enough. However, that would make low-cost high-density technology available for the much more interesting neural networks application.
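As a quick check on that arithmetic, here is a tiny sketch. It assumes the decimal (SI) sense of "tera", i.e. 10^12; the function name `capacity` is just for illustration.

```python
from typing import Tuple

TERA = 10**12  # decimal (SI) prefix, assumed here

def capacity(terabytes: int) -> Tuple[int, int]:
    """Return (bytes, bits) for a capacity given in decimal terabytes."""
    n_bytes = terabytes * TERA
    return n_bytes, 8 * n_bytes
```

`capacity(100)` gives 10^14 bytes and 8 * 10^14 bits, matching the figures above. On the binary reading (2^40 bytes per unit) the byte count would instead be about 1.1 * 10^14.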

Where, refresher, a neural network (in light of the way neurons interconnect):

On the interesting bit, first, Physics dot org:

>>Transistors based on silicon, which is the main component of computer chips, work using a flow of electrons. If the flow of electrons is interrupted in a transistor, all information is lost. However, memristors are electrical devices with memory; their resistance is dependent on the dynamic evolution of internal state variables. In other words, memristors can remember the amount of charge that was flowing through the material and retain the data even when the power is turned off.

“Memristors can be used to create super-fast memory chips with more data at less energy consumption,” Hu says.

Additionally, a transistor is confined by binary codes—all the ones and zeros that run the internet, Candy Crush games, Fitbits and home computers. In contrast, memristors function in a similar way to a human brain using multiple levels, actually every number between zero and one. Memristors will lead to a revolution for computers and provide a chance to create human-like artificial intelligence.

“Different from an electrical resistor that has a fixed resistance, a memristor possesses a voltage-dependent resistance,” Hu explains, adding that a material’s electric properties are key. “A memristor material must have a resistance that can reversibly change with voltage.”>>

In short, rewritable essentially analogue storage units capable of use in neural networks, thus AI systems.
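A toy model may help fix ideas. It is a deliberately crude sketch, not a description of any real device: the class name `ToyMemristor`, the constants and the simple "state drifts in proportion to current" rule are all assumptions chosen for illustration. What it does show is the three properties just quoted: the resistance depends on an internal state, the state is retained with no voltage applied, and the change is reversible.

```python
class ToyMemristor:
    """Resistor whose value depends on a remembered internal state x."""

    def __init__(self, r_on=100.0, r_off=16000.0, k=1e4, x=0.1):
        # All constants are made up for illustration.
        self.r_on, self.r_off, self.k = r_on, r_off, k
        self.x = x  # internal state in [0, 1]; any value in between is allowed

    @property
    def resistance(self) -> float:
        # Blend between the low- and high-resistance limits.
        return self.r_on * self.x + self.r_off * (1.0 - self.x)

    def apply(self, volts: float, dt: float = 1e-3) -> float:
        """Apply a voltage for dt seconds; return the current that flowed."""
        current = volts / self.resistance
        # The state drifts with the charge passed, clipped to [0, 1].
        self.x = min(1.0, max(0.0, self.x + self.k * current * dt))
        return current
```

Positive pulses lower the resistance, negative pulses raise it again, and with zero volts applied the state simply stays where it was left. That continuously adjustable, remembered value is what makes such an element a candidate for holding a neural network weight.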

Nature amplifies, using the reservoir computing concept:

>>Reservoir computing (RC) is a neural network-based computing paradigm that allows effective processing of time varying inputs [1,2,3]. An RC system is conceptually illustrated in Fig. 1a, and can be divided into two parts: the first part, connected to the input, is called the ‘reservoir’. The connectivity structure of the reservoir will remain fixed at all times (thus requiring no training); however, the neurons (network nodes) in the reservoir will evolve dynamically with the temporal input signals. The collective states of all neurons in the reservoir at time t form the reservoir state x(t). Through the dynamic evolutions of the neurons, the reservoir essentially maps the input u(t) to a new space represented by x(t) and performs a nonlinear transformation of the input. The different reservoir states obtained are then analyzed by the second part of the system, termed the ‘readout function’, which can be trained and is used to generate the final desired output y(t). Since training an RC system only involves training the connection weights (red arrows in the figure) in the readout function between the reservoir and the output [4], training cost can be significantly reduced compared with conventional recurrent neural network (RNN) approaches [4].>>
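The division of labour described in that passage can be sketched in a few dozen lines of plain Python. This is a software toy in the echo-state style, not the memristor hardware of the paper: the tanh nodes, the weight scaling, the ridge-regression readout, the "recall the previous input" task and every function name are assumptions made for illustration. The point to notice is that `make_reservoir` fixes the reservoir once and for all, and only `train_readout` fits anything.

```python
import math
import random

def make_reservoir(n, seed=0, scale=0.9):
    """Fixed random input and recurrent weights. These are never trained."""
    rng = random.Random(seed)
    w_in = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    # Rough stability heuristic (an assumption, not a tuned spectral radius).
    w = [[rng.uniform(-1.0, 1.0) * scale / math.sqrt(n) for _ in range(n)]
         for _ in range(n)]
    return w_in, w

def run_reservoir(w_in, w, inputs):
    """Drive the reservoir with u(t) and collect the states x(t)."""
    n = len(w_in)
    x = [0.0] * n
    states = []
    for u in inputs:
        x = [math.tanh(w_in[i] * u + sum(w[i][j] * x[j] for j in range(n)))
             for i in range(n)]
        states.append(x)
    return states

def solve(a, b):
    """Solve a z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n + 1):
                m[r][k] -= f * m[c][k]
    z = [0.0] * n
    for r in reversed(range(n)):
        z[r] = (m[r][n] - sum(m[r][k] * z[k] for k in range(r + 1, n))) / m[r][r]
    return z

def train_readout(states, targets, ridge=1e-4):
    """Fit only the linear readout weights (ridge regression)."""
    n = len(states[0])
    gram = [[sum(s[i] * s[j] for s in states) + (ridge if i == j else 0.0)
             for j in range(n)] for i in range(n)]
    rhs = [sum(s[i] * y for s, y in zip(states, targets)) for i in range(n)]
    return solve(gram, rhs)

def readout(weights, state):
    """y(t) as a weighted sum of the reservoir state."""
    return sum(wi * si for wi, si in zip(weights, state))

def demo(n_nodes=20, n_steps=300, seed=1):
    """Return (error of trained readout, error of an all-zero readout)."""
    rng = random.Random(seed)
    u = [rng.uniform(-1.0, 1.0) for _ in range(n_steps)]
    y = [0.0] + u[:-1]  # target: recall the previous input
    w_in, w = make_reservoir(n_nodes)
    states = run_reservoir(w_in, w, u)
    weights = train_readout(states, y)
    mse = sum((readout(weights, s) - t) ** 2
              for s, t in zip(states, y)) / n_steps
    baseline = sum(t * t for t in y) / n_steps
    return mse, baseline
```

The errors returned by `demo()` are measured on the same sequence used for fitting, so they show that the readout can extract the remembered input from x(t), not how well it would generalise. The task needs memory, since the answer at time t is not in u(t) at all, and whatever memory there is lives in the untrained reservoir dynamics.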

Fig. 1:

According to University of Michigan’s The Engineer News Center:

>>To train a neural network for a task, a neural network takes in a large set of questions and the answers to those questions. In this process of what’s called supervised learning, the connections between nodes are weighted more heavily or lightly to minimize the amount of error in achieving the correct answer.

Once trained, a neural network can then be tested without knowing the answer . . . . [However,] “A lot of times, it takes days or months to train a network,” says [Wei Lu, U-M professor of electrical engineering and computer science who led the above research]. “It is very expensive.”

Image recognition is also a relatively simple problem, as it doesn’t require any information apart from a static image. More complex tasks, such as speech recognition, can depend highly on context and require neural networks to have knowledge of what has just occurred, or what has just been said.

“When transcribing speech to text or translating languages, a word’s meaning and even pronunciation will differ depending on the previous syllables,” says Lu.

This requires a recurrent neural network, which incorporates loops within the network that give the network a memory effect. However, training these recurrent neural networks is especially expensive, Lu says . . . .

Using only 88 memristors as nodes to identify handwritten versions of numerals, compared to a conventional network that would require thousands of nodes for the task, the reservoir achieved 91% accuracy.>>
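The "questions and answers" training described above can be shown in miniature. The sketch below is a single linear node trained by a simple error-correction rule. It is nowhere near the networks Lu's group works with, and the data, learning rate and function names are all assumptions. After each example, each connection weight is nudged up or down in proportion to how much it contributed to the error.

```python
# Hypothetical training set: (question, answer) pairs generated by the
# hidden rule answer = 2*x1 - x2 + 0.5, which the node is never told.
EXAMPLES = [((0.0, 0.0), 0.5), ((1.0, 0.0), 2.5),
            ((0.0, 1.0), -0.5), ((1.0, 1.0), 1.5)]

def predict(w, b, x):
    """Output of one linear node: weighted sum of inputs plus a bias."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def train(examples, lr=0.1, epochs=300):
    """Adjust weights a little after every example to shrink the error."""
    w = [0.0] * len(examples[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, answer in examples:
            err = predict(w, b, x) - answer
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b
```

Once trained, the node can be "tested without knowing the answer": `predict(w, b, (2.0, 1.0))` comes out close to 3.5, the value the hidden rule would give for an input it never saw. Even this toy needs hundreds of passes over four examples, which gives some feel for why training large recurrent networks gets expensive.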

So, we see a lot of promise and it is appropriate for us to have this background for onward discussion. END