In the Pulsars and Pauses thread, Petrushka raised a rather revealing assertion, to which MH, EA and I answered [U/d and GP just weighed in]:

P: >> I find it interesting that when it seems convenient to ID, the code is digital (and subject to being assembled by incremental accumulation). But at other times the analogy switches to objects like motors that are not digitally coded and do not reproduce with variation. >>

I have of course highlighted some key steps in the underlying pattern of thought:

(i) design thinkers think one way or another at convenience

[–> TRANS: we “cannot” have either honestly arrived at our views or have warrant for them . . . ]

(ii) our arguments are based on — shudder — analogies

[–> TRANS: objectors to ID commonly fail to appreciate the difference between deductive reasoning and either classic logical induction or inference to the best empirically anchored explanation. Nor do they see that inductive, empirically based reasoning is riddled with analogies, so that by objecting to analogies across the board, one is sawing off the branch on which one must sit.]

(iii) the ability to reproduce with incremental variation explains any and every thing

[ –> TRANS: the issues that complex, multipart functional integration and the resulting irreducible complexity form islands of function that cannot credibly be reached by cumulative incremental variations per a random walk in configuration space, and that integrated metabolic capacity plus the ability to replicate it (even with minor variations) using a digital, symbolic code is a case in point of such irreducible complexity, are dismissed without serious consideration.]

I am actually now at the stage where my conclusion, on years of observation, is that for many design objectors here at UD and elsewhere we are unfortunately dealing with the deeply ideologised, who (absent major attitudinal change) are unreachable by mere evidence and argument. The only mechanism I know that can trigger such a major shift in perception and attitude is the sort of patent worldview collapse that happened with Marxism-Leninism at the turn of the 1990s.

So, let us note this for the record, and as a caution to others so they will not fall into the same trap.

Now, why am I so stringent in my comments on what we are seeing?

I think a good step is to clip, from this point in the thread onward, the exchange that produced this gem and the responses to it.

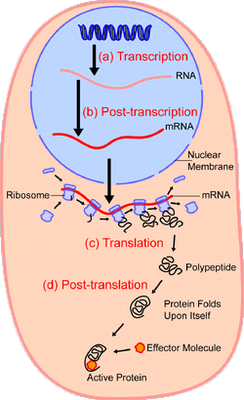

But first, a little context, the Ribosome in action:

(In reading the below, let us recall at all times that what is to be explained is, among other things, this digital, coded-information-driven, algorithmic, step-by-step process using molecular machines in the living cell. Ask yourself, on your experience and observation, plus basic common sense and logic: what best explains algorithms, codes and related data structures, and organised clusters of complex functional machines? Is there another genuinely credible explanation, and if so, why is it credible?)
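To make the "digital, coded" step concrete, here is a minimal Python sketch of the two lookup stages: transcription (DNA coding strand to mRNA, T becomes U) and translation (reading codons three bases at a time and mapping each to an amino acid until a stop codon). Only a handful of entries from the standard genetic code are included; splicing and every physical detail of the ribosome are ignored, and the function names are hypothetical.

```python
# Partial standard genetic code: only the codons used in the example (one-letter amino-acid codes).
CODON_TABLE = {
    "AUG": "M",              # methionine, also the usual start codon
    "UUU": "F", "UUC": "F",  # phenylalanine
    "GGC": "G",              # glycine
    "GCU": "A",              # alanine
    "UAA": "*", "UAG": "*", "UGA": "*",  # stop codons
}

def transcribe(coding_strand: str) -> str:
    """Return the mRNA matching a DNA coding strand (T -> U)."""
    return coding_strand.upper().replace("T", "U")

def translate(mrna: str) -> str:
    """Read codons in frame from the first AUG and stop at a stop codon."""
    start = mrna.find("AUG")
    if start == -1:
        return ""
    protein = []
    for i in range(start, len(mrna) - 2, 3):
        residue = CODON_TABLE[mrna[i:i + 3]]  # KeyError if the codon is not in this partial table
        if residue == "*":
            break
        protein.append(residue)
    return "".join(protein)

mrna = transcribe("ATGTTTGGCGCTTAA")
print(mrna)             # AUGUUUGGCGCUUAA
print(translate(mrna))  # MFGA
```

The point the sketch illustrates is that each codon is a discrete symbol whose meaning comes from a lookup convention, not from the chemistry of the string itself.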

Clipping from the exchanges deep in the thread:

____________

Dr Rec [DR]: >> Molecular ‘motors’ are an analogy drawn to human design.

Now you’ve taken the analogy too far.>>

JD: >> Oh? They look like motors, they function like motors, they have the same types of parts that motors have, BUT…. they aren’t motors because we know motors are designed. GOT IT!

I see 4 definitions at dictionary.com that molecular motors fit.>>

{Citing: mo·tor, noun: 1. a comparatively small and powerful engine, especially an internal-combustion engine in an automobile, motorboat, or the like. 2. any self-powered vehicle. 3. a person or thing that imparts motion, especially a contrivance, as a steam engine, that receives and modifies energy from some natural source in order to utilize it in driving machinery. 4. Also called electric motor. Electricity. a machine that converts electrical energy into mechanical energy, as an induction motor.}

MH: >> Take ATP synthase or the flagellum: these molecular motors are composed of simple machines, e.g. wheels & axles (free turning rotor which is constrained in 5 degrees of freedom by a stator imbedded in the membrane), ramps (which transform linear momentum to rotational momentum due to a flow of ions), levers (clutch mechanism to reverse direction of rotation), and screws (as the filament turns it acts as a propeller). Any machine designed and built in the macro world contains some or all of these simple machines. And please note the purpose of such is to transform one form of energy into another. In the case of ATP synthase and the flagellum, the energy of a proton gradient is converted into torque, which is used to generate chemical energy (ATP) and linear motion, respectively. Motors are a physical mechanism by which a form of potential energy is channeled and converted into a form of *useful* energy. This is exactly what we see in the cell, which means we are not speaking in analogies here. These are actual motors, in every sense of the term.

Now I would ask you: do you avoid calling these things “motors” in an effort to avoid the clear, purposeful design implications, or because you are fundamentally ignorant of what motors actually are?>>

P: >> The interesting thing is there are so many different versions of flagella, and so many genomes containing bits and pieces of the code, used for so many different purposes.

There are at least 20 different species of microbes having subunits of the flagellum code.

I find it interesting that when it seems convenient to ID, the code is digital (and subject to being assembled by incremental accumulation). But at other times the analogy switches to objects like motors that are not digitally coded and do not reproduce with variation.>>

MH: >> So what? Do you not realize that in the macro world there are single component parts that are used in a multitude of disparate systems, nuts & bolts being the most obvious example? In fact, a good engineer strives to make the hardware from system to system as standard as possible. The more variation there is in the hardware, the more headaches it causes. Copper wiring is another example, with wiring of the same gauge and shielding used all over the place. Standard circuit cards, standard housings for gear boxes, standard junctions, standard belts, and the list goes on forever. Standard components are just as much a sign of design as anything, friend . . . .

Are you suggesting that the flagellum is not a motor because its components are constructed of discrete modular building blocks? If so, that’s asinine. And here’s some news for you: motors designed by humans in the macro world are reproduced also. Weird, huh? And what if the flagellum varies over time; does that plasticity make it not a motor? Nope. Still a motor. >>

KF: >> 1 –> Codes, generally speaking, use symbolic representations [whereby one thing maps to another and per a convention MEANS that], and are inherently discrete state, i.e. digital.

2 –> The DNA –> RNA –> Ribosome –> AA chain for protein system uses just such symbols, and goes through transcription, processing that allows reordering, and translation in a translation device that is also a manufacturing unit for proteins.

3 –> the fact that you find yourself resisting such patent and basic facts is revealing.

4 –> A motor is a functional, composite entity. It is made up of parts that fit together in a certain specific way, per an exploded-view wiring diagram, and when they fit together they do a job.

5 –> As has been pointed out for a long time now, that sort of 3-D exploded view can be converted into a cluster of linked strings that express the implied information, as in AutoCAD etc.

6 –> However, the point of a motor is that it does a certain job, converting energy into shaft work, often but not always in rotary form. (Linear motors exist and are important.)

7 –> A lot of ways and means can be used to generate the torque [power = torque * angular speed], but rotary motors generally have a shaft that carries the load on the output port, and an energy converter on the input port. (Motors are classic two-port devices.)

8 –> Electrical motors work off the Lorentz force [which in turn is in large part a reflection of relativistic effects of the Coulomb force], hydraulic and pneumatic ones, off fluid flows, some motors work off expanding combustion products, etc etc.

9 –> Two of the motors in living forms seem to work off ion flows and associated barrier potentials. Quite efficiently and effectively too.

10 –> Wiki, testifying against known ideological interest:

An engine or motor is a machine designed to convert energy into useful mechanical motion.[1][2] Heat engines, including internal combustion engines and external combustion engines (such as steam engines) burn a fuel to create heat which is then used to create motion. Electric motors convert electrical energy in mechanical motion, pneumatic motors use compressed air and others, such as wind-up toys use elastic energy. In biological systems, molecular motors like myosins in muscles use chemical energy to create motion.

11 –> In short, we see here a recognition of what you are so desperate to resist: there are chemically powered, molecular scale motors in the body, here citing a linear motor, that makes muscles work.

12 –> So, there is no reason why we should suddenly cry “analogy” — shudder — when we see similar, ion powered rotary motors in the living cell.>>
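KF's points 6 and 7 above describe a motor as a two-port energy converter, with power = torque * speed. A minimal Python sketch of that bookkeeping follows, assuming a hypothetical motor that delivers 2 N·m at 3000 rpm from 800 W of input power; the numbers are illustrative only. Speed must be converted to radians per second for the product to come out in watts.

```python
import math

def shaft_power_watts(torque_nm: float, speed_rpm: float) -> float:
    """Output-port (shaft) power P = torque * angular speed, with rpm converted to rad/s."""
    omega = speed_rpm * 2.0 * math.pi / 60.0
    return torque_nm * omega

def efficiency(input_power_watts: float, torque_nm: float, speed_rpm: float) -> float:
    """Fraction of the input-port power that leaves the output port as shaft work."""
    return shaft_power_watts(torque_nm, speed_rpm) / input_power_watts

p_out = shaft_power_watts(2.0, 3000.0)
print(round(p_out, 1))                           # 628.3
print(round(efficiency(800.0, 2.0, 3000.0), 3))  # 0.785
```

The same two-port accounting applies whatever the input energy is (electrical, fluid flow, combustion, or an ion gradient); only the input-side conversion changes.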

EA:>> No-one is switching analogies because it is convenient. A digital code is an example of complex specified information. An integrated functional system is an example of complex specified information. There are lots of examples. No-one is switching anything.

BTW, analogies are useful and there is nothing wrong with them as far as they go in helping us think through things.

But in this case we don’t even have to analogize. The code in DNA is a digital code, it isn’t just like a digital code. Molecular motors in living cells aren’t just like motors, they are motors.>>

J: >> [To DR] If you don’t like analogies nor the design inference all YOU have to do is actually step-up and demonstrate that stochastic processes can account for what we say is designed.

OR you can continue whining.

Your choice…>>

EA: >> DrREC:

Seriously, do you think some explorer could wander up on Easter Island, and say those look natural? Or would the knowledge of statutes in human design be sufficient?

Excellent. So finally we get nearer to the heart of the matter. DrREC acknowledges that we don’t have to know the exact specification we are looking for. It is enough to have seen some similar systems. In other words, we look at a system of unknown origin and analogize to systems that we do know. This is one important aspect (though not complete) of the way we draw design inferences. We work from what we know, not from what we don’t know. We work from our understanding of cause and effect in the world, not from what we don’t know. And with those tools under our belt, we consistently and regularly infer design as the most appropriate explanation, even when we don’t know the exact specification we will find.

DrREC has no issue with this approach. He thinks it is perfectly reasonable and appropriate. He even suggests above that it is absurd to think otherwise. All correct.>>

DR: >> Funny everyone keeps coming with analogies where the design is not in question! It is almost as if they assume design, and proceed from there.

Oh, right.

The funny thing Eric can’t do is tell us what the independent specifications for protein design are.

But at any rate, there are a couple of things that went unanswered.

Despite the attempts to distract with other analogies, my post at 9.3 [ –> Cf KF at 9.3.1 . . . ] clearly demonstrates IN PRACTICE, that fsci calculations narrowly and subjectively define a design as part of estimating functional space.>>

SA2:>> As opposed to what, using examples in which design is in question? Or examples of things that don’t appear designed?

Most designed things don’t have independent specifications that one can produce or refer to. From where have you invented this requirement?

Your position seems to be that if you have this thing and you don’t know whether it was designed, comparing it to outputs of known design is an inherently invalid approach.

You also suggest that design is such a shockingly unimaginable phenomenon that it must be seen to be believed. Astoundingly, you do not apply this same skepticism to vague, unformed hypotheses of self-organization.

Living things, from the molecular level up, follow the very same patterns as any number of design technologies, except that they appear far more advanced. They do not follow any known patterns of self-organization.

You can lead a horse to water, but you can’t stop it from drinking sand. And you can’t use logic to convince someone to respect logic.>>

DR: >> Yeah. Seriously, the constant insult-laced posts that go “Hey look at this human-designed object. Only a moron couldn’t tell it was designed.” get old fast.

It also isn’t really what you guys are trying to do. You’re trying to make a design inference, based on ruling out natural possibilities through the use of improbability+specification . . . >>

{–> I flag this, as it is an unsubstantiated dismissal by definition, slipped in}

SA2: >> Was your post pre-specified?

Do you have the schematics for your computer? Can you tell me right now how every circuit was specified?

Now you’re saying that you’ll believe it if you see the specification in advance of the implementation. With a wave of the hand you have dismissed the possibility that anything of unobserved origin was designed.

Your whole pulsar tangent [which I omitted . . . cf the thread] is one giant strawman. No one is going about randomly trying to infer design to anything and everything for no reason. You’re arguing against the design inference by applying where it obviously doesn’t fit. That’s because you have no valid objection or alternative where it does fit.

Don’t count out, “Just look at it, it must have been designed.” No insult intended, but that’s the voice of common sense. It’s not always right, but common sense plus abundant evidence always beats a hand-waving explanation of ‘something happened, we don’t know what except that we have ruled out intelligence.’>>

DR: >> “Was your post pre-specified?”

Yes, or at least independently. By the rules of English grammar and syntax.

“Do you have the schematics for your computer? Can you tell me right now how every circuit was specified?”

No, but I’m sure someone at Apple does. And again, with the endless human designs. Bored now.

“Now you’re saying that you’ll believe it if you see the specification in advance of the implementation.”

Or independently. Or in any way that doesn’t draw a target around something in nature, infer that to be the specification, declare it is specified, and deduce design.

“Your whole pulsar tangent is one giant strawman. No one is going about randomly trying to infer design to anything and everything for no reason.”

SETI or NASA might be interested. I think it is right up your alley. Aren’t you design detectors?

“Don’t count out, ‘Just look at it, it must have been designed.’”

That’s really sad for ID.>>

MH: >> Let’s go back to the Voynich Manuscript. How is it that *you* don’t know what it says, or even if it says anything at all, yet *you* know for a fact that someone did it? You can just look at it and know. >>

DR: >> The Voynich Manuscript?

Where is the design detection? Are you saying it is natural, or that it needs to be distinguished from nature?

Some independent specifications:

1) On vellum (human product)

2) Iron ink with quill and pen (human product)

3) Conforms to manuscript and illustrations of the period

Should we continue with this absurdity?>>

SA2: >> It’s not ID at all. It’s common sense backed up by unmistakable evidence.

ID is science, not common sense.

You’re bored? How many times have I pointed out that the very nature of extrapolation and inference requires us to reason beyond what we observe? If inference is invalid unless the subject is identical to that with which it is compared, then it is invalid in every case except when we do not need it. You’ve just invalidated the concept of inference.

Next you claim that we draw a target around everything in nature. No, we draw a target around anything that appears to have come about by a process of arranging symbolic information that exhibits planning and foresight to arrive at a functional result. I don’t need to say more than that, such as the amazing attributes or behaviors of any living things. That a thing which reproduces and processes energy is generated from symbolic information is enough. The rest is icing on the cake, lots of it. Reducing function to abstract instructions is intelligent behavior. Yeah, humans do it, and humans are the only ones we’ve seen do it. But it doesn’t look like humans were in on this one. If you think that somehow nullifies the obvious expression of a similar pattern, you’re free to make whatever excuses you can to deny whatever evidence you wish. But it’s still there.

To say that we draw targets around living things is to suggest that the targets weren’t already drawn. A crab is no different from the rock it sits on.

You’re wrong. Every living thing shares a profound, fundamental difference from every non-living thing. Crabs aren’t funny-shaped rocks that eat and reproduce and run away from bigger things. Every child knows that. That’s the incomprehensible, twisted aberration of reason you are forced to accept when you commit to a conclusion that is diametrically opposed to the evidence.

UB has it right. Instantiation of semiotic information transfer = intelligence. There is no alternative explanation, real, hypothetical, or imaginary. That’s a lifeline from reality. Grab it or don’t.>>

EA: >> We are showing you examples of how design is inferred. These aren’t just unrelated “analogies.” These are live examples of design inference. Set aside for a moment your philosophical bias against examples, analogies, whatever and think through this for a moment.

Under your logic, the only way we can ever know if something was designed is if we already know that what we are looking for is designed. That is entirely circular and, pardon, but frankly absurd. That would mean that it is impossible to ever discover if something is designed. Because in order to discover that, we must have already known the design we were looking for.

The fact of the matter is design is inferred all the time. The only reason you are hung up is that it happens to be in life this time, which, apparently, is philosophically unpalatable.>>

DR:>> For the umpteenth time, if it is INDEPENDENTLY specified (pi, prime numbers), as in my pulsar example, that is fine.

I’ve walked through how fsci calculations specify a design post-hoc in the detection of design in explicit detail above. No one seems to want to deal with that, and has resorted to broad chest thumping rhetoric.

Don’t you just SEE the design? Lol.>>

FALSE, let us clip:

KF, 9.3.1: >> By now, it should be clear that you are imposing the a prioris, not me.

Let’s look at your:

I) Use of Durston’s, or any related metric imposes a post-hoc specification-a design in the search for design.

Look at the tables of Fits. There is an estimate based of the length, and number of sequences. But sequences of what? A post-hoc specified design

Really, now. A protein family is observed, and its variability while retaining function is used to quantify the info in the AA sequence. We have a macro-observable state that asks only: does it do job X in living systems. That is more than sufficiently independent.

The redundancy in the strings reduces the bit value below the 4.32 bits per AA residue (log2 of 20) that would apply if all 20 amino acids were equally likely at each site.

After the reduction, the number of functional bits is totted up. A comparison to a threshold then tells us what you obviously do not wish to hear: a functional family that is that isolated in AA string configuration space, and that does a job that specific to the string sequence, is not likely to have been arrived at by blind processes.

So we see a selectively hyperskeptical objection.

The only problem is that this is the same problem as explaining functional text by blind forces.

Cicero could spot the problem c 50 BC, and so can we today, providing we are not blinkered by materialist a prioris.>>
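The Fits calculation discussed in this clip can be sketched in Python. The sketch follows the general shape of a Durston-style functional sequence complexity estimate as described above: start from a ground state of log2(20) ≈ 4.32 bits per residue, subtract the Shannon entropy observed at each aligned site across a functional family, and sum over sites. The four-sequence family below is hypothetical, not data, and a real analysis would also handle gaps, sequence weighting and sample size, none of which is attempted here.

```python
import math
from collections import Counter

GROUND_BITS = math.log2(20)  # ~4.32 bits per residue if all 20 amino acids were equiprobable

def column_entropy(column: str) -> float:
    """Shannon entropy (bits) of the residues observed at one aligned site."""
    counts = Counter(column)
    n = len(column)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

def functional_bits(alignment: list[str]) -> float:
    """Sum over sites of (ground-state entropy - observed entropy), in bits ("Fits")."""
    length = len(alignment[0])
    if any(len(seq) != length for seq in alignment):
        raise ValueError("sequences must be aligned to the same length")
    total = 0.0
    for site in range(length):
        column = "".join(seq[site] for seq in alignment)
        total += GROUND_BITS - column_entropy(column)
    return total

# Hypothetical family: first and third sites fully conserved, second and fourth vary 50/50.
family = ["MKLV", "MRLI", "MKLV", "MRLI"]
print(round(functional_bits(family), 2))  # 15.29
```

Conserved sites contribute the full 4.32 bits each, while each site that tolerates two residues equally loses one bit, which is the "redundancy reduces the bit value" step in numerical form.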

KF, 11.1.1.4 f: >> . . . do you understand the difference between an observation and an assumption?

Let’s take a microcontroller object program for an example.

Can you see whether the controlled device with the embedded system works? Whether it works reliably, or whether it works partially? Whether it has bugs — i.e. we can find circumstances under which it behaves unexpectedly in non-functional ways, or fails?

Can you see that we can here recognise that something is functional, and may even be able to construct some sort of metric of the degree of functionality?

Now, we observe that the microcontroller depends on certain stored strings of binary digits, and that when some are disturbed by injecting random changes it keeps on working, but beyond a certain threshold, key functions or even overall function break down.

This identifies empirically that we are in an island of function.
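A toy model of that observation, with one assumption flagged up front: the "program" below is stored with each bit repeated three times and read back by majority vote (a simple repetition code, chosen only because it gives an easily checked tolerance; real microcontrollers need not work this way). A single flip in any triple is absorbed, but two flips in the same triple silently change the decoded program, so function survives light damage and breaks down as damage accumulates. All names are hypothetical.

```python
import random

def encode(program: list[int]) -> list[int]:
    """Store each program bit three times (repetition code)."""
    return [bit for bit in program for _ in range(3)]

def decode(stored: list[int]) -> list[int]:
    """Recover each bit by majority vote over its three stored copies."""
    return [1 if sum(stored[i:i + 3]) >= 2 else 0 for i in range(0, len(stored), 3)]

def flip(stored: list[int], positions) -> list[int]:
    """Return a copy of the stored bits with the given positions inverted."""
    damaged = list(stored)
    for p in positions:
        damaged[p] ^= 1
    return damaged

def works(program: list[int], stored: list[int]) -> bool:
    """The toy device 'works' if the decoded program still matches the original."""
    return decode(stored) == program

def survival_rate(program: list[int], n_flips: int, trials: int = 2000, seed: int = 1) -> float:
    """Fraction of random n_flips-bit corruptions that leave the program functional."""
    rng = random.Random(seed)
    stored = encode(program)
    ok = 0
    for _ in range(trials):
        positions = rng.sample(range(len(stored)), n_flips)
        ok += works(program, flip(stored, positions))
    return ok / trials

program = [1, 0, 1, 1, 0, 0, 1, 0]
for k in (0, 1, 2, 4, 8, 12):
    print(k, survival_rate(program, k))
```

The rows for 0 and 1 flips are exactly 1.0, since no single flip can outvote two intact copies, and the row for 12 flips is exactly 0.0, since twelve flips cannot be spread one per triple across only eight triples. In between, the sampled fraction falls off: tolerance first, then a threshold.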

[As a live case in point, here at UD, last week I had the experience of discovering a “feature” of WP, i.e. if you happen to try square brackets — like I am using here — in a caption for a photo the post display process will fail to complete and posting of the original post, but not comments, will abort. I suspect that’s because square brackets are used for certain functional tasks and I happened to half-trigger some such task, leading to an abort.]

Do you now appreciate that we can empirically detect FSCI, and in particular, digitally coded FSCI?

Do you in particular see that the concept of islands of function shaped by the constraints on — in this case — strings of algorithmically functional data elements, naturally leads to the islands of function effect?

That, where we see functional constraints in a context of complex function, this is exactly what we should EXPECT?

For, parts have to fit into a context of a Wicken-type “wiring diagram” for the whole to work, and absent the complex, mutually adapted set of elements wired on that diagram for that case, the system will wholly or partly degrade. That is, we see here the significance of functionally specific, integrated complex organisation. It is a commonplace of the technology of complex, multi-part, functionally integrated, organised systems, that function depends on fairly specific organisation, with a bit of room for tolerance, but not very much relative to the space of configurational possibilities of a set of components.

And, we may extend this fairly simply to the case where there are no explicit strings, by taking the functional diagram apart on an exploded view, and reducing the information of that 3-D representation and putting it in a data structure based on ordered, linked strings. That is what AutoCAD etc. do. And of course the assembly process is generally based on such an exploded view model.
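As a hedged sketch of "ordered, linked strings": each part below is one text record naming the parts it must be mounted on, and an assembly order is read off the resulting graph with Kahn's topological sort. The record format and the part names (a tiny hypothetical motor) are invented for illustration and are not how AutoCAD or any particular CAD package stores its data.

```python
from collections import deque

# Hypothetical exploded-view records, one string per part: "part: parts it mounts on".
RECORDS = [
    "housing:",
    "stator: housing",
    "bearing: housing",
    "rotor: bearing, stator",
    "shaft: rotor",
    "end_cap: housing, shaft",
]

def parse(records: list[str]) -> dict[str, list[str]]:
    """Turn each 'part: dep1, dep2' string into a part -> prerequisites mapping."""
    graph = {}
    for line in records:
        part, _, deps = line.partition(":")
        graph[part.strip()] = [d.strip() for d in deps.split(",") if d.strip()]
    return graph

def assembly_order(graph: dict[str, list[str]]) -> list[str]:
    """Kahn's algorithm: repeatedly install a part whose prerequisites are all installed."""
    remaining = {part: len(deps) for part, deps in graph.items()}
    dependents = {part: [] for part in graph}
    for part, deps in graph.items():
        for d in deps:
            dependents[d].append(part)
    ready = deque(sorted(p for p, n in remaining.items() if n == 0))
    order = []
    while ready:
        part = ready.popleft()
        order.append(part)
        for nxt in dependents[part]:
            remaining[nxt] -= 1
            if remaining[nxt] == 0:
                ready.append(nxt)
    if len(order) != len(graph):
        raise ValueError("circular mounting constraints: no valid assembly order")
    return order

print(assembly_order(parse(RECORDS)))
# ['housing', 'stator', 'bearing', 'rotor', 'shaft', 'end_cap']
```

The 3-D arrangement is fully recoverable only together with geometry and fastening data, but even this stripped-down string form is enough to fix which assembly sequences are possible at all.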

(Assembly of a complex functional system based on a great many parts with inevitable tolerances is in itself a complex issue, riddled with the implications of the tolerances of the many components. Don’t forget the cases in the 1950s where it was discovered that just putting a bolt in the wrong way on, I think, the F-86 could cause fatal crashes. Design for one-off success is much less complex than design for mass production. And when we add in the issue in biology of SELF-assembly, that problem goes through the roof!)
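The tolerance point can be put in numbers with the two common stack-up estimates for parts assembled in a chain: worst case, where tolerances add linearly, and root-sum-square, which assumes the deviations are independent and centred (an assumption, not a given). The ten-part, ±0.1 mm chain is a made-up example.

```python
import math

def worst_case_stack(tolerances: list[float]) -> float:
    """Every part at its limit in the same direction: tolerances add linearly."""
    return sum(abs(t) for t in tolerances)

def rss_stack(tolerances: list[float]) -> float:
    """Root-sum-square estimate, assuming independent, centred deviations."""
    return math.sqrt(sum(t * t for t in tolerances))

chain = [0.1] * 10  # hypothetical: ten parts, each +/-0.1 mm
print(round(worst_case_stack(chain), 3))  # 1.0
print(round(rss_stack(chain), 3))         # 0.316
```

Either way, the accumulated error grows with the number of parts in the chain, so every added part tightens what each individual part is allowed to get wrong.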

In short, we can see how FSCO, FSCI, and irreducible complexity emerge naturally as concepts summarising a world of knowledge about complex multi-part systems.

These things are not made-up, they are instantly recognisable and understandable to anyone who has had to struggle with designing and building or simply troubleshooting and fixing complex multi-part functional systems.

BTW, this is why I can only shake my head when I hear talking points over Hoyle’s fallacy, when he posed the challenge of assembling a jumbo jet by passing a tornado through a junkyard.

Actually, as I discussed recently here in the ID foundations series (notice the diagram of the instrument), we may take out the rhetorical flourish and focus on the challenge of assembling a D’Arsonval galvanometer movement-based instrument in its cockpit. Or even the challenge of screwing together the right nut and bolt in a bowl of mixed parts, by random agitation.

And, BTW, the just-linked post shows how Paley long since highlighted the problem with the dismissive “analogy” argument, when in Ch 2 of his work he pointed out the challenge of building a self-replicating watch:

Suppose, in the next place, that the person who found the watch should after some time discover that, in addition to all the properties which he had hitherto observed in it, it possessed the unexpected property of producing in the course of its movement another watch like itself – the thing is conceivable; that it contained within it a mechanism, a system of parts — a mold, for instance, or a complex adjustment of lathes, baffles, and other tools — evidently and separately calculated for this purpose . . . .

The first effect would be to increase his admiration of the contrivance, and his conviction of the consummate skill of the contriver. Whether he regarded the object of the contrivance, the distinct apparatus, the intricate, yet in many parts intelligible mechanism by which it was carried on, he would perceive in this new observation nothing but an additional reason for doing what he had already done — for referring the construction of the watch to design and to supreme art . . . . He would reflect, that though the watch before him were, in some sense, the maker of the watch, which, was fabricated in the course of its movements, yet it was in a very different sense from that in which a carpenter, for instance, is the maker of a chair — the author of its contrivance, the cause of the relation of its parts to their use.

[Emphases added. (Note: It is easy to rhetorically dismiss this argument because of the context: a work of natural theology. But, since (i) valid science can be — and has been — done by theologians; since (ii) the greatest of all modern scientific books (Newton’s Principia) contains the General Scholium which is an essay in just such natural theology; and since (iii) an argument’s weight depends on its merits, we should not yield to such “label and dismiss” tactics. It is also worth noting Newton’s remarks that “thus much concerning God; to discourse of whom from the appearances of things, does certainly belong to Natural Philosophy [i.e. what we now call “science”].” )]

In short, the additionality of self-replication of a functioning system is already a challenge. And Paley was of course too early by over a century to know what von Neumann worked out on his kinematic self-replicator, which uses digitally stored information in a string structure to control self-assembly and self-replication. (Also discussed in the just-linked post, onlookers.)
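A deliberately cartoonish Python sketch of the logical architecture referred to here, under heavy simplification: a machine is a constructor, a copier and a controller, plus a stored description (the tape). The constructor builds whatever the tape describes, the copier duplicates the tape without interpreting it, and the offspring is the constructed machine with the copied tape attached. The tape format and part names are hypothetical; nothing about physical kinematics is modelled.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Machine:
    parts: tuple[str, ...]  # the built hardware
    tape: tuple[str, ...]   # stored digital description

def construct(tape: tuple[str, ...]) -> tuple[str, ...]:
    """Toy constructor: build exactly the parts the tape instructs it to build."""
    return tuple(instruction.removeprefix("BUILD ") for instruction in tape)

def copy_tape(tape: tuple[str, ...]) -> tuple[str, ...]:
    """Copier: duplicate the description as uninterpreted data."""
    return tuple(tape)

def reproduce(parent: Machine) -> Machine:
    """Controller: construct from the tape, then attach a copy of the tape to the result."""
    return Machine(parts=construct(parent.tape), tape=copy_tape(parent.tape))

TAPE = ("BUILD constructor", "BUILD copier", "BUILD controller")
parent = Machine(parts=("constructor", "copier", "controller"), tape=TAPE)
child = reproduce(parent)
print(child == parent)  # True
```

What the toy captures is the double use of the stored string: it is executed as instructions by the constructor and, separately, copied as passive data by the copier. Remove either role and the offspring either cannot be built or cannot itself reproduce.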

On the strength of these and related considerations, I then look at say Denton’s description (please watch the vid tour then read) of the automated multi-part functionality of the living cell:

To grasp the reality of life as it has been revealed by molecular biology, we must magnify a cell a thousand million times until it is twenty kilometers in diameter [[so each atom in it would be “the size of a tennis ball”] and resembles a giant airship large enough to cover a great city like London or New York. What we would then see would be an object of unparalleled complexity and adaptive design. On the surface of the cell we would see millions of openings, like the port holes of a vast space ship, opening and closing to allow a continual stream of materials to flow in and out. If we were to enter one of these openings we would find ourselves in a world of supreme technology and bewildering complexity. We would see endless highly organized corridors and conduits branching in every direction away from the perimeter of the cell, some leading to the central memory bank in the nucleus and others to assembly plants and processing units. The nucleus itself would be a vast spherical chamber more than a kilometer in diameter, resembling a geodesic dome inside of which we would see, all neatly stacked together in ordered arrays, the miles of coiled chains of the DNA molecules. A huge range of products and raw materials would shuttle along all the manifold conduits in a highly ordered fashion to and from all the various assembly plants in the outer regions of the cell.

We would wonder at the level of control implicit in the movement of so many objects down so many seemingly endless conduits, all in perfect unison. We would see all around us, in every direction we looked, all sorts of robot-like machines . . . . We would see that nearly every feature of our own advanced machines had its analogue in the cell: artificial languages and their decoding systems, memory banks for information storage and retrieval, elegant control systems regulating the automated assembly of components, error fail-safe and proof-reading devices used for quality control, assembly processes involving the principle of prefabrication and modular construction . . . . However, it would be a factory which would have one capacity not equaled in any of our own most advanced machines, for it would be capable of replicating its entire structure within a matter of a few hours . . . .

Unlike our own pseudo-automated assembly plants, where external controls are being continually applied, the cell’s manufacturing capability is entirely self-regulated . . . .

[[Denton, Michael, Evolution: A Theory in Crisis, Adler, 1986, pp. 327 – 331. This work is a classic that is still well worth reading. Emphases added. (NB: The 2009 work by Stephen Meyer of Discovery Institute, Signature in the Cell, brings this classic argument up to date. The main thesis of the book is that: “The universe is comprised of matter, energy, and the information that gives order [[better: functional organisation] to matter and energy, thereby bringing life into being. In the cell, information is carried by DNA, which functions like a software program. The signature in the cell is that of the master programmer of life.” Given the sharp response that has provoked, the onward e-book responses to attempted rebuttals, Signature of Controversy, would also be excellent, but sobering and sometimes saddening, reading.) ]

We could go on and on, but by now the point should be quite clear to all but the deeply indoctrinated.

Namely, we have every reason to see why complex, integrated functionality on many interacting parts naturally leads to islands of functional configurations in much wider spaces of possible but overwhelmingly non-functional configurations. (And, this thought exercise will rivet the point home, in a context that is closely tied to the statistical underpinnings of the second law of thermodynamics.)

Clearly, it is those who imply or assume that we instead have a vast continent of function, traversable incrementally, step by step, from simple beginnings all the way to a metabolising, self-replicating organism, who have to show their claims empirically.

It will come as no surprise to the reasonably informed that the origin-of-cell-based-life bit is neatly snipped out of the root of the tree of life, precisely because after 150 years or so of speculation on Darwin’s warm little pond full of chemicals and struck by lightning, etc., the field of study is in crisis.

Similarly, the astute onlooker will know that the general pattern of the fossil record and of today’s life forms is that of sudden appearance, stasis, sudden disappearances and gaps, not at all the smoothly graded overall tree of life as imagined. Evidence of small-scale adaptations within existing body plans has been grossly extrapolated and improperly headlined as proof of what is in fact the product of an imposed philosophical a priori, evolutionary materialism. That is why Philip Johnson’s retort to Lewontin et al was so cuttingly, stingingly apt:

For scientific materialists the materialism comes first; the science comes thereafter. [[Emphasis original] We might more accurately term them “materialists employing science.” And if materialism is true, then some materialistic theory of evolution has to be true simply as a matter of logical deduction, regardless of the evidence. That theory will necessarily be at least roughly like neo-Darwinism, in that it will have to involve some combination of random changes and law-like processes capable of producing complicated organisms that (in Dawkins’ words) “give the appearance of having been designed for a purpose.”

. . . . The debate about creation and evolution is not deadlocked . . . Biblical literalism is not the issue. [–> those who are currently spinning toxic, atmosphere-poisoning, ad hominem-laced talking points about “sermons” and “preaching” and “preachers” need to pay particular heed to this . . . ] The issue is whether materialism and rationality are the same thing. Darwinism is based on an a priori commitment to materialism, not on a philosophically neutral assessment of the evidence. Separate the philosophy from the science, and the proud tower collapses. [[Emphasis added.] [[The Unraveling of Scientific Materialism, First Things, 77 (Nov. 1997), pp. 22 – 25.]

So, where does this leave the little equation accused of being question-begging:

Chi_500 = I*S – 500, bits beyond the solar system threshold
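As a minimal sketch in Python (function and parameter names are illustrative, not from the text), the expression needs only two ingredients: I, the information-carrying capacity in bits, and the 0/1 dummy variable S. The sample inputs (7-bit ASCII text, and DNA read as a four-letter alphabet at 2 bits per base) are assumptions chosen for illustration; `capacity_bits` measures capacity only, and whether S = 1 applies is a separate judgement.

```python
from math import log2


def capacity_bits(n_symbols: int, alphabet_size: int) -> float:
    """I: Hartley-Shannon information-carrying capacity, n * log2(k) bits."""
    if n_symbols < 0 or alphabet_size < 1:
        raise ValueError("need n_symbols >= 0 and alphabet_size >= 1")
    return n_symbols * log2(alphabet_size)


def chi(info_bits: float, s: int, threshold: float = 500) -> float:
    """Chi = I*S - threshold: bits beyond the threshold (500 for the solar
    system, 1000 for the observed cosmos). S is a 0/1 dummy variable."""
    if s not in (0, 1):
        raise ValueError("S must be 0 or 1")
    return info_bits * s - threshold


i_text = capacity_bits(72, 128)   # 72 seven-bit ASCII characters: 504.0 bits
print(chi(i_text, 1))             # 4.0: specific, and 4 bits past the threshold
print(chi(i_text, 0))             # -500.0: the S = 0 default
print(capacity_bits(250, 4))      # 500.0: 250 DNA bases at 2 bits each
```

The `threshold` parameter lets the same sketch cover the 1,000-bit cosmos-scale variant, e.g. `chi(1200, 1, threshold=1000)` gives 200.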

1 –> The Hartley-Shannon information metric is a standard measure of information-carrying capacity, here extended to cover a case where we must meet some specifications and pass a threshold of complexity.

2 –> The 500-bit threshold is sufficient to confine the full Planck Time Quantum State [PTQS] search capacity of our solar system’s 10^57 atoms (10^102 states in 10^17 or so seconds) to ~1 in 10^48 of the set of possibilities for 500 bits, 2^500 ≈ 3 * 10^150.

3 –> So, before we get any further, we know that we are looking at so tiny a fractional sample that (on well-established sampling theory) ANYTHING that is not typical of the vast bulk of the distribution is utterly unlikely to be detected by a blind process.

4 –> To make this familiar, compare it to drawing (at chance, or at chance plus mechanical necessity) a blind sample of one straw from a cubical hay-bale 3 1/2 light days across, which could have our solar system out to Pluto in it [about 1/10 of the way across]. With maximal probability, all but certainty, such a sample will pick up straw.

5 –> The threshold of complexity, in short, is reasonable. If you want to challenge the solar system scale (our practical universe, which is 98% dominated by our Sun, and in which no OOL is even possible . . . ), then scale up to the observed cosmos as a whole: 1,000 bits. (The calculation for THAT hay bale would have millions of cosmoi comparable to our own lurking within it, and we would get the same result.)

6 –> So, the only term really open to challenge is S, the dummy variable that is set to 0 by default. If we have positive, objective reason to infer functional specificity, or more broadly to assign observed cases E to a narrow zone T that can be INDEPENDENTLY described (i.e. the selection of T is non-arbitrary: we have a definable collection in the set theory sense and a set-builder rule, or at least a separate objective criterion for inclusion/exclusion), then S can be set to 1.

7 –> The S = 0 case, the default, is of course the blind chance plus necessity case. The assumption is that phenomena are normally accessible by chance plus necessity acting on matter and energy in space and time.

8 –> But, in light of the sort of issues discussed above (and over and over again elsewhere over the course of years . . . ), it is recognised that certain phenomena, especially FSCI and in particular dFSCI — like the posts in our thread — are in fact only reasonably accessible by intelligent direction on the gamut of our solar system or observed cosmos.

9 –> Without loss of general force, we may focus on functional specificity. We can objectively, observationally identify this, and routinely do so.

10 –> So, what the equation ends up doing is to give us an empirically testable threshold for when something is functionally specific, information-bearing and sufficiently complex that it may be inferred that it is best explained on design, not chance plus necessity.

11 –> Since this is specific and empirically testable, it cannot be mere question-begging. It invites refutation by the simple expedient of showing how chance and necessity, without intelligent guidance and without already starting within an island of function, can give rise to FSCI. (Starting within an island of function is what Genetic Algorithms do, as the infamous well-behaved fitness function so plainly shows.)

12 –> The truth is that the talking-point storm and the assertions about insufficiently rigorous definitions, etc. etc. etc., are all because the expression handily passes empirical tests. The entire Internet is a case in point, if you want empirical tests.

13 –> So, if this were a world in which science were done by machines programmed to be objective, the debate would long since have been over as soon as this expression and the underlying analysis were put on the table.

14 –> But humans are not machines, and so the recent talking-point storm has focused on claims that this equation begs the question, or is not sufficiently defined to suit the tastes of those committed to a priori evolutionary materialism, or that GAs (which start inside islands of function!) show how FSCI can be had without paying for it with the hard cash of intelligence. (I will do no more than mention the sort of hostile, hateful attack that was so plainly triggered by our blowing the MG sock-puppet campaign out of the water. Cf. link here for the blow-by-blow on how that campaign failed.)

15 –> To all this, I simply say, the expression invites empirical test and has billions of confirmatory instances. Kindly show us a clear case that — without starting on an existing island of function — shows how FSCI, especially dFSCI (at least 500 – 1,000 bits), emerges credibly by chance and necessity, within the scope of available empirical resources.

16 –> For those not familiar with the underlying principle, I am saying that the expression is analytically warranted per a reasonable model and is directly subject to empirical test with a remarkable known degree of success, and so far no good counter-examples. So, we are inductively warranted to trust it absent convincing counter-example.

17 –> Not as question begging a prioris, but as per the standard practice of science where laws of science and scientific models are provisionally warranted and empirically reliable, not necessarily true beyond all possibility of dispute.

18 –> Indeed, that is why the laws of thermodynamics can be formulated in terms that perpetual motion machines of the first, second and third kind will not work. So far quite empirically reliable, and on reasonable models, we can see why. But, provide such a perpetual motion machine and thermodynamics would collapse.
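The numbers in points 2 to 5 can be checked with a few lines of arithmetic. This is a sketch under stated assumptions: the 10^102 solar-system state count is taken as given in point 2 (it is not derived here); the 10^150 cosmos-scale figure is an assumption (the number commonly associated with Dembski's universal probability bound), as is the straw volume of about 1 cm^3; and the hit-probability model assumes independent blind draws against a target zone of hypothetical size.

```python
from math import expm1, log1p

SOLAR_SYSTEM_STATES = 10 ** 102   # as stated in point 2
COSMOS_STATES = 10 ** 150         # ASSUMPTION: cosmos-scale figure, not from the text
CONFIGS_500 = 2 ** 500            # distinct 500-bit strings, about 3.27e150
CONFIGS_1000 = 2 ** 1000          # distinct 1,000-bit strings, equal to (2**500)**2

# Point 2: largest fraction of the 500-bit space the solar system could sample.
solar_fraction = SOLAR_SYSTEM_STATES / CONFIGS_500    # about 3.1e-49

# Point 5: the same comparison at cosmos scale with 1,000 bits.
cosmos_fraction = COSMOS_STATES / CONFIGS_1000        # about 9.3e-152


def hit_probability(samples: float, target_fraction: float) -> float:
    """Point 3: P(at least one of n independent blind draws lands in a zone
    covering fraction f) = 1 - (1 - f)**n, via log1p/expm1 to avoid underflow."""
    if not 0 <= target_fraction < 1:
        raise ValueError("target_fraction must be in [0, 1)")
    return -expm1(samples * log1p(-target_fraction))


# Point 4: sizing the hay bale.
LIGHT_DAY_M = 299_792_458 * 86_400   # metres light travels in one day
AU_M = 149_597_870_700               # astronomical unit in metres
PLUTO_SEMI_MAJOR_AXIS_AU = 39.5      # approximate
STRAW_VOLUME_M3 = 1e-6               # ASSUMPTION: about 1 cm^3 per straw

bale_side_m = 3.5 * LIGHT_DAY_M
straws_in_bale = bale_side_m ** 3 / STRAW_VOLUME_M3                # about 7.5e47
pluto_fraction = 2 * PLUTO_SEMI_MAJOR_AXIS_AU * AU_M / bale_side_m  # about 0.13

print(f"2**500 = {CONFIGS_500:.3e}")
print(f"solar-system sample: 1 in {1 / solar_fraction:.1e}")
print(f"cosmos sample at 1,000 bits: {cosmos_fraction:.1e}")
print(f"hit chance, hypothetical 1-in-1e150 zone: {hit_probability(1e102, 1e-150):.1e}")
print(f"straws in bale: {straws_in_bale:.1e}; Pluto's orbit spans {pluto_fraction:.2f} of it")
```

On these assumptions the solar-system sample is about 1 in 3 × 10^48 of the 500-bit space, matching point 2; a bale 3.5 light days on a side holds roughly 7 × 10^47 one-cubic-centimetre straws, the same order as that fraction; and Pluto's orbit spans about 0.13 of the bale's width, close to the text's “about 1/10”.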

____________

So, Dr Rec, a fill in the blanks exercise:

your empirical counter-example per actual observation is CCCCCCC, and your analytical explanation for it is WWWWWWW

If you cannot directly fill in the blanks, we have every reason to accept the Chi_500 expression on the normal terms for accepting a scientific result, no matter how uncomfortable this is for the a priori materialists.>>
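Points 11 and 14 above turn on what GAs start from. For readers unfamiliar with the kind of algorithm at issue, here is a minimal sketch of a Dawkins-style “weasel” hill climber, chosen only as a familiar illustration and not any specific GA cited in the thread. Note where the target lives: `fitness` scores every candidate by its number of positions matching the target, so every single-character improvement is rewarded from the very first generation.

```python
import random
import string
from typing import Tuple

ALPHABET = string.ascii_uppercase + " "
TARGET = "METHINKS IT IS LIKE A WEASEL"


def fitness(candidate: str, target: str) -> int:
    """Count of positions matching the target: a smooth, stepwise landscape."""
    return sum(c == t for c, t in zip(candidate, target))


def mutate(parent: str, rate: float, rng: random.Random) -> str:
    """Replace each character with a random symbol with probability `rate`."""
    return "".join(rng.choice(ALPHABET) if rng.random() < rate else c
                   for c in parent)


def weasel(target: str = TARGET, pop_size: int = 100, rate: float = 0.05,
           seed: int = 0, max_gens: int = 10_000) -> Tuple[str, int]:
    """Hill-climb toward `target`; return (best string, generations used)."""
    rng = random.Random(seed)
    parent = "".join(rng.choice(ALPHABET) for _ in target)
    for gen in range(max_gens):
        if parent == target:
            return parent, gen
        offspring = [mutate(parent, rate, rng) for _ in range(pop_size)]
        # Keeping the parent in the pool means fitness can never decrease.
        parent = max(offspring + [parent], key=lambda s: fitness(s, target))
    return parent, max_gens


best, generations = weasel()
print(best, generations)
```

The population size, mutation rate and seed are arbitrary illustrative choices; because the parent stays in the pool, the best fitness never decreases from one generation to the next.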

EA:>>. . . so you agree with Dembski that there is an independence requirement. We all agree.

But why on earth do you think the specification has to be known and set out beforehand?

I’ve asked this several times. Is it possible to crack a code that was previously unknown and therefore realize it was designed? All I can see from your various responses is one of two possibilities: either you are saying, yes it is possible because (i) we know it was designed in the first place (that is what it sounded like you were saying, thus my comment in 22), or (ii) we’ve seen similar systems (in other words, we analogize to our prior experience).

So which is it? Do we have to know beforehand the specification, or can we analogize to our prior experience and thus recognize the specification when it is discovered?

Let me know which of these two options you support, and then we can continue the discussion. If you support (ii) and not (i), then I apologize for having misunderstood you and withdraw my comment 22.>>

EA: >>I should add that it is funny that so much energy is being spent denying that there is specification in living cells. Specification, in terms of functional complex specified information, is really a no brainer as it relates to many cellular systems. Indeed, most Darwinists and other materialists admit the specification because it is so obviously there. What they then spend their energies on is attempting to show that the specification isn’t really that improbable (inevitability theorists), or that it comes about through some emergent process (emergent theorists), or alternatively, that while wildly improbable, “evolution” can overcome it through the magic of lots of time and errors in replication (Dawkins et al.).

Don’t get me wrong, I love a good discussion about specification. Just seems funny that it is being so strenuously denied when so many evolutionists admit it as a given.>>

STRAWMAN ALERT:

DR: >> Could you provide a reference where a “Darwinist” acknowledges a specification by a designer in life? I’m really puzzled as to who the hell these “so many evolutionists admit it as a given” are.

This is almost a fourth grade playground lie you tell the kid you want to do something idiotic-everyone’s doing it. Why won’t you? All the other Darwinists are admitting design specifications…come on, just admit it….please….

“Specification, in terms of functional complex specified information, is really a no brainer”

So, in a few days, fsci has gone from being a calculation to a “no brainer.” Next I’ll hear the “only an idiot would deny it.” Is this science to you? Next paper, I’ll write “this is a no brainer, proof not required.”>>

EA:>> Do I have a quote from a Darwinist who says that they have analyzed cellular systems from a standpoint of design and believe the cellular systems meet the specification criteria outlined by intelligent design theorists Dembski, Meyer, and others? Of course not. They don’t use that terminology. But they do acknowledge the same point, in different words.

Starting with Darwin, who marveled at the wondrous “contrivance” of the eye, the primary goal has not been to deny that life contains complex, functionally integrated systems, but to argue that they can come about through natural processes.

Dawkins went so far as to define biology as the “study of complicated things that give the appearance of having been designed for a purpose.” What did he mean by that? Precisely that when we look at living systems, the appearance of design jumps out at us. Why is that; what is this appearance of design? It is because of the integrated functional complexity — precisely one of the examples of complex specified information. It is the fact that in our universal and repeated experience when we see systems like these they turn out to be designed. Dawkins isn’t arguing against the appearance of design or that such information doesn’t exist in biology (the specification); rather he argues that the appearance need not point exclusively to design because evolution can produce it through long periods of time and chance changes.

If I recall correctly, Michael Shermer is the one who has even taken to arguing in debates that, yes, life is designed, but, he adds, the design comes about without a designer, through natural processes.

Can complex specified information be calculated? Sure it can (particularly in cases where we are dealing with digital code) and there are interesting cases and good work to be done in identifying and calculating what is contained in life. But that there is a large amount of complex specified information in cells, absolutely; most everyone realizes it is there. The entire OOL enterprise is built upon trying to figure out how the complex specified information — which everyone recognizes is there — could have arisen.

The recognition that life contains digital code, symbolic representations, complex integrated functional systems is pretty universal. That is complex specified information. So, yes, most Darwinists don’t spend their energy arguing against complex specified information in life. Rather they spend their energies trying to explain how it could have arisen through purely natural causes.>>
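EA's remark that such information can be calculated, particularly for digital code, can be illustrated in miniature. One measure, swapped in here for illustration rather than taken from the thread, is −log2 of the fraction of all sequences that perform a stated function (similar in spirit to Szostak's “functional information”). The toy function, being a palindrome, is a hypothetical stand-in chosen because its space is small enough to enumerate exhaustively.

```python
from itertools import product
from math import log2
from typing import Callable, Sequence


def functional_bits(length: int, alphabet: Sequence[str],
                    is_functional: Callable[[tuple], bool]) -> float:
    """-log2(fraction of all sequences of `length` that pass `is_functional`)."""
    total = hits = 0
    for seq in product(alphabet, repeat=length):
        total += 1
        hits += is_functional(seq)
    if hits == 0:
        raise ValueError("no functional sequences: the fraction is zero")
    return -log2(hits / total)


def is_palindrome(seq: tuple) -> bool:
    """Hypothetical toy 'function': the sequence reads the same backwards."""
    return seq == seq[::-1]


print(functional_bits(10, "01", is_palindrome))  # 5.0 (32 of 1,024 strings)
```

Exhaustive enumeration only works for toy spaces like this one; for realistic sequence lengths the functional fraction has to be estimated instead.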

GP:>>

I am not sure what the point of the debate is now. So, just to start, I would restate a couple of important points here, and kindly ask you to update me about the main problems you see in the discussion here:

a) In functionally specified information, and especially dFSCI, the specification can be (and indeed is) made explicit “post hoc”, from the observed information in the object. The function is defined objectively, and obviously defines a functional subset of the search space (for that specific function). However, the functional target must be computed in some way, because it includes all the sequences that confer the function, as defined.

b) In the example of a signal “writing” the digits of pi in binary code, the design inference IMO is completely justified (if the number of digits in the signal is great enough). The digits of pi in binary form are thus a good example both of dFSCI and of “post hoc” specification.

c) Still, those who infer design in that case have the duty to seriously consider whether the pattern could be generated by some law, that is, by some known necessity-driven system, even with possible chance contributions. In this case, as far as I know, there is no known physical system that could generate the binary code for the digits of such a fundamental mathematical constant. So I reject a necessity explanation, at the present state of knowledge.

d) Obviously, it remains possible that some physical system, by necessity or necessity + chance, could generate that output, but, as far as I know, there is no logical reason to believe that this is true, and no empirical evidence in favor of that statement. That’s why that possibility is not at present a valid scientific explanation of the origin of our signal.>>
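GP's point (b) can be made concrete. The sketch below computes the binary expansion of pi with exact integer arithmetic (Machin's formula, π = 16·arctan(1/5) − 4·arctan(1/239), a standard choice swapped in here), then checks whether a received bit string matches it. The specification, “the binary digits of pi”, is stated independently of any particular signal, even though one would typically recognise it only after the signal arrives. The function names are illustrative.

```python
def arctan_inv(x: int, scale: int) -> int:
    """Fixed-point arctan(1/x) * scale using the alternating Taylor series."""
    total = term = scale // x
    x_squared = x * x
    k = 1
    while term:
        term //= x_squared               # term is now scale / x**(2k+1)
        delta = term // (2 * k + 1)
        total += -delta if k % 2 else delta
        k += 1
    return total


def pi_binary(frac_bits: int, guard_bits: int = 32) -> str:
    """Binary expansion of pi: '11' followed by `frac_bits` fractional bits."""
    scale = 1 << (frac_bits + guard_bits)
    pi_scaled = 16 * arctan_inv(5, scale) - 4 * arctan_inv(239, scale)
    return format(pi_scaled >> guard_bits, "b")   # drop the guard bits


def matches_pi(signal: str) -> bool:
    """Independent specification: does the bit string equal pi's expansion?"""
    return len(signal) > 2 and signal == pi_binary(len(signal) - 2)


print(pi_binary(32))                  # 1100100100001111110110101010001000 (hex 3.243F6A88)
print(matches_pi(pi_binary(64)))      # True
print(matches_pi("11" + "0" * 64))    # False
```

The 32 guard bits absorb the small rounding errors from integer division in the series, so the returned bits are the truncated expansion.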

______________

Sometimes, it is in dialogue that we see what is really going on.

What is astonishing above is that, across the course of the discussion, DR ended up acknowledging that the issue was one of independent specification of a sufficiently complex outcome [so, not likely on a chance-based random walk]. He has never acknowledged that this is precisely the set of criteria routinely used to infer to an alternative hypothesis in many cases of statistical inference testing.
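The statistical-testing parallel can be illustrated with the textbook case. Assuming a fair-coin null hypothesis (an assumption chosen for illustration), this sketch computes exactly the probability of a result at least as extreme as the one observed; 500 heads in 500 tosses falls in a tail region of probability 2^−500, below 10^−150.

```python
from fractions import Fraction
from math import comb


def upper_tail(n: int, k: int) -> Fraction:
    """Exact P(X >= k) for X ~ Binomial(n, 1/2), the fair-coin null."""
    favourable = sum(comb(n, i) for i in range(max(k, 0), n + 1))
    return Fraction(favourable, 2 ** n)


print(upper_tail(10, 10))              # 1/1024
print(float(upper_tail(500, 500)))     # about 3.05e-151
```

Exact fractions are used so that astronomically small tail areas are not lost to floating-point underflow before the comparison is made.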

And, when he has been presented with a quantification of the inference [ADDED: and an explanation of why S would be 0/1], he consistently has accused such of being mere analogies, or subjective, or painting the target after the fact.

Sorry, it is patent that what we see in the living cell is digital code fed into effecting machinery, and in a context where derangement of the code leads to loss of function in sufficiently many cases that it is quite patent that we are dealing with deeply isolated islands of function. This is instantiation, not dubious analogy.
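A toy version of the “derangement of the code” point can be run on program text instead of DNA. This sketch is an illustration only, not a model of any cell: it takes a hypothetical two-line Python function that should return 7 for input 3, applies every single-character substitution from a small alphabet, and reports the fraction of mutants that still work. Most substitutions break it; a handful of neutral ones survive.

```python
import string

# Hypothetical toy "functional" program: f(3) must equal 7.
ORIGINAL = "def f(x):\n    return x * 2 + 1\n"
ALPHABET = string.digits + "+-*/() x"


def works(source: str) -> bool:
    """True if `source` compiles, defines f, and f(3) == 7."""
    namespace: dict = {}
    try:
        exec(compile(source, "<mutant>", "exec"), namespace)
        f = namespace.get("f")
        return callable(f) and f(3) == 7
    except Exception:  # syntax errors, name errors, wrong arity, etc.
        return False


def neutral_fraction(source: str = ORIGINAL, alphabet: str = ALPHABET) -> float:
    """Fraction of single-character substitutions that preserve function."""
    tried = kept = 0
    for i, old in enumerate(source):
        for new in alphabet:
            if new == old:
                continue
            tried += 1
            kept += works(source[:i] + new + source[i + 1:])
    return kept / tried


print(works(ORIGINAL))
print(f"{neutral_fraction():.1%} of single-character mutants still work")
```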

Similarly, when I see where those arrows just happened to hit — codes, complex algorithms, sophisticated nanomachines, motors, etc, I think that a common sense, unbiased view would agree that such is suggestive of deliberate aiming, not of painting a target after the fact.

Thirdly, it should be quite clear that, despite all the dismissive rhetorical declarations to the contrary, in the expression for Chi_500 the default, 0, is precisely what the objectors wish [that chance and necessity explain a given phenomenon; and pulsars, FYI, DR, were so explained, so S = 0], and there is always a specific, good reason for moving it to 1 in the relevant cases. For instance, as was given already, the problem of square brackets blocking posts in WP shows an island of function. So did the rocket that had to be destroyed on launch because a comma was wrong, and we definitely do see that in many living systems, variations in the code pushed through the ribosome lead to breakdown of function. In short, the objections are specious and amount to little more than “we do not like where this is pointing.”
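The 0/1 logic for S can be written down directly. In this minimal sketch the default is 0, and S becomes 1 only when a separately stated rule places the observation in the target zone T. The vocabulary and function names are hypothetical stand-ins for any independent inclusion criterion (a grammar, a functional test, a known constant).

```python
from typing import Callable, Optional


def specificity(observation: str,
                in_target: Optional[Callable[[str], bool]] = None) -> int:
    """Return S: 0 by default; 1 only if an independent rule places E in T."""
    if in_target is None:
        return 0  # default: chance plus necessity
    return 1 if in_target(observation) else 0


# Hypothetical vocabulary, fixed before any observation is examined.
VOCAB = frozenset({"the", "cat", "sat", "on", "mat"})


def all_words_in_vocab(text: str) -> bool:
    """Set-builder rule for T: non-empty text made only of VOCAB words."""
    words = text.split()
    return bool(words) and all(w in VOCAB for w in words)


print(specificity("the cat sat on the mat"))                      # 0
print(specificity("the cat sat on the mat", all_words_in_vocab))  # 1
print(specificity("qzx vvk", all_words_in_vocab))                 # 0
```

The rule is passed in as a separate function, so the criterion for T is stated independently of the particular E being tested.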

Let’s ask: your observationally anchored explanation for the origin of the codes [thus, machine language], algorithms, and organised molecular machines and systems for protein synthesis and ATP synthesis, just for starters, is XXXXXX.

(Onward, try explaining the origin of, say, whales, bats, echolocation etc., or human language capacity by step-by-step, observationally anchored, FUNCTIONAL incremental changes to a relevant initial animal. And show us some relevant concrete evidence, preferably published, that documents the observed origin of such FSCI by chance plus necessity without intelligent intervention. Hurling elephants by pointing to piles of papers behind paywalls will not do, nor will literature bluffs based on lists of papers, allusions to authors or vague summaries of claims. Give us the OBSERVATION-anchored evidence. We have a whole Internet full, as a first example, showing how FSCI is routinely the product of intelligence, how we can observe that we are dealing with functionally specific information that conforms to independent things like language and context, or algorithms that have to work, etc. etc., and how we see that as a rule such functions come in islands, i.e. we cannot reasonably argue that, once we are past 500 – 1,000 bits, a chance-based random walk giving high contingency can get us to first function, or leap from one island to a sufficiently distant one, on the gamut of the solar system or the observed cosmos.)

Can you fill in the blanks on repeatable observed spontaneous chance plus necessity emergence?

If you can, S will revert to 0. But if not, we have very good reason to hold that S = 1. END