Over the past few months, I noticed objectors to design theory dismissing or studiously ignoring a simple — much simpler than a clock — macroscopic example of Functionally Specific, Complex Organisation and/or associated Information (FSCO/I) and its empirically observed source, the ABU-Garcia Ambassadeur 6500 C3 fishing reel:

Yes, FSCO/I is real, and has a known cause.

{Added, Feb 6} It seems a few other clearly paradigmatic cases will help rivet the point, such as the organisation of a petroleum refinery:

. . . or the wireframe view of a rifle ‘scope (which itself has many carefully arranged components):

. . . or a calculator circuit:

. . . or the wireframe for a gear tooth (showing how complex and exactingly precise a gear is):

And if you doubt its relevance to the world of cell based life, I draw your attention to the code-based, ribosome using protein synthesis process that is a commonplace of life forms:

[vimeo 31830891]

U/D Mar 11, let’s add as a parallel to the oil refinery an outline of the cellular metabolism network as a case of integrated complex chemical systems instantiated using molecular nanotech that leaves the refinery in the dust for elegance and sophistication . . . noting how protein synthesis as outlined above is just the tiny corner at top left below, showing DNA, mRNA and protein assembly using tRNA in the little ribosome dots:

Now, the peculiar thing is, the demonstration of the reality and relevance of FSCO/I was routinely, studiously ignored by objectors, and there were even condescending or even apparently annoyed dismissals of my having made repeated reference to a fishing reel as a demonstrative example.

But, in a current thread Andre has brought the issue back into focus, as we can note from an exchange of comments:

Andre, #3: I have to ask our materialist friends…..

We need to go back to the Fishing reel, with its story:

[youtube bpzh3faJkXk]

The closest we got to a reasonable response on the design-indicating implications of FSCO/I in fishing reels as a positive demonstration (with implications for other cases), is this, from AR:

It requires no effort at all to accept that the Abu Ambassadeur reel was designed and built by Swedes. My father had several examples. He worked for a rival company and was tasked with reverse-engineering the design with a view to developing a similar product. His company gave up on it. And I would be the first to suggest there are limits to our knowledge. We cannot see beyond the past light-cone of the Earth.

I think a better word that would lead to less confusion would be “purposeful” rather than “intelligent”. It better describes people, tool-using primates, beavers, bees and termites. The more important distinction should be made between material purposeful agents about which I cannot imagine we could disagree (aforesaid humans, other primates, etc) and immaterial agents for which we have no evidence or indicia (LOL) . . .

Now, it should be readily apparent . . . let’s expand in step by step points of thought [u/d Feb 8] . . . that:

a –> intelligence is inherently purposeful, and

b –> that the fishing reel is an example of how the purposeful intelligent creativity involved in the intelligently directed configuration — aka, design — that

c –> leads to productive working together of multiple, correct parts properly arranged to achieve function through their effective interaction

d –> leaves behind it certain empirically evident and in principle quantifiable signs. In particular,

e –> the specific arrangement of particular parts or facets in the sort of nodes-arcs pattern in the exploded view diagram above is chock full of quantifiable, function-constrained information. That is,

f –> we may identify a structured framework and list of yes/no questions required to bring us to the cluster of effective configurations in the abstract space of possible configurations of relevant parts.

g –> This involves specifying the parts, specifying their orientation, their location relative to other parts, coupling, and possibly an assembly process. Where,

h –> such a string of structured questions and answers is a specification in a description language, and yields a value of functionally specific information in binary digits, bits.

If this sounds strange, reflect on how AutoCAD and similar drawing programs represent designs.
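As a rough illustration of points f to h, the sketch below counts the yes/no questions needed to fill in a fixed-format description of a set of parts. Every number in it (catalogue size, orientation count, grid resolution, part count) and every name (`FIELDS`, `questions_needed`, `description_bits`) is a hypothetical placeholder, not a measurement of the 6500 C3 or of any real CAD file format. It gives only the length of one particular description, which is an upper bound on the information needed under that assumed format.

```python
# Hypothetical per-part description format: field name -> number of allowed options.
# All values are illustrative assumptions, not data about any real reel.
FIELDS = {
    "part_type": 64,     # which catalogue entry the part is
    "orientation": 24,   # one of 24 axis-aligned orientations
    "x": 256,            # position on a coarse 256-step grid
    "y": 256,
    "z": 256,
    "coupling": 4,       # how the part attaches to its neighbour
}


def questions_needed(options: int) -> int:
    """Smallest number of yes/no answers that can single out one of `options` choices."""
    if options < 1:
        raise ValueError("options must be at least 1")
    return (options - 1).bit_length()  # integer form of ceil(log2(options))


def description_bits(fields: dict[str, int], part_count: int) -> int:
    """Total bits for `part_count` parts, each described with the same fields."""
    per_part = sum(questions_needed(n) for n in fields.values())
    return per_part * part_count


if __name__ == "__main__":
    per_part = description_bits(FIELDS, 1)
    print(f"{per_part} bits per part, {description_bits(FIELDS, 50)} bits for 50 parts")
```

With these made-up field sizes, each part takes 37 yes/no answers, so a 50-part assembly needs 1,850 bits in this format. A tighter description language would give a smaller figure.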

This is directly linked to a well known index of complexity, from Kolmogorov and Chaitin. As Wikipedia aptly summarises:

In algorithmic information theory (a subfield of computer science and mathematics), the Kolmogorov complexity (also known as descriptive complexity, Kolmogorov–Chaitin complexity, algorithmic entropy, or program-size complexity) of an object, such as a piece of text, is a measure of the computability resources needed to specify the object . . . . the complexity of a string is the length of the shortest possible description of the string in some fixed universal description language (the sensitivity of complexity relative to the choice of description language is discussed below). It can be shown that the Kolmogorov complexity of any string cannot be more than a few bytes larger than the length of the string itself. Strings, like the abab example above, whose Kolmogorov complexity is small relative to the string’s size are not considered to be complex.

A useful way to picture this is to recognise from the above, that the three dimensional complexity and functionally specific organisation of something like the 6500 C3 reel, may be reduced to a descriptive string. In the worst case (a random string), we can give some header contextual information and reproduce the string. In other cases, we may be able to spot a pattern and do much better than that, e.g. with an orderly string like abab . . . n times we can compress to a very short message that describes the order involved. In intermediate cases, in all codes we practically observe there is some redundancy that yields a degree of compressibility.
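Kolmogorov complexity itself is not computable in general, so the sketch below swaps in a crude, computable stand-in: the length of a string after `zlib` compression, which is only an upper bound on descriptive length. It compares an ordered string, a sample of English prose and pseudo-random bytes. The sample text, the seed and the function name `compression_ratio` are assumptions made for illustration.

```python
import random
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower means more compressible)."""
    return len(zlib.compress(data, 9)) / len(data)


# Ordered string: the 'abab...' pattern mentioned in the text.
ordered = b"ab" * 500

# Pseudo-random bytes from a fixed seed, so the run is repeatable.
rng = random.Random(0)
noise = bytes(rng.getrandbits(8) for _ in range(1000))

# Ordinary English prose (an arbitrary sample, chosen only for illustration).
english = (
    b"In the worst case we can give some header information and reproduce the "
    b"string. In other cases we may be able to spot a pattern and do much better "
    b"than that. In intermediate cases, such as the codes we meet in practice, "
    b"there is some redundancy that yields a degree of compressibility, so the "
    b"description is shorter than the text but far longer than a simple loop."
)

if __name__ == "__main__":
    for name, data in [("ordered", ordered), ("english", english), ("random", noise)]:
        print(f"{name:8s} {len(data):5d} bytes  ratio {compression_ratio(data):.2f}")
```

The ordered string should shrink to a small fraction of its size, the random bytes should barely shrink at all, and the prose should land in between. A general-purpose compressor measures statistical redundancy only; it says nothing about whether a string is functional.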

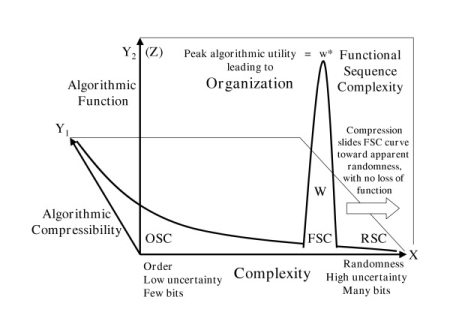

So, as Trevors and Abel were able to visualise a decade ago in one of the sleeping classic peer reviewed and published papers of design theory, we may distinguish random, ordered and functionally specific descriptive strings:

That is, we may see how islands of function emerge in an abstract space of possible sequences in which compressibility trades off against order and specific function in an algorithmic (or more broadly informational) context emerges. Where of course, functionality is readily observed in relevant cases: it works, or it fails, as any software debugger or hardware troubleshooter can tell you. Such islands may also be visualised in another way that allows us to see how this effect of sharp constraint on configurations in order to achieve interactive function enables us to detect the presence of design as best explanation of FSCO/I:

Obviously, as the just above infographic shows, beyond a certain level of complexity, the atomic and temporal resources of our solar system or the observed cosmos would be fruitlessly overwhelmed by the scope of the space of possibilities for descriptive strings, if search for islands of function was to be carried out on the approach of blind chance and/or mechanical necessity. We therefore now arrive at a practical process for operationally detecting design on its empirical signs — one that is independent of debates over visibility or otherwise of designers (but requires us to be willing to accept that we exemplify capabilities and characteristics of designers but do not exhaust the list of in principle possible designers):

Further, we may introduce relevant cases and a quantification:

That is, we may now introduce a metric model that summarises the above flowchart:

Chi_500 = I*S – 500, bits beyond the solar system search threshold . . . Eqn 1

What this tells us, is that if we recognise a case of FSCO/I beyond 500 bits (or if the observed cosmos is a more relevant scope, 1,000 bits) then the config space search challenge above becomes insurmountable for blind chance and mechanical necessity. The only actually empirically warranted adequate causal explanation for such cases is design — intelligently directed configuration. And, as shown, this extends to specific cases in the world of life, extending a 2007 listing of cases of FSCO/I by Durston et al in the literature.
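Eqn 1 can be written as a one-line function. The sketch below is a minimal reading of it, assuming that I is an information measure in bits and that S is a flag equal to 1 when a configuration is judged functionally specific and 0 otherwise; the surrounding text does not spell out either symbol, so both readings, and the names `chi`, `info_bits`, `specific` and `threshold_bits`, are assumptions. The inputs are hypothetical values chosen only to show how the sign behaves.

```python
def chi(info_bits: float, specific: bool, threshold_bits: int = 500) -> float:
    """Evaluate Chi = I*S - threshold, per Eqn 1.

    Assumptions (not spelled out in the surrounding text): `info_bits` is I,
    an information measure in bits, and `specific` is S, read as 1 when the
    configuration is judged functionally specific and 0 otherwise.
    """
    s = 1 if specific else 0
    return info_bits * s - threshold_bits


if __name__ == "__main__":
    # Hypothetical inputs, chosen only to show the sign behaviour.
    print(chi(504, True))                        # just past the 500-bit threshold
    print(chi(504, False))                       # S = 0 drops the value to -threshold
    print(chi(504, True, threshold_bits=1000))   # same input against the 1,000-bit threshold
```

A positive result means the assumed information measure exceeds the chosen threshold. How I and S are assigned in a given case is the substantive question, and the function does not settle it.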

To see how this works, we may try the thought exercise of turning our observed solar system into a set of 10^57 atoms regarded as observers, assigning to each a tray of 500 coins. Flip every 10^-14 s or so, and observe, doing so for 10^17 s, a reasonable lifespan for the observed cosmos:

The resulting needle in haystack blind search challenge is comparable to a search that samples a one straw sized zone in a cubical haystack comparable in thickness to our galaxy. That is, we here apply a blind chance and mechanical necessity driven dynamic-stochastic search to a case of a general system model,

. . . and find it to be practically insuperable.
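The arithmetic behind the coin-tray thought exercise can be checked directly. The sketch below uses only the figures stated in the text (10^57 observers, one observation every 10^-14 s, 10^17 s in total, 500 coins per tray) and reproduces the counting under the exercise's own assumption of blind, uniform sampling. The variable names are illustrative, and the code models no other kind of search process.

```python
# Figures taken from the thought exercise in the text.
OBSERVERS = 10**57          # atoms in the solar system, each treated as an observer
FLIPS_PER_SECOND = 10**14   # one flip of the 500-coin tray every 10^-14 s
DURATION_SECONDS = 10**17   # the time span used in the text

COINS = 500
configurations = 2**COINS   # distinct heads/tails patterns for one tray

samples = OBSERVERS * FLIPS_PER_SECOND * DURATION_SECONDS
fraction_sampled = samples / configurations  # upper bound: assumes no repeated patterns

if __name__ == "__main__":
    print(f"samples taken:      10^{len(str(samples)) - 1}")
    print(f"configurations:     about {configurations:.3e}")
    print(f"fraction sampled:   at most {fraction_sampled:.2e}")
```

Under these figures the observers take 10^88 samples out of roughly 3.3 × 10^150 possible patterns, which is a fraction on the order of 10^-63.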

By contrast, intelligent designers routinely produce text strings of 72 ASCII characters in recognisable, context-responsive English and the like.
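The link between 72 ASCII characters and the 500-bit threshold is a matter of counting storage capacity, as in the minimal sketch below. It assumes 7-bit ASCII, and the helper name `ascii_capacity_bits` is hypothetical. Capacity is not the same thing as the information content of English text, which is lower because natural language is redundant.

```python
BITS_PER_ASCII_CHAR = 7  # standard ASCII defines 128 = 2**7 code points


def ascii_capacity_bits(text: str) -> int:
    """Raw storage capacity, in bits, of `text` in 7-bit ASCII."""
    if not text.isascii():
        raise ValueError("text contains non-ASCII characters")
    return len(text) * BITS_PER_ASCII_CHAR


if __name__ == "__main__":
    sample = "x" * 72  # placeholder string; only its length matters here
    print(ascii_capacity_bits(sample))
```

A 72-character string has a capacity of 504 bits, so the space of all such strings has 128^72 = 2^504 members.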

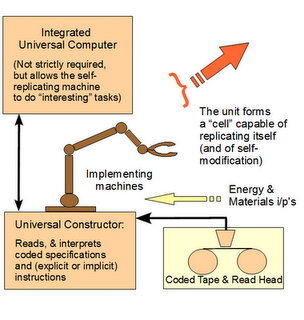

[U/D Feb 5th:] I forgot to add, on the integration of a von Neumann Self Replication facility, which requires a significant increment in FSCO/I, which may be represented:

Following von Neumann generally, such a machine uses . . .

This irreducible complexity is compounded by the requirement (i) for codes, requiring organised symbols and rules to specify both steps to take and formats for storing information, and (v) for appropriate material resources and energy sources.

Immediately, we are looking at islands of organised function for both the machinery and the information in the wider sea of possible (but mostly non-functional) configurations. In short, outside such functionally specific, and thus isolated, information-rich hot (or, “target”) zones, want of correct components and/or of proper organisation and/or co-ordination will block function from emerging or being sustained across time from generation to generation. So, once the set of possible configurations is large enough and the islands of function are credibly sufficiently specific/isolated, it is unreasonable to expect such function to arise from chance, or from chance circumstances driving blind natural forces under the known laws of nature.

And ever since Paley spoke of the thought exercise of a watch that replicated itself in the course of its movement, it has been pointed out that such a jump in FSCO/I points to yet higher more perfect art as credible cause.

It bears noting, then, that the only actually observed source of FSCO/I is design.

That is, we see here the vera causa test in action, that when we set out to explain observed traces from the unobservable deep past of origins, we should apply in our explanations only such factors as we have observed to be causally adequate to such effects. The simple application of this principle to the FSCO/I in life forms immediately raises the question of design as causal explanation.

A good step to help us see why is to consult Leslie Orgel in a pivotal 1973 observation:

. . . In brief, living organisms are distinguished by their specified complexity. Crystals are usually taken as the prototypes of simple well-specified structures, because they consist of a very large number of identical molecules packed together in a uniform way. Lumps of granite or random mixtures of polymers are examples of structures that are complex but not specified. The crystals fail to qualify as living because they lack complexity; the mixtures of polymers fail to qualify because they lack specificity.

These vague ideas can be made more precise by introducing the idea of information. Roughly speaking, the information content of a structure is the minimum number of instructions needed to specify the structure. [–> this is of course equivalent to the string of yes/no questions required to specify the relevant “wiring diagram” for the set of functional states, T, in the much larger space of possible clumped or scattered configurations, W, as Dembski would go on to define in NFL in 2002, also cf here, here and here (with here on self-moved agents as designing causes).] One can see intuitively that many instructions are needed to specify a complex structure. [–> so if the q’s to be answered are Y/N, the chain length is an information measure that indicates complexity in bits . . . ] On the other hand a simple repeating structure can be specified in rather few instructions. [–> do once and repeat over and over in a loop . . . ] Complex but random structures, by definition, need hardly be specified at all . . . . Paley was right to emphasize the need for special explanations of the existence of objects with high information content, for they cannot be formed in nonevolutionary, inorganic processes.

[The Origins of Life (John Wiley, 1973), p. 189, p. 190, p. 196. Of course,

a –> that immediately highlights OOL, where the required self-replicating entity is part of what has to be explained (cf. Paley here), a notorious conundrum for advocates of evolutionary materialism; one, that has led to mutual ruin documented by Shapiro and Orgel between metabolism first and genes first schools of thought, cf here.

b –> Behe would go on to point out that irreducibly complex structures are not credibly formed by incremental evolutionary processes and Menuge et al would bring up serious issues for the suggested exaptation alternative, cf. his challenges C1 – 5 in the just linked. Finally,

c –> Dembski highlights that CSI comes in deeply isolated islands T in much larger configuration spaces W, for biological systems functional islands. That puts up serious questions for origin of dozens of body plans reasonably requiring some 10 – 100+ mn bases of fresh genetic information to account for cell types, tissues, organs and multiple coherently integrated systems. Wicken’s remarks a few years later as already were cited now take on fuller force in light of the further points from Orgel at pp. 190 and 196 . . . ]

. . . and J S Wicken in a 1979 remark:

. . . then also this from Sir Fred Hoyle:

Once we see that life is cosmic it is sensible to suppose that intelligence is cosmic. Now problems of order, such as the sequences of amino acids in the chains which constitute the enzymes and other proteins, are precisely the problems that become easy once a directed intelligence enters the picture, as was recognised long ago by James Clerk Maxwell in his invention of what is known in physics as the Maxwell demon. The difference between an intelligent ordering, whether of words, fruit boxes, amino acids, or the Rubik cube, and merely random shufflings can be fantastically large, even as large as a number that would fill the whole volume of Shakespeare’s plays with its zeros. So if one proceeds directly and straightforwardly in this matter, without being deflected by a fear of incurring the wrath of scientific opinion, one arrives at the conclusion that biomaterials with their amazing measure or order must be the outcome of intelligent design. No other possibility I have been able to think of in pondering this issue over quite a long time seems to me to have anything like as high a possibility of being true.” [[Evolution from Space (The Omni Lecture[ –> Jan 12th 1982]), Enslow Publishers, 1982, pg. 28.]

Why then, the resistance to such an inference?

AR gives us a clue:

The more important distinction should be made between material purposeful agents about which I cannot imagine we could disagree (aforesaid humans, other primates, etc) and immaterial agents for which we have no evidence or indicia (LOL) . . .

That is, there is a perception that to make a design inference on origin of life or of body plans based on the observed cause of FSCO/I is to abandon science for religious superstition. This perception persists regardless of the strong insistence of design thinkers, from the inception of the school of thought as a movement, that inference to design on the world of life is inference to ART as causal process (in contrast to blind chance and mechanical necessity), as opposed to inference to the supernatural. And underneath lurks the problem of a priori imposed Lewontinian evolutionary materialism, as was notoriously stated in a review of Sagan’s The Demon-Haunted World:

. . . the problem is to get them [hoi polloi] to reject irrational and supernatural explanations of the world, the demons that exist only in their imaginations, and to accept a social and intellectual apparatus, Science, as the only begetter of truth [–> NB: this is a knowledge claim about knowledge and its possible sources, i.e. it is a claim in philosophy not science; it is thus self-refuting]. . . .

It is not that the methods and institutions of science somehow compel us to accept a material explanation of the phenomenal world, but, on the contrary, that we are forced by our a priori adherence to material causes [–> another major begging of the question . . . ] to create an apparatus of investigation and a set of concepts that produce material explanations, no matter how counter-intuitive, no matter how mystifying to the uninitiated. Moreover, that materialism is absolute [–> i.e. here we see the fallacious, indoctrinated, ideological, closed mind . . . ], for we cannot allow a Divine Foot in the door . . .

[From: “Billions and Billions of Demons,” NYRB, January 9, 1997. In case you imagine this is “quote-mined” I suggest you read the fuller annotated cite here.]

A priori Evolutionary Materialism has been dressed up in the lab coat and many have thus been led to imagine that to draw an inference that just might open the door a crack to that barbaric Bronze Age sky-god myth — as they have been indoctrinated to think about God (in gross error, start here) — is to abandon science for chaos.

Philip Johnson’s reply, rebuttal and rebuke was well merited:

And so, our answer to AR must first reflect BA’s: Craig Venter et al positively demonstrate that intelligent design and/or modification of cell based life forms is feasible, effective and an actual cause of observable information in life forms. To date, by contrast — after 150 years of trying — the observational base for bio-functional complex, specific information beyond 500 – 1,000 bits originating by blind chance and mechanical necessity is ZERO.

So, straight induction trumps ideological speculation, per the vera causa test.

That is, at minimum, design sits at the explanatory table regarding origin of life and origin of body plans, as of inductive right.

And, we may add that by highlighting the case for the origin of the living cell, this applies from the root on up and should shift our evaluation of the reasonableness of design as an alternative for major, information-rich features of life-forms, including our own. Particularly as regards our being equipped for language.

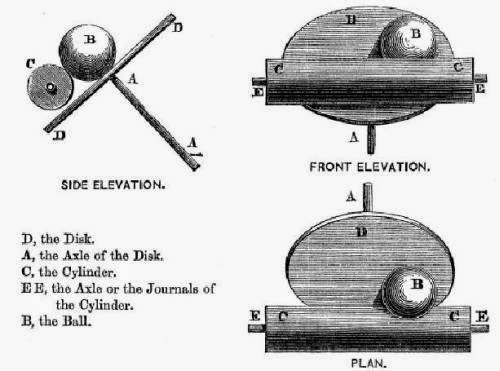

Going beyond, we note that we observe intelligence in action, but have no good reason to confine it to embodied forms. Not least, because blindly mechanical, GIGO-limited computation such as in a ball and disk integrator:

. . . or a digital circuit based computer:

. . . or even a neural network:

. . . is dynamic-stochastic system based signal processing, it simply is not equal to insightful, self-aware, responsibly free rational contemplation, reasoning, warranting, knowing and linked imaginative creativity. Indeed, it is the gap between these two things that is responsible for the intractability of the so-called Hard Problem of Consciousness, as can be seen from say Carter’s formulation which insists on the reduction:

The term . . . refers to the difficult problem of explaining why we have qualitative phenomenal experiences. It is contrasted with the “easy problems” of explaining the ability to discriminate, integrate information, report mental states, focus attention, etc. Easy problems are easy because all that is required for their solution is to specify a mechanism that can perform the function. That is, their proposed solutions, regardless of how complex or poorly understood they may be, can be entirely consistent with the modern materialistic conception of natural phenomen[a]. Hard problems are distinct from this set because they “persist even when the performance of all the relevant functions is explained.”

Notice, the embedded a priori materialism.

2350 years past, Plato spotlighted the fatal foundational flaw in his The Laws, Bk X, drawing an inference to cosmological design:

Ath. . . . when one thing changes another, and that another, of such will there be any primary changing element? How can a thing which is moved by another ever be the beginning of change? Impossible. But when the self-moved changes other, and that again other, and thus thousands upon tens of thousands of bodies are set in motion, must not the beginning of all this motion be the change of the self-moving principle? . . . . self-motion being the origin of all motions, and the first which arises among things at rest as well as among things in motion, is the eldest and mightiest principle of change, and that which is changed by another and yet moves other is second.

Cle. Certainly we should.

Ath. And when we see soul in anything, must we not do the same-must we not admit that this is life? [[ . . . . ]

Cle. You mean to say that the essence which is defined as the self-moved is the same with that which has the name soul?

Ath. Yes; and if this is true, do we still maintain that there is anything wanting in the proof that the soul is the first origin and moving power of all that is, or has become, or will be, and their contraries, when she has been clearly shown to be the source of change and motion in all things?

Cle. Certainly not; the soul as being the source of motion, has been most satisfactorily shown to be the oldest of all things.

Ath. And is not that motion which is produced in another, by reason of another, but never has any self-moving power at all, being in truth the change of an inanimate body, to be reckoned second, or by any lower number which you may prefer?

Cle. Exactly.

In effect, the key problem is that in our time, many have become wedded to an ideology that attempts to get North by insistently heading due West.

Mission impossible.

Instead, let us let the chips lie where they fly as we carry out an inductive analysis.

Patently, FSCO/I is only known to come about by intelligently directed — thus purposeful — configuration. The islands of function in config spaces and needle in haystack search challenge easily explain why, on grounds remarkably similar to those that give the statistical underpinnings of the second law of thermodynamics.

Further, while we exemplify design and know that in our case intelligence is normally coupled to brain operation, we have no good reason to infer that it is merely a result of the blindly mechanical computation of the neural network substrates in our heads. Indeed, we have reason to believe that blind GIGO limited mechanisms driven by forces of chance and necessity are utterly at categorical difference from our familiar responsible freedom. (And it is noteworthy that those who champion the materialist view often seek to undermine responsible freedom to think, reason, warrant, decide and act.)

To all such, we must contrast the frank declaration of evolutionary theorist J B S Haldane:

“It seems to me immensely unlikely that mind is a mere by-product of matter. For if my mental processes are determined wholly by the motions of atoms in my brain I have no reason to suppose that my beliefs are true. They may be sound chemically, but that does not make them sound logically. And hence I have no reason for supposing my brain to be composed of atoms. In order to escape from this necessity of sawing away the branch on which I am sitting, so to speak, I am compelled to believe that mind is not wholly conditioned by matter.” [[“When I am dead,” in Possible Worlds: And Other Essays [1927], Chatto and Windus: London, 1932, reprint, p.209. (Highlight and emphases added.)]

And so, when we come to something like the origin of a fine tuned cosmos fitted for C-Chemistry, aqueous medium, code and algorithm using, cell-based life, we should at least be willing to seriously consider Sir Fred Hoyle’s point:

From 1953 onward, Willy Fowler and I have always been intrigued by the remarkable relation of the 7.65 MeV energy level in the nucleus of 12 C to the 7.12 MeV level in 16 O. If you wanted to produce carbon and oxygen in roughly equal quantities by stellar nucleosynthesis, these are the two levels you would have to fix, and your fixing would have to be just where these levels are actually found to be. Another put-up job? . . . I am inclined to think so. A common sense interpretation of the facts suggests that a super intellect has “monkeyed” with the physics as well as the chemistry and biology, and there are no blind forces worth speaking about in nature. [F. Hoyle, Annual Review of Astronomy and Astrophysics, 20 (1982): 16. Emphasis added.]

As he also noted:

I do not believe that any physicist who examined the evidence could fail to draw the inference that the laws of nuclear physics have been deliberately designed with regard to the consequences they produce within stars. [[“The Universe: Past and Present Reflections.” Engineering and Science, November, 1981. pp. 8–12]

That is, we should at minimum be willing to ponder seriously the possibility of creative mind beyond the cosmos, beyond matter, as root cause of what we see. If, we are willing to allow FSCO/I to speak for itself as a reliable index of design. Even, through a multiverse speculation.

For, as John Leslie classically noted:

One striking thing about the fine tuning is that a force strength or a particle mass often appears to require accurate tuning for several reasons at once. Look at electromagnetism. Electromagnetism seems to require tuning for there to be any clear-cut distinction between matter and radiation; for stars to burn neither too fast nor too slowly for life’s requirements; for protons to be stable; for complex chemistry to be possible; for chemical changes not to be extremely sluggish; and for carbon synthesis inside stars (carbon being quite probably crucial to life). Universes all obeying the same fundamental laws could still differ in the strengths of their physical forces, as was explained earlier, and random variations in electromagnetism from universe to universe might then ensure that it took on any particular strength sooner or later. Yet how could they possibly account for the fact that the same one strength satisfied many potentially conflicting requirements, each of them a requirement for impressively accurate tuning?

. . . [.] . . . the need for such explanations does not depend on any estimate of how many universes would be observer-permitting, out of the entire field of possible universes. Claiming that our universe is ‘fine tuned for observers’, we base our claim on how life’s evolution would apparently have been rendered utterly impossible by comparatively minor alterations in physical force strengths, elementary particle masses and so forth. There is no need for us to ask whether very great alterations in these affairs would have rendered it fully possible once more, let alone whether physical worlds conforming to very different laws could have been observer-permitting without being in any way fine tuned. Here it can be useful to think of a fly on a wall, surrounded by an empty region. A bullet hits the fly. Two explanations suggest themselves. Perhaps many bullets are hitting the wall or perhaps a marksman fired the bullet. There is no need to ask whether distant areas of the wall, or other quite different walls, are covered with flies so that more or less any bullet striking there would have hit one. The important point is that the local area contains just the one fly. [Our Place in the Cosmos, 1998 (courtesy Wayback Machine) Emphases added.]

In short, our observed cosmos sits at a locally deeply isolated, functionally specific, complex configuration of underlying physics and cosmology that enable the sort of life forms we see. That needs to be explained adequately, even as for a lone fly on a patch of wall swatted by a bullet.

And, if we are willing to consider it, that strongly points to a marksman with the right equipment.

Even, if that may be a mind beyond the material, inherently contingent cosmos we observe.

Even, if . . . END

We have recently discovered a 3rd rotary motor [ –> after the Flagellum and the ATP Synthase Enzyme] that is used by cells for propulsion.

http://www.cell.com/current-bi…..%2901506-1

Please give me an honest answer: how on earth can you even believe or hang on to the hope that this system not only designed itself but built itself? This view is not in accordance with what we observe in the universe. I want to believe you that it can build and design itself but please show me how! I’m an engineer and I can promise you in my whole working life I have NEVER seen such a system come into existence on its own. If you have proof of this please share it with me so that I can also start believing in what you do!

Andre, 22: I see no attempt by anyone to answer my question…

How do molecular machines design and build themselves?

Anyone?

KF, 23: providing you mean the heavily endothermic information rich molecules and key-lock fitting components in the nanotech machines required for the living cell, they don’t, and especially, not in our observation. Nor do codes (languages) and algorithms (step by step procedures) assemble themselves out of molecular noise in warm salty ponds etc. In general, the notion that functionally specific complex organisation and associated information comes about by blind chance and mechanical necessity is without empirical warrant. But, institutionalised commitment to Lewontinian a priori evolutionary materialism has created the fixed notion in a great many minds that this “must” have happened and that to stop and question this is to abandon “Science.” So much the worse for the vera causa principle that in explaining a remote unobserved past of origins, there must be a requirement that we first observe the actual causes seen to produce such effects and use them in explanation. If that were done, the debates and contentions would be over as there is but one empirically grounded cause of FSCO/I: intelligently directed configuration, aka design.

Andre, 24: On the money.

Piotr is an expert on linguistics, I wonder if he can tell us how the system of speech transmission, encoding and decoding could have evolved in a stepwise fashion.

Here is a simple example…..

http://4.bp.blogspot.com/_1VPL…..+Model.gif

[I insert:]

[And, elaborate a bit, on technical requisites:]

I really want to know how or am I just being unreasonable again?