In the still active discussion thread on failure of compensation arguments, long term maverick ID (and, I think, still YEC-sympathetic) supporter SalC comments:

SalC, 570: . . . I’ve argued against using information theory type arguments in defense of ID, it adds way too much confusion. Basic probability will do the job, and basic probability is clear and unassailable.

The multiplicities of interest to ID proponents don’t vary with temperature, whereas the multiplicities from a thermodynamic perspective change with temperature. I find that very problematic for invoking 2LOT in defense of ID.

Algorithmically controlled metabolisms (such as realized in life) are low multiplicity constructs as a matter of principle. They are high in information content. But why add more jargon and terminology?

Most people understand “complex-computer-like machines such as living creatures are far from the expected outcome of random processes”. This is a subtle assertion of LLN [ –> The Law of Large Numbers in Statistics]. This is a different way of posing the Humpty Dumpty problem.

There are an infinite number of ways to make lock-and-key or login/password systems, but just because there are infinite number of ways to do this does not make them highly probable from random processes . . . . Why invoke 2LOT? Why invoke fancy terms that add confusion? One deliberately uses unclear arguments when one wishes to obfuscate, but why be unclear when evidence is on one’s side? . . . .

LLN and variations of Jonathan Wells Humpty Dumpty argument I think have proven themselves in the arena of debate. Maybe even more tersely, extrapolating Pasteur, “life does not come from non-life.”

In response, while SalC has a point where a basic and non-controversial exchange is in hand, we usually do not have this luxury and must address the technical issues to some significant extent. When we do so all of the above factors at once come out as differing facets of the same issue.

Facets, that inextricably interact as parts of a whole.

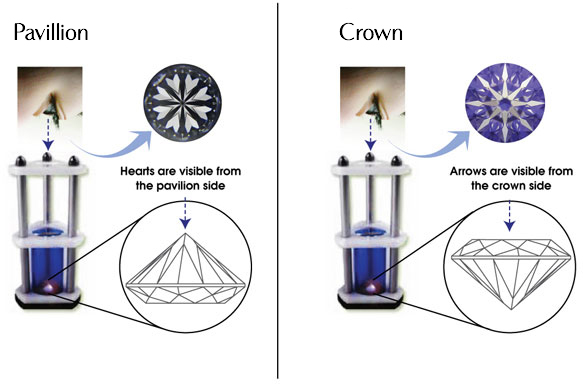

Analogous to say the hearts and arrows effect with suitably, very specifically cut and polished diamonds:

In short, once we begin to probe beneath the surface, design thought shows itself to be . . . irreducibly complex.

Accordingly, I responded as follows at 592, which I think I should headline and augment:

_______________

KF, 592: >>Pardon, but I have a different take: on years of observing, any serious design argument will be twisted into pretzels, strawmannised, confused, clouded and generally distorted and dismissed by the sort of ruthlessly determined and too often amoral or outright nihilistic, truth and fairness disregarding objectors we frequently face.

This is because, too many such are “any means necessary”/”ends justify means” committed ideologues full of agit-prop talking points and agendas.

That’s exactly how the trained, indoctrinated Marxist agitators of my youth operated. Benumbed in conscience, insensitive to truth, fundamentally rage-blinded [even when charming], secure in their notion that they were the vanguard of the future/progress, and that they were championing pure victims of the oppressor classes who deserved anything they got.

(Just to illustrate the attitude, I remember one who accused me falsely of theft of an item of equipment kept in my lab. I promptly had it signed over to the Student Union once I understood the situation, then went to her office and confronted her with the sign off. How can you be so thin skinned was her only response; taking full advantage of the rule that men must restrain themselves in dealing with women, however outrageous the latter, and of course seeking to further wound. Ironically, this champion of the working classes was from a much higher class-origin than I was . . . actually, unsurprisingly. To see the parallels, notice how often not only objectors who come here but the major materialist agit-prop organisations — without good grounds — insinuate calculated dishonesty and utter incompetence to the point that we should not have been able to complete a degree, on our part.)

I suggest, first, that the pivot of design discussions on the world of life is functionally specific, complex interactive Wicken wiring diagram organisation of parts that achieve a whole performance based on particular arrangement and coupling, and associated information. Information that is sometimes explicit (R/DNA codes) or sometimes may be drawn out by using structured Y/N q’s that describe the wiring pattern to achieve function.

FSCO/I, for short.

{Aug. 1:} Back to Reels to show the basic “please face and acknowledge facts” reality of FSCO/I, here the Penn International Trolling Reel exploded view:

. . . and a video showing the implications of this “wiring diagram” for how it is put together in the factory:

https://www.youtube.com/watch?v=TTqzSHZKQ1k

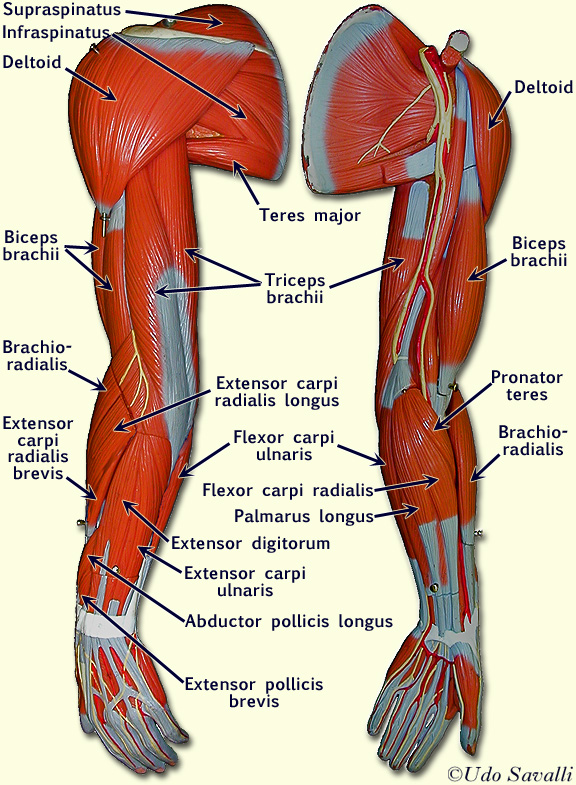

. . . just, remember, the arm-hand system is a complex, multi-axis cybernetic manipulator-arm:

This concept is not new, it goes back to Orgel 1973:

. . . In brief, living organisms [–> functional context] are distinguished by their specified complexity. Crystals are usually taken as the prototypes of simple well-specified structures, because they consist of a very large number of identical molecules packed together in a uniform way. Lumps of granite or random mixtures of polymers are examples of structures that are complex but not specified. The crystals fail to qualify as living because they lack complexity; the mixtures of polymers fail to qualify because they lack specificity . . . .

[HT, Mung, fr. p. 190 & 196:] These vague ideas can be made more precise by introducing the idea of information. Roughly speaking, the information content of a structure is the minimum number of instructions needed to specify the structure. [–> this is of course equivalent to the string of yes/no questions required to specify the relevant “wiring diagram” for the set of functional states, T, in the much larger space of possible clumped or scattered configurations, W, as Dembski would go on to define in NFL in 2002 . . . ] One can see intuitively that many instructions are needed to specify a complex structure. [–> so if the q’s to be answered are Y/N, the chain length is an information measure that indicates complexity in bits . . . ] On the other hand a simple repeating structure can be specified in rather few instructions. [–> do once and repeat over and over in a loop . . . ] Complex but random structures, by definition, need hardly be specified at all . . . . Paley was right to emphasize the need for special explanations of the existence of objects with high information content, for they cannot be formed in nonevolutionary, inorganic processes. [The Origins of Life (John Wiley, 1973), p. 189, p. 190, p. 196.]

. . . as well as Wicken, 1979:

‘Organized’ systems are to be carefully distinguished from ‘ordered’ systems. Neither kind of system is ‘random,’ but whereas ordered systems are generated according to simple algorithms [[i.e. “simple” force laws acting on objects starting from arbitrary and common- place initial conditions] and therefore lack complexity, organized systems must be assembled element by element according to an [[originally . . . ] external ‘wiring diagram’ with a high information content . . . Organization, then, is functional complexity and carries information. It is non-random by design or by selection, rather than by the a priori necessity of crystallographic ‘order.’ [[“The Generation of Complexity in Evolution: A Thermodynamic and Information-Theoretical Discussion,” Journal of Theoretical Biology, 77 (April 1979): p. 353, of pp. 349-65. (Emphases and notes added. Nb: “originally” is added to highlight that for self-replicating systems, the blue print can be built-in.)]

. . . and is pretty directly stated by Dembski in NFL:

p. 148:“The great myth of contemporary evolutionary biology is that the information needed to explain complex biological structures can be purchased without intelligence. My aim throughout this book is to dispel that myth . . . . Eigen and his colleagues must have something else in mind besides information simpliciter when they describe the origin of information as the central problem of biology.

I submit that what they have in mind is specified complexity, or what equivalently we have been calling in this Chapter Complex Specified information or CSI . . . .

Biological specification always refers to function. An organism is a functional system comprising many functional subsystems. . . . In virtue of their function [[a living organism’s subsystems] embody patterns that are objectively given and can be identified independently of the systems that embody them. Hence these systems are specified in the sense required by the complexity-specificity criterion . . . the specification can be cashed out in any number of ways [[through observing the requisites of functional organisation within the cell, or in organs and tissues or at the level of the organism as a whole. Dembski cites:

Wouters, p. 148: “globally in terms of the viability of whole organisms,”

Behe, p. 148: “minimal function of biochemical systems,”

Dawkins, pp. 148 – 9: “Complicated things have some quality, specifiable in advance, that is highly unlikely to have been acquired by ran-| dom chance alone. In the case of living things, the quality that is specified in advance is . . . the ability to propagate genes in reproduction.”

On p. 149, he roughly cites Orgel’s famous remark from 1973, which exactly cited reads:

In brief, living organisms are distinguished by their specified complexity. Crystals are usually taken as the prototypes of simple well-specified structures, because they consist of a very large number of identical molecules packed together in a uniform way. Lumps of granite or random mixtures of polymers are examples of structures that are complex but not specified. The crystals fail to qualify as living because they lack complexity; the mixtures of polymers fail to qualify because they lack specificity . . .

And, p. 149, he highlights Paul Davies in The Fifth Miracle: “Living organisms are mysterious not for their complexity per se, but for their tightly specified complexity.”] . . .”

p. 144: [[Specified complexity can be more formally defined:] “. . . since a universal probability bound of 1 [[chance] in 10^150 corresponds to a universal complexity bound of 500 bits of information, [[the cluster] (T, E) constitutes CSI because T [[ effectively the target hot zone in the field of possibilities] subsumes E [[ effectively the observed event from that field], T is detachable from E, and T measures at least 500 bits of information . . . ”
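As a quick arithmetic check on the correspondence just quoted between odds of 1 in 10^150 and 500 bits, here is a minimal Python sketch. The function name `bits_for_odds` is purely illustrative and does not come from NFL; it simply applies the standard relation I = -log2(p).

```python
import math

def bits_for_odds(exponent_base10: float) -> float:
    """Information in bits for a probability of 1 in 10**exponent_base10."""
    # -log2(10**-x) equals x * log2(10)
    return exponent_base10 * math.log2(10)

bound_bits = bits_for_odds(150)
print(f"1 in 10^150 corresponds to about {bound_bits:.1f} bits")
```

The result falls just short of 500, which is the rounded threshold used in the passage.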

What happens at relevant cellular level, is that this comes down to highly endothermic C-Chemistry, aqueous medium context macromolecules in complexes that are organised to achieve highly integrated and specific interlocking functions required for metabolising, self replicating cells to function.

This implicates huge quantities of information manifest in the highly specific functional organisation. Which is observable on a much coarser resolution than the nm range of basic molecular interactions. That is we see tightly constrained clusters of micro-level arrangements — states — consistent with function, as opposed to the much larger numbers of possible but overwhelmingly non-functional ways the same atoms and monomer components could be chemically and/or physically clumped “at random.” In turn, that is a lot fewer ways than the same could be scattered across a Darwin’s pond or the like.

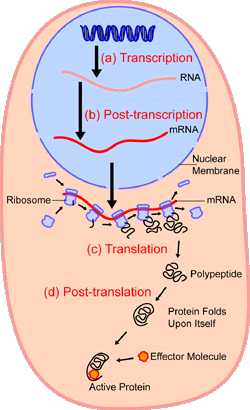

{Aug. 2} For illustration let us consider the protein synthesis process at gross level:

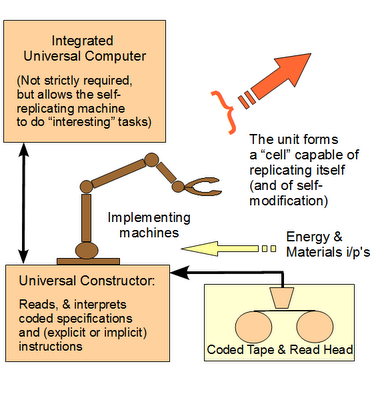

. . . spotlighting and comparing the ribosome in action as a coded tape Numerically Controlled machine:

. . . then at a little more zoomed in level:

. . . then in the wider context of cellular metabolism [protein synthesis is the little bit with two call-outs in the top left of the infographic]:

Thus, starting from the “typical” diffused condition, we readily see how a work to clump at random emerges, and a further work to configure in functionally specific ways.

With implications for this component of entropy change.

As well as for the direction of the clumping and assembly process to get the right parts together, organised in the right cluster of ways that are consistent with function.

Thus, there are implications of prescriptive information that specifies the relevant wiring diagram. (Think, AutoCAD etc as a comparison.)

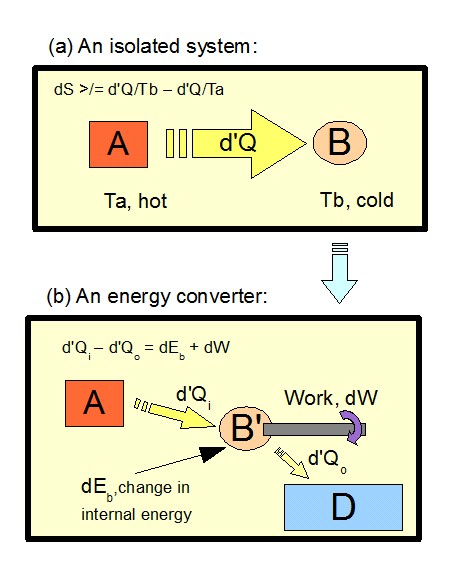

Pulling back, we can see that to achieve such, the reasonable — and empirically warranted — expectation, is

a: to find energy, mass and information sources and flows associated with

b: energy converters that provide shaft work or controlled flows [I use a heat engine here but energy converters are more general than that], linked to

c: constructors that carry out the particular work, under control of

d: relevant prescriptive information that explicitly or implicitly regulates assembly to match the wiring diagram requisites of function,

. . . [u/d Apr 13] or, comparing and contrasting a Maxwell Demon model that imposes organisation by choice with use of mechanisms, courtesy Abel:

. . . also with

e: exhaust or dissipation otherwise of degraded energy [typically, but not only, as heat . . . ] and discarding of wastes. (Which last gives relevant compensation where dS cosmos rises. Here, we may note SalC’s own recent cite on that law from Clausius, at 570 in the previous thread that shows what “relevant” implies: Heat can never pass from a colder to a warmer body without some other change, connected therewith, occurring at the same time.)

{Added, April 19, 2015: Clausius’ statement:}

By contrast with such, there seems to be a strong belief that irrelevant mass and/or energy flows without coupled converters, constructors and prescriptive organising information, through phenomena such as diffusion and fluctuations can somehow credibly hit on a replicating entity that then can ratchet up into a full encapsulated, gated, metabolising, algorithmic code using self replicating cell.

Such is thermodynamically — yes, thermodynamically, informationally and probabilistically [loose sense] utterly implausible. And, the sort of implied genes first/RNA world, or alternatively metabolism first scenarios that have been suggested are without foundation in empirically observed adequate cause tracing only to blind chance and mechanical necessity.

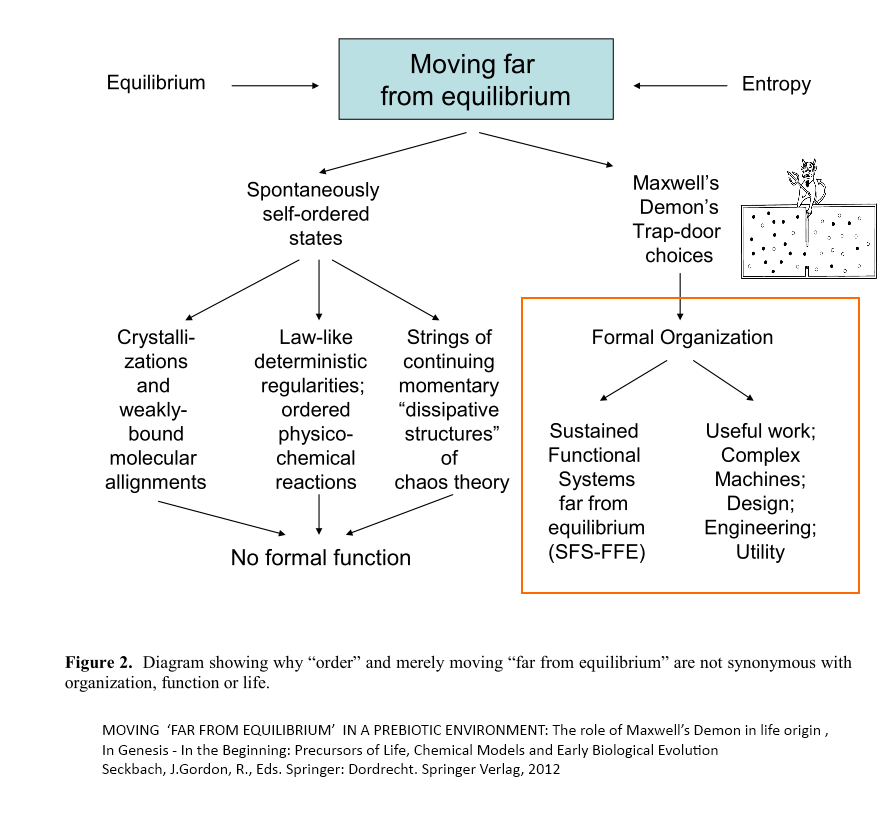

{U/D, Apr 13:} Abel 2012 makes much the same point, in his book chapter, MOVING ‘FAR FROM EQUILIBRIUM’ IN A PREBIOTIC ENVIRONMENT: The role of Maxwell’s Demon in life origin:

Mere heterogeneity and/or order do not even begin to satisfy the necessary and sufficient conditions for life. Self-ordering tendencies provide no mechanism for self-organization, let alone abiogenesis. All sorts of physical astronomical “clumping,” weak-bonded molecular alignments, phase changes, and outright chemical reactions occur spontaneously in nature that have nothing to do with life. Life is organization-based, not order-based. As we shall see below in Section 6, order is poisonous to organization.

Stochastic ensembles of nucleotides and amino acids can polymerize naturalistically (with great difficulty). But functional sequencing of those monomers cannot be determined by any fixed physicodynamic law. It is well-known that only one 150-mer polyamino acid string out of 10^74 stochastic ensembles folds into a tertiary structure with any hint of protein function (Axe, 2004). This takes into full consideration the much publicized substitutability of amino acids without loss of function within a typical protein family membership. The odds are still only one functional protein out of 10^74 stochastic ensembles. And 150 residues are of minimal length to qualify for protein status. Worse yet, spontaneously condensed Levo-only peptides with peptide-only bonds between only biologically useful amino acids in a prebiotic environment would rarely exceed a dozen mers in length. Without polycodon prescription and sophisticated ribosome machinery, not even polypeptides form that would contribute much to “useful biological work.” . . . .

There are other reasons why merely “moving far from equilibrium” is not the key to life as seems so universally supposed. Disequilibrium stemming from mere physicodynamic constraints and self-ordering phenomena would actually be poisonous to life-origin (Abel, 2009b). The price of such constrained and self-ordering tendencies in nature is the severe reduction of Shannon informational uncertainty in any physical medium (Abel, 2008b, 2010a). Self-ordering processes preclude information generation because they force conformity and reduce freedom of selection. If information needs anything, it is the uncertainty made possible by freedom from determinism at true decisions nodes and logic gates. Configurable switch-settings must be physicodynamically inert (Rocha, 2001; Rocha & Hordijk, 2005) for genetic programming and evolution of the symbol system to take place (Pattee, 1995a, 1995b). This is the main reason that Maxwell’s Demon model must use ideal gas molecules. It is the only way to maintain high uncertainty and freedom from low informational physicochemical determinism. Only then is the control and regulation so desperately needed for organization and life-origin possible. The higher the combinatorial possibilities and epistemological uncertainty of any physical medium, the greater is the information recordation potential of that matrix.

Constraints and law-like behavior only reduce uncertainty (bit content) of any physical matrix. Any self-ordering tendency precludes the freedom from law needed to program logic gates and configurable switch settings. The regulation of life requires not only true decision nodes, but wise choices at each decision node. This is exactly what Maxwell’s Demon does. No yet-to-be discovered physicodynamic law will ever be able to replace the Demon’s wise choices, or explain the exquisite linear digital PI programming and organization of life (Abel, 2009a; Abel & Trevors, 2007). Organization requires choice contingency rather than chance contingency or law (Abel, 2008b, 2009b, 2010a). This conclusion comes via deductive logical necessity and clear-cut category differences, not just from best-thus-far empiricism or induction/abduction.

In short, the three perspectives converge. Thermodynamically, the implausibility of finding information rich FSCO/I in islands of function in vast config spaces . . .

. . . — where we can picture the search by using coins as stand-in for one-bit registers —

. . . links directly to the overwhelmingly likely outcome of spontaneous processes. Such is of course a probabilistically linked outcome. And, information is often quantified on the same probability thinking.
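To make the coin picture concrete, the following Python sketch counts the configurations of 500 coins (one coin per one-bit register) and computes, exactly, how much of the probability sits near an even heads/tails split. The 200 to 300 heads band and the helper name `prob_heads_between` are illustrative assumptions of mine, not part of the original argument.

```python
import math

N_COINS = 500  # one coin per one-bit register

# Total number of distinct heads/tails configurations.
total_configs = 2 ** N_COINS

def prob_heads_between(lo: int, hi: int, n: int = N_COINS) -> float:
    """Exact probability that n fair coins show between lo and hi heads, inclusive."""
    favourable = sum(math.comb(n, k) for k in range(lo, hi + 1))
    return favourable / 2 ** n

near_even = prob_heads_between(200, 300)   # illustrative band around 250 heads
all_heads = 1 / total_configs              # one specific configuration

print(f"configurations: about 10^{math.log10(total_configs):.1f}")
print(f"P(200 <= heads <= 300) = {near_even:.6f}")
print(f"P(one specific configuration) = {all_heads:.2e}")
```

Almost all of the probability lies in the near-even band, while any single specified configuration is one among more than 10^150 possibilities.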

Taking a step back to App A of my always linked note, following Thaxton, Bradley and Olsen in TMLO 1984 and amplifying a bit:

. . . Going forward to the discussion in Ch 8, in light of the definition dG = dH – TdS, we may then split up the TdS term into contributing components, thusly:

First, dG = [dE + PdV] – TdS . . . [Eqn A.9, cf def’ns for G, H above]

But, [1] since pressure-volume work [–> the PdV term] may be seen as negligible in the context we have in mind, and [2] since we may look at dE as shifts in bonding energy [which will be more or less the same in DNA or polypeptide/protein chains of the same length regardless of the sequence of the monomers], we may focus on the TdS term. This brings us back to the clumping then configuring sequence of changes in entropy in the Micro-Jets example above:

dG = dH – T[dSclump + dSconfig] . . . [Eqn A.10, cf. TBO 8.5]

Of course, we have already addressed the reduction in entropy on clumping and the further reduction in entropy on configuration, through the thought expt. etc., above. In the DNA or protein formation case, more or less the same thing happens. Using Brillouin’s negentropy formulation of information, we may see that the dSconfig is the negative of the information content of the molecule.

A bit of back-tracking will help:

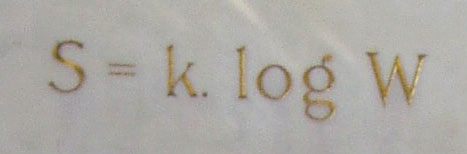

S = k ln W . . . Eqn A.3

{U/D Apr 19: Boltzmann’s tombstone}

Now, W may be seen as a composite of the ways energy as well as mass may be arranged at micro-level. That is, we are marking a distinction between the entropy component due to ways energy [here usually, thermal energy] may be arranged, and that due to the ways mass may be configured across the relevant volume. The configurational component arises from in effect the same considerations as lead us to see a rise in entropy on having a body of gas at first confined to part of an apparatus, then allowing it to freely expand into the full volume:

Free expansion:

|| * * * * * * * * | . . . . . ||

Then:

|| * * * * * * * * ||

Or, as Prof Gary L. Bertrand of the University of Missouri-Rolla summarises:

The freedom within a part of the universe may take two major forms: the freedom of the mass and the freedom of the energy. The amount of freedom is related to the number of different ways the mass or the energy in that part of the universe may be arranged while not gaining or losing any mass or energy. We will concentrate on a specific part of the universe, perhaps within a closed container. If the mass within the container is distributed into a lot of tiny little balls (atoms) flying blindly about, running into each other and anything else (like walls) that may be in their way, there is a huge number of different ways the atoms could be arranged at any one time. Each atom could at different times occupy any place within the container that was not already occupied by another atom, but on average the atoms will be uniformly distributed throughout the container. If we can mathematically estimate the number of different ways the atoms may be arranged, we can quantify the freedom of the mass. If somehow we increase the size of the container, each atom can move around in a greater amount of space, and the number of ways the mass may be arranged will increase . . . .

The thermodynamic term for quantifying freedom is entropy, and it is given the symbol S. Like freedom, the entropy of a system increases with the temperature and with volume . . . the entropy of a system increases as the concentrations of the components decrease. The part of entropy which is determined by energetic freedom is called thermal entropy, and the part that is determined by concentration is called configurational entropy.

In short, degree of confinement in space constrains the degree of disorder/”freedom” that masses may have. And, of course, confinement to particular portions of a linear polymer is no less a case of volumetric confinement (relative to being free to take up any location at random along the chain of monomers) than is confinement of gas molecules to one part of an apparatus. And, degree of such confinement may appropriately be termed, degree of “concentration.”

Diffusion is a similar case: infusing a drop of dye into a glass of water — the particles spread out across the volume and we see an increase of entropy there. (The micro-jets case of course is effectively diffusion in reverse, so we see the reduction in entropy on clumping and then also the further reduction in entropy on configuring to form a flyable microjet.)
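The free expansion and confinement cases can be put in numbers with a short Python sketch. It assumes an ideal gas at constant temperature, for which the standard textbook result is dS = n R ln(V_final/V_initial); that formula, and the function name `free_expansion_entropy_change`, are my additions for illustration and are not taken from the excerpts above.

```python
import math

R_GAS = 8.314462618  # molar gas constant, J/(mol K)

def free_expansion_entropy_change(moles: float, v_initial: float, v_final: float) -> float:
    """Entropy change in J/K for an ideal gas taken from v_initial to v_final
    at constant temperature: dS = n R ln(v_final / v_initial)."""
    if v_initial <= 0 or v_final <= 0:
        raise ValueError("volumes must be positive")
    return moles * R_GAS * math.log(v_final / v_initial)

# One mole allowed to expand into twice the volume, and the reverse (confinement).
ds_expand = free_expansion_entropy_change(1.0, 1.0, 2.0)
ds_confine = free_expansion_entropy_change(1.0, 2.0, 1.0)

print(f"doubling the volume:  dS = {ds_expand:+.3f} J/K")
print(f"halving the volume:   dS = {ds_confine:+.3f} J/K")
```

Expansion raises the configurational entropy and confinement lowers it by the same amount, which is the sense in which clumping is "diffusion in reverse."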

So, we are justified in reworking the Boltzmann expression to separate clumping/thermal and configurational components:

S = k ln (Wclump*Wconfig)

= k ln (Wth*Wc) . . . [Eqn A.11, cf. TBO 8.2a]

or, S = k ln Wth + k ln Wc = Sth + Sc . . . [Eqn A.11.1]
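The additive split in Eqn A.11.1 is just the log rule ln(a*b) = ln a + ln b. A minimal Python check follows; the two statistical weights used are arbitrary placeholder numbers chosen for illustration, not values from TBO.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact SI value)

def entropy(w: float) -> float:
    """Boltzmann entropy S = k ln W for a statistical weight w."""
    return K_B * math.log(w)

# Hypothetical weights, for illustration only.
w_th, w_c = 1e30, 1e12

s_total = entropy(w_th * w_c)          # S = k ln (Wth*Wc)
s_sum = entropy(w_th) + entropy(w_c)   # S = Sth + Sc

print(f"k ln(Wth*Wc) = {s_total:.6e} J/K")
print(f"Sth + Sc     = {s_sum:.6e} J/K")
```

Because the weights multiply while the entropies add, the configurational term can be treated on its own, as the next step does.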

We now focus on the configurational component, the clumping/thermal one being in effect the same for at-random or specifically configured DNA or polypeptide macromolecules of the same length and proportions of the relevant monomers, as it is essentially energy of the bonds in the chain, which are the same in number and type for the two cases. Also, introducing Brillouin’s negentropy formulation of Information, with the configured macromolecule [m] and the random molecule [r], we see the increment in information on going from the random to the functionally specified macromolecule:

IB = -[Scm – Scr] . . . [Eqn A.12, cf. TBO 8.3a]

Or, IB = Scr – Scm = k ln Wcr – k ln Wcm

= k ln (Wcr/Wcm) . . . [Eqn A.12.1]

Where also, for N objects in a linear chain, n1 of one kind, n2 of another, and so on to ni, we may see that the number of ways to arrange them (we need not complicate the matter by talking of Fermi-Dirac statistics, as TBO do!) is:

W = N!/[n1!n2! . . . ni!] . . . [Eqn A.13, cf. TBO 8.7]

So, we may look at a 100-monomer protein, with as an average 5 each of the 20 types of amino acid monomers along the chain, with the aid of log manipulations (take logs to base 10, do the sums in log form, then take back out the logs) to handle numbers over 10^100 on a calculator:

Wcr = 100!/[(5!)^20] = 1.28*10^115
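The count can be re-run directly, both with exact integers and by the log-sum route described above. This is only a sketch; the variable names are mine. Evaluated this way, 100!/[(5!)^20] comes out at roughly 2.4*10^116, a little over one order of magnitude above the 1.28*10^115 figure given, so that figure is best treated as approximate; the scale of the argument is unaffected.

```python
import math

N = 100              # monomers in the chain
counts = [5] * 20    # 5 each of 20 amino acid types

# Exact integer value of N!/(n1! n2! ... ni!).
w_exact = math.factorial(N)
for n in counts:
    w_exact //= math.factorial(n)   # each step divides exactly

# Same quantity by summing logs, as one would on a calculator.
ln10 = math.log(10)
log10_w = (math.lgamma(N + 1) - sum(math.lgamma(n + 1) for n in counts)) / ln10

exponent = math.floor(log10_w)
mantissa = 10 ** (log10_w - exponent)
print(f"W = {mantissa:.2f} * 10^{exponent}")
```

Both routes agree, which confirms that the log manipulation is sound for numbers too large for an ordinary calculator.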

For the sake of initial argument, we consider a unique polymer chain, so that each monomer is confined to a specified location, i.e. Wcm = 1, and Scm = 0. This yields, through basic equilibrium of chemical reaction thermodynamics (follow the onward argument in TBO Ch 8) and the Brillouin information measure which contributes to estimating the relevant Gibbs free energies (and with some empirical results on energies of formation etc), an expected protein concentration of ~10^-338 molar, i.e. far, far less than one molecule per planet. (There may be about 10^80 atoms in the observed universe, with Carbon a rather small fraction thereof; and 1 mole of atoms is ~ 6.02*10^23 atoms.) Recall, known life forms routinely use dozens to hundreds of such information-rich macromolecules, in close proximity in an integrated self-replicating information system on the scale of about 10^-6 m.
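With Wcm = 1, Eqn A.12.1 reduces to IB = k ln Wcr. The Python sketch below evaluates that for the 100-monomer case, using the directly computed multiplicity rather than any rounded figure. Expressing the same quantity in bits (dividing ln W by ln 2) is my own illustrative addition, as is the function name `brillouin_information`; the onward free energy and concentration steps from TBO Ch 8 are not reproduced here.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact SI value)

def brillouin_information(w_random: int, w_configured: int = 1) -> tuple[float, float]:
    """Return I_B = k ln(Wcr/Wcm) in J/K, and the same log ratio in bits."""
    ln_ratio = math.log(w_random) - math.log(w_configured)
    return K_B * ln_ratio, ln_ratio / math.log(2)

# Multiplicity of a random 100-mer with 5 each of 20 monomer types.
w_cr = math.factorial(100) // math.factorial(5) ** 20

i_b_joule_per_kelvin, i_b_bits = brillouin_information(w_cr)
print(f"I_B = {i_b_joule_per_kelvin:.3e} J/K, or about {i_b_bits:.1f} bits")
```

The entropy figure is tiny in J/K terms, yet it corresponds to several hundred bits of configurational specification for a single short chain.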

Of course, if one comes at the point from any of these directions, the objections and selectively hyperskeptical demands will be rolled out to fire off salvo after salvo of objections. Selective, as the blind chance needle in haystack models that cannot pass vera causa as a test, simply are not subjected to such scrutiny and scathing dismissiveness by the same objectors. When seriously pressed, the most they are usually prepared to concede, is that perhaps we don’t yet know enough, but rest assured “Science” will triumph so don’t you dare put up “god of the gaps” notions.

To see what I mean, notice [HT: BA 77 et al] the bottomline of a recent article on OOL conundrums:

. . . So the debate rages on. Over the past few decades scientists have edged closer to understanding the origin of life, but there is still some way to go, which is probably why when Robyn Williams asked Lane, ‘What was there in the beginning, do you think?’, the scientist replied wryly: ‘Ah, “think”. Yes, we have no idea, is the bottom line.’

But in fact, adequate cause for FSCO/I is not hard to find: intelligently directed configuration meeting requisites a – e just above. Design.

There are trillions of cases in point.

And that is why I demand that — whatever flaws, elaborations, adjustments etc we may find or want to make — we need to listen carefully and fairly to Granville Sewell’s core point:

. . . The second law is all about probability, it uses probability at the microscopic level to predict macroscopic change: the reason carbon distributes itself more and more uniformly in an insulated solid is, that is what the laws of probability predict when diffusion alone is operative. The reason natural forces may turn a spaceship, or a TV set, or a computer into a pile of rubble but not vice-versa is also probability: of all the possible arrangements atoms could take, only a very small percentage could fly to the moon and back, or receive pictures and sound from the other side of the Earth, or add, subtract, multiply and divide real numbers with high accuracy. The second law of thermodynamics is the reason that computers will degenerate into scrap metal over time, and, in the absence of intelligence, the reverse process will not occur; and it is also the reason that animals, when they die, decay into simple organic and inorganic compounds, and, in the absence of intelligence, the reverse process will not occur.

The discovery that life on Earth developed through evolutionary “steps,” coupled with the observation that mutations and natural selection — like other natural forces — can cause (minor) change, is widely accepted in the scientific world as proof that natural selection — alone among all natural forces — can create order out of disorder, and even design human brains, with human consciousness. Only the layman seems to see the problem with this logic. In a recent Mathematical Intelligencer article [“A Mathematician’s View of Evolution,” The Mathematical Intelligencer 22, number 4, 5-7, 2000] I asserted that the idea that the four fundamental forces of physics alone could rearrange the fundamental particles of Nature into spaceships, nuclear power plants, and computers, connected to laser printers, CRTs, keyboards and the Internet, appears to violate the second law of thermodynamics in a spectacular way.1 . . . .

What happens in a[n isolated] system depends on the initial conditions; what happens in an open system depends on the boundary conditions as well. As I wrote in “Can ANYTHING Happen in an Open System?”, “order can increase in an open system, not because the laws of probability are suspended when the door is open, but simply because order may walk in through the door…. If we found evidence that DNA, auto parts, computer chips, and books entered through the Earth’s atmosphere at some time in the past, then perhaps the appearance of humans, cars, computers, and encyclopedias on a previously barren planet could be explained without postulating a violation of the second law here . . . But if all we see entering is radiation and meteorite fragments, it seems clear that what is entering through the boundary cannot explain the increase in order observed here.” Evolution is a movie running backward, that is what makes it special.

THE EVOLUTIONIST, therefore, cannot avoid the question of probability by saying that anything can happen in an open system, he is finally forced to argue that it only seems extremely improbable, but really isn’t, that atoms would rearrange themselves into spaceships and computers and TV sets . . . [NB: Emphases added. I have also substituted in isolated system terminology as GS uses a different terminology.]

Surely, there is room to listen, and to address concerns on the merits. >>

_______________

I think we need to appreciate that the design inference applies to all three of thermodynamics, information and probability, and that we will find determined objectors who will attack all three in a selectively hyperskeptical manner. We therefore need to give adequate reasons for what we hold, for the reasonable onlooker. END

PS: As it seems unfortunately necessary, I here excerpt the Wikipedia “simple” summary derivation of 2LOT from statistical mechanics considerations as at April 13, 2015 . . . a case of technical admission against general interest, giving the case where distributions are not necessarily equiprobable. This shows the basis of the point that for over 100 years now, 2LOT has been inextricably rooted in statistical-molecular considerations (where, it is those considerations that lead onwards to the issue that FSCO/I, which naturally comes in deeply isolated islands of function in large config spaces, will be maximally implausible to discover through blind, needle in haystack search on chance and mechanical necessity):

With this in hand, I again cite a favourite basic College level Physics text, as summarised in my online note App I:

Yavorski and Pinski, in the textbook Physics, Vol I [MIR, USSR, 1974, pp. 279 ff.], summarise the key implication of the macro-state and micro-state view well: as we consider a simple model of diffusion, let us think of ten white and ten black balls in two rows in a container. There is of course but one way in which there are ten whites in the top row; the balls of any one colour being for our purposes identical. But on shuffling, there are 63,504 ways to arrange five each of black and white balls in the two rows, and 6-4 distributions may occur in two ways, each with 44,100 alternatives. So, if we for the moment see the set of balls as circulating among the various different possible arrangements at random, and spending about the same time in each possible state on average, the time the system spends in any given state will be proportionate to the relative number of ways that state may be achieved. Immediately, we see that the system will gravitate towards the cluster of more evenly distributed states. In short, we have just seen that there is a natural trend of change at random, towards the more thermodynamically probable macrostates, i.e. the ones with higher statistical weights. So “[b]y comparing the [thermodynamic] probabilities of two states of a thermodynamic system, we can establish at once the direction of the process that is [spontaneously] feasible in the given system. It will correspond to a transition from a less probable to a more probable state.” [p. 284.] This is in effect the statistical form of the 2nd law of thermodynamics. Thus, too, the behaviour of the Clausius isolated system above [with interacting sub-systems A and B that transfer d’Q to B due to temp. difference] is readily understood: importing d’Q of random molecular energy so far increases the number of ways energy can be distributed at micro-scale in B, that the resulting rise in B’s entropy swamps the fall in A’s entropy.
Moreover, given that [FSCO/I]-rich micro-arrangements are relatively rare in the set of possible arrangements, we can also see why it is hard to account for the origin of such states by spontaneous processes in the scope of the observable universe. (Of course, since it is as a rule very inconvenient to work in terms of statistical weights of macrostates [i.e W], we instead move to entropy, through s = k ln W. Part of how this is done can be seen by imagining a system in which there are W ways accessible, and imagining a partition into parts 1 and 2. W = W1*W2, as for each arrangement in 1 all accessible arrangements in 2 are possible and vice versa, but it is far more convenient to have an additive measure, i.e we need to go to logs. The constant of proportionality, k, is the famous Boltzmann constant and is in effect the universal gas constant, R, on a per molecule basis, i.e we divide R by the Avogadro Number, NA, to get: k = R/NA. The two approaches to entropy, by Clausius, and Boltzmann, of course, correspond. In real-world systems of any significant scale, the relative statistical weights are usually so disproportionate, that the classical observation that entropy naturally tends to increase, is readily apparent.)
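The statistical weights in the Yavorski and Pinski ball model can be reproduced with a few lines of Python. This is a minimal sketch of the counting only; the function name `ways` and the layout of the output are mine.

```python
import math

ROW = 10  # ten places per row; ten white and ten black balls in all

def ways(whites_in_top: int) -> int:
    """Number of arrangements with the given count of white balls in the top row."""
    # Choose which top-row places hold white balls, then which bottom-row
    # places hold the remaining white balls; black balls fill the rest.
    return math.comb(ROW, whites_in_top) * math.comb(ROW, ROW - whites_in_top)

weights = {k: ways(k) for k in range(ROW + 1)}
total = sum(weights.values())

for k, w in weights.items():
    print(f"{k:2d} white on top: {w:6d} ways  ({w / total:.4%} of all arrangements)")
```

The fully sorted state has a single arrangement, while the 5-5 and 6-4 splits carry the weights cited in the text, so a system wandering at random among arrangements spends most of its time near the even split.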

This underlying context is easily understood and leads logically to 2LOT as an overwhelmingly likely consequence. Beyond a reasonable scale, fluctuations beyond a very narrow range are statistical miracles, that we have no right to expect to observe.

And, that then refocusses the issue of connected, concurrent energy flows to provide compensation for local entropy reductions.