Yesterday, I crossed a Rubicon, for cause: I saw that even the most genteel of the current wave of critics refuse to stop enabling denial, and refuse to correct and deal with slander.

It is time to face squarely what we are dealing with: ideologues on the attack. (They now come in a wave: TSZ denizens back here at UD, hoping to swarm down, twist into pretzels, ridicule and dismiss the basic case for design.)

Sal C has been the most prolific recent contributor at UD, and a pivotal case he has put forth is the discovery of a box of five hundred coins, all heads.

What is the best explanation?

The talking-point gymnastics that have been going on for a while now to avoid the obvious conclusion have been sadly instructive on the mindset of the committed ideological materialist and that of his or her fellow travellers.

(For those who came in 30 years or so late, “fellow travellers” is a term of art that described those who made common cause with the outright Marxists, on one argument or motive or another. I will not cite Lenin’s less polite term for such. The term “fellow traveller” obviously has broader applicability and relevance today.)

I have just intervened in a thread that further follows up on the coin-tossing exercise, and wish to headline the comment:

_____________

>> I observe:

NR, at 5: >> Biological organisms do not look designed >>

The above clip and the wider thread provide a good example of the sort of polarisation and refusal to examine matters squarely on the merits that too often characterises objectors to design theory.

In the case of coin tossing, all heads, all tails, alternating H and T, etc. are all obvious patterns that are simply describable (i.e. without in effect quoting the strings). Such patterns pick out a set of special zones, Z = {z1, z2, z3, . . . zn}, each consisting of one or more outcomes, in the space of possibilities, W.
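One rough, commonly used proxy for "simply describable" is compressibility: a string that a short rule can generate also compresses well. The sketch below uses Python's standard `zlib` module as that stand-in. This is a swapped-in illustration, not a measure the argument above relies on, and the seeded "typical" string is simulated rather than drawn from real tosses.

```python
import random
import zlib

def compressed_size(tosses: str) -> int:
    """Bytes zlib (level 9) needs to store a string of 'H'/'T' characters."""
    return len(zlib.compress(tosses.encode("ascii"), 9))

N = 500
all_heads = "H" * N
alternating = "HT" * (N // 2)
rng = random.Random(42)  # fixed seed so the run is repeatable
typical = "".join(rng.choice("HT") for _ in range(N))

for name, s in [("all H", all_heads), ("alternating", alternating), ("typical", typical)]:
    print(f"{name:12s} {compressed_size(s):4d} bytes")
```

The simple patterns shrink to a handful of bytes, while the typical mixed string stays far larger, which is the intuition behind "describable without quoting the string".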

Thus is effected a partition of the configuration space.

It is quite obvious that |W| >> |Z|, overwhelmingly so; let us symbolise this |W| >> . . . > |Z|.
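To put a number on |W| >> |Z| for 500 coins, the sketch below counts W exactly and takes, purely as an assumed illustrative choice, four special zones of one outcome each: all H, all T, and the two alternations.

```python
N = 500
W_size = 2 ** N  # |W|: every H/T string of 500 tosses

# Illustrative special zones (an assumed choice): all H, all T, and the two alternations.
Z = {"H" * N, "T" * N, "HT" * (N // 2), "TH" * (N // 2)}
Z_size = len(Z)

print(f"|W| = 2**{N} ~ {W_size:.3e}")
print(f"|Z| = {Z_size}")
print(f"|Z| / |W| ~ {Z_size / W_size:.3e}")
```

Adding many more describable patterns to Z barely moves the ratio, since |W| is on the order of 10^150.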

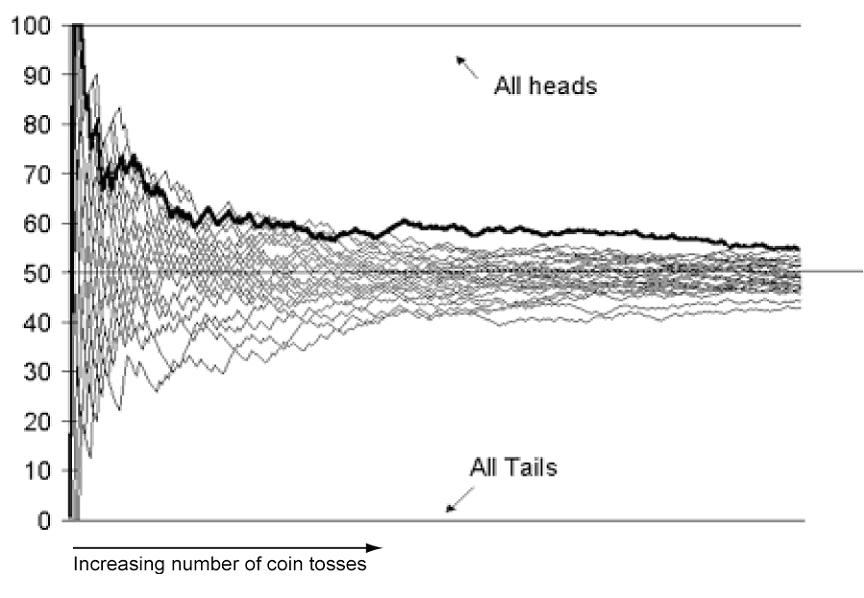

Now, we put forth the Bernoulli-Laplace indifference assumption that is relevant here through the stipulation of a fair coin. (We can examine the symmetry etc. of a coin, or do frequency tests, to see that such a coin will for practical purposes be close enough to fair. It is not hard to see that unless a coin is outrageously biased, the assumption is reasonable. [BTW, this implies that if a fair coin is tossed 500 times, it is logically possible that it will come up all heads. But that is not the pivotal point.])
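A frequency test of the kind mentioned can be as simple as asking how many standard deviations the head count lies from n/2, assuming p = 0.5. In the sketch below, a seeded pseudo-random stream stands in for recorded tosses of a physical coin; with a real coin you would feed in the recorded H/T sequence instead. The function name is hypothetical.

```python
import math
import random

def heads_z_score(tosses: str) -> float:
    """Standard deviations between the head count and n/2, assuming a fair coin (p = 0.5)."""
    n = len(tosses)
    heads = tosses.count("H")
    return (heads - n / 2) / math.sqrt(n * 0.25)

rng = random.Random(7)
simulated = "".join(rng.choice("HT") for _ in range(10_000))
print(round(heads_z_score(simulated), 2))   # for a fair coin, usually within +/-3
print(round(heads_z_score("H" * 500), 2))   # sqrt(500), about 22.36: wildly off
```

A count only checks overall balance; order-sensitive checks (runs, serial correlation) would be the natural next step.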

When we carry out a tossing exercise, we are in fact taking a sample of W, and which partition a given outcome, si in S [the set of possible samples], comes from will be dominated by relative statistical weight. S is of course such that |S| >> . . . > |W|. That is, there are far more ways to sample from W in a string of actual samples s1, s2, . . . sn than there are configs in W.

(This is where the Marks-Dembski search for a search challenge, S4S, comes in. Sampling the samplings can be a bigger task than sampling the set of possibilities.)
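One simple way to see why sampling the samplings is bigger than sampling W is a toy count for a handful of coins. The sketch below gives two readings of S: ordered runs of samples drawn from W, and subsets of W that a search might cover. This is an illustrative simplification under assumed definitions, not the Marks-Dembski formalism itself, and the function name is hypothetical.

```python
def space_sizes(n_coins: int, run_length: int) -> tuple[int, int, int]:
    """Toy sizes for n_coins tosses.

    w:       configurations of n_coins H/T outcomes, i.e. |W|.
    runs:    ordered sequences of run_length samples from W (repeats allowed).
    subsets: distinct subsets of W, i.e. sets of configs a search might cover.
    """
    w = 2 ** n_coins
    runs = w ** run_length
    subsets = 2 ** w
    return w, runs, subsets

w, runs, subsets = space_sizes(n_coins=4, run_length=3)
print(w, runs, subsets)  # 16 4096 65536
```

Even with 4 coins, both readings of S dwarf W; at 500 coins, 2**|W| is beyond any physical enumeration.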

So we now have a needle-in-a-haystack problem: on the gamut of the solar system [our practical universe for chemical-level atomic interactions], the number of samples that could actually be taken is overwhelmingly smaller than |S|, and indeed than |W|.

Under these circumstances, we take a sample si, 500 tosses.

The balance of the partitions is such that, by all but certainty, we will find a cluster of H & T in no particular order, close to 250 H: 250 T. The farther one gets from that balance, the less likely the outcome, because the binomial distribution of fair coin tosses is sharply peaked.
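The sharp peak can be checked exactly with binomial coefficients from Python's standard library. The sketch sums C(500, h) over a band of head counts around 250 and divides by 2^500; the band widths are arbitrary illustrative choices.

```python
from math import comb

N = 500

def prob_heads_within(k: int, n: int = N) -> float:
    """Exact P(|heads - n/2| <= k) for n tosses of a fair coin (n assumed even)."""
    mid = n // 2
    lo, hi = max(0, mid - k), min(n, mid + k)
    favourable = sum(comb(n, h) for h in range(lo, hi + 1))
    return favourable / 2 ** n

for k in (10, 20, 30, 50):
    print(f"P(250 +/- {k} heads) = {prob_heads_within(k):.4f}")

print(f"P(exactly 250 H) = {comb(N, 250) / 2 ** N:.4f}")  # the single most likely count, yet only ~3.6%
print(f"P(all 500 H)     = {1 / 2 ** N:.3e}")             # ~3.055e-151
```

The standard deviation of the head count is sqrt(500 x 0.25), about 11.2, so a band of +/-30 heads already holds over 99% of the probability.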

Under these circumstances, we have no good reason to expect to see a special pattern like 500 H, etc. Indeed, such a unique and highly noticeable config will predictably — with rather high reliability — not be observed once on the gamut of the observed solar system, even were the solar system dedicated to doing nothing but tossing coins for its lifespan.
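A back-of-envelope version of the solar-system bound multiplies out an assumed sampling budget and compares it with 2^500. The figures below (about 10^57 atoms, about 10^17 seconds, and 10^14 complete 500-toss runs per atom per second) are assumed round numbers for illustration, not values stated in this post.

```python
# ASSUMED round figures (not stated in the post above): about 1e57 atoms in the
# solar system, about 1e17 seconds of available time, and each atom completing a
# full 500-toss run 1e14 times per second, a deliberately generous rate.
ATOMS = 10 ** 57
SECONDS = 10 ** 17
RUNS_PER_ATOM_PER_SECOND = 10 ** 14
N = 500

total_runs = ATOMS * SECONDS * RUNS_PER_ATOM_PER_SECOND  # 10**88 runs in all
expected_all_heads = total_runs / 2 ** N                 # expected number of all-H runs

print(f"500-toss runs available: {total_runs:.1e}")
print(f"expected all-H runs:     {expected_all_heads:.1e}")
```

The expected count, about 3e-63, is also an upper bound on the probability of seeing even one all-H run, and it equals the largest fraction of W such a budget could ever touch.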

That is, chance as manifest in coin tossing is not a plausible account of 500 H, or of its equivalent, a line of 500 coins in a tray, all H.

However, if we were now to come upon a tray with 500 coins, all H’s, we can very plausibly account for it on a known, empirically grounded causal pattern: intelligent designers exist and have been known to set highly contingent systems to special values suited to their purposes.

Indeed, such are the only empirically warranted sources.

And we ourselves, for instance, are just such intelligences.

So, the reasonable person coming on a tray of 500 coins in a row, all H, will infer that, per best empirically warranted explanation, design is the credible cause. (And that person will infer the same if a coin-tossing exercise presented as fair does the equivalent. We can reliably know that design is involved without knowing the mechanism.)

Nor does this change if the discoverer did not see the event happening. That is, from a highly contingent outcome that does not fit chance very well but does fit design well, one may properly infer design as explanation.

Indeed, a specific, recognisable pattern that is utterly unlikely by chance, but by no means so unlikely by design as to be dismissed, is a plausible sign of design as the best causal explanation.

The same would obtain if instead of 500 H etc, we discovered that the coins were in a pattern that spelled out, using ASCII code, remarks in English or object code for a computer, etc. In this case, the pattern is recognised as a functionally specific, complex one.
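To make the ASCII case concrete, the sketch below writes text into a row of coins and reads it back. The conventions are assumptions, since the post does not fix them: H stands for a 1 bit, T for a 0 bit, and each character uses 7-bit ASCII. The helper names are hypothetical.

```python
def text_to_coins(text: str, bits: int = 7) -> str:
    """Encode ASCII text as a row of coins: H for a 1 bit, T for a 0 bit."""
    coins = []
    for ch in text:
        code = ord(ch)
        if code >= 2 ** bits:
            raise ValueError(f"{ch!r} does not fit in {bits}-bit ASCII")
        coins.append(format(code, f"0{bits}b").replace("1", "H").replace("0", "T"))
    return "".join(coins)

def coins_to_text(coins: str, bits: int = 7) -> str:
    """Decode a row of coins back to text, ignoring any leftover coins at the end."""
    usable = len(coins) - len(coins) % bits
    chars = []
    for i in range(0, usable, bits):
        chunk = coins[i:i + bits].replace("H", "1").replace("T", "0")
        chars.append(chr(int(chunk, 2)))
    return "".join(chars)

row = text_to_coins("Hello")
print(len(row))            # 35
print(coins_to_text(row))  # Hello
print(500 // 7)            # 71: characters a 500-coin tray can hold at 7 bits each
```

A 500-coin tray therefore has room for a short English sentence of up to 71 characters under these conventions.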

Why then, do we see such violent opposition to inferring design on FSCO/I etc in non-toy cases?

Obviously, because objectors are making or are implying the a priori stipulation (often unacknowledged, sometimes unrecognised) that it is practically certain that no designer is POSSIBLE at the point in question.

For under such a circumstance, chance is the only reasonable candidate left to account for high contingency. (Mechanical necessity does not lead to high contingency.)

So, we see why there is a strong appearance of design, and we see why there is reluctance, or even violently hostile refusal, to accept that this appearance can indeed be good reason to conclude, on the inductively reliable sign FSCO/I and related analysis, that design is the best causal explanation.

In short, we are back to the problem of materialist ideology dressed up in a lab coat.

I think the time has more than come to expose that, and to highlight the problems with a priori materialism as a worldview, whether it is dressed up in a lab coat or not.

We can start with Haldane’s challenge:

“It seems to me immensely unlikely that mind is a mere by-product of matter. For if my mental processes are determined wholly by the motions of atoms in my brain I have no reason to suppose that my beliefs are true. They may be sound chemically, but that does not make them sound logically. And hence I have no reason for supposing my brain to be composed of atoms. In order to escape from this necessity of sawing away the branch on which I am sitting, so to speak, I am compelled to believe that mind is not wholly conditioned by matter.” [[“When I am dead,” in Possible Worlds: And Other Essays [1927], Chatto and Windus: London, 1932, reprint, p.209.]

This and other related challenges (cf here on in context) render evolutionary materialism so implausible as a worldview that we may safely dismiss it, never mind how it loves to dress up in a lab coat and shake the coat at us as if to frighten us.

So, the reasonable person, in the face of such evidence, will accept the credibility of the sign — FSCO/I — and the possibility of design that such a strong and empirically grounded appearance points to.

But, notoriously, ideologues are not reasonable persons.

For further illustration, observe above the attempt to divert the discussion into definitions of what an intelligent and especially a conscious intelligent agent is.

Spoken, of course, by a conscious intelligent agent who refuses to accept that the billions of us on the ground are examples of what intelligent designers are. Nope: until you can give a precising definition acceptable to him [i.e., inevitably, one consistent with evolutionary materialism, which calls into question or even denies the possibility of such agency, leading to self-referential absurdity . . . ], he is unwilling to accept the testimony of his own experience and observation.

I call that a breach of common sense and a self-referential incoherence.>>

____________

The point is, the credibility of materialist ideologues is fatally undermined by their closed-minded demand to conform to unreasonable a prioris. Lewontin’s notorious cat-out-of-the-bag statement in NYRB, January 1997 is emblematic:

. . . . the problem is to get [the public] to reject irrational and supernatural explanations of the world, the demons that exist only in their imaginations, and to accept a social and intellectual apparatus, Science, as the only begetter of truth [[–> NB: this is a knowledge claim about knowledge and its possible sources, i.e. it is a claim in philosophy not science; it is thus self-refuting]. . . .

[T]he practices of science provide the surest method of putting us in contact with physical reality, and that, in contrast, the demon-haunted world rests on a set of beliefs and behaviors that fail every reasonable test [[–> i.e. an assertion that tellingly reveals a hostile mindset, not a warranted claim] . . . .

It is not that the methods and institutions of science somehow compel us to accept a material explanation of the phenomenal world, but, on the contrary, that we are forced by our a priori adherence to material causes [[–> another major begging of the question . . . ] to create an apparatus of investigation and a set of concepts that produce material explanations, no matter how counter-intuitive, no matter how mystifying to the uninitiated. Moreover, that materialism is absolute [[–> i.e. here we see the fallacious, indoctrinated, ideological, closed mind . . . ], for we cannot allow a Divine Foot in the door. [“Billions and Billions of Demons,” NYRB, January 9, 1997. Bold emphasis and notes added. Of course, it is a commonplace materialist talking point to dismiss such a cite as “quote mining.” If you are tempted to believe that convenient dismissal, kindly cf. the linked source, where you will see the more extensive cite and notes.]

No wonder, in November that year, ID thinker Philip Johnson rebutted:

For scientific materialists the materialism comes first; the science comes thereafter. [[Emphasis original] We might more accurately term them “materialists employing science.” And if materialism is true, then some materialistic theory of evolution has to be true simply as a matter of logical deduction, regardless of the evidence. That theory will necessarily be at least roughly like neo-Darwinism, in that it will have to involve some combination of random changes and law-like processes capable of producing complicated organisms that (in Dawkins’ words) “give the appearance of having been designed for a purpose.”. . . . The debate about creation and evolution is not deadlocked . . . Biblical literalism is not the issue. The issue is whether materialism and rationality are the same thing. Darwinism is based on an a priori commitment to materialism, not on a philosophically neutral assessment of the evidence. Separate the philosophy from the science, and the proud tower collapses. [[Emphasis added.] [[The Unraveling of Scientific Materialism, First Things, 77 (Nov. 1997), pp. 22 – 25.]

Too often, such ideological closed-mindedness and question-begging are compounded by a propensity to be unreasonable, ruthless and even nihilistic, or at least to enable such ruthlessness by going along with it.

Which brings us back to this point: when (not if, for such an utterly incoherent system is simply not sustainable, and is so damaging to the moral stability of a society that it will inevitably self-destruct [cf. Plato’s warning here, given 2,350 years ago]) such materialism lies utterly defeated on the ash-heap of history, there will come a day of reckoning for the enablers, who will need to take a tour of their shame and explain, or at least ponder, why they went along with the inexcusable.

Hence the ugly but important significance of the following picture in which, shortly after Buchenwald’s liberation, American troops forced citizens of nearby Weimar to tour the camp, so that the Germans who had gone along as enablers with what was done in their nation’s name, by utterly nihilistic men they had allowed to rule over them, could never thereafter deny the truth of their shame:

It is time to heed Francis Schaeffer in his turn-of-the-1980s series, Whatever Happened to the Human Race?, an exposé of the implications and agendas of evolutionary materialist secular humanism that was ever so much derided and dismissed at the time, but has proved across time to be dead on target, even as we now have reached the threshold of post-birth abortion and other nihilistic horrors:

[youtube 8uoFkVroRyY]

And, likewise, we need to heed a preview of the tour of shame to come, Expelled by Ben Stein:

[youtube V5EPymcWp-g]

Finally, we need to pause and listen to Weikart’s warning from history in this lecture:

[youtube w_5EwYpLD6A]

Yes, I know, these things are shocking, painful, even offensive to the genteel, who are too often found in enabling denial of the patent facts and are prone to blame the messenger instead of dealing with the problem.

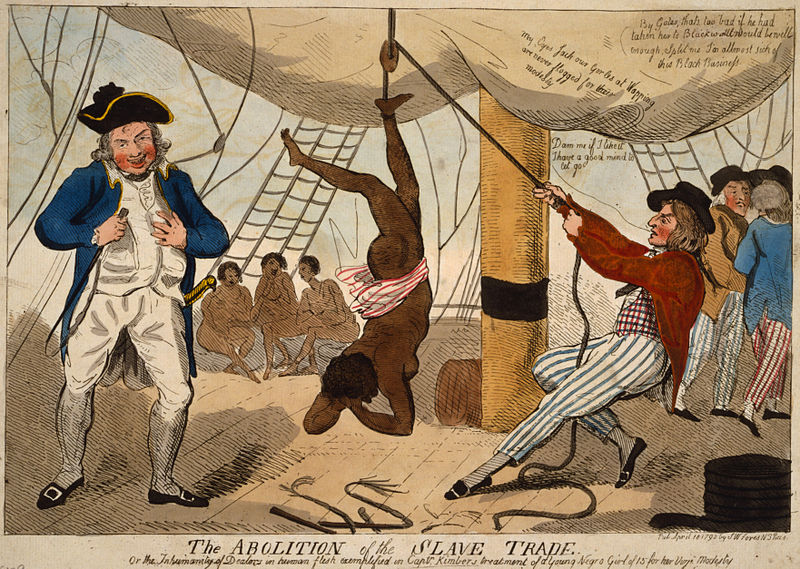

However, as a descendant of slaves who is concerned about our civilisation’s trends in our time, I must speak, just as the horrors of the slave ship and the plantation had to be painfully, even shockingly, exposed two centuries and more ago.

I must ask you what genteel people, sipping their slave-sugared tea 200 years ago, thought of images like this:

Sometimes, the key issues at stake in a given day are not nice and pretty, and some ugly things need to be faced, if horrors of the magnitude of the century just past are to be averted in this new Millennium. END