[ID Found’ns Series, cf. also Bartlett here]

Irreducible complexity is probably the most vehemently contested foundation stone of Intelligent Design theory. So, let us first define it by slightly modifying Dr Michael Behe’s original statement in his 1996 book, Darwin’s Black Box [DBB]:

What type of biological system could not be formed by “numerous successive, slight modifications?” Well, for starters, a system that is irreducibly complex. By irreducibly complex I mean a single system composed of several well-matched interacting parts that contribute to the basic function, wherein the removal of any one of the [core] parts causes the system to effectively cease functioning. [DBB, p. 39, emphases and parenthesis added. Cf. expository remarks in comment 15 below.]

Behe proposed this definition in response to the following challenge by Darwin in Origin of Species:

If it could be demonstrated that any complex organ existed, which could not possibly have been formed by numerous, successive, slight modifications, my theory would absolutely break down. But I can find out no such case . . . . We should be extremely cautious in concluding that an organ could not have been formed by transitional gradations of some kind. [Origin, 6th edn, 1872, Ch VI: “Difficulties of the Theory.”]

In fact, there is a bit of question-begging by deck-stacking in Darwin’s statement: we are dealing with empirical matters, and one does not have a right to impose in effect outright logical/physical impossibility — “could not possibly have been formed” — as a criterion of test.

If one is making a positive scientific assertion that complex organs exist and were credibly formed by gradualistic, undirected change through chance mutations and differential reproductive success through natural selection and similar mechanisms, one has a duty to provide decisive positive evidence of that capacity. Behe’s onward claim is then quite relevant: for dozens of key cases, no credible macro-evolutionary pathway (especially no detailed biochemical and genetic pathway) has been empirically demonstrated and published in the relevant professional literature. That was true in 1996, and despite several attempts to dismiss key cases such as the bacterial flagellum [which is illustrated at the top of this blog page] or the relevant part of the blood clotting cascade [hint: picking the part of the cascade before the “fork,” a part that Behe did not address as the IC core, is a strawman fallacy], it arguably remains true today.

Now, we can immediately lay the issue of the fact of irreducible complexity as a real-world phenomenon to rest.

For, a situation where core, well-matched, and co-ordinated parts of a system are each necessary for and jointly sufficient to effect the relevant function is a commonplace fact of life, one that is familiar from all manner of engineered systems, such as the classic double-acting steam engine:

Fig. A: A double-acting steam engine (Courtesy Wikipedia)

Such a steam engine is made up of rather commonly available components: cylinders, tubes, rods, pipes, crankshafts, disks, fasteners, pins, wheels, drive-belts, valves etc. But, because a core set of well-matched parts has to be carefully organised according to a complex “wiring diagram,” the specific function of the double-acting steam engine is not explained by the mere existence of the parts.

Nor can simply choosing and re-arranging similar parts from, say, a bicycle or an old-fashioned car create a viable steam engine. Specific mutually matching parts [usually matched to thousandths of an inch], in a very specific pattern of organisation, made of specific materials, have to be in place, and they have to be integrated into the right context [e.g. a boiler or other source providing steam at the right temperature and pressure], for it to work.

If one core part (e.g. piston, cylinder, valve, crankshaft) breaks down or is removed, core function obviously ceases.

Irreducible complexity is not only a concept but a fact.

But, why is it said that irreducible complexity is a barrier to Darwinian-style [macro-]evolution and a credible sign of design in biological systems?

First, once we are past a reasonable threshold of complexity, irreducible complexity [IC] is a form of functionally specific complex organisation and implied information [FSCO/I]. That is, it is a case of the specified complexity on which the design inference rests, and which is already a strong sign of design. (NB: Cf. the first two articles in the ID foundations series, here and here.)

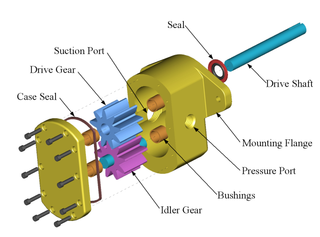

Fig. B, on the exploded, and nodes and arcs “wiring diagram” views of how a complex, functionally specific entity is assembled, will help us see this:

Fig. B (i): An exploded view of a gear pump. (Courtesy, Wikipedia)

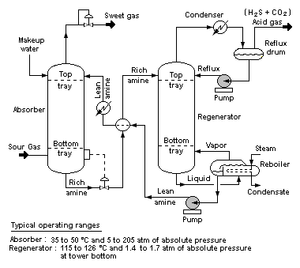

Fig. B(ii): A Piping and Instrumentation Diagram, illustrating how nodes, interfaces and arcs are “wired” together in a functional mesh network (Source: Wikimedia, HT: Citizendia; also cf. here, on polygon mesh drawings.)

We may easily see from Fig. B (i) and (ii) how specific components, which may themselves be complex, sit at nodes in a network, and are wired together in a mesh that specifies interfaces and linkages. From this, a set of parts and wiring instructions can be created, and reduced to a chain of contextual yes/no decisions. On the simple functionally specific bits metric, once that chain exceeds 1,000 decisions, we have an object so complex that it is not credible that the whole universe, serving as a search engine, could produce it spontaneously without intelligent guidance. And so, once several well-matched parts must be arranged in a specific “wiring diagram” pattern to achieve a function, it is almost trivial to run past 125 bytes [= 1,000 bits] of implied function-specifying information.
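The arithmetic behind the 1,000-bit threshold can be checked directly. The sketch below is only an illustration: the helper names `bits_to_bytes` and `config_space_size` are hypothetical, and it assumes, as the simple metric above does, that each yes/no decision contributes exactly one bit.

```python
def bits_to_bytes(bits: int) -> float:
    """Convert a count of yes/no decisions (bits) to bytes, at 8 bits per byte."""
    return bits / 8


def config_space_size(bits: int) -> int:
    """Number of distinct yes/no chains of the given length: 2 ** bits."""
    return 2 ** bits


threshold_bits = 1000  # the threshold used in the text

# 1,000 bits expressed in bytes
print(bits_to_bytes(threshold_bits))  # 125.0

# How many decimal digits it takes to write out 2 ** 1000
print(len(str(config_space_size(threshold_bits))))  # 302
```

So a chain of 1,000 yes/no decisions corresponds to 125 bytes, and to a space of about 1.07 × 10^301 possible configurations.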

Of the significance of such a view, J. S. Wicken observed in 1979:

Indeed, the implication of that complex, information-rich functionally specific organisation is the source of Sir Fred Hoyle’s metaphor of comparing the idea of spontaneous assembly of such an entity to a tornado in a junkyard assembling a flyable 747 out of parts that are just lying around.

Similarly, if one were to do a Humpty Dumpty experiment (setting up a cluster of vials of sterile saline solution with nutrients, putting a bacterium in each, then pricking it so that the contents of the cell leak out), it is not expected that in any case the parts would spontaneously re-assemble to yield a viable bacterial colony.

But also, IC is a barrier to the usual suggested counter-argument, co-option or exaptation based on a conveniently available cluster of existing or duplicated parts. For instance, Angus Menuge has noted that:

For a working [bacterial] flagellum to be built by exaptation, the five following conditions would all have to be met:

C1: Availability. Among the parts available for recruitment to form the flagellum, there would need to be ones capable of performing the highly specialized tasks of paddle, rotor, and motor, even though all of these items serve some other function or no function.

C2: Synchronization. The availability of these parts would have to be synchronized so that at some point, either individually or in combination, they are all available at the same time.

C3: Localization. The selected parts must all be made available at the same ‘construction site,’ perhaps not simultaneously but certainly at the time they are needed.

C4: Coordination. The parts must be coordinated in just the right way: even if all of the parts of a flagellum are available at the right time, it is clear that the majority of ways of assembling them will be non-functional or irrelevant.

C5: Interface compatibility. The parts must be mutually compatible, that is, ‘well-matched’ and capable of properly ‘interacting’: even if a paddle, rotor, and motor are put together in the right order, they also need to interface correctly.

(Agents Under Fire: Materialism and the Rationality of Science, pp. 104-105 (Rowman & Littlefield, 2004). HT: ENV.)

In short, the co-ordinated and functional organisation of a complex system is itself a factor that needs credible explanation.

However, as Luskin notes for the iconic flagellum, “Those who purport to explain flagellar evolution almost always only address C1 and ignore C2-C5.” [ENV.]

And yet, unless all five factors are properly addressed, the matter has plainly not been adequately explained. Worse, the classic attempted rebuttal, the Type III Secretion System [T3SS], is not only based on a subset of the genes for the flagellum [as part of its self-assembly, the flagellum must push components out of the cell], but functionally it works to help certain bacteria prey on eukaryotic organisms. Thus, if anything, the T3SS is not only a component part that has to be integrated under C1-C5, but is also credibly derivative of the flagellum and an adaptation subsequent to the origin of eukaryotes. Also, it is just one of several components, and is arguably itself an IC system. (Cf. Dembski here.)

Going beyond all of this, in the well-known 2005 Dover trial, ID lab researcher Scott Minnich testified to a direct confirmation of the IC status of the flagellum. As ENV reports:

Scott Minnich has properly tested for irreducible complexity through genetic knock-out experiments he performed in his own laboratory at the University of Idaho. He presented this evidence during the Dover trial, which showed that the bacterial flagellum is irreducibly complex with respect to its complement of thirty-five genes. As Minnich testified: “One mutation, one part knock out, it can’t swim. Put that single gene back in we restore motility. Same thing over here. We put, knock out one part, put a good copy of the gene back in, and they can swim. By definition the system is irreducibly complex. We’ve done that with all 35 components of the flagellum, and we get the same effect.” [Dover Trial, Day 20 PM Testimony, pp. 107-108. Unfortunately, Judge Jones simply ignored this fact reported by the researcher who did the work, in the open court room.]

That is, using “knockout” techniques, the genes for the 35 relevant flagellar components in a target bacterium were knocked out and then restored, one by one.

The pattern for each DNA-sequence: OUT — no function, BACK IN — function restored.

Thus, the flagellum is credibly empirically confirmed as irreducibly complex.
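The knock-out-and-restore pattern described above can be expressed as a simple check. What follows is a toy model only, not Minnich's laboratory protocol: `knockout_test`, `engine_works`, and the part names are hypothetical stand-ins, and the model assumes that function depends on nothing but which parts are present.

```python
from typing import Callable, FrozenSet, Iterable


def knockout_test(parts: Iterable[str],
                  works: Callable[[FrozenSet[str]], bool]) -> bool:
    """Return True if the full set works and removing any single part stops function."""
    full = frozenset(parts)
    if not works(full):
        return False  # the intact system must function to begin with
    for part in full:
        # OUT: knock out one part and test for function
        if works(full - {part}):
            return False  # system still works, so this part is not core
        # BACK IN: restoring the part returns us to `full`, already shown to work
    return True


# Hypothetical core parts, borrowed from the steam engine illustration
core = frozenset({"piston", "cylinder", "valve", "crankshaft"})


def engine_works(present: FrozenSet[str]) -> bool:
    """Toy function test: the engine runs only if every core part is present."""
    return core <= present


print(knockout_test(core, engine_works))              # True
print(knockout_test(core | {"paint"}, engine_works))  # False
```

The second call shows why the definition speaks of [core] parts: adding a part that can be removed without loss of function, such as the hypothetical "paint", makes the test fail for the enlarged set even though the core remains irreducible.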

The “Knockout Studies” concept, a research technique that rests directly on the IC property of many organism features, needs some explanation.

[Continues here]