Information, of course, is notoriously a concept with many senses. As it is central to the design inference, let us look (again) at defining it.

We can dispose of one sense right off: Shannon was not directly interested in information but in information-carrying capacity; that is why his metric peaks for a truly random signal, which as a result has minimal redundancy. We can also see that the bit measure commonly seen in ICT circles or in our PC memories, etc., is actually this measure: 1 kbit is 1,024 = 2^10 binary digits of storage or transmission capacity, one binary digit or bit being a unit of information storing one choice between a pair of alternatives such as yes/no, true/false, on/off, high/low or N-pole/S-pole. Obviously, though, the meaningful substance that is stored or communicated, or that may be implicit in a coherent, organised functional entity, is the sense of information that is most often relevant.

That is, as F. R. Connor put it in his telecommunication series of short textbooks,

“Information is not what is actually in a message but what could constitute a message. The word could implies a statistical definition in that it involves some selection of the various possible messages. The important quantity is not the actual information content of the message but rather its possible information content.” [Signals, Edward Arnold, 1972, p. 79.]

So, we come to a version of the Shannon Communication system model:

Elaborating slightly by expanding the encoder-decoder framework (and following the general framework of the ISO OSI model):

In this model, information-bearing messages flow from a source to a sink, by being: (1) encoded, (2) transmitted through a channel as a signal, (3) received, and (4) decoded. At each corresponding stage: source/sink encoding/decoding, transmitting/receiving, there is in effect a mutually agreed standard, a so-called protocol. [For instance, HTTP — hypertext transfer protocol — is a major protocol for the Internet. This is why many web page addresses begin: “http://www . . .”]
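As a rough illustration of the four stages just listed, here is a minimal Python sketch. It is only a toy under stated assumptions: the names `encode`, `channel` and `decode` are hypothetical, the "protocol" agreed by both ends is simply 7-bit ASCII plus a threefold repetition of each bit, and the channel is modelled as flipping each bit independently with some probability.

```python
import random

def encode(message: str, repeat: int = 3) -> list[int]:
    """Source and channel encoding: 7-bit ASCII, each bit repeated `repeat` times."""
    bits = []
    for ch in message:
        code = ord(ch)
        if code > 127:
            raise ValueError("this toy protocol only handles 7-bit ASCII")
        for shift in range(6, -1, -1):  # most significant bit first
            bits.extend([(code >> shift) & 1] * repeat)
    return bits

def channel(bits: list[int], flip_prob: float, rng: random.Random) -> list[int]:
    """Assumed noise model: each bit is flipped independently with probability flip_prob."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in bits]

def decode(bits: list[int], repeat: int = 3) -> str:
    """Receiver side: majority vote over each group, then rebuild 7-bit characters."""
    voted = [
        1 if sum(bits[i:i + repeat]) * 2 > repeat else 0
        for i in range(0, len(bits), repeat)
    ]
    chars = []
    for i in range(0, len(voted), 7):
        value = 0
        for b in voted[i:i + 7]:
            value = (value << 1) | b
        chars.append(chr(value))
    return "".join(chars)

if __name__ == "__main__":
    sent = encode("queen")
    received = channel(sent, flip_prob=0.02, rng=random.Random(1))
    print(decode(received))
```

The point of the sketch is that the sink can only recover the message because both ends share the same conventions; with heavier noise, the majority vote will start to fail and the decoded text will no longer match what was sent.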

However, as the diagram hints, noise affects the process at each stage, so that under certain conditions, detecting and distinguishing the signal from the noise becomes a challenge. Indeed, since noise is due to randomly fluctuating values of various physical quantities [due in turn to the random behaviour of particles at molecular levels], detecting a message and accepting it as a legitimate message rather than noise that got lucky is a question of inference to design. In short, inescapably, the design inference issue is foundational to communication science and information theory.

Going beyond this, we can refer to the context of information technology, communication systems and computers, which provides a vital clarifying side-light from another view on how complex, specified information functions in information processing systems and so also what information is as contrasted with data and knowledge:

[In the context of computers, etc.] information is data — i.e. digital representations of raw events, facts, numbers and letters, values of variables, etc. — that have been put together in ways suitable for storing in special data structures [strings of characters, lists, tables, “trees” etc], and for processing and output in ways that are useful [i.e. functional]. . . . Information is distinguished from [a] data: raw events, signals, states etc represented digitally, and [b] knowledge: information that has been so verified that we can reasonably be warranted, in believing it to be true. [GEM/TKI, UWI FD12A Sci Med and Tech in Society Tutorial Note 7a, Nov 2005.]

Going to Principia Cybernetica Web as archived, we find three related discussions:

INFORMATION

1) that which reduces uncertainty. (Claude Shannon); 2) that which changes us. (Gregory Bateson)

Literally that which forms within, but more adequately: the equivalent of or the capacity of something to perform organizational work, the difference between two forms of organization or between two states of uncertainty before and after a message has been received, but also the degree to which one variable of a system depends on or is constrained by (see constraint) another. E.g., the DNA carries genetic information inasmuch as it organizes or controls the orderly growth of a living organism. A message carries information inasmuch as it conveys something not already known. The answer to a question carries information to the extent it reduces the questioner’s uncertainty. A telephone line carries information only when the signals sent correlate with those received. Since information is linked to certain changes, differences or dependencies, it is desirable to refer to them and distinguish between information stored, information carried, information transmitted, information required, etc. Pure and unqualified information is an unwarranted abstraction. Information theory measures the quantities of all of these kinds of information in terms of bits. The larger the uncertainty removed by a message, the stronger the correlation between the input and output of a communication channel, the more detailed particular instructions are, the more information is transmitted. (Krippendorff)

Information is the meaning of the representation of a fact (or of a message) for the receiver. (Hornung)

The point is that information itself is not an obvious material artifact, though it is often embedded in such. It is abstract but nonetheless very real, very effective, very functional. So much so that it lies at the heart of cell-based life and of the wave of technology currently further transforming our world.

It is in the light of the above concerns, issues and concepts that, a few days ago, I added the following working rough definition to a current UD post. On doing so, I thought, this is worth headlining for itself. And so, let me now cite:

>>to facilitate discussion [as, a good general definition that does not “bake-in” information being a near-synonym to knowledge is hard to find . . . ] we may roughly identify information as

1: facets of reality [–> a way to speak of an abstract entity] that may capture and so frame — give meaningful FORM to

2: representations of elements of reality — such representations being items of data — that

3: by accumulation of such structured items . . .

4: [NB: which accumulation is in principle quantifiable, e.g. by defining a description language that chains successive y/n questions to specify a unique/particular description or statement, thence I = – log p in the Shannon case, etc],

5: meaningful complex messages may then be created, modulated, encoded, decoded, conveyed, stored, processed and otherwise made amenable to use by a system, entity or agent interacting with the wider world. E.g. consider the ASCII code:

The ASCII code takes seven y/n q’s per character to specify text in English, yielding text size 7 bits per character of FSCO/I for such text

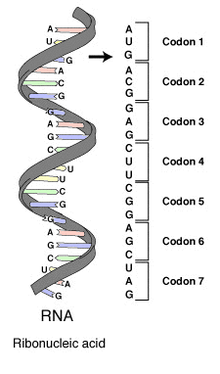

or, the genetic code (notice, the structural patterns set by the first two letters):

The Genetic code uses three-letter codons to specify the sequence of AA’s in proteins and specifying start/stop, and using six bits per AA

or, mRNA . . . notice, U not T:

or, a cybernetic entity using informational signals to guide its actions:

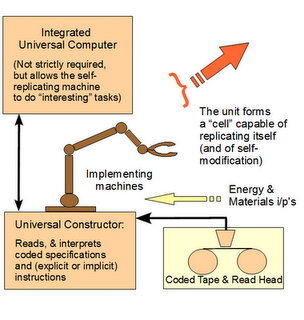

. . . or, a von Neumann kinematic self-replicator:

(Of course, such representations may be more or less accurate or inaccurate, or even deceitful. Thus, knowledge requires information but has to address warrant as credibly truthful. Wisdom, goes beyond knowledge to imply sound insight into fundamental aspects of reality that guide sound, prudent, ethical action.)>>
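To make the "chain of y/n questions" idea in the clip concrete, here is a small Python sketch of the counting involved. It is a capacity count only, assuming every alternative is equally weighted; the helper names `yes_no_questions` and `text_capacity_bits` are hypothetical and introduced just for illustration.

```python
def yes_no_questions(alternatives: int) -> int:
    """Smallest number of y/n questions that can single out one of
    `alternatives` equally weighted possibilities."""
    if alternatives < 1:
        raise ValueError("need at least one alternative")
    return (alternatives - 1).bit_length()

def text_capacity_bits(text: str, alphabet_size: int = 128) -> int:
    """Capacity in bits of a string drawn from an alphabet of the given size."""
    return len(text) * yes_no_questions(alphabet_size)

if __name__ == "__main__":
    # 7-bit ASCII: 2^7 = 128 possible characters.
    print(yes_no_questions(128))        # 7
    # Genetic code: 4 bases in each of 3 positions gives 4^3 = 64 codons.
    print(yes_no_questions(4 ** 3))     # 6
    print(text_capacity_bits("queen"))  # 35
```

This reproduces the seven bits per ASCII character and six bits per codon mentioned in the captions; where the alternatives are not equiprobable, the average information per symbol falls below this capacity figure.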

Food, for thought. END

PS: It would be remiss of me not to tie in the informational thermodynamics link, with a spot of help from Wikipedia on that topic. Let me clip from my longstanding briefing note:

>>To quantify the above definition of what is perhaps best descriptively termed information-carrying capacity, but has long been simply termed information (in the “Shannon sense” – never mind his disclaimers . . .), let us consider a source that emits symbols from a vocabulary: s1,s2, s3, . . . sn, with probabilities p1, p2, p3, . . . pn. That is, in a “typical” long string of symbols, of size M [say this web page], the average number that are some sj, J, will be such that the ratio J/M –> pj, and in the limit attains equality. We term pj the a priori — before the fact — probability of symbol sj. Then, when a receiver detects sj, the question arises as to whether this was sent. [That is, the mixing in of noise means that received messages are prone to misidentification.] If on average, sj will be detected correctly a fraction, dj of the time, the a posteriori — after the fact — probability of sj is by a similar calculation, dj. So, we now define the information content of symbol sj as, in effect how much it surprises us on average when it shows up in our receiver:

I = log [dj/pj], in bits [if the log is base 2, log2] . . . Eqn 1

This immediately means that the question of receiving information arises AFTER an apparent symbol sj has been detected and decoded. That is, the issue of information inherently implies an inference to having received an intentional signal in the face of the possibility that noise could be present. Second, logs are used in the definition of I, as they give an additive property: for, the amount of information in independent signals, si + sj, using the above definition, is such that:

Itot = Ii + Ij . . . Eqn 2

For example, assume that dj for the moment is 1, i.e. we have a noiseless channel so what is transmitted is just what is received. Then, the information in sj is:

I = log [1/pj] = – log pj . . . Eqn 3

This case illustrates the additive property as well, assuming that symbols si and sj are independent. That means that the probability of receiving both messages is the product of the probability of the individual messages (pi *pj); so:

Itot = log [1/(pi*pj)] = [– log pi] + [– log pj] = Ii + Ij . . . Eqn 4
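For those who like to check such things numerically, here is a short Python sketch of Eqns 1, 3 and 4. The function name `information_bits` and the probabilities 0.5 and 0.25 are illustrative assumptions, not values from the briefing note.

```python
import math

def information_bits(p_prior: float, d_posterior: float = 1.0) -> float:
    """Eqn 1: I = log2(d/p). With d = 1 (noiseless channel) this is
    Eqn 3: I = -log2(p)."""
    if not (0.0 < p_prior <= 1.0 and 0.0 < d_posterior <= 1.0):
        raise ValueError("probabilities must lie in (0, 1]")
    return math.log2(d_posterior / p_prior)

if __name__ == "__main__":
    p_i, p_j = 0.5, 0.25  # assumed a priori probabilities of two independent symbols
    joint = information_bits(p_i * p_j)                       # Eqn 4, left side
    separate = information_bits(p_i) + information_bits(p_j)  # Eqn 4, right side
    print(joint, separate)
```

With these assumed values both sides come to three bits, which is just the additive property that motivates the use of logs.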

So if there are two symbols, say 1 and 0, and each has probability 0.5, then for each, I is – log [1/2], on a base of 2, which is 1 bit. (If the symbols were not equiprobable, the less probable binary digit-state would convey more than, and the more probable, less than, one bit of information. Moving over to English text, we can easily see that E is as a rule far more probable than X, and that Q is most often followed by U. So, X conveys more information than E, and U conveys very little, though it is useful as redundancy, which gives us a chance to catch errors and fix them: if we see “wueen” it is most likely to have been “queen.”)
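The remark about E versus X can be explored with a few lines of Python. This is a rough sketch only: it estimates each a priori probability pj as the ratio J/M from whatever sample text is supplied, assumes a noiseless channel (Eqn 3), and the name `symbol_surprisals` is hypothetical. A short sample gives only crude estimates; a long, typical text would be needed for realistic English letter frequencies.

```python
import math
from collections import Counter

def symbol_surprisals(text: str) -> dict[str, float]:
    """Estimate pj as J/M from the sample and return -log2(pj) for each symbol."""
    counts = Counter(text)
    total = len(text)
    return {sym: -math.log2(n / total) for sym, n in counts.items()}

if __name__ == "__main__":
    sample = "the queen sent an express letter"  # assumed toy sample
    for sym, bits in sorted(symbol_surprisals(sample).items(), key=lambda kv: kv[1]):
        print(repr(sym), round(bits, 2))
```

Symbols that turn up rarely in the sample come out with more bits than the common ones, which is the pattern the paragraph describes.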

Further to this, we may average the information per symbol in the communication system thus (giving it in terms of – H to make the additive relationships clearer):

– H = p1 log p1 + p2 log p2 + . . . + pn log pn

or, H = – SUM [pi log pi] . . . Eqn 5
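Eqn 5 is easy to sketch in Python. The function name `entropy_bits` and the example distributions are assumptions made for illustration; zero-probability symbols are skipped, following the usual convention that they contribute nothing to the sum.

```python
import math

def entropy_bits(probs: list[float]) -> float:
    """Eqn 5: H = -SUM pi * log2(pi), in bits per symbol."""
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    return -sum(p * math.log2(p) for p in probs if p > 0)

if __name__ == "__main__":
    print(entropy_bits([0.5, 0.5]))   # two equiprobable symbols
    print(entropy_bits([0.9, 0.1]))   # a lopsided binary source
    print(entropy_bits([0.25] * 4))   # four equiprobable symbols
```

The equiprobable binary source averages one bit per symbol, while the lopsided one averages less than half a bit: the gap between the two is the redundancy that helps us catch errors.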

H, the average information per symbol transmitted [usually measured in bits/symbol], is often termed the Entropy, historically because it resembles one of the expressions for entropy in statistical thermodynamics. As Connor notes: “it is often referred to as the entropy of the source.” [p.81, emphasis added.] Also, as is briefly discussed in Appendix 1 below, there is in fact an informational interpretation of thermodynamics that shows that informational and thermodynamic entropy can be linked conceptually as well as in mere mathematical form. Though somewhat controversial in physics even in quite recent years, this is becoming more broadly accepted in physics and information theory, as Wikipedia now discusses [as at April 2011] in its article on Informational Entropy (aka Shannon Information, cf also here):

At an everyday practical level the links between information entropy and thermodynamic entropy are not close. Physicists and chemists are apt to be more interested in changes in entropy as a system spontaneously evolves away from its initial conditions, in accordance with the second law of thermodynamics, rather than an unchanging probability distribution. And, as the numerical smallness of Boltzmann’s constant kB indicates, the changes in S / kB for even minute amounts of substances in chemical and physical processes represent amounts of entropy which are so large as to be right off the scale compared to anything seen in data compression or signal processing.

But, at a multidisciplinary level, connections can be made between thermodynamic and informational entropy, although it took many years in the development of the theories of statistical mechanics and information theory to make the relationship fully apparent. In fact, in the view of Jaynes (1957), thermodynamics should be seen as an application of Shannon’s information theory: the thermodynamic entropy is interpreted as being an estimate of the amount of further Shannon information needed to define the detailed microscopic state of the system, that remains uncommunicated by a description solely in terms of the macroscopic variables of classical thermodynamics. For example, adding heat to a system increases its thermodynamic entropy because it increases the number of possible microscopic states that it could be in, thus making any complete state description longer. (See article: maximum entropy thermodynamics. [Also, another article remarks: >>in the words of G. N. Lewis writing about chemical entropy in 1930, “Gain in entropy always means loss of information, and nothing more” . . . in the discrete case using base two logarithms, the reduced Gibbs entropy is equal to the minimum number of yes/no questions that need to be answered in order to fully specify the microstate, given that we know the macrostate.>>]) Maxwell’s demon can (hypothetically) reduce the thermodynamic entropy of a system by using information about the states of individual molecules; but, as Landauer (from 1961) and co-workers have shown, to function the demon himself must increase thermodynamic entropy in the process, by at least the amount of Shannon information he proposes to first acquire and store; and so the total entropy does not decrease (which resolves the paradox).
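To put rough numbers on the scale gap the clip describes, here is a hedged Python sketch. It assumes the standard correspondence of kB ln 2 of thermodynamic entropy per bit of Shannon information (the factor usually associated with Landauer's bound), which the clip implies but does not state; the function names are hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact by definition in the SI since 2019)

def bits_to_entropy(bits: float) -> float:
    """Thermodynamic entropy in J/K matching `bits` of Shannon information,
    assuming kB * ln 2 per bit."""
    return bits * K_B * math.log(2)

def entropy_to_bits(entropy_j_per_k: float) -> float:
    """Inverse conversion: how many bits correspond to a given entropy in J/K."""
    return entropy_j_per_k / (K_B * math.log(2))

def landauer_heat(bits: float, temperature_k: float) -> float:
    """Minimum heat in joules tied to `bits` at the given temperature,
    under the same assumption."""
    return bits_to_entropy(bits) * temperature_k

if __name__ == "__main__":
    print(f"{entropy_to_bits(1.0):.3e} bits per J/K")
    print(f"{landauer_heat(1.0, 300.0):.3e} J per bit at 300 K")
```

On these assumptions a single joule per kelvin corresponds to about 10^23 bits, which is why everyday thermodynamic entropy changes are "right off the scale" next to anything met in data compression or signal processing.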

Summarising Harry Robertson’s Statistical Thermophysics (Prentice-Hall International, 1993), excerpting desperately and adding emphases and explanatory comments, we can see, perhaps, that this should not be so surprising after all. (In effect, since we do not possess detailed knowledge of the states of the very large number of microscopic particles of thermal systems [typically ~ 10^20 to 10^26; a mole of substance containing ~ 6.022*10^23 particles; i.e. the Avogadro Number], we can only view them in terms of those gross averages we term thermodynamic variables [pressure, temperature, etc], and so we cannot take advantage of knowledge of such individual particle states that would give us a richer harvest of work, etc.)

For, as he astutely observes on pp. vii – viii:

. . . the standard assertion that molecular chaos exists is nothing more than a poorly disguised admission of ignorance, or lack of detailed information about the dynamic state of a system . . . . If I am able to perceive order, I may be able to use it to extract work from the system, but if I am unaware of internal correlations, I cannot use them for macroscopic dynamical purposes. On this basis, I shall distinguish heat from work, and thermal energy from other forms . . .

And, in more detail (pp. 3 – 6, 7, 36, cf Appendix 1 below for a more detailed development of thermodynamics issues and their tie-in with the inference to design; also see recent ArXiv papers by Duncan and Semura here and here):

. . . It has long been recognized that the assignment of probabilities to a set represents information, and that some probability sets represent more information than others . . . if one of the probabilities say p2 is unity and therefore the others are zero, then we know that the outcome of the experiment . . . will give [event] y2. Thus we have complete information . . . if we have no basis . . . for believing that event yi is more or less likely than any other [we] have the least possible information about the outcome of the experiment . . . . A remarkably simple and clear analysis by Shannon [1948] has provided us with a quantitative measure of the uncertainty, or missing pertinent information, inherent in a set of probabilities [NB: i.e. a probability different from 1 or 0 should be seen as, in part, an index of ignorance] . . . .

[deriving informational entropy, cf. discussions here, here, here, here and here; also Sarfati’s discussion of debates and the issue of open systems here . . . ]

H({pi}) = – C [SUM over i] pi*ln pi, [. . . “my” Eqn 6]

[where [SUM over i] pi = 1, and we can define also parameters alpha and beta such that: (1) pi = e^-[alpha + beta*yi]; (2) exp [alpha] = [SUM over i] exp(– beta*yi) = Z [Z being in effect the partition function across microstates, the “Holy Grail” of statistical thermodynamics]] . . . .

[H], called the information entropy, . . . correspond[s] to the thermodynamic entropy [i.e. s, where also it was shown by Boltzmann that s = k ln w], with C = k, the Boltzmann constant, and yi an energy level, usually ei, while [BETA] becomes 1/kT, with T the thermodynamic temperature . . . A thermodynamic system is characterized by a microscopic structure that is not observed in detail . . . We attempt to develop a theoretical description of the macroscopic properties in terms of its underlying microscopic properties, which are not precisely known. We attempt to assign probabilities to the various microscopic states . . . based on a few . . . macroscopic observations that can be related to averages of microscopic parameters. Evidently the problem that we attempt to solve in statistical thermophysics is exactly the one just treated in terms of information theory. It should not be surprising, then, that the uncertainty of information theory becomes a thermodynamic variable when used in proper context . . . .

Jaynes’ [summary rebuttal to a typical objection] is “. . . The entropy of a thermodynamic system is a measure of the degree of ignorance of a person whose sole knowledge about its microstate consists of the values of the macroscopic quantities . . . which define its thermodynamic state. This is a perfectly ‘objective’ quantity . . . it is a function of [those variables] and does not depend on anybody’s personality. There is no reason why it cannot be measured in the laboratory.” . . . . [pp. 3 – 6, 7, 36; replacing Robertson’s use of S for Informational Entropy with the more standard H.]
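The relations in the Robertson excerpt (pi = e^-[alpha + beta*yi], exp[alpha] = Z, and Eqn 6 with C = k) can be sketched in Python as follows. This is an illustrative toy only: the two-level energies and the temperature are assumed values, and the function names are hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K

def boltzmann_distribution(energies: list[float], temperature_k: float) -> list[float]:
    """pi = exp(-beta * yi) / Z with beta = 1/(k T) and Z = SUM exp(-beta * yi).
    Energies are shifted by their minimum before exponentiating; the shift
    cancels in the ratio and only improves numerical stability."""
    beta = 1.0 / (K_B * temperature_k)
    e_min = min(energies)
    weights = [math.exp(-beta * (e - e_min)) for e in energies]
    z = sum(weights)  # partition function for the shifted energies
    return [w / z for w in weights]

def information_entropy(probs: list[float], c: float = K_B) -> float:
    """Eqn 6: H = -C * SUM pi * ln(pi); with C = k this is in J/K."""
    return -c * sum(p * math.log(p) for p in probs if p > 0)

if __name__ == "__main__":
    levels = [0.0, 1.0e-21]  # assumed two-level system, energies in joules
    probs = boltzmann_distribution(levels, temperature_k=300.0)
    print(probs)
    print(information_entropy(probs))
```

For two levels of equal energy the sketch gives probabilities of one half each and an entropy of k ln 2, i.e. the thermodynamic counterpart of one bit of missing information about the microstate.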

As is discussed briefly in Appendix 1, Thaxton, Bradley and Olsen [TBO], following Brillouin et al, in the 1984 foundational work for the modern Design Theory, The Mystery of Life’s Origin [TMLO], exploit this information-entropy link, through the idea of moving from a random to a known microscopic configuration in the creation of the bio-functional polymers of life, and then, again following Brillouin, identify a quantitative information metric for the information of polymer molecules. For, in moving from a random to a functional molecule, we have in effect an objective, observable increment in information about the molecule. This leads to energy constraints, thence to a calculable concentration of such molecules in suggested, generously “plausible” primordial “soups.” In effect, so unfavourable is the resulting thermodynamic balance that the concentrations of the individual functional molecules in such a prebiotic soup are arguably so small as to be negligibly different from zero on a planet-wide scale.

By many orders of magnitude, we don’t get to even one molecule each of the required polymers per planet, much less bringing them together in the required proximity for them to work together as the molecular machinery of life. The linked chapter gives the details. More modern analyses [e.g. Trevors and Abel, here and here], however, tend to speak directly in terms of information and probabilities rather than the more arcane world of classical and statistical thermodynamics, so let us now return to that focus; in particular addressing information in its functional sense, as the third step in this preliminary analysis.>>

Further food for thought.