
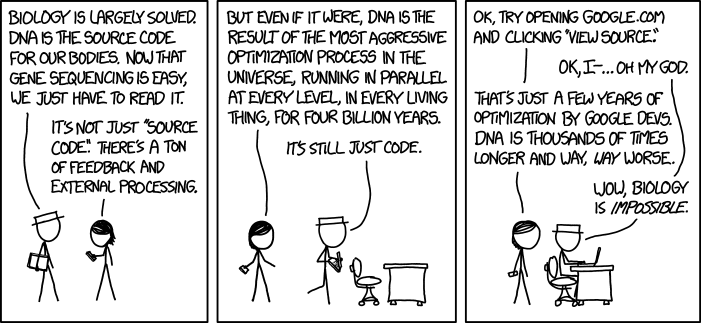

Matthew Cobb is a professor of zoology at the University of Manchester and a regular contributor over at Why Evolution Is True. Recently, while critiquing a cartoon from xkcd (shown above), he argued that our DNA is the mindless product of a series of historical accidents. But then he let the cat out of the bag, at the end of his post:

On a final note, in some cases, within this amazing noise, there are also astonishing examples of complexity which do indeed appear to be the result of optimisation – and they would boggle the mind of anyone, not just a cocky computer scientist in a hat. In Drosophila there is a gene called Dscam, which is involved in neuronal development and has four clusters of exons (bits of the gene that are expressed – hence exon – in contrast to the apparently inert introns).

Each of these exons can be read by the cell in twelve, forty-eight, thirty-three or two alternative ways. As a result, the single stretch of DNA that we call Dscam can encode 38,016 different proteins. (For the moment, this is the record number of alternative proteins produced by a single gene. I suspect there are many even more extreme examples.)
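The figure of 38,016 is easy to check. Here is a minimal sketch in Python, assuming (as the quoted passage implies) that one alternative is chosen independently from each of the four clusters, so that the total is simply the product of the four counts. The variable names are my own and purely illustrative.

```python
from math import prod

# Number of alternative exons in each of the four clusters,
# taken from the figures quoted above.
cluster_variants = [12, 48, 33, 2]

# Assumption: one alternative is picked independently from each cluster,
# so the number of possible combinations is the product of the counts.
total_isoforms = prod(cluster_variants)

print(total_isoforms)  # 38016
```

Under that assumption the numbers in the quotation are consistent: 12 × 48 × 33 × 2 = 38,016.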

Cobb triumphantly concluded:

In other words, DNA is even more complicated than [xkcd cartoonist] Randall [Munroe] imagines – it is historical, messy, undesigned. And when occasionally it is optimised, the degree of complexity is mind-boggling. Biology is not quite impossible, it is just incredibly difficult!

But the damage was done. Even as he chided cartoonist Randall Munroe for claiming that DNA is subject to “the most aggressive optimisation process in the universe” and insisted that our genes are “a horrible, historical mess” consisting mostly of junk DNA, and that they are really the product of mindless tinkering rather than design, Cobb was forced to concede that amidst all this chaos, there were indeed some “astonishing examples of complexity which do indeed appear to be the result of optimisation” which “would boggle the mind of anyone, not just a cocky computer scientist in a hat.”

Intelligent Design supporters are often accused of appealing to something called an API: an Argument from Personal Incredulity. The acronym comes from Professor Richard Dawkins. The reasoning is supposed to go like this: I cannot imagine how complex structure X could have come about as a result of blind natural processes; therefore an intelligent being must have created it. This, Dawkins rightly points out, is not a rational argument. Certainly it has no place in a science classroom.

But my own conversion to Intelligent Design was not based on an API, but on something which I have decided to call the STOMPS Principle. STOMPS is an acronym for: Smarter Than Our Most Promising Scientists. The reasoning goes like this: if I observe a complex system which is capable of performing a task in a manner which is more ingenious than anything our best and most promising scientists could have ever designed, then it would be rational for me to assume that the system in question was also designed. That is not to say that nothing will shake my conviction, but if you claim that an unguided natural process could have done the job, then I am going to demand that you explain how the process in question could have accomplished this stupendous feat. I shall demand a specification of a mechanism, and a demonstration that this mechanism is at least capable of generating the complex system we are talking about, within the time available, without appealing to mathematical miracles (like winning the Powerball Jackpot ten times in a row). To demand any less would be the height of irrationality.

Professor Matthew Cobb concedes that our junky DNA contains genes which code for proteins. He concedes that within the “noise” of our junky DNA, there are also “astonishing examples of complexity which do indeed appear to be the result of optimisation,” and that the complexity of this DNA code would “boggle the mind” of even “a cocky computer scientist in a hat.” This sounds like a perfect example of a case where the STOMPS Principle could be legitimately invoked. If Nature contains systems which accomplish a feat in a manner which is far better than what our best scientists can do, then it’s reasonable to infer that these systems were intelligently designed.

At this point, some evolutionists may respond by invoking what philosopher Daniel Dennett has termed Leslie Orgel’s second law: “Evolution is cleverer than you are.” The relevant question here is: cleverer at what? We have seen that all living things employ a genetic code: a set of rules by which information encoded in genetic material (DNA or RNA sequences) is translated into proteins (amino acid sequences) by living cells. Despite diligent inquiry on our part, we have yet to uncover a single instance in Nature of unguided processes generating a code of any sort – let alone one which would “boggle the mind” of even “a cocky computer scientist in a hat.” Whatever else evolution might be clever at, code-making is hardly its forte.

But, we shall be told, evolution refines the code in our DNA all the time – through natural selection winnowing random mutations, as well as purely chance-driven processes such as genetic drift. Who are we to say that it could not have generated this code by an incremental series of refinements, over billions of years?

I worked as a computer programmer for ten years, so I think I know what it means to refine computer code. Evolution doesn’t do anything like that: what it does is corrupt the code in organisms’ cells, in ways that occasionally turn out to improve those organisms’ prospects for survival. That might be good for the organisms, but from a code-bound perspective, it isn’t “good” at all: it’s just the corruption of a code. And corruption is the opposite of generation.

So when I hear someone tell me that “nature, heartless, witless nature” could have not only generated a code, but generated one which even our brightest scientists are in awe of, my response is: “You’re pulling my leg.”

Finally, I’d like to address Professor Matthew Cobb’s argument that “[o]ur genes are not perfectly adapted and beautifully designed,” because our DNA is littered with junk: they are instead the product of “evolution and natural selection.” My response to that argument is: so what? Even if Professor Cobb is right about junk DNA – and I’m inclined to think he is (for reasons I’ll discuss in another post) – that’s beside the point. At most, it shows that DNA which doesn’t code for anything wasn’t designed. But my question is: what about the DNA which does code for proteins, and which does so in a manner that boggles the ingenuity of our brightest minds? Professor Cobb, it seems, is missing the wood for the trees here.

Junk DNA might be described as degenerate code – but there has to be a code in the first place, before it can degenerate. The existence of junk DNA cannot be used as an argument against design: all it establishes is that the designer of our DNA – whether out of benign neglect, laziness, illness, or ignorance that something has gone amiss – doesn’t always fix the code he created, when it becomes corrupted. Accordingly, junk DNA cannot be used as a legitimate argument against the proposition that the DNA in our cells which codes for proteins was designed.

A personal story

A few years ago, I came across an article by an Australian botanist (who is also a creationist) named Alex Williams, entitled, “Astonishing Complexity of DNA Demolishes Neo-Darwinism” (Journal of Creation, 21(3), 2007). At the time I knew very little about specified complexity and other terms in the Intelligent Design lexicon. I heartily dislike jargon, and I was having difficulty deciding whether there was any real scientific merit to the Intelligent Design movement’s claim that certain biological systems must have been designed. But when I read Alex Williams’ article, the case for Intelligent Design finally made sense to me. What impressed me most, with my background in computer science, was that the coding in the cell was far, far more efficient than anything that our best scientists could have come up with. Here are some excerpts from the article:

The traditional understanding of DNA has recently been transformed beyond recognition. DNA does not, as we thought, carry a linear, one-dimensional, one-way, sequential code—like the lines of letters and words on this page… DNA information is overlapping-multi-layered and multi-dimensional; it reads both backwards and forwards… No human engineer has ever even imagined, let alone designed an information storage device anything like it…

- There is no ‘beads on a string’ linear arrangement of genes, but rather an interleaved structure of overlapping segments, with typically five, seven or more transcripts coming from just one segment of code.

- Not just one strand, but both strands (sense and antisense) of the DNA are fully transcribed.

- Transcription proceeds not just one way but both backwards and forwards…

- There is not just one transcription triggering (switching) system for each region, but many.

(Bold emphasis mine – VJT.)

I’d like to make it clear that as someone who believes in a 13.8 billion-year-old universe and in common descent, I do not share Williams’ creationist views. In particular, I think his argument for a young cosmos, based on Haldane’s dilemma, rests on faulty premises. But I do think that Williams is on solid scientific ground when he writes that no human engineer has ever even imagined, let alone designed an information storage device anything like DNA. Here we have an appeal to the STOMPS principle: DNA encodes information in a way which is far cleverer than anything that our most intelligent programmers could have designed, so it is reasonable to infer that DNA itself was designed by a superhuman intelligent agent.

I’d like to conclude this post with a quote from someone whose impartiality is not in doubt: Bill Gates, the founder of Microsoft Corporation, who is also an agnostic:

Biological information is the most important information we can discover, because over the next several decades it will revolutionize medicine. Human DNA is like a computer program but far, far more advanced than any software ever created.

(The Road Ahead, Penguin: London, Revised Edition, 1996 p. 228.)

If an agnostic like Bill Gates, who is an acknowledged expert on computing, thinks that the complexity of human DNA surpasses that of any human software design, then it is surely reasonable to infer that human DNA (or at the very least, its four-billion-year-old progenitor, the DNA in the first living cell) was originally designed by some superhuman Intelligence.

Professor Cobb is undercut by one of his own commenters

We have seen that Professor Matthew Cobb’s argument against DNA having been designed is a philosophically flawed one. But reading through the comments attached to his post, I came across two comments by a reader named Eric (see here and here) which blew Professor Cobb’s case right out of the water, from a computing perspective:

… Matthew’s comment “Our genes are not perfectly adapted and beautifully designed. They are a horrible, historical mess” makes the analogy to human programming better, not worse….

I would guess that the entire etymology of computer programming languages is a result of historical contingency (i.e. a horrible, historical mess) as much as it is a result of optimization or rational choice. The reason Java forms the basis of so many internet-based languages is because that’s what was included in the earliest version of Netscape Navigator, which captured the market at the time. And the reason there are so many Visual Basic type programming languages is because Basic is what ran on the first generation of IBM personal computers. Geez, I know labs that were programming their nuclear physics detector setups in Fortran in the 1990s, and that is a language invented for use with punch cards.

Now computer programming languages will probably always require a more formal and rigorous syntax than natural language, but IMO the specific formal syntaxes that we used today are more due to the vagaries of human history than they are any sort of rational choice of the best options.

For that matter, why the frak do we even bother with www? Http vs. Https? That’s four redundant and therefore worthless letters out of five, the equivalent of 80% “junk DNA” in one of the most common and most recent human-built computer syntaxes. What sense does it make? None. Why do we have it? History.

Eric makes a very interesting point here. What do readers think?

(H/t: Denyse O’Leary.)