It has been said that “Intelligent design (ID) is the view that it is possible to infer from empirical evidence that ‘certain features of the universe and of living things are best explained by an intelligent cause, not an undirected process such as natural selection’ . . .” This puts the design inference at the heart of intelligent design theory, and raises two questions: its degree of warrant, and its relationship to the scientific method (insofar as a single “the” scientific method is possible).

Leading Intelligent Design researcher William Dembski has summarised the actual process of inference:

“Whenever explaining an event, we must choose from three competing modes of explanation. These are regularity [i.e., natural law], chance, and design.” When attempting to explain something, “regularities are always the first line of defense. If we can explain by means of a regularity, chance and design are automatically precluded. Similarly, chance is always the second line of defense. If we can’t explain by means of a regularity, but we can explain by means of chance, then design is automatically precluded. There is thus an order of priority to explanation. Within this order regularity has top priority, chance second, and design last” . . . the Explanatory Filter “formalizes what we have been doing right along when we recognize intelligent agents.” [Cf. Peter Williams’ article, The Design Inference from Specified Complexity Defended by Scholars Outside the Intelligent Design Movement, A Critical Review, here. We should in particular note his observation: “Independent agreement among a diverse range of scholars with different worldviews as to the utility of CSI adds warrant to the premise that CSI is indeed a sound criterion of design detection. And since the question of whether the design hypothesis is true is more important than the question of whether it is scientific, such warrant therefore focuses attention on the disputed question of whether sufficient empirical evidence of CSI within nature exists to justify the design hypothesis.”]

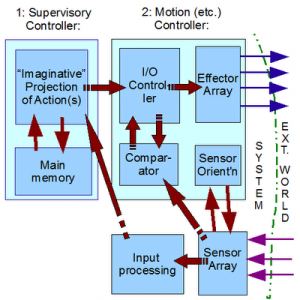

The design inference process as described can be represented in a flow chart:

Fig. A: The Explanatory Filter and the inference to design, as applied to various aspects of an object, process or phenomenon, and in the context of the generic scientific method. (So, we first envision nature acting by low contingency, law-like mechanical necessity such as with F = m*a . . . think of a heavy unsupported object near the earth’s surface falling with initial acceleration g = 9.8 N/kg or so. That is the first default. Alternatively, we may see high contingency knocking out the first default: under similar starting conditions, there is a broad range of possible outcomes. If things are highly contingent in this sense, the second default is: CHANCE. That is only knocked out if an aspect of an object, situation, or process etc. exhibits, simultaneously: (i) high contingency, (ii) tight specificity of configuration relative to possible configurations of the same bits and pieces, (iii) high complexity or information carrying capacity, usually beyond 500 – 1,000 bits. In such a case, we have good reason to infer that the aspect of the object, process, phenomenon etc. reflects design or . . . following the terms used by Plato 2350 years ago in The Laws, Bk X . . . the ART-ificial, or contrivance, rather than nature acting freely through undirected blind chance and/or mechanical necessity. [NB: This trichotomy across necessity and/or chance and/or the ART-ificial is so well established empirically that it needs little defense. Those who wish to suggest “no, we don’t know; there may be a fourth possibility” are the ones who first need to show us such a possibility before they are to be taken seriously. It is also clear that the distinction between “nature” (= “chance and/or necessity”) and the ART-ificial is reasonable and empirically grounded: just look at the list of ingredients and nutrients on a food package label.
The loaded rhetorical tactic of suggesting, implying or accusing that design theory really only puts up a religiously motivated way to inject the supernatural as the real alternative to the natural, fails. (Cf. the UD correctives 16 – 20 here on, as well as 1 – 8 here on.) And no: when, say, the averaging out of random molecular collisions with a wall gives rise to a steady average, that is a case of empirically reliable lawlike regularity emerging from such a process when sufficient numbers are involved, due to the statistics of very large numbers. It is easy to have 10^20 molecules or more at work, so there is a relatively low fluctuation, unlike what we see with particles undergoing Brownian motion. That is in effect low contingency mechanical necessity, in the sense we are interested in, in action. So, for instance, we may derive for ideal gas particles the relationship P*V = n*R*T as a reliable law.] )
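For readers who think more easily in code, the per-aspect decision flow described in the caption can be sketched roughly as follows. This is only an illustrative sketch, not Dembski's own formalism: the `Aspect` fields, the function name `explanatory_filter`, and the choice of 500 bits (the lower end of the "500 – 1,000 bits" range above) as the cut-off are all assumptions made for the example, and how one would actually measure contingency, specificity, or complexity is left outside the code.

```python
from dataclasses import dataclass

# Assumed cut-off: the lower end of the "500 - 1,000 bits" range in the caption.
COMPLEXITY_THRESHOLD_BITS = 500.0


@dataclass
class Aspect:
    """One aspect of an object, process or phenomenon (hypothetical fields)."""
    high_contingency: bool    # broad range of outcomes under similar starting conditions?
    tightly_specified: bool   # narrow configuration relative to the possible ones?
    complexity_bits: float    # information-carrying capacity, in bits


def explanatory_filter(aspect: Aspect,
                       threshold_bits: float = COMPLEXITY_THRESHOLD_BITS) -> str:
    """Return 'necessity', 'chance' or 'design' for a single aspect."""
    # First default: low contingency is assigned to law-like mechanical necessity.
    if not aspect.high_contingency:
        return "necessity"
    # The second default (chance) is knocked out only when the aspect is both
    # tightly specified and complex beyond the threshold.
    if aspect.tightly_specified and aspect.complexity_bits > threshold_bits:
        return "design"
    # Otherwise the second default stands.
    return "chance"


if __name__ == "__main__":
    falling_object = Aspect(high_contingency=False, tightly_specified=False, complexity_bits=0.0)
    scattered_debris = Aspect(high_contingency=True, tightly_specified=False, complexity_bits=1000.0)
    long_coded_text = Aspect(high_contingency=True, tightly_specified=True, complexity_bits=1000.0)
    for name, aspect in [("falling object", falling_object),
                         ("scattered debris", scattered_debris),
                         ("long coded text", long_coded_text)]:
        print(name, "->", explanatory_filter(aspect))
```

Note the ordering: the design branch is reached only after necessity has been ruled out, and only when specificity and complexity hold together, which mirrors the "order of priority" in the Dembski quotation above. The three sample aspects are made-up inputs chosen to exercise each branch.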

Explaining (and discussing) in steps:

1 –> As was noted in background remarks 1 and 2, we commonly observe signs and symbols, and infer on best explanation to underlying causes or meanings. In some cases we assign cause to (a) natural regularities tracing to mechanical necessity [i.e. “law of nature”], in others to (b) chance, and in yet others we routinely assign cause to (c) intentionally, intelligently and purposefully directed configuration, or design. In the words of leading ID researcher William Dembski, (c) may be further defined in a way that shows what intentional, intelligent and purposeful agents do, and why it results in functional, specified complex organisation and associated information:

. . . (1) A designer conceives a purpose. (2) To accomplish that purpose, the designer forms a plan. (3) To execute the plan, the designer specifies building materials and assembly instructions. (4) Finally, the designer or some surrogate applies the assembly instructions to the building materials. (No Free Lunch, p. xi. HT: ENV.)
