In answering yet another round of G’s talking points on design theory and those of us who advocate it, I have outlined a summary of design thinking and its links onward to debates on theology. I think it is worth adapting, expanding somewhat and headlining.

With your indulgence:

_______________

>> The epistemological warrant for origins science is no mystery, as Meyer and others have summarised. {Let me clip from an earlier post in the same thread:

Let me give you an example of a genuine test (reported in Wiki’s article on the Infinite Monkeys theorem), on very easy terms, random document generation, as I have cited many times:

One computer program run by Dan Oliver of Scottsdale, Arizona, according to an article in The New Yorker, came up with a result on August 4, 2004: After the group had worked for 42,162,500,000 billion billion monkey-years, one of the “monkeys” typed, “VALENTINE. Cease toIdor:eFLP0FRjWK78aXzVOwm)-‘;8.t” The first 19 letters of this sequence can be found in “The Two Gentlemen of Verona”. Other teams have reproduced 18 characters from “Timon of Athens”, 17 from “Troilus and Cressida”, and 16 from “Richard II”.[24]

A website entitled The Monkey Shakespeare Simulator, launched on July 1, 2003, contained a Java applet that simulates a large population of monkeys typing randomly, with the stated intention of seeing how long it takes the virtual monkeys to produce a complete Shakespearean play from beginning to end. For example, it produced this partial line from Henry IV, Part 2, reporting that it took “2,737,850 million billion billion billion monkey-years” to reach 24 matching characters:

RUMOUR. Open your ears; 9r”5j5&?OWTY Z0d…

Of course this is chance generating the highly contingent outcome.
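For those who want to try the random document exercise on a small scale, here is a minimal Python sketch. It is not the code behind either of the simulators cited above. The 27-symbol alphabet, the function names and the fixed seed are all my own assumptions for illustration.

```python
import random
import string

# Assumed alphabet for illustration: 26 lowercase letters plus space.
ALPHABET = string.ascii_lowercase + " "


def longest_prefix_match(target: str, typed: str) -> int:
    """Count how many leading characters of `typed` agree with `target`."""
    count = 0
    for expected, got in zip(target, typed):
        if expected != got:
            break
        count += 1
    return count


def best_random_match(target: str, attempts: int, seed: int = 0) -> int:
    """Type `attempts` random strings and return the best prefix match found."""
    rng = random.Random(seed)  # fixed seed so a run can be repeated
    best = 0
    for _ in range(attempts):
        typed = "".join(rng.choice(ALPHABET) for _ in range(len(target)))
        best = max(best, longest_prefix_match(target, typed))
    return best


def expected_attempts(k: int, alphabet_size: int = len(ALPHABET)) -> int:
    """Mean number of independent tries needed to match k given characters."""
    return alphabet_size ** k
```

Calling `best_random_match("rumour open your ears", 100_000)` gives the longest matching prefix in that many tries, and `expected_attempts(k)` shows how the cost multiplies by the alphabet size with every extra character demanded.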

What about chance plus necessity, e.g. mutations and differential reproductive success of variants in environments? The answer is, that the non-foresighted — thus chance — variation is the source of high contingency. Differential reproductive success actually SUBTRACTS “inferior” varieties, it does not add. The source of variation is various chance processes, chance being understood in terms of processes creating variations uncorrelated with the functional outcomes of interest: i.e. non-foresighted.

If you have a case, make it . . . .

In making that case I suggest you start with OOL, and bear in mind Meyer’s remark on that subject in reply to hostile reviews:

The central argument of my book is that intelligent design—the activity of a conscious and rational deliberative agent—best explains the origin of the information necessary to produce the first living cell. I argue this because of two things that we know from our uniform and repeated experience, which following Charles Darwin I take to be the basis of all scientific reasoning about the past. First, intelligent agents have demonstrated the capacity to produce large amounts of functionally specified information (especially in a digital form).

Notice the terminology he naturally uses and how close it is to the terms I and others have commonly used, functionally specific complex information. So much for that rhetorical gambit.

He continues:

Second, no undirected chemical process has demonstrated this power.

Got that?

Hence, intelligent design provides the best—most causally adequate—explanation for the origin of the information necessary to produce the first life from simpler non-living chemicals. In other words, intelligent design is the only explanation that cites a cause known to have the capacity to produce the key effect in question . . . . In order to [scientifically refute this inductive conclusion] Falk would need to show that some undirected material cause has [empirically] demonstrated the power to produce functional biological information apart from the guidance or activity [of] a designing mind. Neither Falk, nor anyone working in origin-of-life biology, has succeeded in doing this . . .}

In effect, on identifying traces from the remote past, and on examining and observing candidate causes in the present and their effects, one may identify characteristic signs of certain acting causes. These, on observation, can be shown to be reliable indicators or signs of particular causes in some cases.

From this, by inductive reasoning on inference to best explanation, we may apply the Newtonian uniformity principle of like causing like.

It so turns out that FSCO/I is such a sign, reliably produced by design, and design is the only empirically grounded adequate cause known to produce such. Things like codes [as systems of communication], complex organised mechanisms, complex algorithms expressed in codes, linguistic expressions beyond a reasonable threshold of complexity, algorithm implementing arrangements of components in an information processing entity, and the like are cases in point.

It turns out that the world of the living cell is replete with such, and so we are inductively warranted in inferring design as best causal explanation. Not, on a priori imposition of teleology, or on begging metaphysical questions, or the like; but, on induction in light of tested, reliable signs of causal forces at work.

And in that context the Chi_500 expression,

Chi_500 = Ip*S – 500, bits beyond the solar system threshold

. . . is a metric that starts with our ability to measure explicit or implicit information content, directly [an on/off switch such as for the light in a room has two possible states and stores one bit, two switches store two bits . . . ] or by considering the relevant analysis of observed patterns of configurations. It then uses our ability to observe functional specificity [does any and every configuration suffice, or do we need well-matched, properly arranged parts with limited room for variation and alternative arrangement before function breaks?] to move beyond info carrying capacity to functionally specific info.
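As a rough illustration, the expression can be written as a short Python function. This is a sketch only. I assume here that Ip is an information measure in bits and that S is a dummy variable, set to 1 where functional specificity has been observed and to 0 otherwise. The function and parameter names are mine.

```python
def chi_500(ip_bits: float, s: int) -> float:
    """Chi_500 = Ip*S - 500, in bits beyond the solar system threshold.

    ip_bits: information content Ip, in bits.
    s: assumed dummy variable, 1 if functionally specific, else 0.
    """
    if s not in (0, 1):
        raise ValueError("s is assumed to be 0 or 1")
    return ip_bits * s - 500
```

On that reading, a positive result means the case lies beyond the threshold, while a negative result, or any case where S is 0, does not. For example, `chi_500(1000, 1)` is 500 bits beyond, and `chi_500(175, 1)` is 325 bits short.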

This is actually commonly observed in a world of info technology.

I have tried the experiment of opening up the background file for an empty basic Word document then noticing the many seemingly meaningless repetitive elements. So, I pick one effectively at random, and clip it out, saving the result. Then, I try opening the file from Word again. It reliably breaks. Seeming “junk digits” are plainly functionally required and specific.
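A similar test can be scripted. The sketch below is a stand-in and not the Word experiment itself: I use a compressed data stream from Python's `zlib` module in place of a Word file, and the payload, the cut position and the function name are assumptions chosen for illustration.

```python
import zlib


def survives_cut(payload: bytes, start: int, length: int) -> bool:
    """Compress `payload`, clip out `length` bytes at `start`, then check
    whether the clipped stream still decompresses to the original payload."""
    blob = zlib.compress(payload)
    clipped = blob[:start] + blob[start + length:]
    try:
        return zlib.decompress(clipped) == payload
    except zlib.error:
        # The stream no longer decodes at all: function is broken.
        return False


# Hypothetical sample payload: 200 short numbered lines.
SAMPLE = "".join(f"line {i}\n" for i in range(200)).encode("ascii")
```

With `length` set to 0 nothing is removed and the stream decodes as normal. Clip even a few bytes from the middle of the compressed stream and the check fails, though the bytes removed look like noise to the eye.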

But, as we saw from the infinite monkeys discussion, it is possible to hit on functionally specific patterns if they are short enough, by chance. Though, discovering when one has done so can be quite hard. The sum of the random document exercises is that spaces of about 10^50 are searchable within available resources. At 25 ASCII characters, at 7 bits per character, that is about 175 bits.

Taking in the fact that for each additional bit used in a system, the config space DOUBLES, the difference between 175 or so bits, and the solar system threshold adopted based on exhausting the capacity of the solar system’s 10^57 atoms and 10^17 s or so, is highly significant. At the {500-bit} threshold, we are in effect only able to take a sample in the ratio of one straw’s size to a cubical haystack as thick as our galaxy, 1,000 light years. As CR’s screen image case shows, and as imagining such a haystack superposed on our galactic neighbourhood would show, by sampling theory, we could only reasonably expect such a sample to be typical of the overwhelming bulk of the space, straw.
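The arithmetic of doubling can be checked with a few lines of Python. This is a sketch under the figures already given above (7 bits per ASCII character, 25 characters, a 500-bit threshold). The helper names are my own.

```python
import math

ASCII_BITS_PER_CHAR = 7  # as used in the text above


def bits_for_text(chars: int, bits_per_char: int = ASCII_BITS_PER_CHAR) -> int:
    """Information-carrying capacity of a text of `chars` characters, in bits."""
    return chars * bits_per_char


def config_space(bits: int) -> int:
    """Number of distinct configurations of `bits` two-state elements."""
    return 2 ** bits


def orders_of_magnitude(bits: int) -> float:
    """Size of the configuration space expressed as a power of ten."""
    return bits * math.log10(2)
```

Here `bits_for_text(25)` returns 175, and `orders_of_magnitude` puts a 175-bit space at a little under 10^53 and a 500-bit space at a little over 10^150. The gap between the two is 325 further doublings.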

In short, we have a very reasonable practical threshold for cases where examples of functionally specific information and/or organisation are sufficiently complex that we can be comfortable that such cannot plausibly be accounted for on blind — undirected — chance and mechanical necessity.

{This allows us to apply the following flowchart of logical steps in a case . . . ladder of conditionals . . . structure, the per aspect design inference, and on a QUANTITATIVE approach grounded in a reasonable threshold metric model:

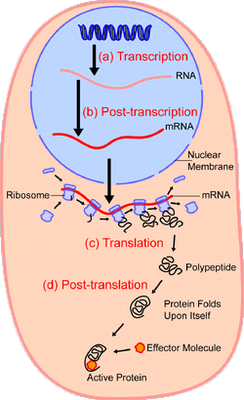

On the strength of that, we have every epistemic right to infer that cell based life shows signs pointing to design. {For instance, consider how ribosomes are used to create new proteins in the cell:

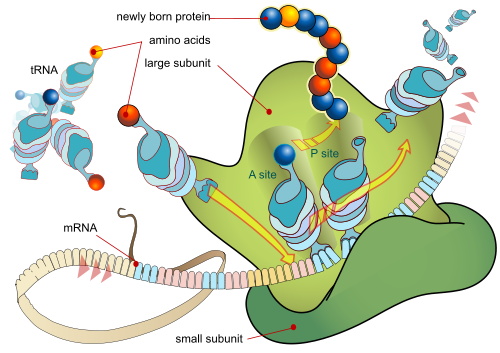

And, in so doing, let us zoom in on the way that the Ribosome uses a control tape, mRNA, to step by step assemble a new amino acid chain, to make a protein:

This can be seen as an animation, courtesy Vuk Nikolic:

[vimeo 31830891]

Let us note the comparable utility of punched paper tape used in computers and numerically controlled industrial machines in a past generation:

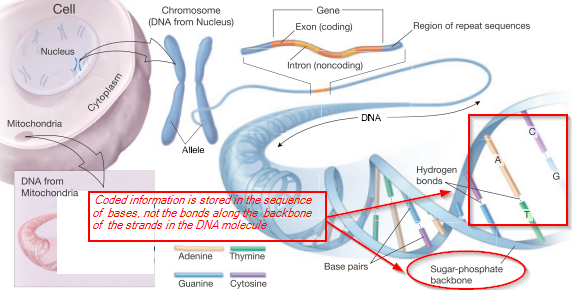

Given some onward objections, May 4th I add an info graphic on DNA . . .

And a similar one on the implied communication system’s general, irreducibly complex architecture:

In turn, that brings up the following clip from the ID Foundation series article on Irreducible Complexity, on Menuge’s criteria C1 – 5 for getting to such a system (which he presented in the context of the Flagellum):

But also, IC is a barrier to the usual suggested counter-argument, co-option or exaptation based on a conveniently available cluster of existing or duplicated parts. For instance, Angus Menuge has noted that:

For a working [bacterial] flagellum to be built by exaptation, the five following conditions would all have to be met:

C1: Availability. Among the parts available for recruitment to form the flagellum, there would need to be ones capable of performing the highly specialized tasks of paddle, rotor, and motor, even though all of these items serve some other function or no function.

C2: Synchronization. The availability of these parts would have to be synchronized so that at some point, either individually or in combination, they are all available at the same time.

C3: Localization. The selected parts must all be made available at the same ‘construction site,’ perhaps not simultaneously but certainly at the time they are needed.

C4: Coordination. The parts must be coordinated in just the right way: even if all of the parts of a flagellum are available at the right time, it is clear that the majority of ways of assembling them will be non-functional or irrelevant.

C5: Interface compatibility. The parts must be mutually compatible, that is, ‘well-matched’ and capable of properly ‘interacting’: even if a paddle, rotor, and motor are put together in the right order, they also need to interface correctly.

( Agents Under Fire: Materialism and the Rationality of Science, pgs. 104-105 (Rowman & Littlefield, 2004). HT: ENV.)

In short, the co-ordinated and functional organisation of a complex system is itself a factor that needs credible explanation.

However, as Luskin notes for the iconic flagellum, “Those who purport to explain flagellar evolution almost always only address C1 and ignore C2-C5.” [ENV.]

And yet, unless all five factors are properly addressed, the matter has plainly not been adequately explained. Worse, the classic attempted rebuttal, the Type Three Secretory System [T3SS] is not only based on a subset of the genes for the flagellum [as part of the self-assembly the flagellum must push components out of the cell], but functionally, it works to help certain bacteria prey on eukaryote organisms. Thus, if anything the T3SS is not only a component part that has to be integrated under C1 – 5, but it is credibly derivative of the flagellum and an adaptation that is subsequent to the origin of Eukaryotes. Also, it is just one of several components, and is arguably itself an IC system. (Cf Dembski here.)

Going beyond all of this, in the well known Dover 2005 trial, and citing ENV, ID lab researcher Scott Minnich has testified to a direct confirmation of the IC status of the flagellum:

Scott Minnich has properly tested for irreducible complexity through genetic knock-out experiments he performed in his own laboratory at the University of Idaho. He presented this evidence during the Dover trial, which showed that the bacterial flagellum is irreducibly complex with respect to its complement of thirty-five genes. As Minnich testified: “One mutation, one part knock out, it can’t swim. Put that single gene back in we restore motility. Same thing over here. We put, knock out one part, put a good copy of the gene back in, and they can swim. By definition the system is irreducibly complex. We’ve done that with all 35 components of the flagellum, and we get the same effect.” [Dover Trial, Day 20 PM Testimony, pp. 107-108. Unfortunately, Judge Jones simply ignored this fact reported by the researcher who did the work, in the open court room.]

That is, using “knockout” techniques, the 35 relevant flagellar proteins in a target bacterium were knocked out then restored one by one.

The pattern for each DNA-sequence: OUT — no function, BACK IN — function restored.

Thus, the flagellum is credibly empirically confirmed as irreducibly complex. [Cf onward discussion on Knockout Studies, here.]

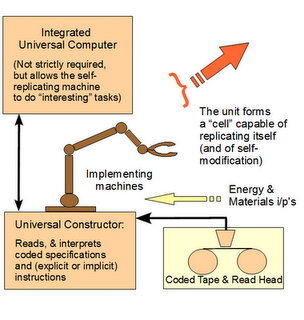

The kinematic von Neumann self-replicating machine [vNSR] concept is then readily applicable to the living cell:

Mignea’s model of minimal requisites for a self-replicating cell [speech here] is then highly relevant as well:

HT CR, here’s a typical representation of cell replication through Mitosis:

[youtube C6hn3sA0ip0]

And, we may then ponder Michael Denton’s reflection on the automated world of the cell, in his foundational book, Evolution, a Theory in Crisis (1986):

An extension of this, gives us reason to infer that body plans similarly show signs of design. And, related arguments give us reason to infer that a cosmos fine tuned in many ways that converge on enabling such C-chemistry, aqueous medium cell based life on habitable terrestrial planets or similarly hospitable environments, also shows signs of design.

Not on a priori impositions, but on induction from evidence we observe and reliable signs that we establish inductively. That is, scientifically.

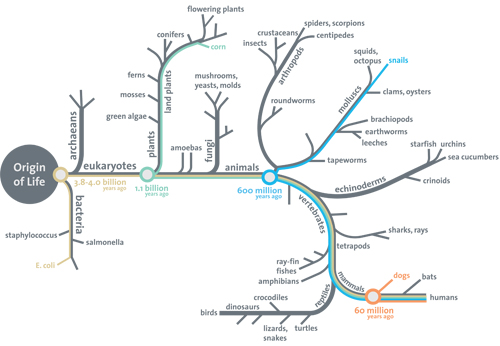

Added, May 11: Remember, this focus on the cell is in the end because it is the root of the Darwinist tree of life and as such origin of life is pivotal:

Multiply that by the evidence that there is a definite, finitely remote beginning to the observed cosmos, some 13.7 BYA being a common estimate, and 10 – 20 BYA a widely supported ballpark. That says it has underlying enabling causal factors, and so is a contingent, caused being.

All of this to this point is scientific, with background logic and epistemology.

Not theology, revealed or natural.

It owes nothing to the teachings of any religious movement or institution.

However, it does provide surprising corroboration to the statements of two apostles who went out on a limb philosophically by committing the Christian faith in foundational documents to reason/communication being foundational to observed reality, our world. In short the NT concepts of the Logos [John 1, cf Col 1, Heb 1, Ac 17] and of the evident, discernible reality of God as intelligent creator from signs in the observed cosmos [Rom 1 cf Heb 11:1 – 6, Ac 17 and Eph 4:17 – 24], are supported by key findings of science over the past 100 or so years.

There are debates over timelines and interpretations of Genesis, as well there would be.

They do not matter, in the end, given the grounds advanced on the different sides of the debate. We can live with Gen 1 – 11 being a sweeping, often poetic survey meant only to establish that the world is not a chaos, and it is not a product of struggling with primordial chaos or wars of the gods or the like. The differences between the Masoretic genealogies and those in the ancient translation, the Septuagint, make me think we need to take pause on attempts to precisely date creation on such evidence. Schaeffer probably had something right in his suggestion that one would be better advised to see this as describing the flow and outline of Biblical history rather than a precise, sequential chronology. And that comes up once we can see how consistently reliable the OT is as reflecting its times and places, patterns and events, even down to getting names right.

So, debating Genesis is to follow a red herring and go off to pummel a strawman smeared with stereotypes and set up for rhetorical conflagration. A fallacy of distraction, polarisation and personalisation. As is too often found as a habitual pattern of objectors to design theory.

What is substantial is the evidence on origins of our world and of the world of cell based life in the light of its challenge to us in our comfortable scientism.

And, in that regard, we have again — this is the umpteenth time, G; and you have long since worn out patience and turning the other cheek in the face of personalities, once it became evident that denigration was a main rhetorical device at work — had good reason to see that design theory is a legitimate scientific endeavour, regardless of rhetorical games being played to make it appear otherwise.>>

_______________

In short, it is possible to address the design inference and wider design theory without resort to ideologically loaded debates. And, as a first priority, we should. END

______________

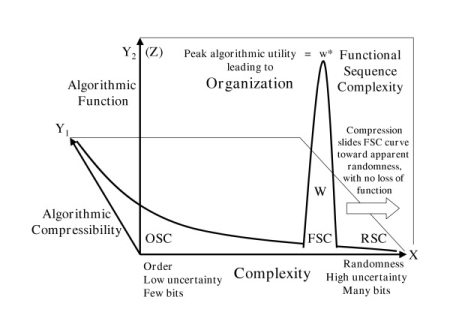

PS: In support of my follow up to EA at 153 below, at 157, it is worth adding (May 8th) the Trevors-Abel diagram from 2005 (SOURCE), contrasting the patterns of OSC, RSC and FSC:

Figure 4: Superimposition of Functional Sequence Complexity onto Figure 2. The Y1 axis plane plots the decreasing degree of algorithmic compressibility as complexity increases from order towards randomness. The Y2 (Z) axis plane shows where along the same complexity gradient (X-axis) that highly instructional sequences are generally found. The Functional Sequence Complexity (FSC) curve includes all algorithmic sequences that work at all (W). The peak of this curve (w*) represents “what works best.” The FSC curve is usually quite narrow and is located closer to the random end than to the ordered end of the complexity scale. Compression of an instructive sequence slides the FSC curve towards the right (away from order, towards maximum complexity, maximum Shannon uncertainty, and seeming randomness) with no loss of function.