[ID Found’ns Series, cf. also Bartlett here]

Irreducible complexity is probably the most vehemently contested foundation stone of Intelligent Design theory. So, let us first define it by slightly modifying Dr Michael Behe’s original statement in his 1996 book Darwin’s Black Box [DBB]:

What type of biological system could not be formed by “numerous successive, slight modifications?” Well, for starters, a system that is irreducibly complex. By irreducibly complex I mean a single system composed of several well-matched interacting parts that contribute to the basic function, wherein the removal of any one of the [core] parts causes the system to effectively cease functioning. [DBB, p. 39, emphases and parenthesis added. Cf. expository remarks in comment 15 below.]

Behe proposed this definition in response to the following challenge by Darwin in Origin of Species:

If it could be demonstrated that any complex organ existed, which could not possibly have been formed by numerous, successive, slight modifications, my theory would absolutely break down. But I can find out no such case . . . . We should be extremely cautious in concluding that an organ could not have been formed by transitional gradations of some kind. [Origin, 6th edn, 1872, Ch VI: “Difficulties of the Theory.”]

In fact, there is a bit of question-begging by deck-stacking in Darwin’s statement: we are dealing with empirical matters, and one has no right to impose what is in effect outright logical or physical impossibility (“could not possibly have been formed”) as the criterion of test.

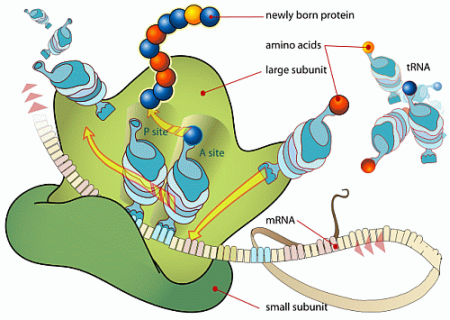

If one is making a positive scientific assertion that complex organs exist and were credibly formed by gradualistic, undirected change through chance mutations and differential reproductive success through natural selection and similar mechanisms, one has a duty to provide decisive positive evidence of that capacity. Behe’s onward claim is then quite relevant: for dozens of key cases, no credible macro-evolutionary pathway (especially no detailed biochemical and genetic pathway) has been empirically demonstrated and published in the relevant professional literature. That was true in 1996, and despite several attempts to dismiss key cases such as the bacterial flagellum [which is illustrated at the top of this blog page] or the relevant part of the blood clotting cascade [hint: picking the part of the cascade before the “fork,” which Behe did not address as the IC core, is a strawman fallacy], it arguably remains true today.

Now, we can immediately lay the issue of the fact of irreducible complexity as a real-world phenomenon to rest.

For, a situation where core, well-matched, and co-ordinated parts of a system are each necessary for and jointly sufficient to effect the relevant function is a commonplace fact of life, one that is familiar from all manner of engineered systems, such as the classic double-acting steam engine:

Fig. A: A double-acting steam engine (Courtesy Wikipedia)

Such a steam engine is made up of rather commonly available components: cylinders, tubes, rods, pipes, crankshafts, disks, fasteners, pins, wheels, drive-belts, valves etc. But, because a core set of well-matched parts has to be carefully organised according to a complex “wiring diagram,” the specific function of the double-acting steam engine is not explained by the mere existence of the parts.

Nor can simply choosing and re-arranging similar parts from, say, a bicycle or an old-fashioned car create a viable steam engine. Specific mutually matching parts [matched to thousandths of an inch usually], in a very specific pattern of organisation, made of specific materials, have to be in place, and they have to be integrated into the right context [e.g. a boiler or other source providing steam at the right temperature and pressure], for it to work.

If one core part (e.g. piston, cylinder, valve, crankshaft) breaks down or is removed, core function obviously ceases.

Irreducible complexity is not only a concept but a fact.

But, why is it said that irreducible complexity is a barrier to Darwinian-style [macro-]evolution and a credible sign of design in biological systems?
